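When Lua IPC Pipes Block the OpenResty or Nginx Event Loop

We recently used OpenResty XRay to help an enterprise customer in the network security industry optimize an OpenResty/Nginx application that suffered from poor request throughput.

OpenResty XRay ran all the analyses automatically in the customer's production environment and generated diagnostic reports. From those reports we quickly identified the bottleneck: Lua IPC [1] pipe operations such as io.popen were severely blocking the OpenResty/Nginx event loop.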

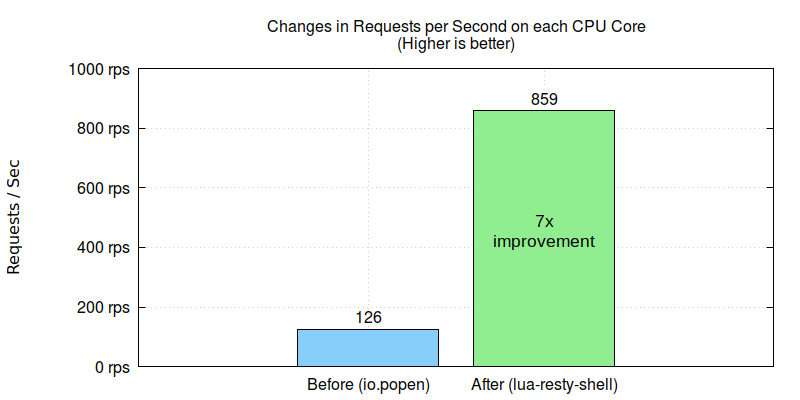

Replacing the standard Lua API function calls with OpenResty's own nonblocking lua-resty-shell library improved throughput by about 7 times.

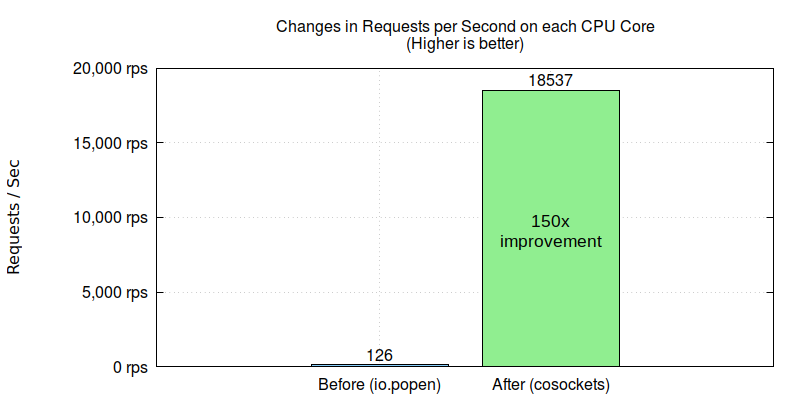

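Replacing system pipes with OpenResty's nonblocking cosocket API further improved throughput by 150 times.

OpenResty XRay is a dynamic tracing product that automatically analyzes running applications to pinpoint performance problems, behavioral issues, and security vulnerabilities, and provides actionable suggestions. Under the hood, OpenResty XRay is powered by our Y language and supports multiple runtimes across different environments, including Stap+, eBPF+, GDB, and ODB.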

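The Problem

The customer's online OpenResty application had a severe performance problem. The maximum number of requests per second on their servers was very low, only about 130, which made the service almost unusable. Moreover, even though the servers had high-performance CPUs, the Nginx worker processes could use less than half of the available CPU resources.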

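Analysis

OpenResty XRay analyzed the customer's online processes in depth, without requiring any cooperation from the customer's application:

- No extra plugins, modules, or libraries.

- No code injection or patching.

- No special compilation or startup options.

- Not even a restart of the application processes.

The analysis was done entirely in a postmortem manner, thanks to the dynamic tracing technology used by OpenResty XRay.

This kind of performance problem is easy for OpenResty XRay to analyze. It found that on-CPU and off-CPU operations were blocking the OpenResty/Nginx event loop together.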

CPU Operations

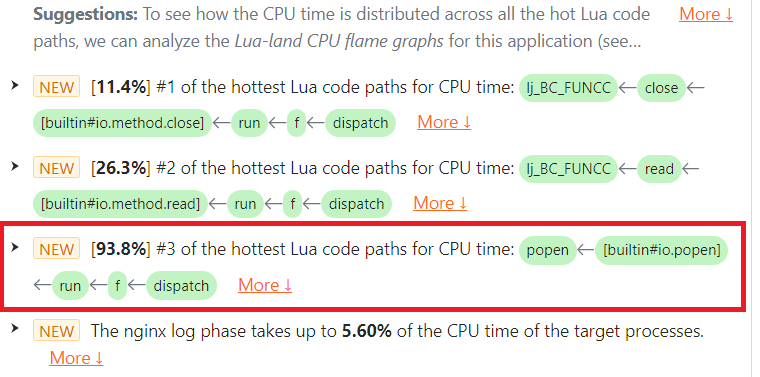

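In OpenResty XRay's automatic analysis reports, we can see that io.popen and its associated file:close() operations are very hot in terms of CPU usage.

io.popen

Under the CPU category, we can find the io.popen issue. It accounted for 93.8% of the total CPU time consumed by the target process.

Note the highlighted [builtin#io.popen] Lua function frame.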

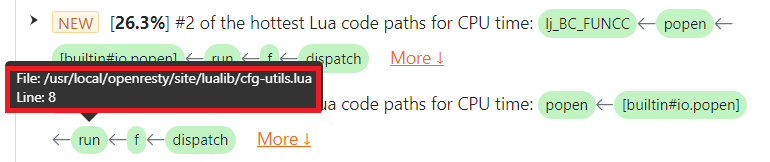

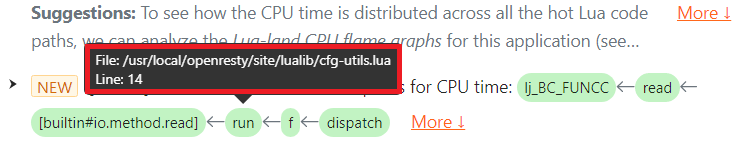

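The issue text shows the full Lua code path, including the LuaJIT primitives inside the virtual machine, so users can locate the relevant Lua source code right away. Hovering the mouse cursor over the green box for the Lua function run() shows details such as the Lua source file name and line number in a tooltip.

We can see that the io.popen call is located at line 8 of the Lua source file /usr/local/openresty/site/lualib/cfg-utils.lua.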

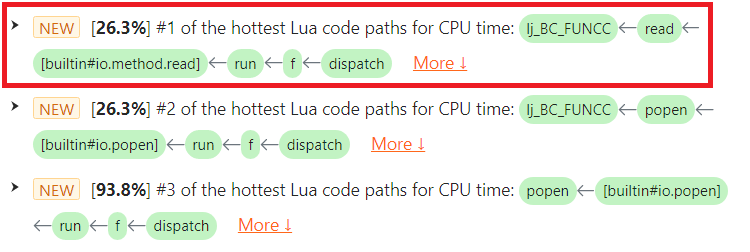

file:read()

Under the CPU category, we found the file:read() issue. It accounted for 26.3% of the total CPU time consumed by the target process.

Note the highlighted [builtin#io.method.read] function frame in the issue.

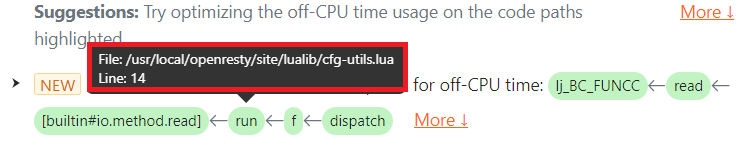

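The issue text shows the full Lua code path, including the LuaJIT primitives inside the virtual machine, so users can locate the corresponding Lua source code right away. Hovering the mouse cursor over the green box for the Lua function run() shows details such as the Lua source file name and line number in a tooltip.

We can see that the file:read() call is located at line 14 of the Lua source file /usr/local/openresty/site/lualib/cfg-utils.lua. The customer checked this line and confirmed that it operates on the file handle opened by the earlier io.popen call.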

off-CPU Operations

Here, “off-CPU” means that the operating system thread is blocked in a waiting state and cannot execute any subsequent code.

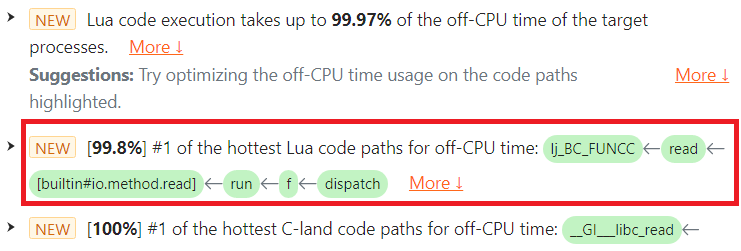

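file:read()

In the diagnostic report, the file:read() calls turned out to be hot in terms of off-CPU time. They accounted for 99.8% of the total off-CPU time consumed by the target process, whereas the only thing that should ever block is the Nginx event loop's own event-waiting operation (such as the epoll_wait system call).

Note the highlighted [builtin#io.method.read] Lua function frame in the issue.

The issue text shows the full Lua code path, including the LuaJIT primitives inside the virtual machine, so users can locate the Lua source code right away. Hovering the mouse cursor over the green box for the Lua function run() shows details such as the Lua source file name and line number in a tooltip.

We can see that the file:read() call is located at line 14 of the Lua source file /usr/local/openresty/site/lualib/cfg-utils.lua. The customer checked this line and confirmed that this, too, operates on the file handle opened by the earlier io.popen call.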
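The customer's cfg-utils.lua is not shown in the report, so the following is only a hypothetical sketch of the kind of code that produces the frames described above. The function name run() comes from the flame graphs; the body, the command string, and the error handling are assumptions made for illustration.

```lua
-- Hypothetical reconstruction of the blocking pattern; NOT the customer's code.
-- Every call below uses the standard Lua I/O library, which knows nothing
-- about the Nginx event loop, so the whole worker process stalls while it runs.
local function run(cmd)
    -- Spawns a shell for the command; the on-CPU cost of creating
    -- the child process is paid inside the worker process.
    local f, err = io.popen(cmd, "r")
    if not f then
        return nil, err
    end

    -- Blocking read on the pipe: the worker sleeps (off-CPU) until the
    -- child has written all of its output and closed its end of the pipe.
    local out = f:read("*a")

    -- Closing the pipe handle waits for the child process to exit.
    f:close()

    return out
end

-- Example invocation with an assumed command.
local out = run("echo hello")
print(out)
```

While run() is executing, the worker cannot accept or serve any other request, which would be consistent with both the low request rate and the underused CPU cores reported earlier.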

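Solutions

Based on the analysis above, the culprit is the Lua API for pipes, including io.popen and the read and close operations on pipe file handles. These severely blocked the Nginx event loop in terms of both CPU and off-CPU time. The solutions are therefore clear:

- Avoid the io.popen Lua API in OpenResty applications. Use OpenResty's lua-resty-shell library or the lower-level Lua API ngx.pipe instead.

- Avoid system commands and IPC pipes altogether. Use the more efficient cosocket API provided by OpenResty, or higher-level libraries built on top of it.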
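As a rough illustration of the first option, here is a minimal sketch of running a command through lua-resty-shell and through ngx.pipe. It assumes the code runs in a request context that is allowed to yield (for example content_by_lua_block); the command uname -n, the timeouts, and the size limit are placeholder values, not taken from the customer's application.

```lua
-- Sketch only: must run inside OpenResty, in a phase that can yield.
local shell = require "resty.shell"
local ngx_pipe = require "ngx.pipe"

-- Option A: lua-resty-shell, the high-level wrapper.
local function run_with_shell()
    local timeout = 1000    -- milliseconds (assumed value)
    local max_size = 4096   -- max bytes captured from stdout/stderr (assumed)
    local ok, stdout, stderr, reason, status =
        shell.run("uname -n", nil, timeout, max_size)
    if not ok then
        return nil, "command failed: " .. tostring(reason)
                    .. " status: " .. tostring(status)
                    .. " stderr: " .. tostring(stderr)
    end
    return stdout
end

-- Option B: ngx.pipe, the lower-level API.
local function run_with_pipe()
    local proc, err = ngx_pipe.spawn({ "uname", "-n" })
    if not proc then
        return nil, "spawn failed: " .. tostring(err)
    end
    -- write, stdout read, stderr read, and wait timeouts, in milliseconds
    proc:set_timeouts(1000, 1000, 1000, 1000)

    local data, read_err = proc:stdout_read_all()
    local ok, reason, status = proc:wait()
    if not data then
        return nil, "read failed: " .. tostring(read_err)
    end
    if not ok then
        return nil, "child failed: " .. tostring(reason)
                    .. " status: " .. tostring(status)
    end
    return data
end

local out, err = run_with_shell()
if not out then
    ngx.log(ngx.ERR, err)
    return ngx.exit(500)
end
ngx.say(out)
```

Both variants still pay the cost of creating a child process, but reading and waiting yield to the event loop instead of putting the whole worker to sleep, so other requests keep being served in the meantime.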

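Results

The customer followed our advice and migrated their gateway application from the standard Lua API io.popen to OpenResty's lua-resty-shell library. They immediately saw an improvement of about 7 times.

We further advised them to avoid the expensive system command invocations altogether. The customer then reorganized their business logic to fetch the metadata through OpenResty's nonblocking cosocket API. This change yielded a 150-fold improvement, allowing a single CPU core to handle tens of thousands of requests per second.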
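The article does not say where the customer's metadata comes from, so the sketch below assumes a purely hypothetical metadata service listening on 127.0.0.1:9000 that answers a one-line "GET <key>" request with a one-line reply. Only the cosocket calls themselves (ngx.socket.tcp, settimeouts, connect, send, receive, setkeepalive) are real OpenResty APIs; the address, protocol, and function name are illustrative.

```lua
-- Sketch only: must run inside OpenResty, in a phase where cosockets are
-- available (for example access_by_lua_block or content_by_lua_block).
local function fetch_metadata(key)
    local sock = ngx.socket.tcp()
    -- connect, send, and read timeouts in milliseconds (assumed values)
    sock:settimeouts(100, 100, 100)

    -- Hypothetical service address.
    local ok, err = sock:connect("127.0.0.1", 9000)
    if not ok then
        return nil, "connect failed: " .. tostring(err)
    end

    -- Hypothetical line-based request format.
    local bytes, send_err = sock:send("GET " .. key .. "\n")
    if not bytes then
        return nil, "send failed: " .. tostring(send_err)
    end

    -- Read one line of reply; the current request yields here
    -- while the worker continues serving other requests.
    local line, recv_err = sock:receive("*l")
    if not line then
        sock:close()
        return nil, "receive failed: " .. tostring(recv_err)
    end

    -- Return the connection to the pool so later requests can reuse it
    -- (10 s max idle time, pool of 32 connections; assumed values).
    sock:setkeepalive(10000, 32)
    return line
end

local value, err = fetch_metadata("example-key")
if not value then
    ngx.log(ngx.ERR, err)
    return ngx.exit(502)
end
ngx.say(value)
```

Compared with spawning a command per request, this approach creates no child process at all, and the connection pool amortizes the TCP setup cost across requests.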

The customer is satisfied with the current performance.

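About OpenResty XRay

OpenResty XRay is a dynamic tracing product that automatically analyzes running applications to troubleshoot performance problems, behavioral issues, and security vulnerabilities, and provides actionable suggestions. Under the hood, OpenResty XRay is powered by our Y language and supports multiple runtimes across different environments, including Stap+, eBPF+, GDB, and ODB.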

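About the Author

Yichun Zhang (章亦春) is the creator of the open source OpenResty® project and the CEO and founder of OpenResty Inc.

Yichun Zhang (GitHub ID: agentzh) was born in Jiangsu Province, China, and now lives in the Bay Area in the United States. He is one of the early advocates and leaders of open source technology and culture in China, and has worked at internationally known high-tech companies such as Cloudflare, Yahoo!, and Alibaba. A pioneer of "edge computing", "dynamic tracing", and "machine programming", he has more than 22 years of programming experience and more than 16 years of open source experience. As the leader of an open source project whose users span more than 40 million domain names worldwide, he founded the high-tech company OpenResty Inc. in the heart of Silicon Valley on the basis of the OpenResty® open source project. The company's flagship products are OpenResty XRay (a non-invasive fault analysis and troubleshooting tool based on dynamic tracing technology) and OpenResty Edge (optimized for microservices and distributed traffic, multifunctional …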

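Translations

The original English version and a Japanese translation of this article are available. We also welcome readers' translations into other languages. We will consider adopting them as long as they are complete translations with no omissions. Thank you very much!

[1] IPC stands for inter-process communication.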