Optimizing Memory Fragmentation Caused by Huge Nginx Configurations

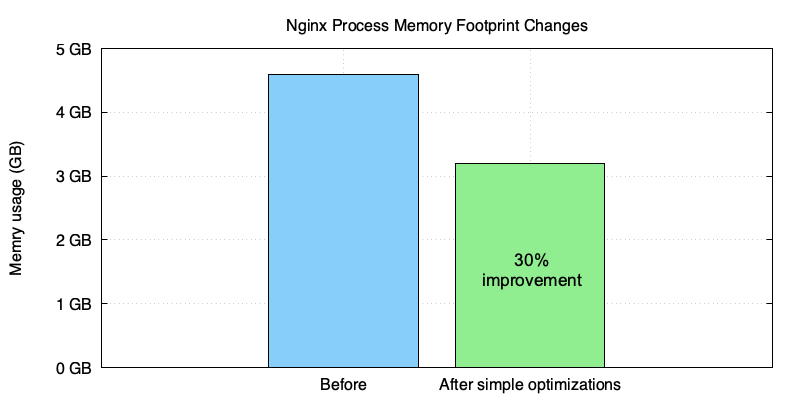

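Recently, we used OpenResty XRay to optimize the memory usage of the OpenResty/Nginx servers of an enterprise customer that provides CDN and traffic gateway services. This customer had defined a large number of virtual servers and URI locations in its OpenResty/Nginx configuration files. OpenResty XRay performed the analysis automatically in the customer's production environment, and the solution it suggested based on the results reduced the memory usage of the nginx processes by about 30%.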

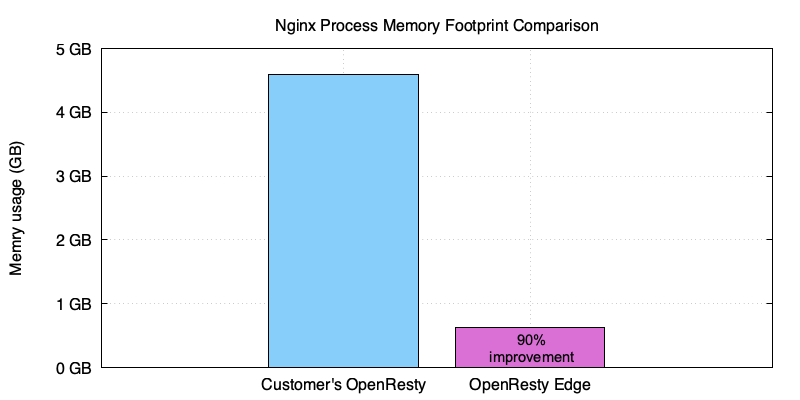

A comparison with the nginx worker processes of our OpenResty Edge product shows that further optimization could achieve an additional reduction of about 90%.

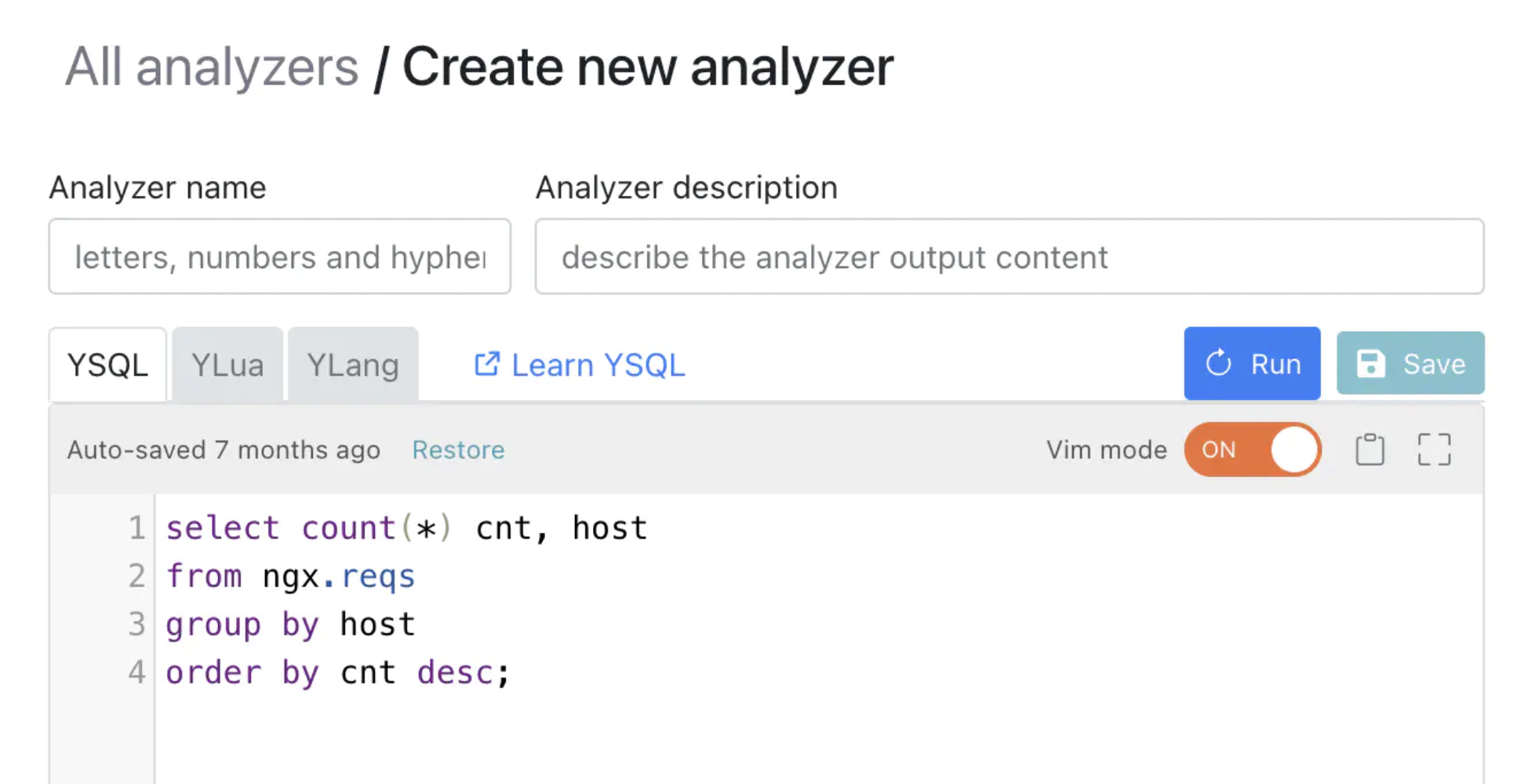

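OpenResty XRay is a dynamic tracing product that automatically analyzes running applications to identify performance problems, behavioral problems, and security vulnerabilities, and provides actionable suggestions. Under the hood, OpenResty XRay is powered by our Y language and supports multiple runtimes in a variety of environments, such as Stap+, eBPF+, GDB, and ODB.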

The Challenge

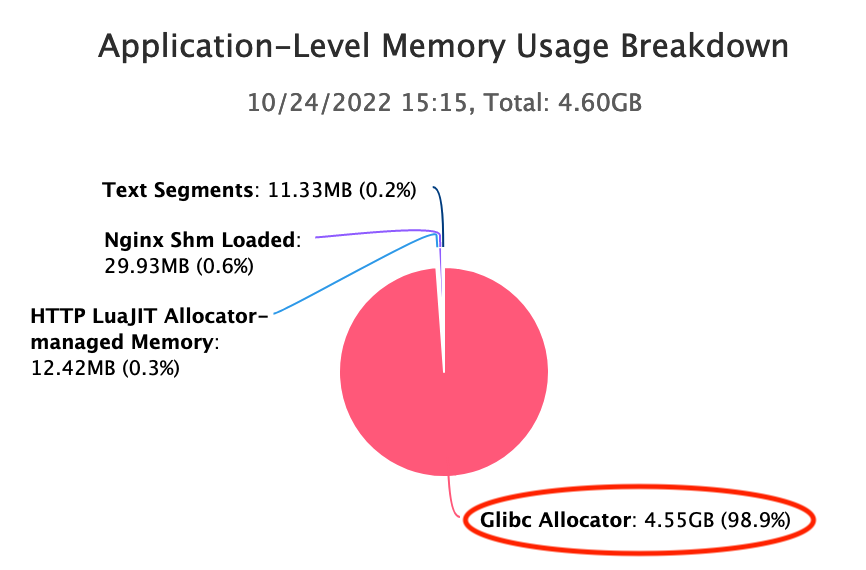

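This CDN provider used a huge "nginx.conf" configuration file to serve about 10,000 virtual hosts on its OpenResty servers. Each nginx master process occupied several gigabytes of memory after startup, and its memory usage nearly doubled after one or more HUP reloads. The following graph generated by OpenResty XRay shows that peak memory usage was about 4.60GB.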

OpenResty XRay's application-level memory usage breakdown table shows that the Glibc allocator accounts for most of the resident memory, at 4.55GB.

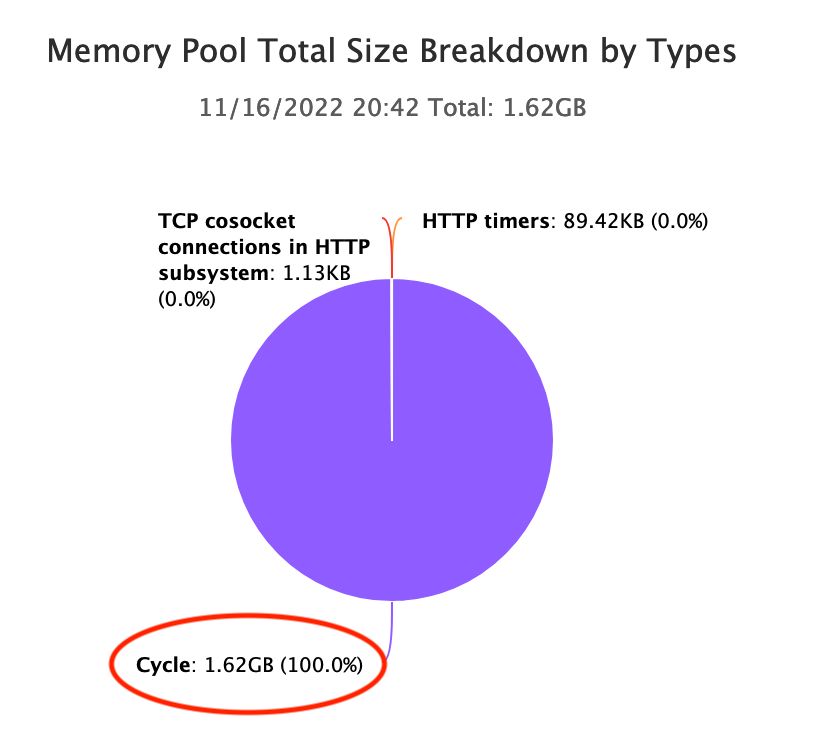

OpenResty XRay also found that the Nginx cycle pool occupied a large amount of memory.

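It is well known that when Nginx loads its configuration files, it allocates the data structures for the configuration data in this cycle pool. At 1.62GB the pool is huge, but it is still much smaller than the 4.60GB above, so the cycle pool alone does not explain the total memory usage.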

RAM is still an expensive and scarce hardware resource, especially on public clouds such as AWS and GCP. The customer wanted to downgrade to machines with less memory to cut costs.

Analysis

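OpenResty XRay analyzed the customer's online processes in depth, without requiring any changes on the customer's application side:

- No extra plugins, modules, or libraries are needed.

- No code injection or patching is needed.

- No special compilation or startup options are needed.

- Not even a restart of the application processes is needed.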

The analysis was done entirely "after the fact", thanks to the dynamic tracing technology that OpenResty XRay employs.

Excessive Free Chunks

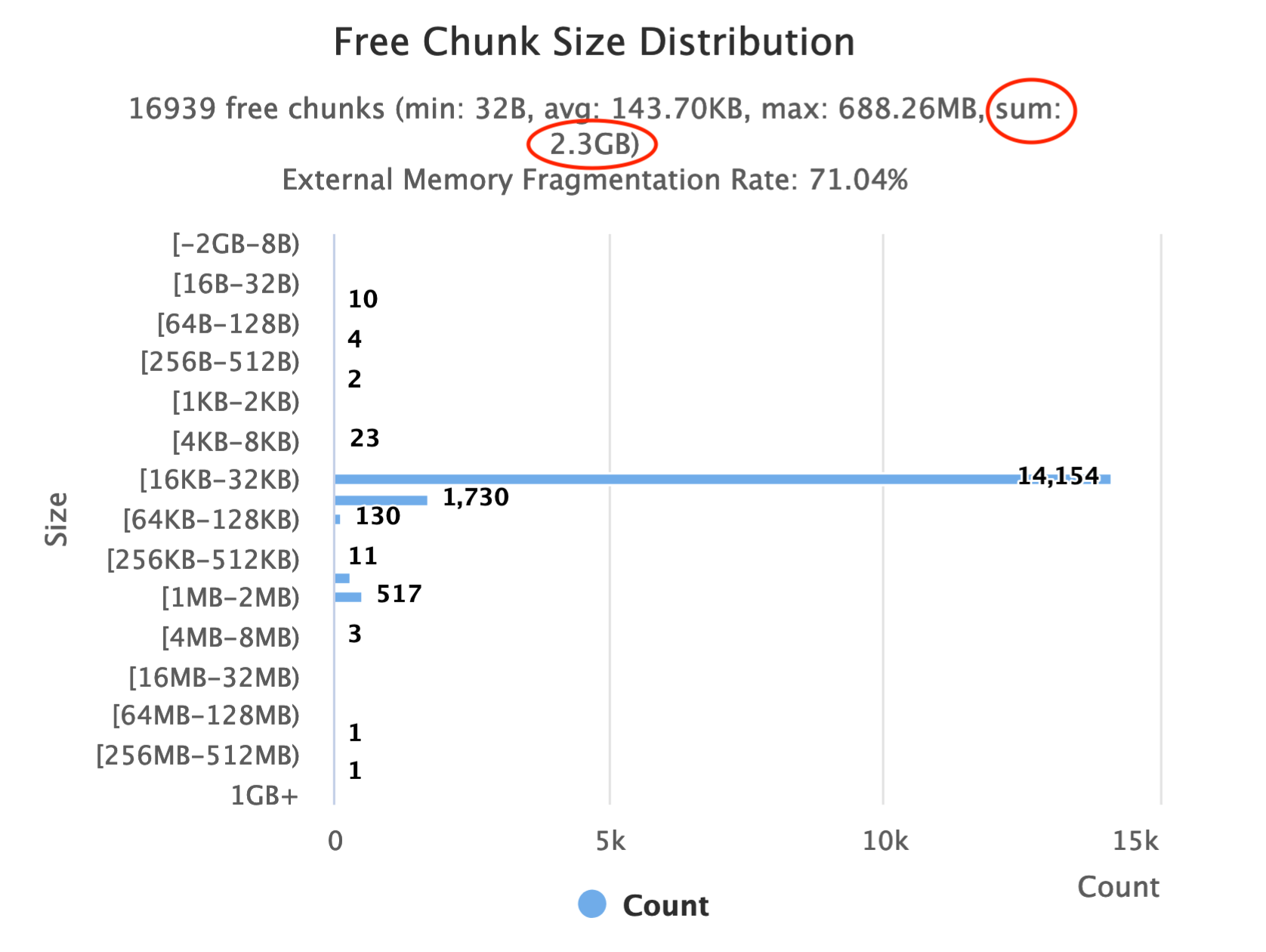

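OpenResty XRay used its Glibc memory allocator analyzer to sample the online nginx processes automatically. The analyzer produced the following histogram, which shows how the sizes of the free chunks managed by the allocator are distributed.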

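The Glibc allocator does not usually release free chunks back to the operating system (OS) right away. It may keep some free chunks around to speed up subsequent allocations. However, the memory it retains on purpose is usually small, nowhere near the gigabyte range. Here, the free chunks add up to 2.3GB. Memory fragmentation is therefore the far more likely cause.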
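A rough in-process approximation of this measurement can be obtained from glibc itself. The following is a minimal sketch, assuming glibc 2.33 or later (which provides `mallinfo2()`); the `heap_usage` struct and the helper names are hypothetical. It separates the free bytes in the main arena's top chunk, which can still be handed back by lowering the break, from free bytes trapped elsewhere. Note that `mallinfo2()` counts chunks cached in the per-thread tcache as in use, so the numbers are an approximation.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <malloc.h> /* mallinfo2() (glibc 2.33+); also declares malloc() and free() */
#include <stdio.h>

/* Hypothetical snapshot of how glibc's malloc is using the memory it holds. */
struct heap_usage {
    size_t in_use;     /* bytes in allocated arena chunks (excludes mmap'd chunks) */
    size_t free_total; /* bytes in free arena chunks, including the top chunk */
    size_t top_chunk;  /* size of the main arena's top chunk, the part brk can trim */
    size_t mmapped;    /* bytes in chunks served directly by mmap */
};

struct heap_usage heap_usage_now(void)
{
    struct mallinfo2 mi = mallinfo2();
    struct heap_usage u = {
        .in_use = mi.uordblks,
        .free_total = mi.fordblks,
        .top_chunk = mi.keepcost,
        .mmapped = mi.hblkhd,
    };
    assert(u.free_total >= u.top_chunk); /* the top chunk is counted as free */
    return u;
}

/* Free bytes outside the main arena's top chunk: memory that cannot be given
 * back just by lowering the break (a rough fragmentation indicator). */
size_t trapped_free_bytes(struct heap_usage u)
{
    return u.free_total - u.top_chunk;
}

void print_heap_usage(void)
{
    struct heap_usage u = heap_usage_now();
    printf("in use: %zu, free: %zu (top: %zu, trapped: %zu), mmapped: %zu\n",
           u.in_use, u.free_total, u.top_chunk, trapped_free_bytes(u), u.mmapped);
}
```

Calling `print_heap_usage()` before and after a reload-like workload shows whether free memory is piling up below the top of the heap.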

Confirming Memory Fragmentation in the Regular Heap

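Most small memory allocations happen in the "regular heap" via the brk Linux system call. This heap is like a linear "stack" that can only grow or shrink by moving its "top" pointer. Free chunks in the middle of the heap are not released to the OS; they cannot be returned until every chunk above them is also free.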
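This "stack-like" behavior can be modeled in a few lines. The toy model below is an illustration only, not glibc's algorithm: chunks are always carved from the top, holes are never reused, and the break moves down only past a contiguous run of free chunks at the top. Real malloc does reuse holes, but the rule for moving the break back down is essentially the same. All names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_MAX_CHUNKS 1024

/* Toy "regular heap": chunk i sits directly above chunk i - 1. */
struct toy_heap {
    size_t size[TOY_MAX_CHUNKS];
    bool in_use[TOY_MAX_CHUNKS];
    size_t nchunks; /* chunks between the heap start and the break */
    size_t brk_top; /* bytes between the heap start and the break */
};

/* Allocate at the top, like extending the heap with brk; returns a chunk id. */
size_t toy_alloc(struct toy_heap *h, size_t bytes)
{
    assert(h->nchunks < TOY_MAX_CHUNKS);
    h->size[h->nchunks] = bytes;
    h->in_use[h->nchunks] = true;
    h->brk_top += bytes;
    return h->nchunks++;
}

/* Free a chunk; only a run of free chunks at the very top goes back to the OS. */
void toy_free(struct toy_heap *h, size_t id)
{
    assert(id < h->nchunks && h->in_use[id]);
    h->in_use[id] = false;
    while (h->nchunks > 0 && !h->in_use[h->nchunks - 1]) {
        h->nchunks--;
        h->brk_top -= h->size[h->nchunks];
    }
}

/* Bytes that are free but still below the break (not returned to the OS). */
size_t toy_trapped(const struct toy_heap *h)
{
    size_t n = 0;
    for (size_t i = 0; i < h->nchunks; i++)
        if (!h->in_use[i])
            n += h->size[i];
    return n;
}
```

Freeing a lower chunk first leaves the break untouched; only when the chunk above it is freed too does the break drop all the way down.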

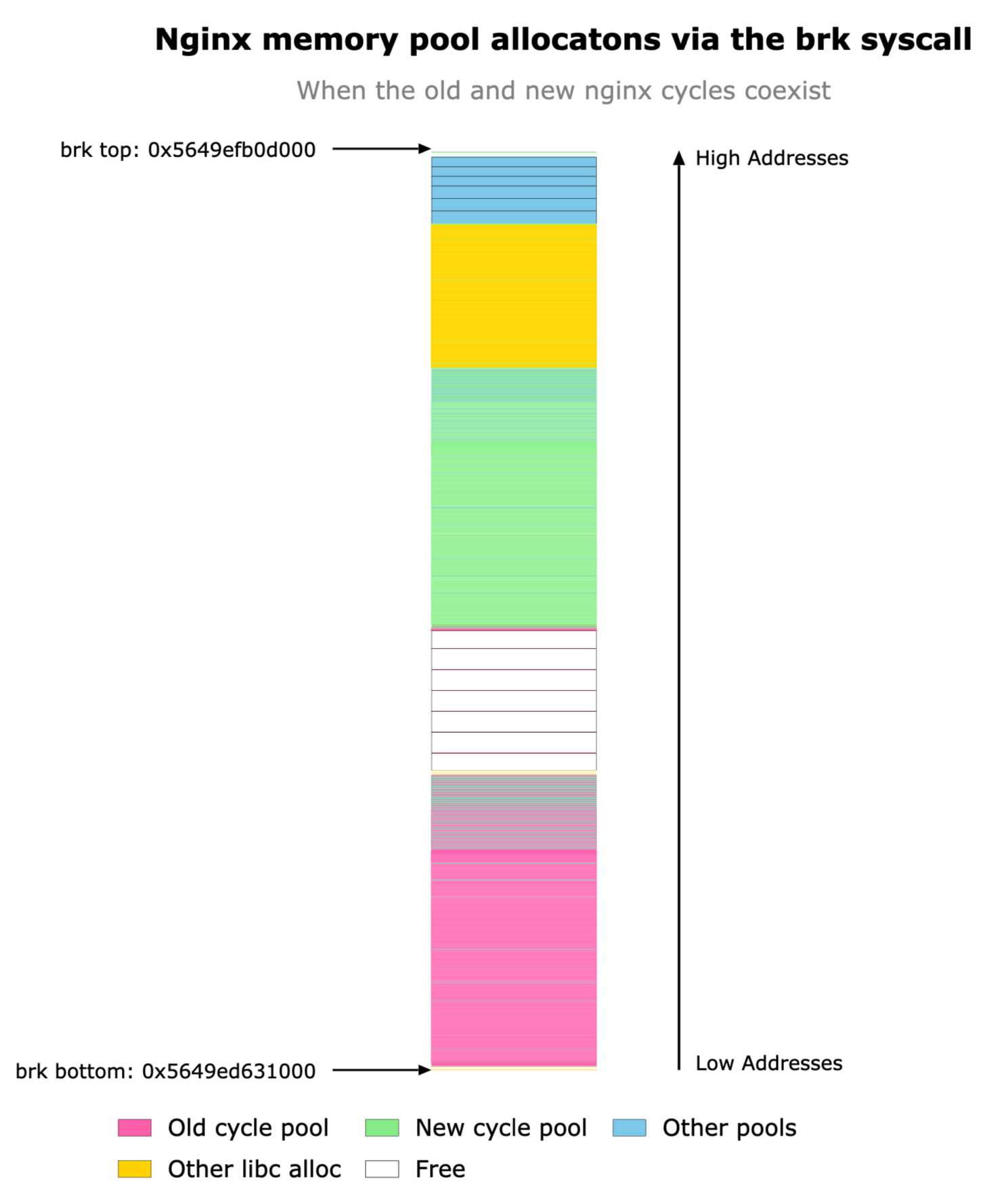

OpenResty XRay's memory analyzer helps confirm this kind of heap state. The heap diagram in this section was sampled after the nginx master process loaded a new configuration in response to a HUP signal.

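We can see that the heap grows upward, that is, toward higher memory addresses. Note the brk top pointer: it is the only thing that can move. The green boxes belong to Nginx's new "cycle pool", and the pink boxes belong to the old one. An interesting phenomenon is that Nginx keeps the old cycle pool, and with it the old configuration data, until the new cycle pool has been loaded successfully. This is a protective mechanism that lets Nginx fall back gracefully to the old configuration if loading the new one fails. Unfortunately, as we saw above, the boxes of the old configuration data (pink) lie below those of the new data (green), so they cannot be released to the operating system until the new configuration data is also freed.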

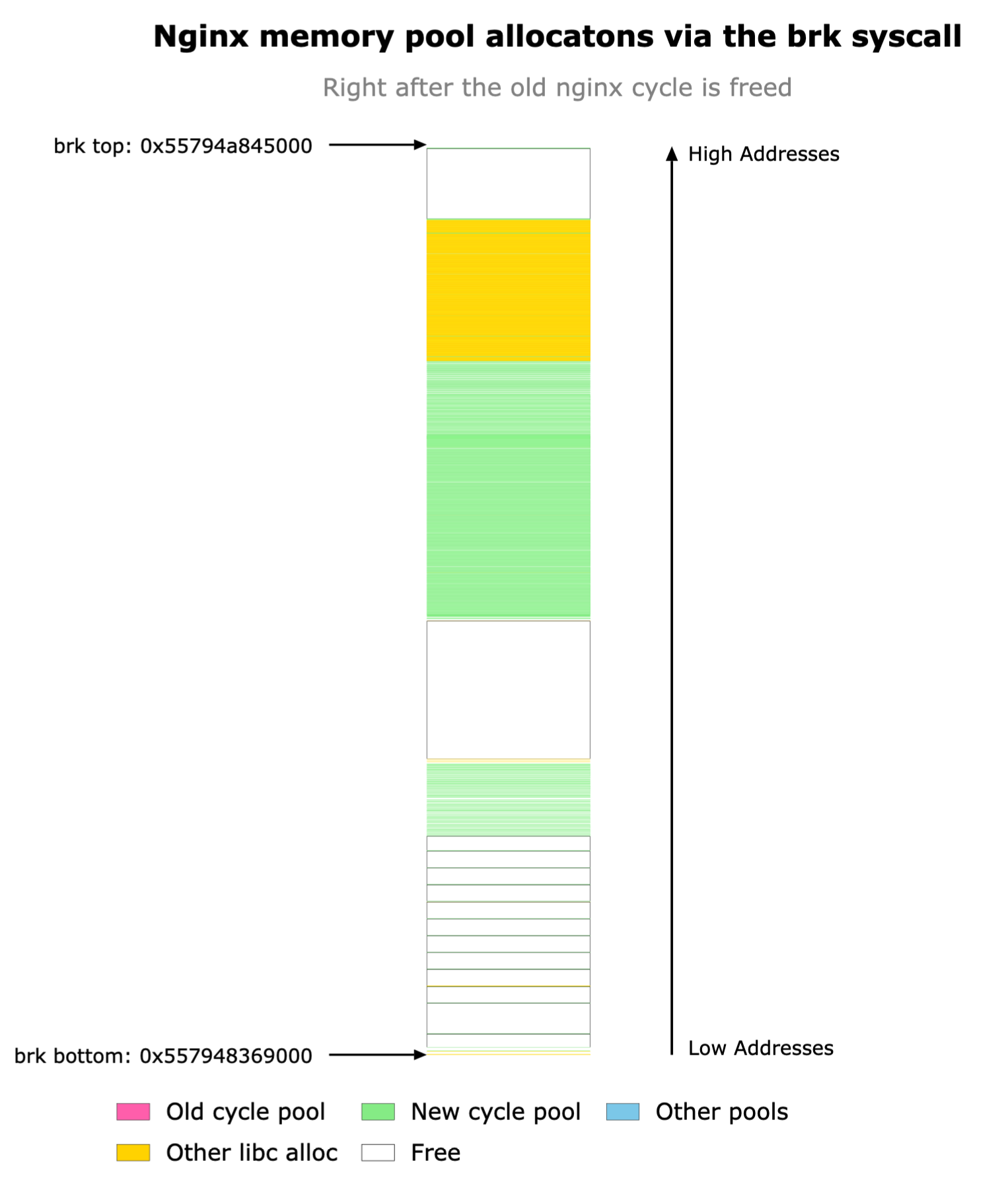

In fact, after Nginx frees the old configuration data and the old cycle pool, their original locations become free chunks sandwiched below the chunks of the new cycle pool.
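The reload sequence described above can be reproduced with plain `malloc()`. The following is a minimal sketch, assuming a single-threaded program on Linux with glibc, where small requests are served from the brk heap. `struct config`, `load_config()`, `reload()`, and the sizes are hypothetical stand-ins for Nginx's cycle pool, not Nginx APIs. `reload()` builds the new data while the old data is still alive, the same ordering Nginx uses for its graceful fallback, and only then frees the old data.

```c
#define _DEFAULT_SOURCE /* for sbrk() */
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <unistd.h>

#define CONF_BLOCKS 1024     /* hypothetical: blocks per "configuration" */
#define CONF_BLOCK_SIZE 4000 /* small enough to come from the brk heap */

/* Hypothetical stand-in for one cycle pool's worth of configuration data. */
struct config {
    void *blocks[CONF_BLOCKS];
};

struct config *load_config(void)
{
    struct config *c = malloc(sizeof *c);
    assert(c != NULL);
    for (int i = 0; i < CONF_BLOCKS; i++) {
        c->blocks[i] = malloc(CONF_BLOCK_SIZE);
        assert(c->blocks[i] != NULL);
    }
    return c;
}

void free_config(struct config *c)
{
    for (int i = 0; i < CONF_BLOCKS; i++)
        free(c->blocks[i]);
    free(c);
}

/* Mimic a HUP reload: build the new configuration while the old one is still
 * alive (so a failed load could fall back to it), then release the old one. */
struct config *reload(struct config *old)
{
    struct config *fresh = load_config();
    free_config(old);
    return fresh;
}

/* Current program break, i.e. the top of the brk heap. */
uintptr_t heap_top(void)
{
    return (uintptr_t)sbrk(0);
}
```

After one `reload()`, `heap_top()` typically reports a break roughly one configuration higher than after the initial load, and it does not come back down when the old configuration is freed, because that memory now sits below the live new data.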

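This is a textbook example of memory fragmentation. Because the regular heap can only release memory at its top, it is more prone to memory fragmentation than other allocation mechanisms such as the mmap system call. But would mmap save us here? Not necessarily.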

The World of mmap

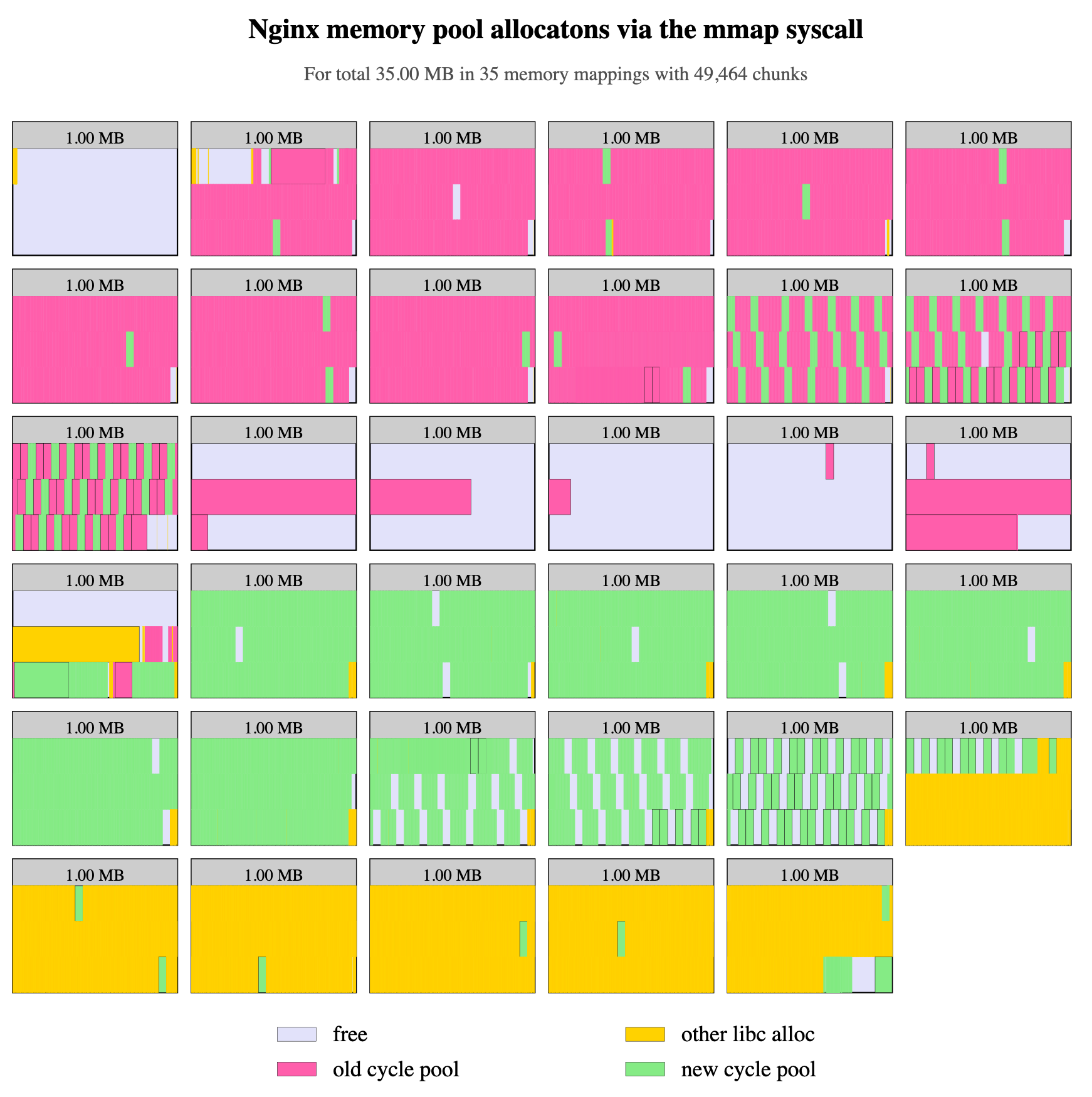

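The Glibc allocator can also allocate memory via the mmap system call. Each such call allocates a discrete memory block, or memory segment, that can be placed almost anywhere in the process's address space and can span any number of memory pages.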

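This sounds like a good way to mitigate the memory fragmentation problem above. However, even when we deliberately prevented the regular heap from growing, the graphs generated by OpenResty XRay's analyzer showed a similar degree of memory fragmentation.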

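When the application (Nginx here) requests a relatively small memory block, Glibc tends to allocate a relatively large memory segment (1MB here) to serve it. Memory fragmentation therefore also occurs inside these 1MB mmap segments: as long as any small block in a segment is still in use, the whole segment cannot be released to the operating system.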

In the figure above, we can see that blocks of the old cycle pool (pink) and blocks of the new cycle pool (green) are still intermixed within many mmap segments.
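Why this mixing matters can be shown with another toy model. It is a sketch under simplifying assumptions: every segment is 1MB, every block is 4KB, blocks are handed out in order, and a segment can be unmapped only when none of its blocks is still in use. The parameter `run` controls how finely the old and new cycle pools are interleaved; all names and the block size are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define SEG_BYTES (1024u * 1024u) /* 1MB segments, as observed above */
#define BLOCK_BYTES 4096u         /* hypothetical small-block size */
#define BLOCKS_PER_SEG (SEG_BYTES / BLOCK_BYTES)

/* Lay out `nseg` full segments whose blocks alternate between the old and the
 * new cycle pool every `run` blocks (block i belongs to the old pool when
 * (i / run) is even). Return how many segments could be unmapped after the
 * old pool is freed, i.e. how many contain no new-pool block at all. */
size_t releasable_segments(size_t nseg, size_t run)
{
    assert(run > 0);
    size_t released = 0;
    for (size_t seg = 0; seg < nseg; seg++) {
        int has_new = 0;
        for (size_t j = 0; j < BLOCKS_PER_SEG; j++) {
            size_t i = seg * BLOCKS_PER_SEG + j;
            if ((i / run) % 2 == 1) { /* odd runs belong to the new pool */
                has_new = 1;
                break;
            }
        }
        if (!has_new)
            released++;
    }
    return released;
}
```

With `run == 1`, no segment can be returned even though half of all blocks are free; with `run == BLOCKS_PER_SEG`, exactly the old pool's segments can be unmapped.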

Solutions

We proposed several solutions to the customer.

The Simple Way

The simplest way is to tackle the memory fragmentation problem directly. Based on the OpenResty XRay analysis above, one or more of the following changes should be made:

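- Avoid allocating cycle pool memory from the "regular heap" (that is, do not use the brk system call for these allocations).

- Ask Glibc to serve the cycle pool's memory allocation requests with mmap segments of a suitable size (not too large!).

- Cleanly separate the memory blocks of different cycle pools into different mmap segments.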
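As an illustration of the third change, here is a minimal sketch of a pool allocator whose memory comes from its own private mmap segments, so that two pools never share a segment and destroying a pool unmaps all of its pages. The `seg_pool` API and the 256KB segment size are hypothetical; this is neither Nginx's `ngx_pool_t` nor the exact procedure OpenResty XRay recommends to its customers.

```c
#define _DEFAULT_SOURCE /* for MAP_ANONYMOUS */
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

#define SEG_SIZE (256u * 1024u) /* hypothetical; tune to the pool's typical size */

struct seg {
    struct seg *next;
    size_t used; /* bytes handed out from this segment, including the header */
    size_t cap;  /* mapped size of this segment */
};

#define SEG_HDR ((sizeof(struct seg) + 15) & ~(size_t)15) /* keeps 16-byte alignment */

/* Hypothetical pool: every segment it maps belongs to this pool alone. */
struct seg_pool {
    struct seg *head; /* most recently mapped segment */
    size_t nsegs;
};

static struct seg *seg_map(size_t cap)
{
    void *p = mmap(NULL, cap, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return NULL;
    struct seg *s = p;
    s->next = NULL;
    s->used = SEG_HDR;
    s->cap = cap;
    return s;
}

/* Bump-allocate `n` bytes (16-byte aligned); an oversized request gets its own
 * segment, and the tail of the previous segment is then left unused. */
void *seg_pool_alloc(struct seg_pool *pool, size_t n)
{
    n = (n + 15) & ~(size_t)15;
    struct seg *s = pool->head;
    if (s == NULL || s->cap - s->used < n) {
        size_t cap = n + SEG_HDR > SEG_SIZE ? n + SEG_HDR : SEG_SIZE;
        s = seg_map(cap);
        if (s == NULL)
            return NULL;
        s->next = pool->head;
        pool->head = s;
        pool->nsegs++;
    }
    void *p = (char *)s + s->used;
    s->used += n;
    return p;
}

/* Unmap every segment of the pool; nothing is left behind to fragment. */
void seg_pool_destroy(struct seg_pool *pool)
{
    struct seg *s = pool->head;
    while (s != NULL) {
        struct seg *next = s->next; /* read before the segment is unmapped */
        int rc = munmap(s, s->cap);
        assert(rc == 0);
        (void)rc;
        s = next;
    }
    pool->head = NULL;
    pool->nsegs = 0;
}
```

The segment size trades system-call overhead against waste: larger segments mean fewer `mmap()` calls but more unused tail space per pool, which is why suitably sized, not too large, segments matter.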

We provide detailed optimization steps to paying OpenResty XRay customers, so no coding is required at all.

The Better Way

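Yes, there is an even better way. The open-source OpenResty software provides Lua APIs and Nginx configuration directives for dynamically loading (and unloading) new Lua-level configuration data without going through the Nginx configuration file mechanism. This makes it possible to handle the configuration data of many more virtual servers and locations with a small, constant amount of memory. It also dramatically shortens the startup and reload times of the Nginx server (from several seconds to practically zero). In fact, with dynamic configuration loading, HUP reloads themselves become very rare. The drawback of this approach is that it requires extra Lua coding on the user's side.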
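In OpenResty this would be written in Lua, for example on top of the lua-resty-lrucache library, but the memory argument does not depend on the language. The following minimal C sketch assumes a hypothetical `load_host_conf()` that fetches one virtual host's settings from some external store. It keeps at most `CACHE_SLOTS` entries and evicts the least recently used one, so memory stays bounded no matter how many virtual hosts exist in total. All names and the capacity are hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define CACHE_SLOTS 4 /* hypothetical capacity; a real cache would be far larger */

struct host_conf {
    char host[64];
    char upstream[80]; /* stand-in for the real per-host settings */
};

struct conf_cache {
    struct host_conf slot[CACHE_SLOTS];
    unsigned long last_used[CACHE_SLOTS]; /* 0 means the slot is empty */
    unsigned long clock;
    unsigned long loads; /* how many times the backing store was consulted */
};

/* Hypothetical loader; a real one would query a database or a shared store. */
static void load_host_conf(const char *host, struct host_conf *out)
{
    snprintf(out->host, sizeof out->host, "%s", host);
    snprintf(out->upstream, sizeof out->upstream, "backend-for-%s", host);
}

const struct host_conf *conf_lookup(struct conf_cache *c, const char *host)
{
    assert(strlen(host) < sizeof c->slot[0].host); /* longer names unsupported here */
    size_t victim = 0;
    c->clock++;
    for (size_t i = 0; i < CACHE_SLOTS; i++) {
        if (c->last_used[i] != 0 && strcmp(c->slot[i].host, host) == 0) {
            c->last_used[i] = c->clock; /* hit: mark as most recently used */
            return &c->slot[i];
        }
        if (c->last_used[i] < c->last_used[victim])
            victim = i; /* empty slots (0) win, then the least recently used */
    }
    load_host_conf(host, &c->slot[victim]); /* miss: evict the victim and reload */
    c->last_used[victim] = c->clock;
    c->loads++;
    return &c->slot[victim];
}
```

A real implementation would also need to invalidate entries when a host's configuration changes, which this sketch leaves out.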

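Our OpenResty Edge software product implements this dynamic loading and unloading of configuration data in the best way envisioned by OpenResty's author, with no coding required from users. So it is an easy option as well.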

Results

The customer decided to try the simple way first, which reduced total memory usage by 30% after several HUP reloads.

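Some fragmentation worth further attention remains, but the customer is already satisfied. Moreover, the better way mentioned above could save more than 90% of total memory usage (as our OpenResty Edge product does).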


About the Author

Yichun Zhang (章亦春) is the creator of the open-source OpenResty® project and the CEO and founder of OpenResty Inc.

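Yichun Zhang (GitHub ID: agentzh) was born in Jiangsu, China, and now lives in the Bay Area in the United States. He is one of the early advocates and leaders of open-source technology and culture in China, and has worked for internationally renowned high-tech companies such as Cloudflare, Yahoo!, and Alibaba. A pioneer of "edge computing", "dynamic tracing", and "machine programming", he has more than 22 years of programming experience and more than 16 years of open-source experience. As the leader of an open-source project used by more than 40 million domain names worldwide, he founded the high-tech company OpenResty Inc. in the heart of Silicon Valley, based on the OpenResty® open-source project. The company's flagship products are OpenResty XRay (a non-invasive fault analysis and troubleshooting tool built on dynamic tracing technology) and OpenResty Edge (a multi-purpose … optimized for microservices and distributed traffic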

Translations

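An English original and a Japanese translation of this article are available. We welcome readers' translations into other languages; if a translation is complete with no omissions, we will consider adopting it. Thank you very much!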