How OpenResty and Nginx Allocate and Manage Memory

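The OpenResty® open source web platform is known for its high performance and small memory footprint. Some users run complex OpenResty applications on embedded systems such as robots. Others have reported a significant drop in memory usage after migrating to OpenResty from other technology stacks such as Java, NodeJS, and PHP. Still, the memory usage of a particular OpenResty application sometimes needs optimizing. The application's Lua code, Nginx configuration, third-party Lua libraries, or third-party Nginx modules may contain bugs or performance problems that cause excessive memory usage or memory leaks.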

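Understanding how memory is allocated and managed inside OpenResty, Nginx, and LuaJIT is essential for debugging and optimizing excessive memory usage and memory leaks. Our commercial product, OpenResty XRay, can automatically analyze and diagnose almost any memory usage problem without modifying the target application, even when that application is running in production. In this series of articles (this is the first installment), we explain the memory allocation and management mechanisms of OpenResty, Nginx, and LuaJIT in detail, using data and graphs from real cases obtained with OpenResty XRay.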

We first look at how the memory of an Nginx process is distributed at the system level, and then walk through the various memory allocators at the application level.

System Level

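On modern operating systems, all memory that a process requests and uses is, at the top level, virtual memory. The operating system allocates and manages virtual memory for each process and maps the virtual memory pages that are actually used onto physical memory pages (in devices such as DDR4 memory modules). The key point is that a process may request a huge virtual memory space but actually use only a small fraction of it. For example, a process can request 2TB of virtual memory from the system even when the machine has only 8GB of physical memory (RAM). As long as the process does not read or write many pages within that huge space, nothing goes wrong. The part of the virtual memory space that is actually mapped onto physical memory devices is what really deserves our attention. So there is no need to panic when ps or top shows a large virtual memory size (usually labeled VIRT).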
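To make the difference between VIRT and memory that is actually used concrete, here is a minimal C sketch, assuming Linux with /proc mounted. It reserves a large anonymous region with mmap(), writes to only a handful of its pages, and compares how much VIRT and RSS grew according to /proc/self/statm, whose first two fields are the total program size and the resident set size, both in pages. The function names are hypothetical, and the exact numbers depend on the kernel and on settings such as transparent huge pages.

```c
#define _DEFAULT_SOURCE 1   /* for MAP_ANONYMOUS / MAP_NORESERVE */
#include <assert.h>
#include <stdio.h>
#include <sys/mman.h>
#include <unistd.h>

/* Read total program size (VIRT) and resident set size (RSS) of the
 * current process, both in pages, from /proc/self/statm (Linux-only). */
int read_statm(long *virt_pages, long *rss_pages)
{
    FILE *f = fopen("/proc/self/statm", "r");
    if (f == NULL)
        return -1;
    int n = fscanf(f, "%ld %ld", virt_pages, rss_pages);
    fclose(f);
    return n == 2 ? 0 : -1;
}

/* Reserve `reserve_bytes` of anonymous virtual memory but write to only
 * the first `touch_pages` pages; report how much VIRT and RSS grew (bytes). */
int reserve_and_touch(size_t reserve_bytes, size_t touch_pages,
                      long *virt_growth, long *rss_growth)
{
    long page = sysconf(_SC_PAGESIZE);
    long v0, r0, v1, r1;

    assert(page > 0 && touch_pages * (size_t)page <= reserve_bytes);
    if (read_statm(&v0, &r0) != 0)
        return -1;

    /* MAP_NORESERVE: do not set aside swap space for the whole region. */
    char *p = mmap(NULL, reserve_bytes, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS | MAP_NORESERVE, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    /* Only pages that are actually written get backed by physical RAM. */
    for (size_t i = 0; i < touch_pages; i++)
        p[i * (size_t)page] = 1;

    int rc = read_statm(&v1, &r1);
    if (rc == 0) {
        *virt_growth = (v1 - v0) * page;
        *rss_growth = (r1 - r0) * page;
    }
    munmap(p, reserve_bytes);
    return rc;
}
```

For example, reserving 256 MiB and touching 16 pages should grow VIRT by at least 256 MiB while RSS grows only slightly. With strict overcommit (vm.overcommit_memory=2), the mmap() call may fail instead.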

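The small part of virtual memory that is actually used (that is, the part where data has been read or written) is usually called RSS, or resident memory. When the system's physical memory is about to run out, some of the data in resident memory pages is swapped out to the hard disk¹. Memory that has been swapped out is no longer part of resident memory; it becomes "swapped-out memory" ("swap" for short).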
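These per-process figures can also be read directly on Linux. The sketch below, assuming the Linux /proc/<pid>/status format, looks up fields such as VmSize (roughly VIRT), VmRSS (resident memory), and VmSwap (swapped-out memory), all reported in kB. The helper name read_vm_field_kb is hypothetical; tools such as ps and top on Linux draw on the same /proc data.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Look up a field such as "VmSize", "VmRSS" or "VmSwap" in
 * /proc/<pid>/status (Linux-only) and return its value in kB, or -1 if
 * the file or field is missing. pid <= 0 means the calling process. */
long read_vm_field_kb(int pid, const char *field)
{
    char path[64];
    char line[256];
    long value = -1;

    assert(field != NULL && field[0] != '\0');
    size_t flen = strlen(field);
    if (pid > 0)
        snprintf(path, sizeof path, "/proc/%d/status", pid);
    else
        snprintf(path, sizeof path, "/proc/self/status");

    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    while (fgets(line, sizeof line, f) != NULL) {
        /* Matching lines look like "VmRSS:\t    5123 kB". */
        if (strncmp(line, field, flen) == 0 && line[flen] == ':') {
            if (sscanf(line + flen + 1, "%ld", &value) != 1)
                value = -1;
            break;
        }
    }
    fclose(f);
    return value;
}
```

Passing the PID of an nginx worker process, e.g. read_vm_field_kb(pid, "VmSwap"), shows whether any of that worker's pages have been swapped out.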

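Many tools report the virtual memory size, resident memory size, and swapped-out memory size of any process, including the nginx worker processes of an OpenResty application. OpenResty XRay can automatically analyze any running nginx worker process and present a breakdown of its memory usage as a clear pie chart: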

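In this chart, the whole pie represents the total virtual memory space that the Nginx process has requested from the operating system. The Resident Memory slice represents resident memory usage, that is, the amount of memory actually in use. Finally, the Swap slice represents swapped-out memory (it does not appear in this chart because this process has no swapped-out memory pages).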

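As mentioned above, the Resident Memory slice is usually the one that deserves the most attention. However, be very careful if a Swap component shows up in the pie chart: it means the system is short of physical memory and may become overloaded by frequent page swapping. The unused virtual memory space in the chart also deserves attention. It may be caused by the application requesting overly large Nginx shared memory zones. This unused shared memory may fill up with data later (that is, turn into part of the Resident Memory component), which could exhaust physical memory.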

We will cover more interesting questions about resident memory in dedicated follow-up articles. Next, let's look at the application-level breakdown of memory usage.

Application Level

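Analyzing memory usage at the application level is often more useful. Of the memory currently in use, we want to know how much was allocated by the LuaJIT memory allocator, how much by the Nginx core and modules, how much is occupied by Nginx shared memory zones, and so on.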

For example, the following new type of pie chart was produced when OpenResty XRay automatically analyzed an Nginx worker process of an OpenResty application:

Glibc Allocator

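The Glibc Allocator slice of the pie chart represents the total memory allocated through the Glibc library (Glibc is GNU's implementation of the standard C runtime library). C code normally goes through this allocator when it calls functions such as malloc(), realloc(), and calloc(). It is also commonly called the system allocator. The Nginx core and its modules also allocate memory through the system allocator (the exception being Nginx shared memory zones, discussed below). Some Lua libraries with C components or FFI calls may call the system allocator directly as well, although they more commonly use LuaJIT's built-in allocator. Of course, some users build OpenResty or Nginx against a different standard C runtime library implementation, such as musl libc. We will cover the system allocator and Nginx's own allocators in detail in dedicated follow-up articles.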
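As a rough illustration of how memory obtained through malloc() and friends shows up in glibc's own bookkeeping, the sketch below uses mallinfo2(), a glibc extension available since glibc 2.33 (it will not build against musl libc). In the returned struct, uordblks counts bytes in use in the heap arenas and hblkhd counts bytes in large blocks that glibc served directly with mmap(). The helper names are hypothetical.

```c
#include <assert.h>
#include <malloc.h>   /* mallinfo2(): glibc-specific, glibc >= 2.33 */
#include <stdlib.h>

/* Bytes currently handed out by glibc's malloc: uordblks covers chunks in
 * the heap arenas, hblkhd covers large blocks glibc served via mmap(). */
size_t glibc_bytes_in_use(void)
{
    struct mallinfo2 mi = mallinfo2();
    return mi.uordblks + mi.hblkhd;
}

/* Allocate `count` blocks of `size` bytes through calloc()/malloc(), and
 * return how much glibc's in-use total grew while they were all live. */
size_t measure_small_allocations(size_t count, size_t size)
{
    assert(count > 0 && size > 0);
    size_t before = glibc_bytes_in_use();

    void **blocks = calloc(count, sizeof *blocks);
    if (blocks == NULL)
        return 0;
    for (size_t i = 0; i < count; i++)
        blocks[i] = malloc(size);

    size_t after = glibc_bytes_in_use();
    for (size_t i = 0; i < count; i++)
        free(blocks[i]);        /* free(NULL) is a no-op */
    free(blocks);
    return after > before ? after - before : 0;
}
```

Because every chunk carries allocator metadata, the measured growth is typically somewhat larger than count × size.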

Nginx Shared Memory

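The Nginx Shm Loaded slice of the pie chart represents the portion of the shared memory ("shm") zones allocated by the Nginx core and its modules that is actually in use. These shared memory zones are allocated directly through the UNIX system call mmap(), completely bypassing the standard C runtime library's allocator.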

Nginx shared memory is shared among all Nginx worker processes. These shared memory zones are usually created through standard Nginx configuration directives, for example ssl_session_cache, proxy_cache_path, limit_req_zone, limit_conn_zone, and the zone directive in upstream blocks.
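The underlying mechanism can be sketched in plain C: a region created with mmap(MAP_SHARED | MAP_ANONYMOUS) before fork() is visible to every child process, and it never passes through malloc(). This is a minimal sketch assuming Linux/POSIX; it mirrors the idea of a master process creating a zone that its workers then share, not Nginx's actual code, and shared_zone_demo is a hypothetical name.

```c
#define _DEFAULT_SOURCE 1   /* for MAP_ANONYMOUS */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Create an anonymous shared mapping (bypassing malloc), fork `nworkers`
 * children that each write to their own slot, then return the sum the
 * parent sees afterwards, or -1 on error. */
long shared_zone_demo(int nworkers)
{
    assert(nworkers > 0);
    size_t size = (size_t)nworkers * sizeof(long);

    /* Anonymous mappings start zero-filled. */
    long *zone = mmap(NULL, size, PROT_READ | PROT_WRITE,
                      MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (zone == MAP_FAILED)
        return -1;

    int started = 0;
    for (; started < nworkers; started++) {
        pid_t pid = fork();
        if (pid < 0)
            break;
        if (pid == 0) {                    /* child ("worker") */
            zone[started] = started + 1;   /* lands in the shared pages */
            _exit(0);
        }
    }
    for (int i = 0; i < started; i++)
        wait(NULL);                        /* reap every child we started */

    long sum = -1;
    if (started == nworkers) {
        sum = 0;
        for (int i = 0; i < nworkers; i++)
            sum += zone[i];
    }
    munmap(zone, size);
    return sum;
}
```

Each child writes only its own slot, so no locking is needed here; a real shared zone that several workers update concurrently needs synchronization.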

Third-party Nginx modules may also create their own shared memory zones, for example ngx_http_lua_module, a core component of OpenResty.

OpenResty applications usually create their own shared memory zones with the lua_shared_dict directive in the Nginx configuration file. We will cover Nginx shared memory in more detail in a dedicated article soon.

For more details, see our separate blog post "How OpenResty and Nginx Shared Memory Zones Consume Physical Memory".

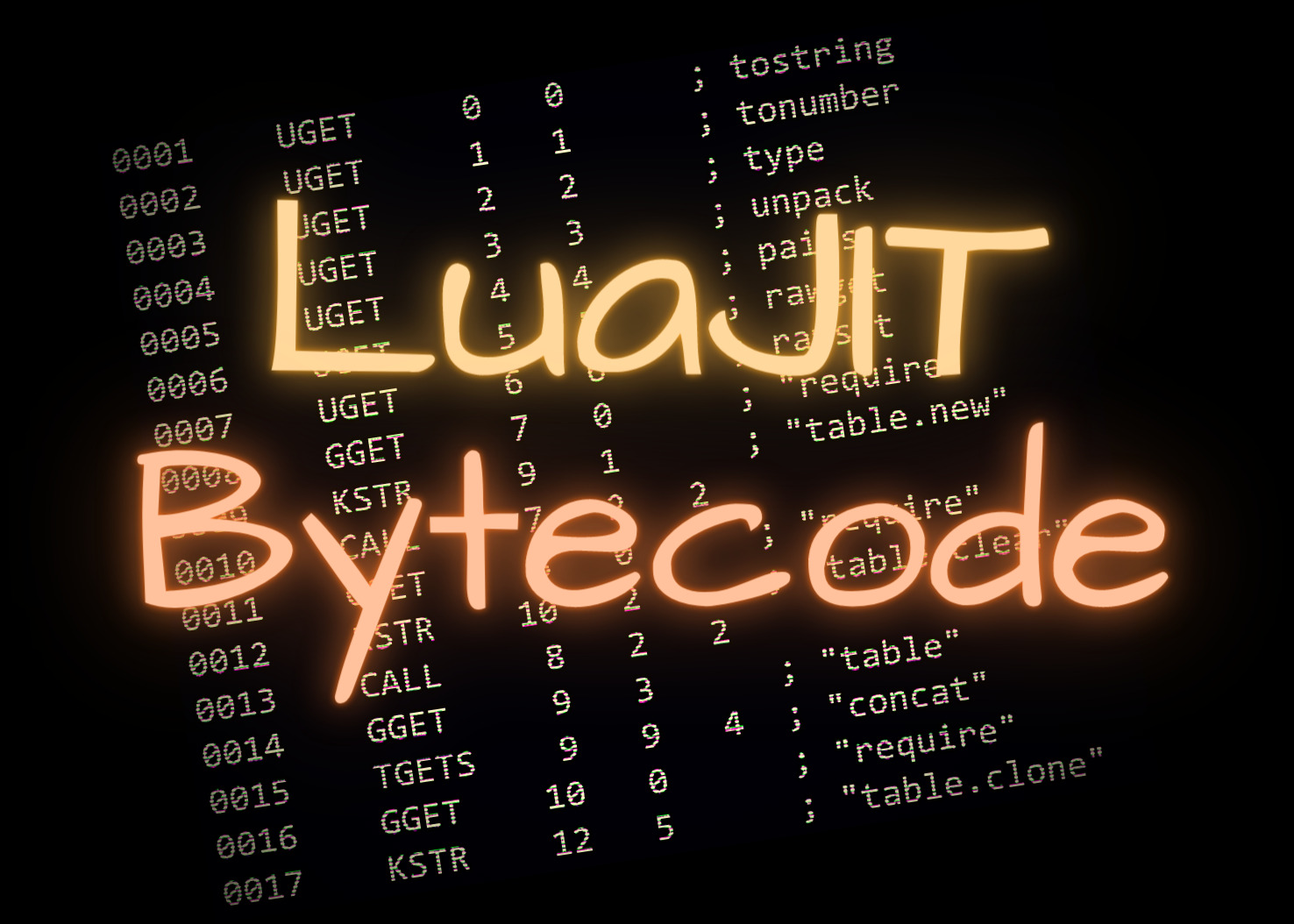

LuaJIT Allocator

The two components HTTP LuaJIT Allocator and Stream LuaJIT Allocator in the pie chart represent the amount of memory allocated and managed by LuaJIT's built-in allocator.

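One corresponds to the LuaJIT virtual machine (VM) instance in Nginx's HTTP subsystem, the other to the LuaJIT VM instance in Nginx's Stream subsystem. LuaJIT has a compile option that forces it to use the system allocator², but this option is normally used only with special debugging and testing tools (such as Valgrind and AddressSanitizer). Lua strings, tables, functions, cdata, userdata, upvalues, and so on are all allocated through this allocator. In contrast, values of Lua primitive types such as integers³, floating-point numbers, light userdata, and booleans require no dynamic memory allocation. In addition, C-level memory blocks created by calling ffi.new() in Lua code are also allocated through LuaJIT's own allocator. All memory blocks allocated by this allocator are managed centrally by LuaJIT's garbage collector (GC), so you do not need to free them manually once they are no longer needed⁴. These memory objects are also called "GC objects". We will cover this topic in detail in a separate article.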
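LuaJIT's built-in allocator is internal, but the Lua 5.1 C API that LuaJIT implements describes allocators with the lua_Alloc signature (ud, ptr, osize, nsize). To illustrate what "using the system allocator" means at that interface, here is a hedged sketch of a realloc()/free()-backed function with that signature that also counts live bytes. The typedef is redeclared locally so the sketch builds without Lua headers, and alloc_stats and counting_sys_alloc are hypothetical names; this is not LuaJIT's allocator or its LUAJIT_USE_SYSMALLOC code path, and it has nothing to do with garbage collection.

```c
#include <assert.h>
#include <stdlib.h>

/* Same shape as lua_Alloc in the Lua 5.1 C API (lua.h), which LuaJIT
 * implements; declared locally so the sketch builds without Lua headers. */
typedef void *(*alloc_fn)(void *ud, void *ptr, size_t osize, size_t nsize);

/* Hypothetical bookkeeping handed to the allocator through `ud`. */
struct alloc_stats {
    size_t live_bytes;   /* bytes currently allocated */
    size_t peak_bytes;   /* high-water mark */
};

/* realloc()/free()-backed allocator following the lua_Alloc contract:
 * nsize == 0 frees `ptr`; otherwise resize it (ptr == NULL allocates,
 * and osize is then 0). On failure the old block is left untouched. */
void *counting_sys_alloc(void *ud, void *ptr, size_t osize, size_t nsize)
{
    struct alloc_stats *st = ud;
    assert(st != NULL);

    if (nsize == 0) {
        free(ptr);
        st->live_bytes -= osize;
        return NULL;
    }
    void *np = realloc(ptr, nsize);
    if (np != NULL) {
        st->live_bytes = st->live_bytes - osize + nsize;
        if (st->live_bytes > st->peak_bytes)
            st->peak_bytes = st->live_bytes;
    }
    return np;
}
```

Stock Lua 5.1's luaL_newstate() installs an allocator of the same realloc/free shape (without the counting).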

Program Code Segments

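The Text Segments component of the pie chart corresponds to the total size of the .text segments of all executables and dynamically linked libraries once they are mapped into the virtual memory space.

These .text segments usually contain executable binary machine code.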
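On Linux, a rough version of this figure can be computed from /proc/<pid>/maps by summing the mappings whose permission field contains x. This is an approximation under stated assumptions: it also counts special executable mappings such as [vdso], and it is not necessarily how OpenResty XRay computes the number. The function name is hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* Sum the sizes of all executable mappings (permission field contains 'x')
 * listed in a /proc/<pid>/maps file (Linux-only). This roughly corresponds
 * to the machine code of the executable and its shared libraries as mapped
 * into the address space. Returns -1 if the file cannot be opened. */
long long executable_mapping_bytes(const char *maps_path)
{
    assert(maps_path != NULL);
    FILE *f = fopen(maps_path, "r");
    if (f == NULL)
        return -1;

    char line[4096];
    long long total = 0;
    while (fgets(line, sizeof line, f) != NULL) {
        unsigned long long start, end;
        char perms[5] = {0};
        /* Each line begins "start-end perms ...", where perms is
         * something like "r-xp" (read, no write, execute, private). */
        if (sscanf(line, "%llx-%llx %4s", &start, &end, perms) == 3
            && perms[2] == 'x')
            total += (long long)(end - start);
    }
    fclose(f);
    return total;
}
```

Pointing it at /proc/<worker-pid>/maps gives an estimate for a running nginx worker instead of the calling process.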

System Runtime Stacks

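Finally, the System Stacks component of the chart is the total size of all system runtime stacks (or "C stacks") in the target process. Each operating system (OS) thread has its own system stack, so multiple system stacks exist only when multiple threads are in use (note that the "light threads" created with ngx.thread.spawn in OpenResty are completely different from such system-level threads). An Nginx worker process usually has only one system thread unless an OS thread pool is configured (through the aio threads configuration directive).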
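The one-stack-per-OS-thread rule can be observed with POSIX threads. This sketch, assuming pthreads (glibc versions before 2.34 need -pthread at link time), starts several threads with an explicit stack size and checks that a local variable in each lives at a distinct address, i.e. on that thread's own stack. The names stack_probe, record_stack_address, and threads_have_separate_stacks are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

#define MAX_THREADS 16

/* Hypothetical per-thread record: where a local variable ended up. */
struct stack_probe {
    uintptr_t local_addr;
};

/* Thread body: a local variable lives on this thread's own system stack. */
void *record_stack_address(void *arg)
{
    struct stack_probe *probe = arg;
    int local = 0;
    probe->local_addr = (uintptr_t)&local;
    return NULL;
}

/* Start `n` OS threads with an explicit stack size and check that each
 * thread's local variable (and the calling thread's) has a distinct address.
 * Returns 1 if all differ, 0 if any collide, -1 on error. */
int threads_have_separate_stacks(int n, size_t stack_size)
{
    assert(n > 0 && n <= MAX_THREADS);
    pthread_t tids[MAX_THREADS];
    struct stack_probe probes[MAX_THREADS];
    pthread_attr_t attr;
    int main_local = 0;
    int created = 0;

    if (pthread_attr_init(&attr) != 0)
        return -1;
    /* Each thread gets its own stack of this size (>= PTHREAD_STACK_MIN). */
    if (pthread_attr_setstacksize(&attr, stack_size) == 0) {
        for (; created < n; created++)
            if (pthread_create(&tids[created], &attr, record_stack_address,
                               &probes[created]) != 0)
                break;
    }
    pthread_attr_destroy(&attr);

    /* Join only after all threads exist, so no stack is reused early. */
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    if (created < n)
        return -1;

    for (int i = 0; i < n; i++) {
        if (probes[i].local_addr == (uintptr_t)&main_local)
            return 0;
        for (int j = i + 1; j < n; j++)
            if (probes[i].local_addr == probes[j].local_addr)
                return 0;
    }
    return 1;
}
```

Light threads created with ngx.thread.spawn would not show up in such a check at all, since they do not create OS threads.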

Other System Allocators

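Some users choose to use third-party memory allocators in their own builds of OpenResty or Nginx. Common examples are tcmalloc and jemalloc, which can be faster than the system allocator (e.g., malloc). They can indeed deliver noticeable speedups where third-party Nginx modules, Lua C modules, or C libraries (including OpenSSL!) call malloc() directly to request small memory blocks. However, they bring little benefit to the parts that already use well-designed allocators (such as Nginx's memory pools and LuaJIT's built-in allocator). On the contrary, bringing in such "external" allocators can introduce new complexity and problems of its own. We will discuss this in more detail in a follow-up article.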

Used or Unused

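Even with the application-level memory breakdown introduced above, it is hard to tell directly which virtual memory pages are actually used and which are not. Only the Nginx Shm Loaded component of the pie chart consists purely of virtual memory that is actually in use; the other components include both used and unused virtual memory pages. Fortunately, memory allocated by the Glibc allocator and the LuaJIT allocator is usually put to use right away, so in most cases the difference between the two is small.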
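At the level of individual pages, Linux can report which pages of a mapping are resident through the mincore() system call. The sketch below, assuming Linux, maps a small anonymous region, writes to a few of its pages, and then asks the kernel which pages are backed by physical memory. The function name is hypothetical, and this is not a description of how OpenResty XRay works internally.

```c
#define _DEFAULT_SOURCE 1   /* for MAP_ANONYMOUS and mincore() */
#include <assert.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Map `npages` anonymous pages, write to the page indexes listed in
 * `touch`, then ask the kernel which pages are resident. Stores one flag
 * per page into `resident` (which must hold npages entries; 1 = resident)
 * and returns the number of resident pages, or -1 on error. */
int resident_pages_after_touch(size_t npages, const size_t *touch,
                               size_t ntouch, unsigned char *resident)
{
    long page = sysconf(_SC_PAGESIZE);
    assert(npages > 0 && page > 0 && resident != NULL);
    size_t len = npages * (size_t)page;

    char *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    for (size_t i = 0; i < ntouch; i++) {
        assert(touch[i] < npages);
        p[touch[i] * (size_t)page] = 1;   /* first write faults the page in */
    }

    unsigned char *vec = malloc(npages);
    if (vec == NULL || mincore(p, len, vec) != 0) {
        free(vec);
        munmap(p, len);
        return -1;
    }
    int count = 0;
    for (size_t i = 0; i < npages; i++) {
        resident[i] = vec[i] & 1;         /* low bit set = page is resident */
        count += resident[i];
    }
    free(vec);
    munmap(p, len);
    return count;
}
```

Untouched pages of a private anonymous mapping are typically reported as non-resident, which is exactly the used-versus-unused distinction that a size-only breakdown cannot show.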

Traditional Nginx Servers

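Traditional Nginx server software is just a strict subset of an OpenResty application. Its users can still inspect the memory usage of the system allocator and of Nginx shared memory zones, and sometimes other memory allocators come into play as well. OpenResty XRay can inspect and analyze these server processes directly, even in production. Of course, if no Lua modules are compiled into Nginx, no Lua-related memory usage will be shown.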

Conclusion

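This is the first article in the series. Throughout the series, we explain in detail how OpenResty and Nginx allocate and manage memory, to help applications built on these technologies optimize their memory usage effectively. Follow-up articles will take up each topic in depth and cover the various memory allocators and memory management mechanisms. Stay tuned!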


About the Author

Yichun Zhang (章亦春) is the creator of the open source OpenResty® project and the CEO and founder of OpenResty Inc.

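Yichun Zhang (GitHub ID: agentzh) was born in Jiangsu, China, and now lives in the San Francisco Bay Area in the US. He is one of the earliest advocates and leaders of open source technology and culture in China, and has worked at internationally renowned high-tech companies such as Cloudflare, Yahoo!, and Alibaba. A pioneer of "edge computing", "dynamic tracing", and "machine programming", he has more than 22 years of programming experience and more than 16 years of open source experience. As the leader of an open source project whose users span more than 40 million domain names worldwide, he founded the high-tech company OpenResty Inc. in the heart of Silicon Valley, building on the OpenResty® open source project. The company's flagship products are OpenResty XRay (a non-invasive troubleshooting and analysis tool based on dynamic tracing technology) and OpenResty Edge (a multi-purpose … optimized for microservices and distributed traffic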

Translations

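The original English version and a Japanese translation (this article) are available. We also welcome translations into other languages by readers. As long as a translation is complete with nothing omitted, we will consider adopting it. Thank you very much!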

¹ Modern Android systems support swapping memory pages out into memory itself, in compressed form, which saves physical memory space.

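² This compile option is called -DLUAJIT_USE_SYSMALLOC. Never use it in production!

³ Normally the LuaJIT runtime uses only one number type internally (double-precision floating point, double), but passing the compile option -DLUAJIT_NUMMODE=2 also enables an internal 32-bit integer representation.

⁴ You are still responsible, however, for properly removing all references to objects that are no longer needed.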