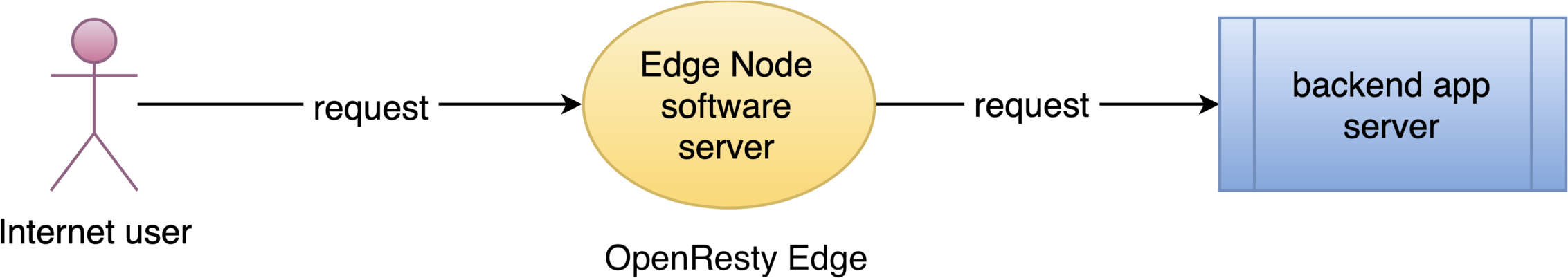

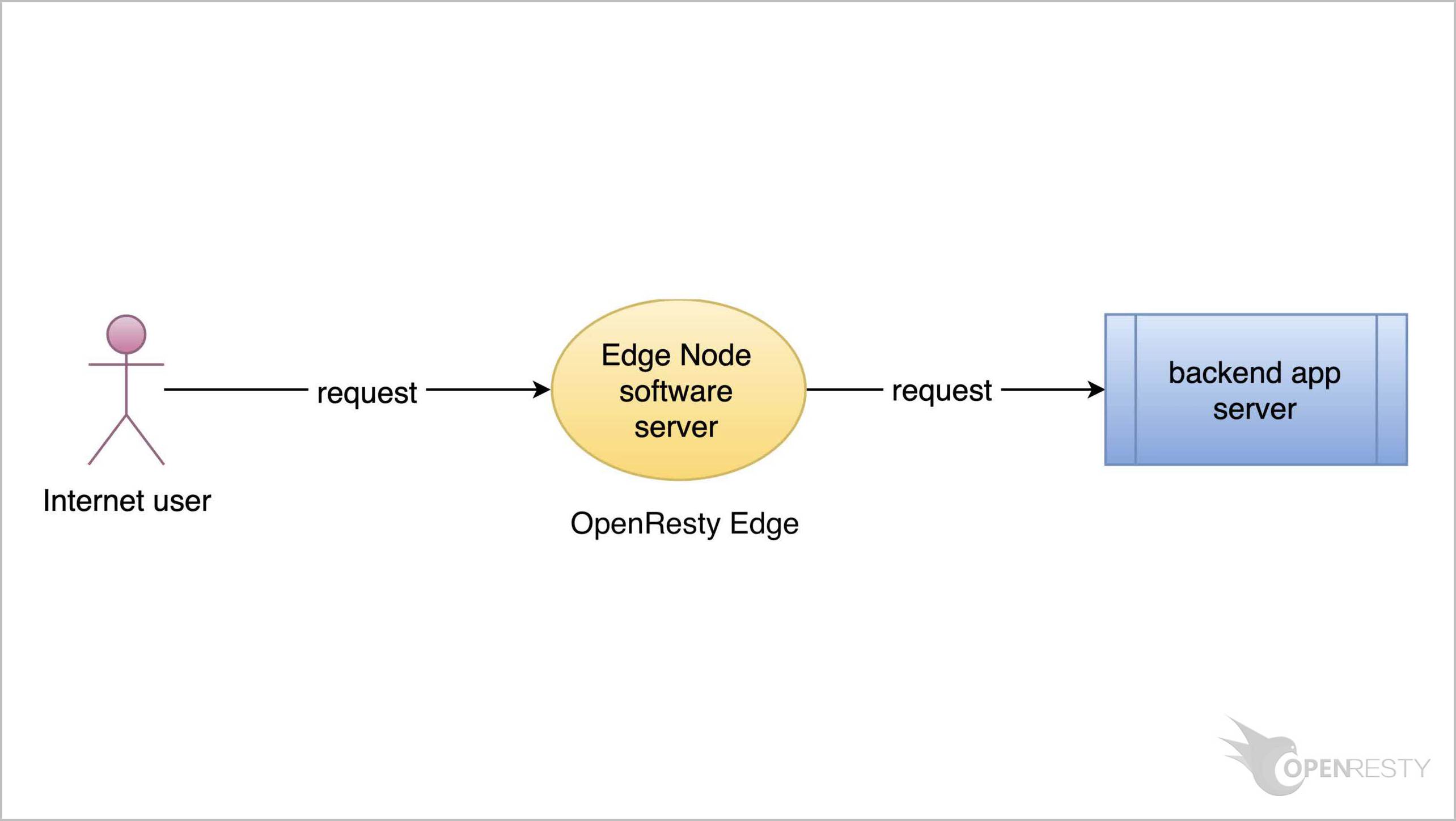

Setting Up the Simplest Reverse Proxy and Load Balancer with OpenResty Edge

Today I'll show you how to set up the simplest possible reverse proxy and load balancer with OpenResty Edge.

We'll do everything in the Edge Admin web console, the central place for managing all gateway server nodes and their configurations.

Creating a Sample Application

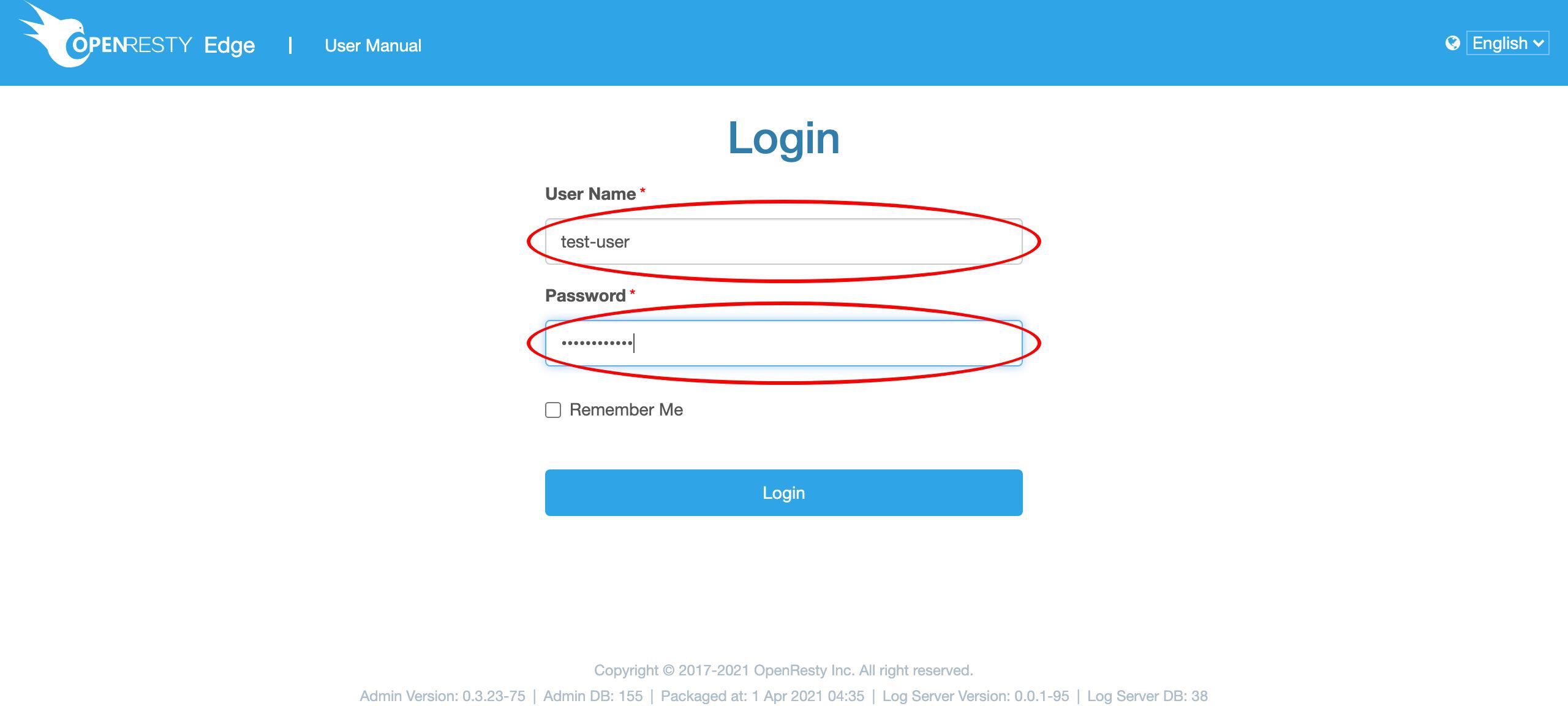

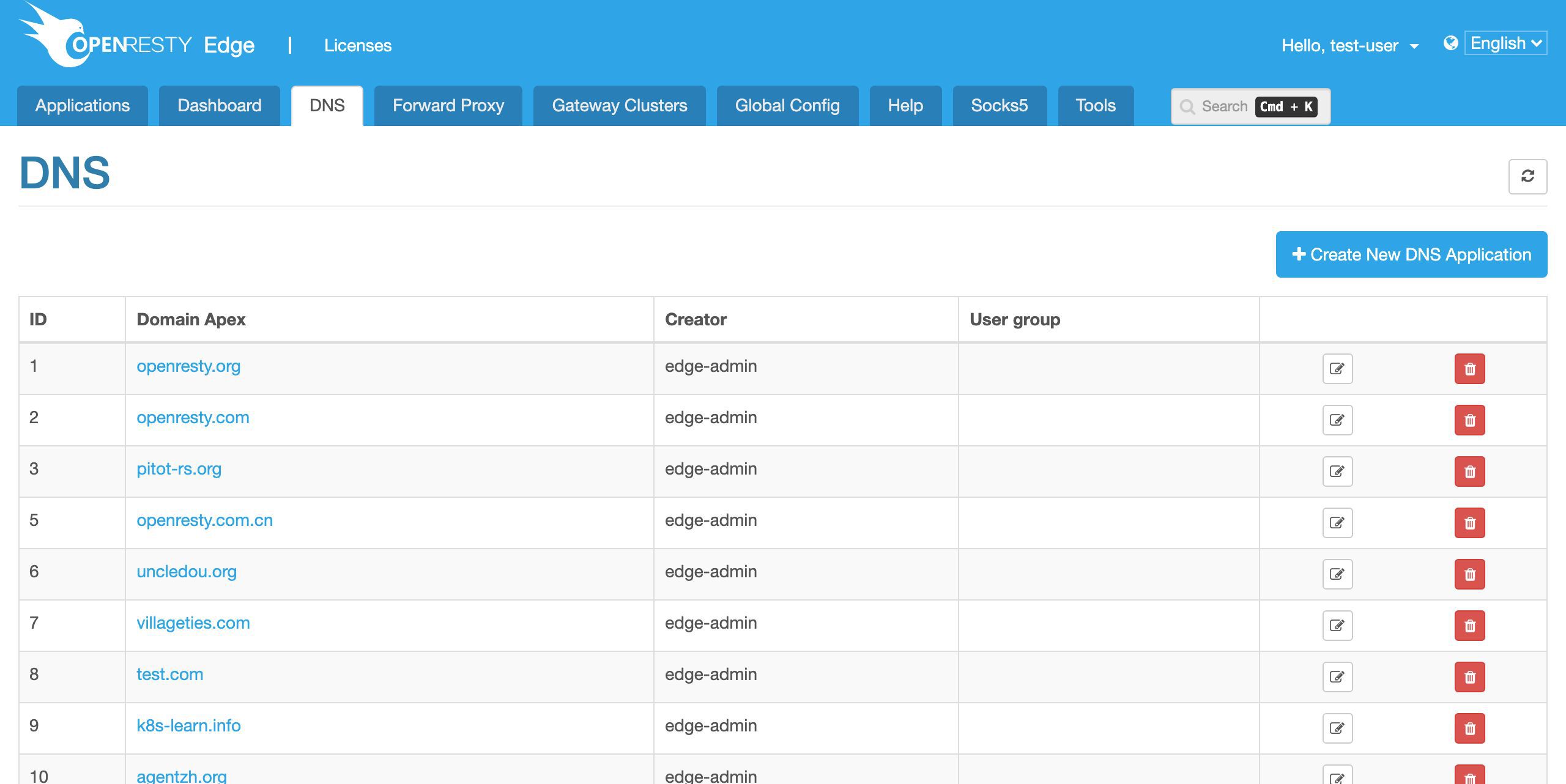

Let's open the OpenResty Edge web console. This is a sample deployment of the console; each user can have their own deployment.

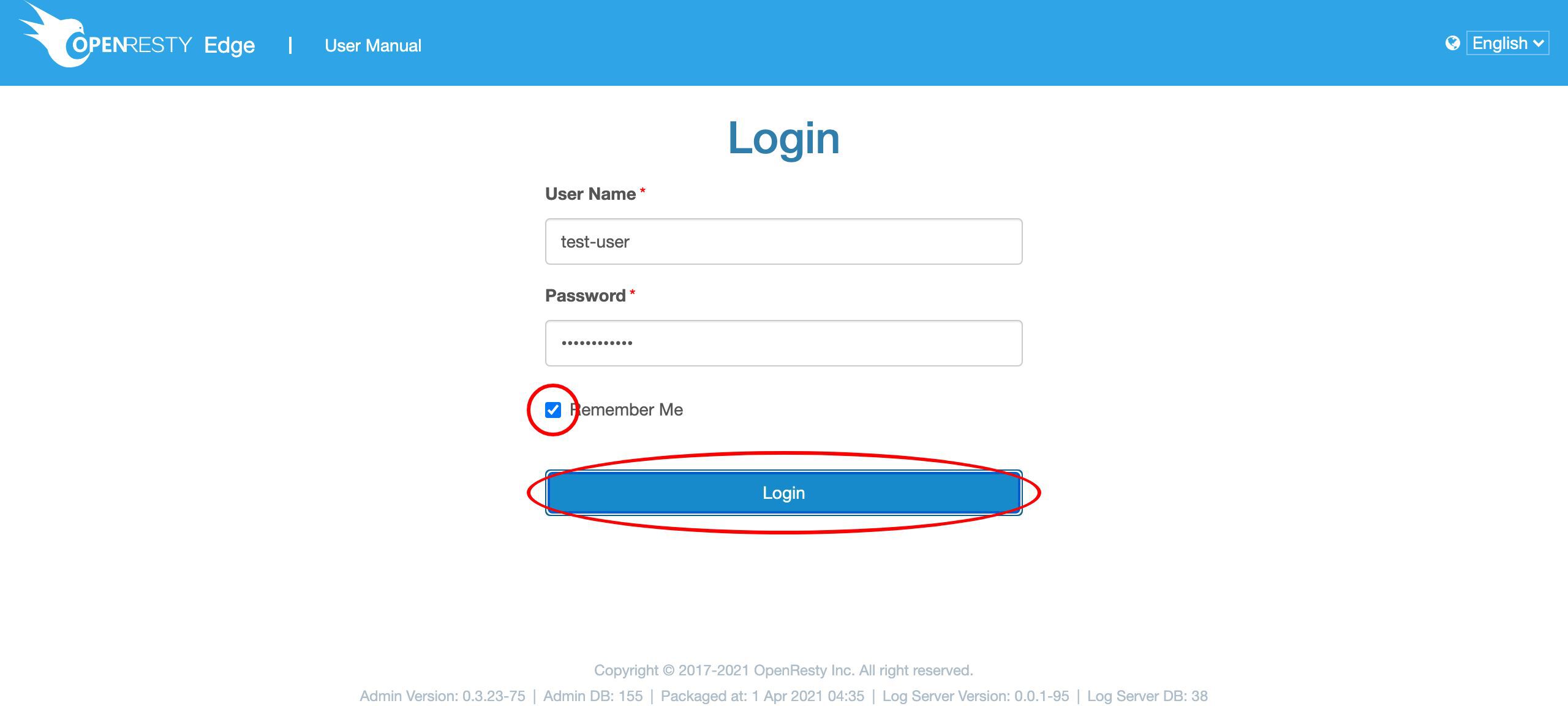

We log in with a username and password.

Other authentication methods can also be configured. Now let's log in.

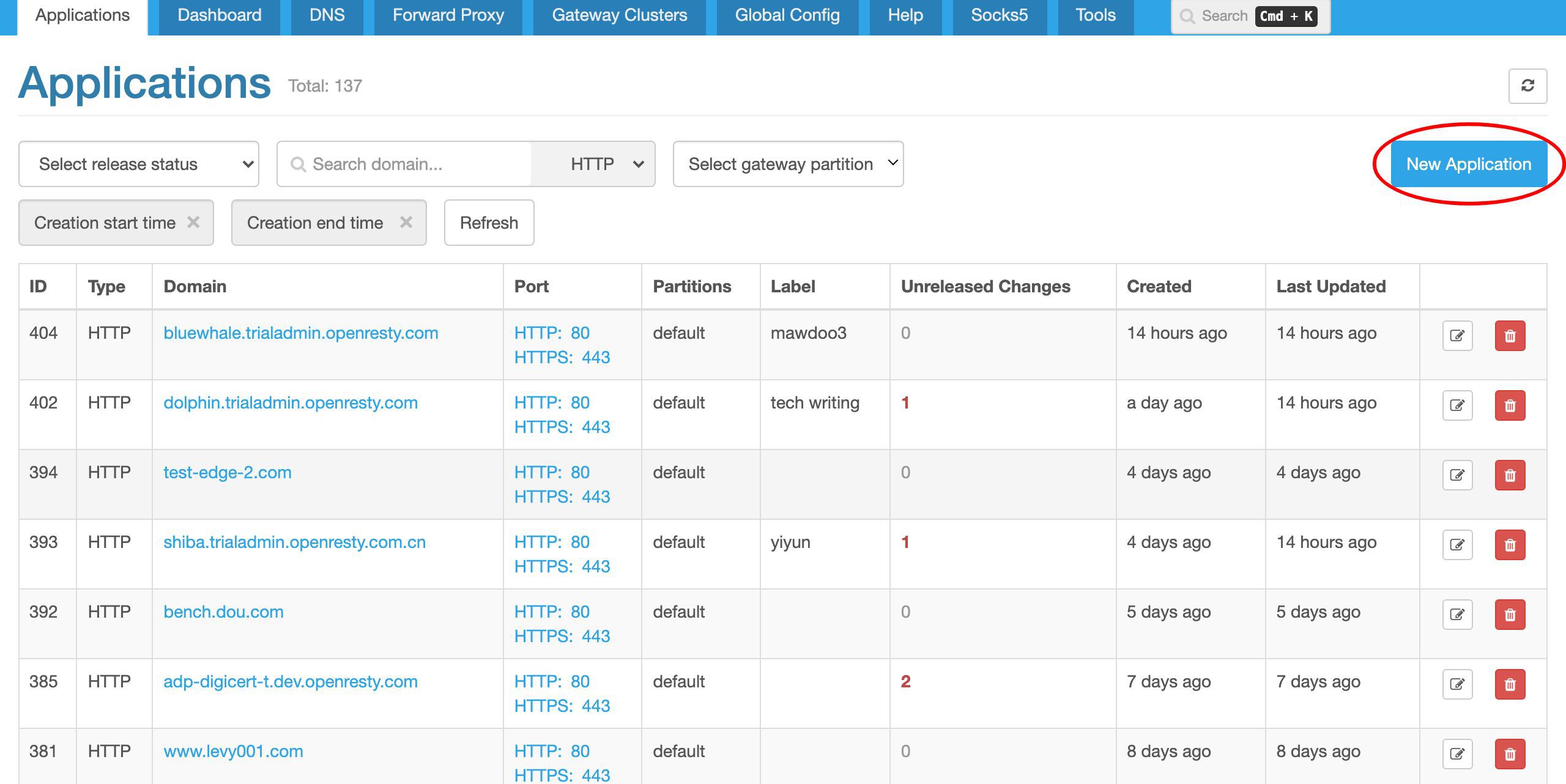

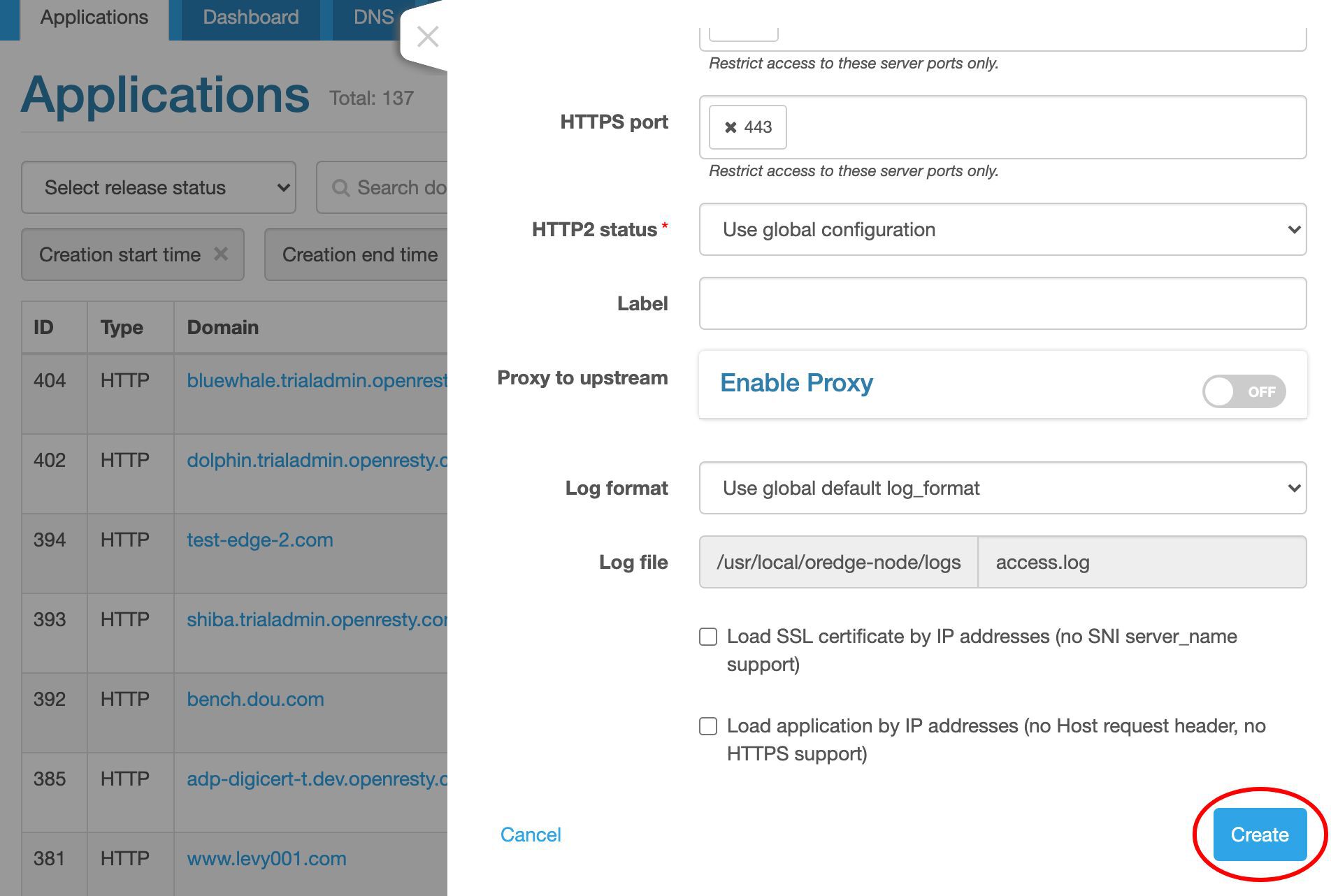

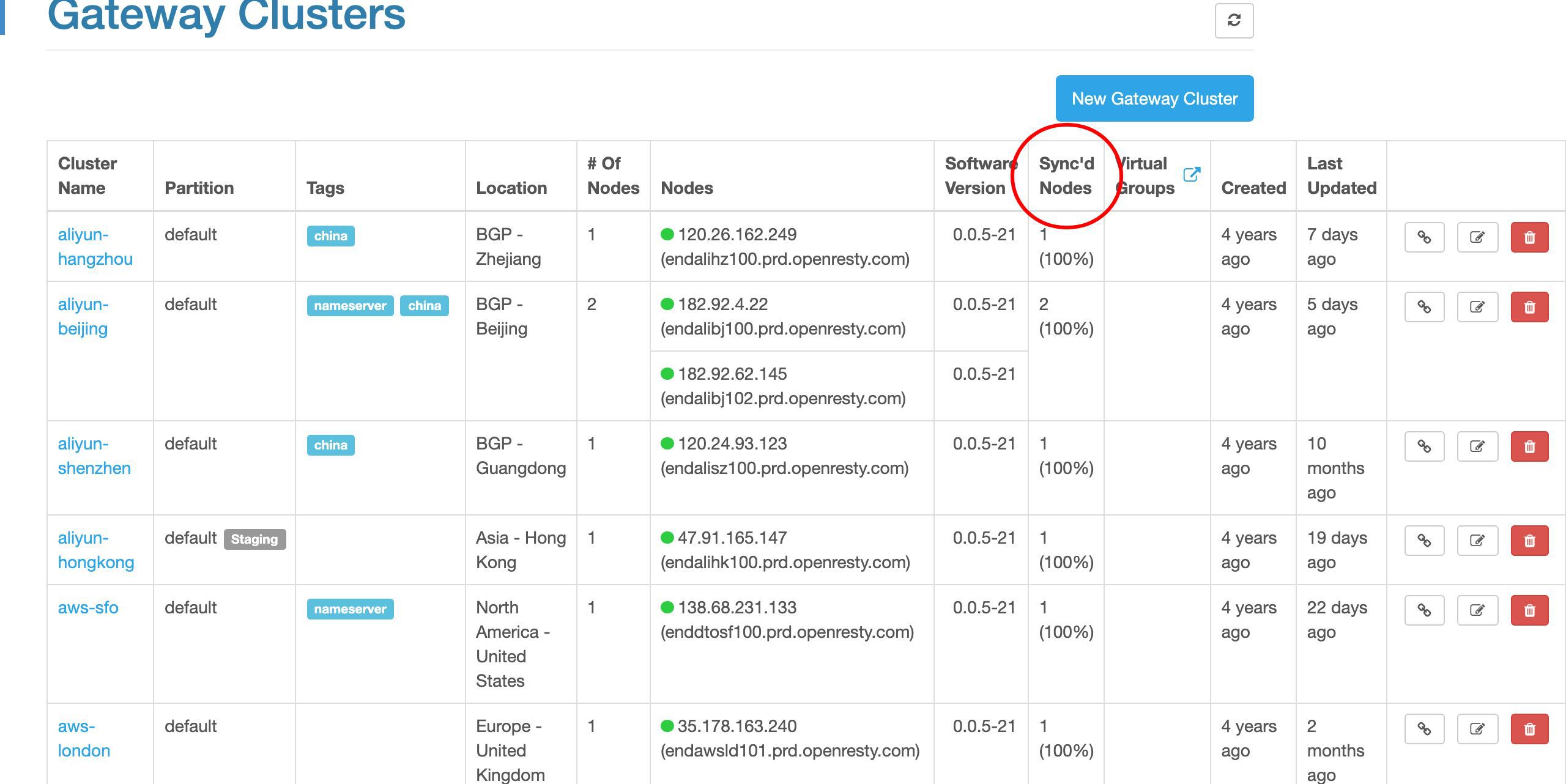

We've reached the application list page, which already contains many applications created earlier. Each application corresponds to a virtual host, or virtual server, within the same gateway.

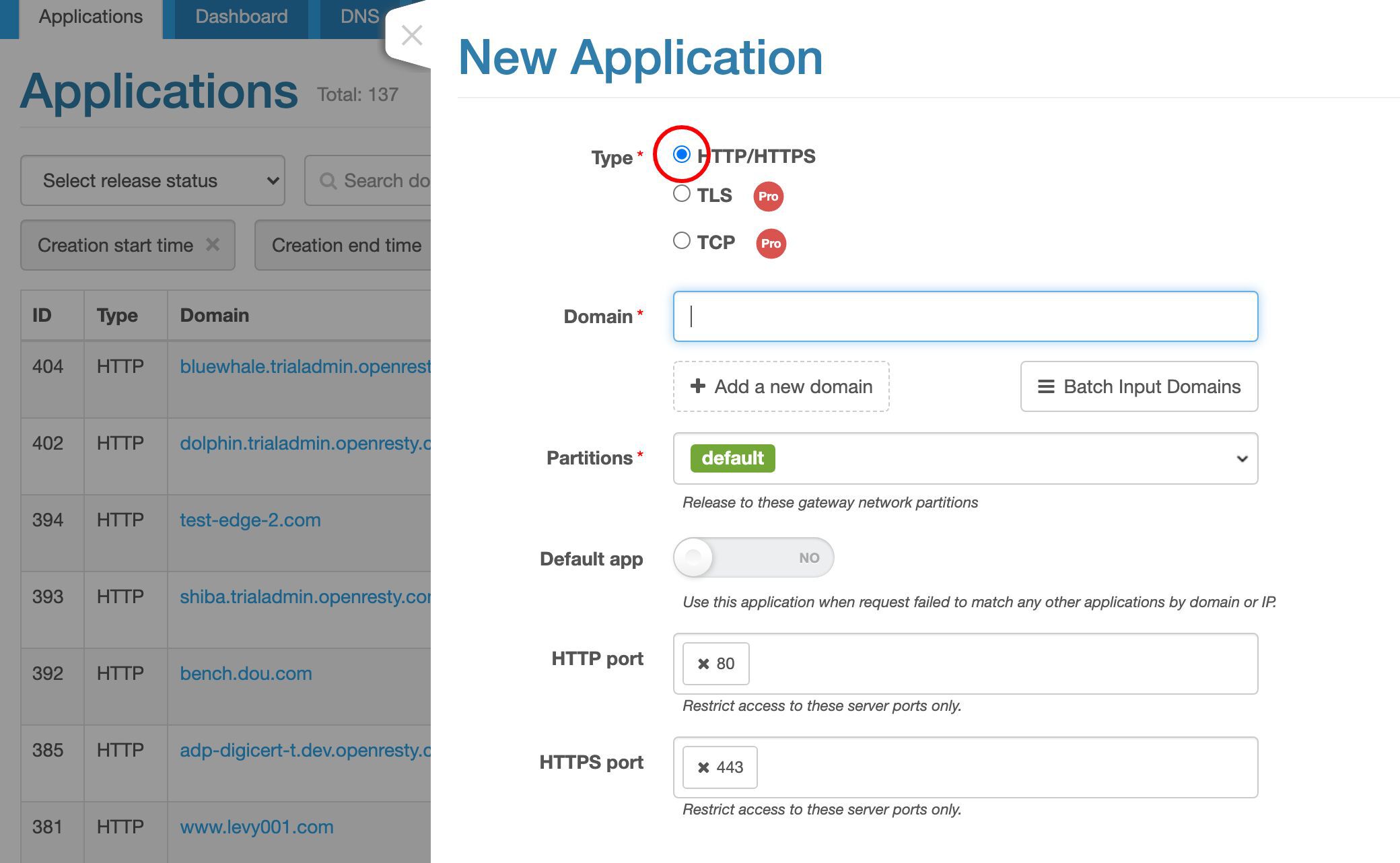

Here, we click the "New Application" button to create a new application.

We'll create an HTTP application, which is the default type.

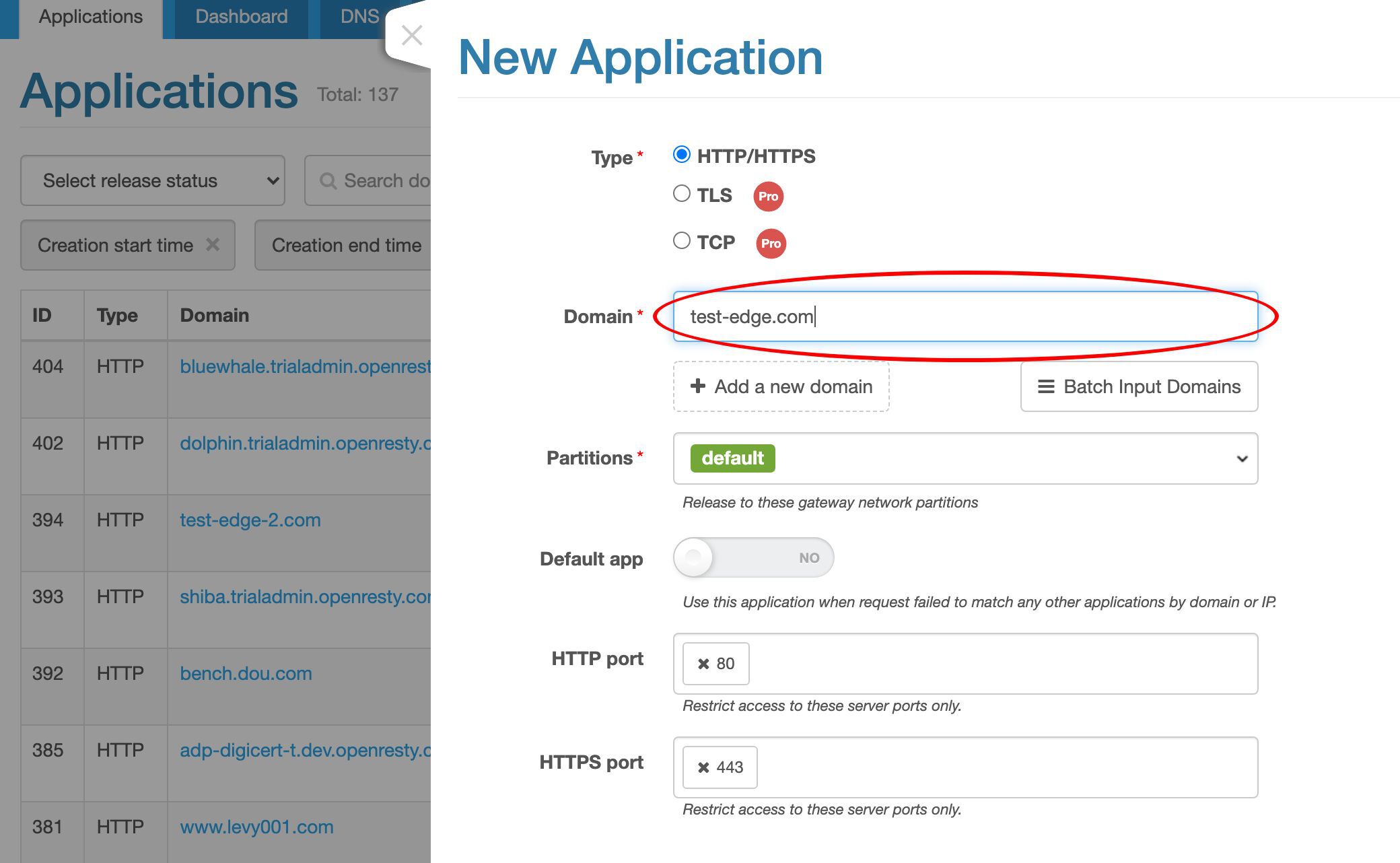

We assign the domain name test-edge.com to this application.

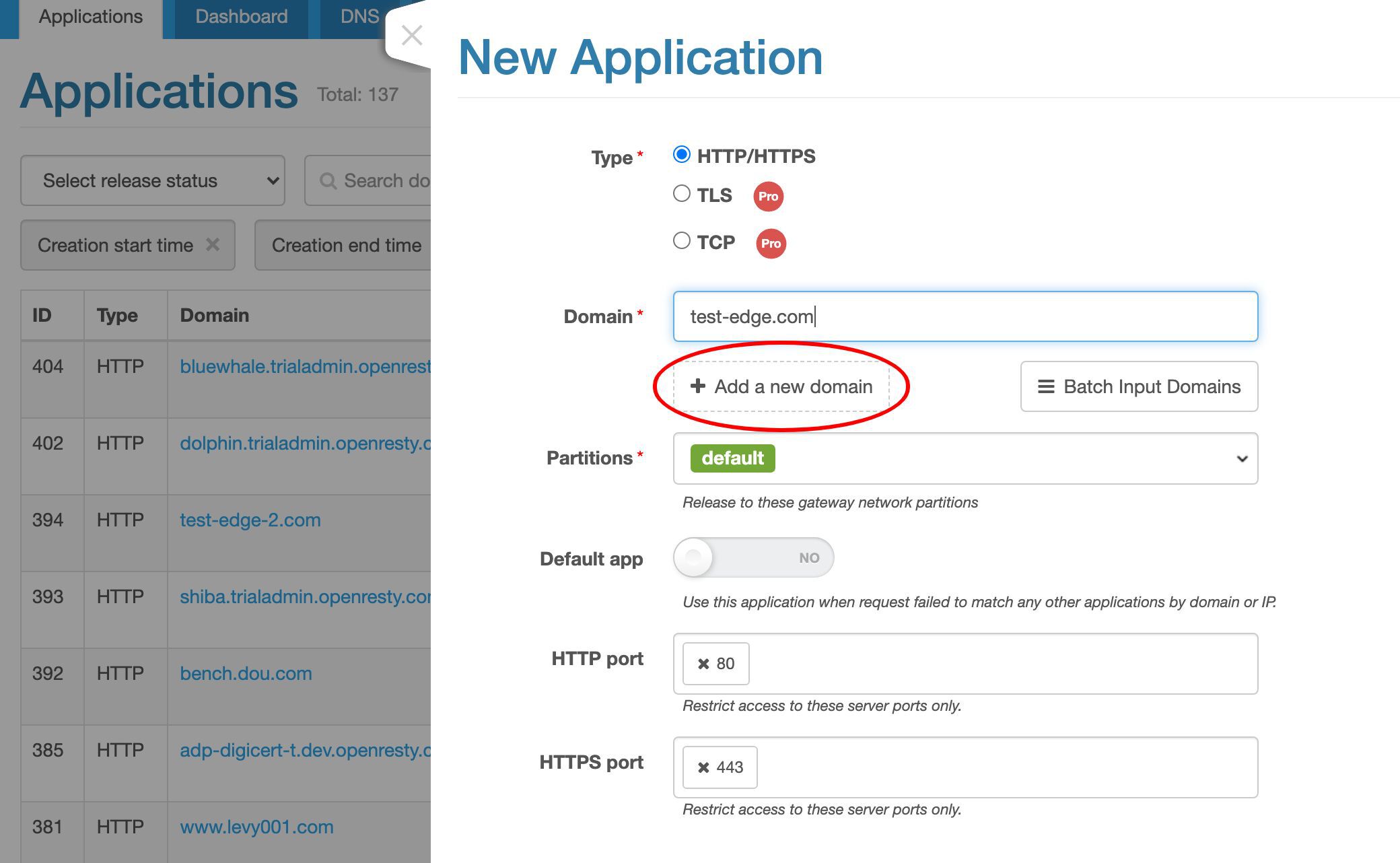

You can also add more domain names, including wildcard domains.
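To make the idea of several domains per application concrete, here is a minimal Python sketch of how a gateway might map a request's Host value to an application, preferring an exact domain match over a wildcard one. The `APPS` table, the `match_app` function, and the precedence rule (exact first, then the longest `*.suffix`) are assumptions for illustration, not OpenResty Edge's actual matching logic.

```python
# Hypothetical mapping from configured domain names to applications.
APPS = {
    "test-edge.com": "test-edge app",
    "*.test-edge.com": "test-edge wildcard app",
}


def match_app(host, apps=APPS):
    """Return the application for a Host header value, or None.

    Assumed precedence: an exact domain match wins; otherwise the longest
    matching "*.suffix" wildcard is used.
    """
    # Drop an optional ":port" suffix and normalize case.
    host = host.split(":", 1)[0].lower()
    if host in apps:
        return apps[host]
    best = None
    for pattern, app in apps.items():
        if pattern.startswith("*."):
            suffix = pattern[1:]  # e.g. ".test-edge.com"
            if host.endswith(suffix) and (best is None or len(suffix) > len(best[0])):
                best = (suffix, app)
    return best[1] if best else None


print(match_app("test-edge.com"))      # test-edge app
print(match_app("www.test-edge.com"))  # test-edge wildcard app
print(match_app("example.org"))        # None
```

Note that in this sketch a bare `test-edge.com` does not match `*.test-edge.com`, so the apex domain has to be listed explicitly, as it is in the console walkthrough.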

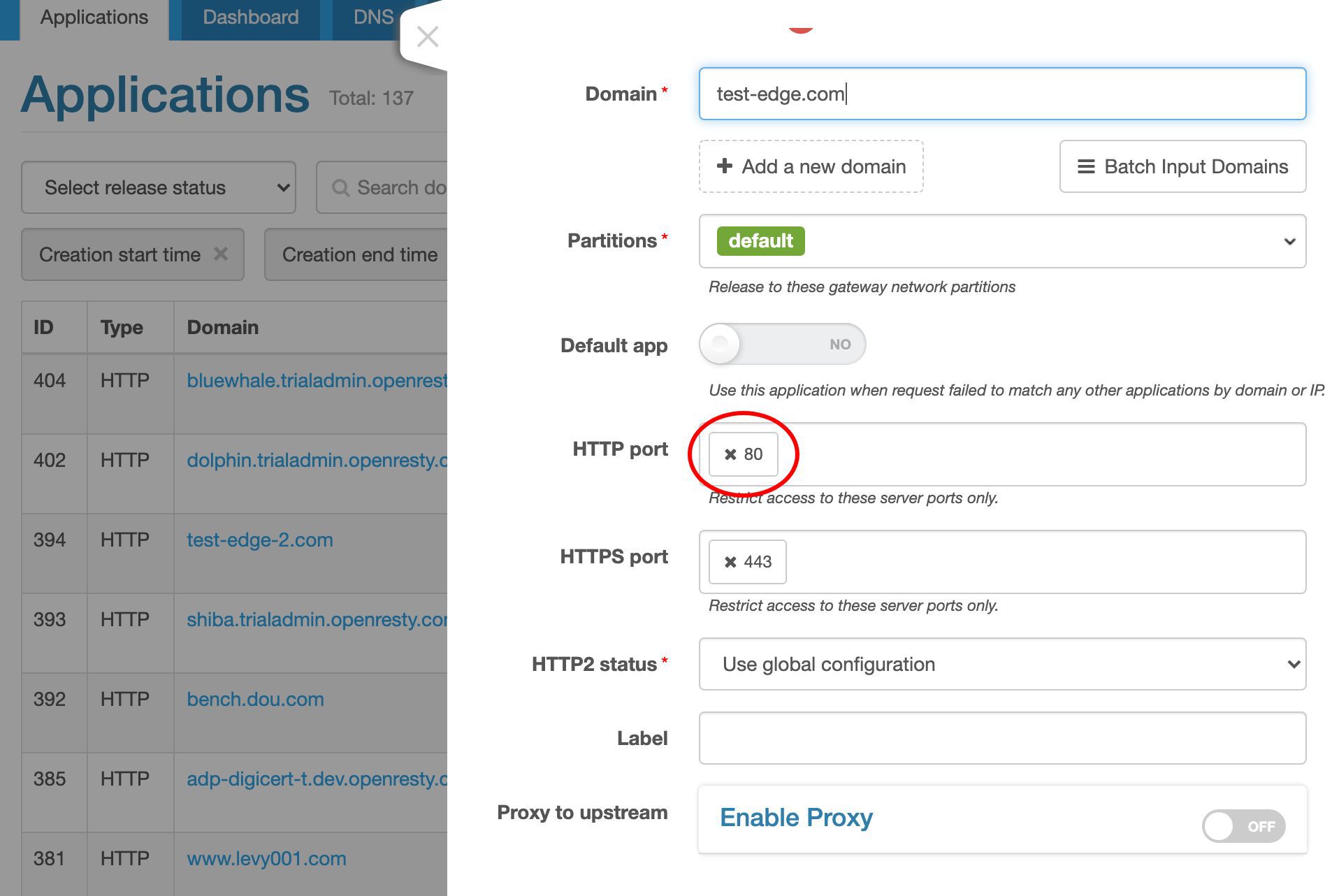

In this example, we only serve port 80.

Now let's create this application!

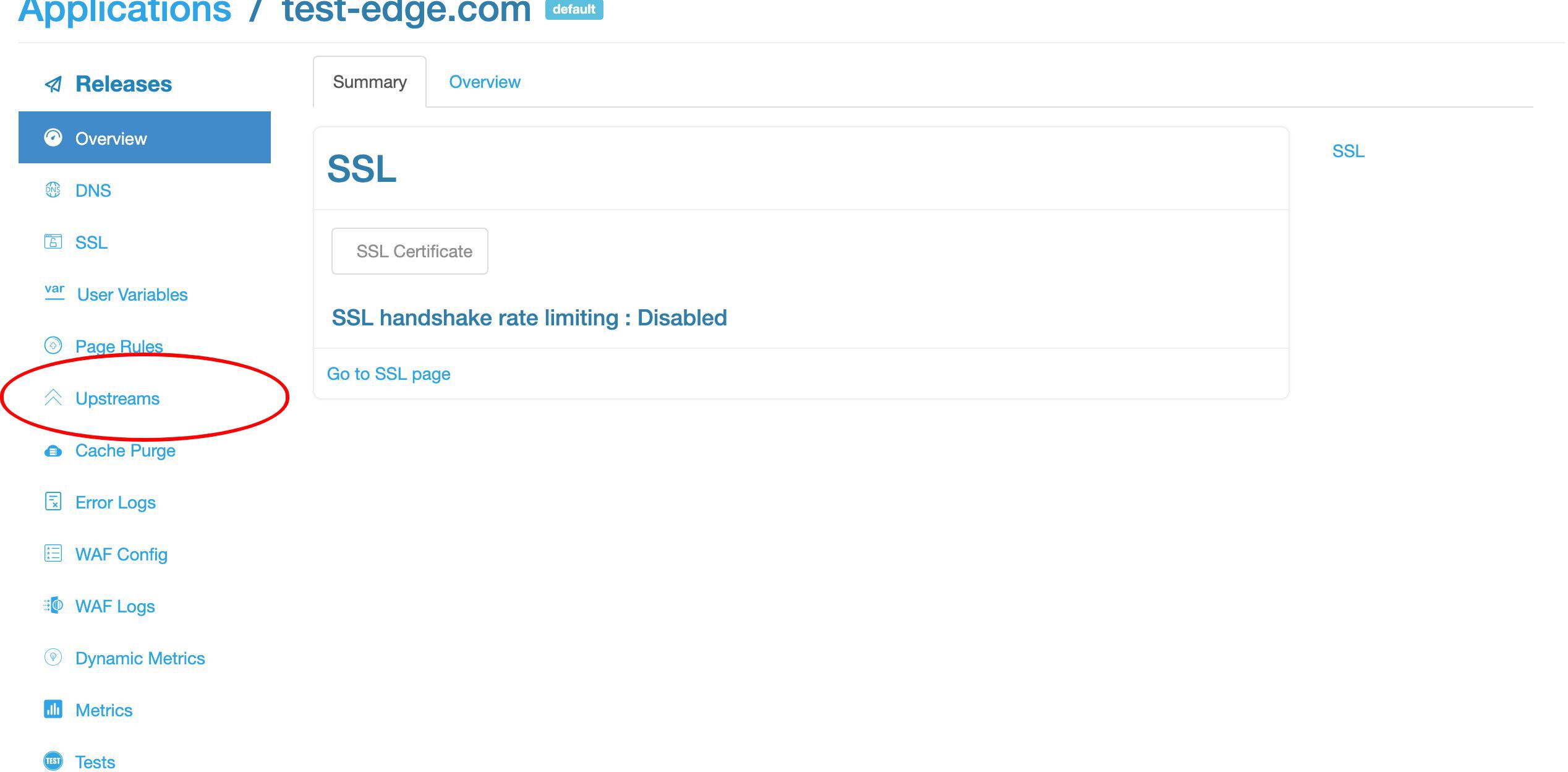

We are now taken to the new application, which is currently empty.

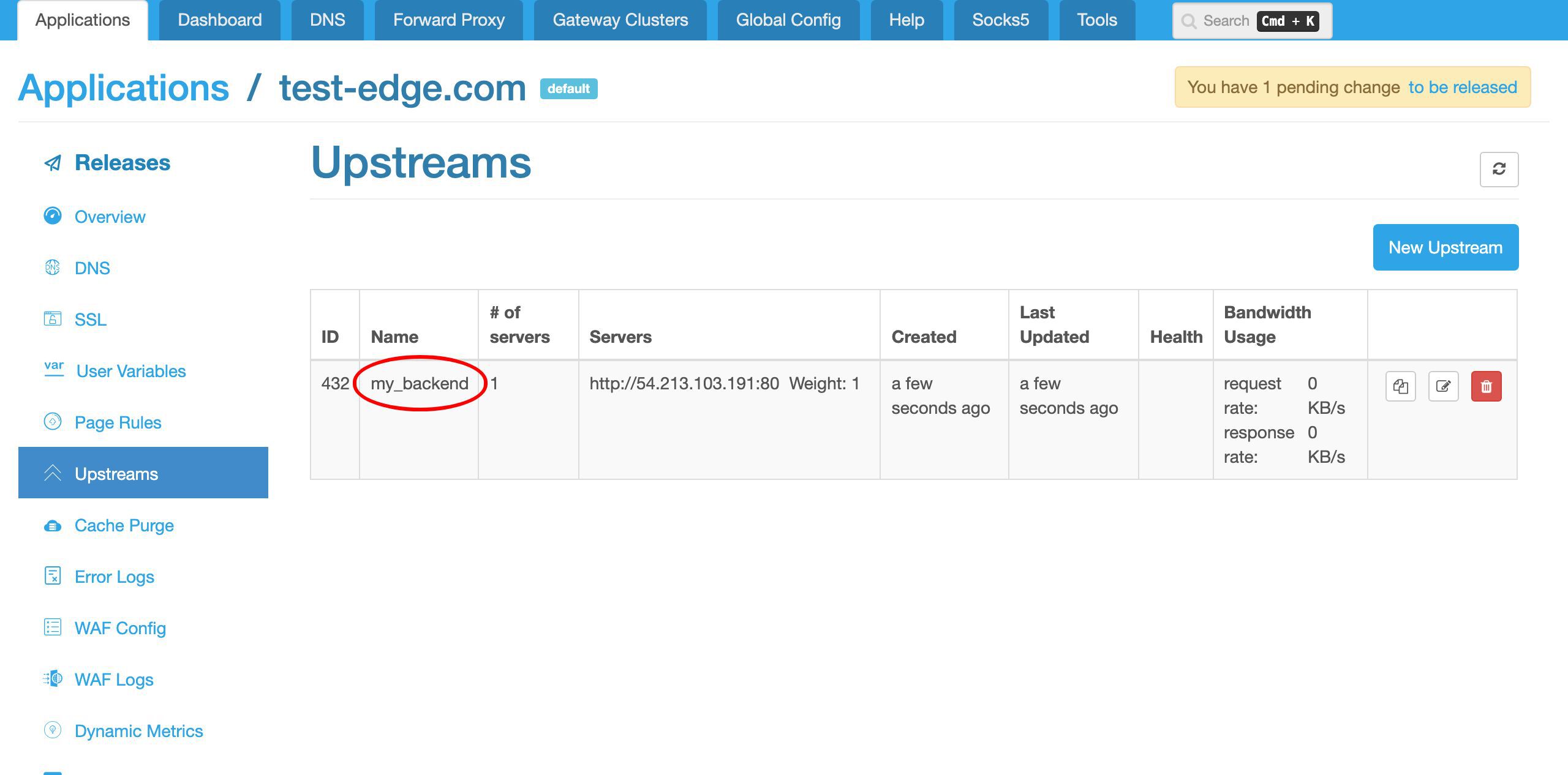

Creating an Upstream for the New Application

Let's go to the Upstreams page.

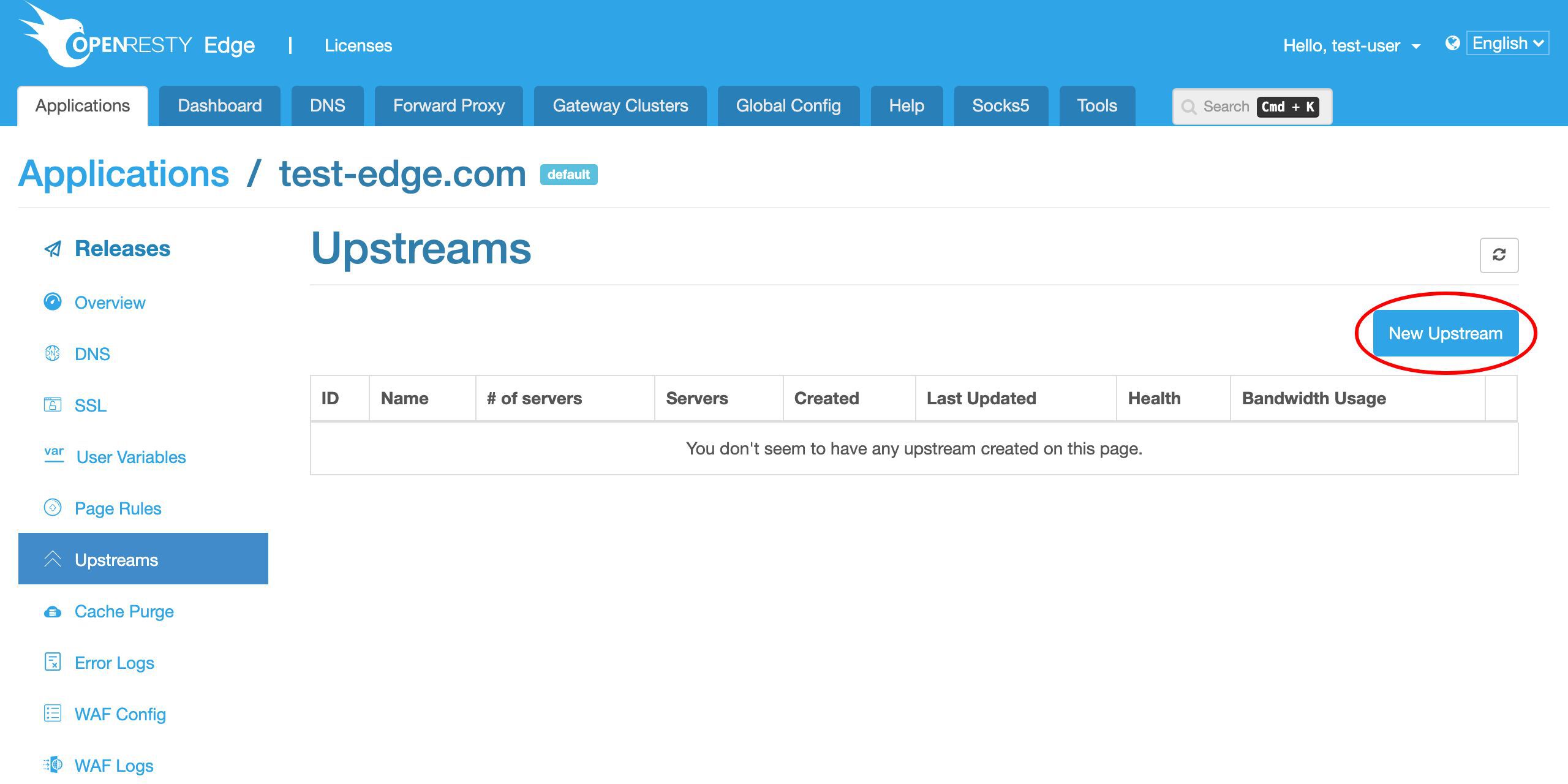

As expected, no upstreams have been defined yet.

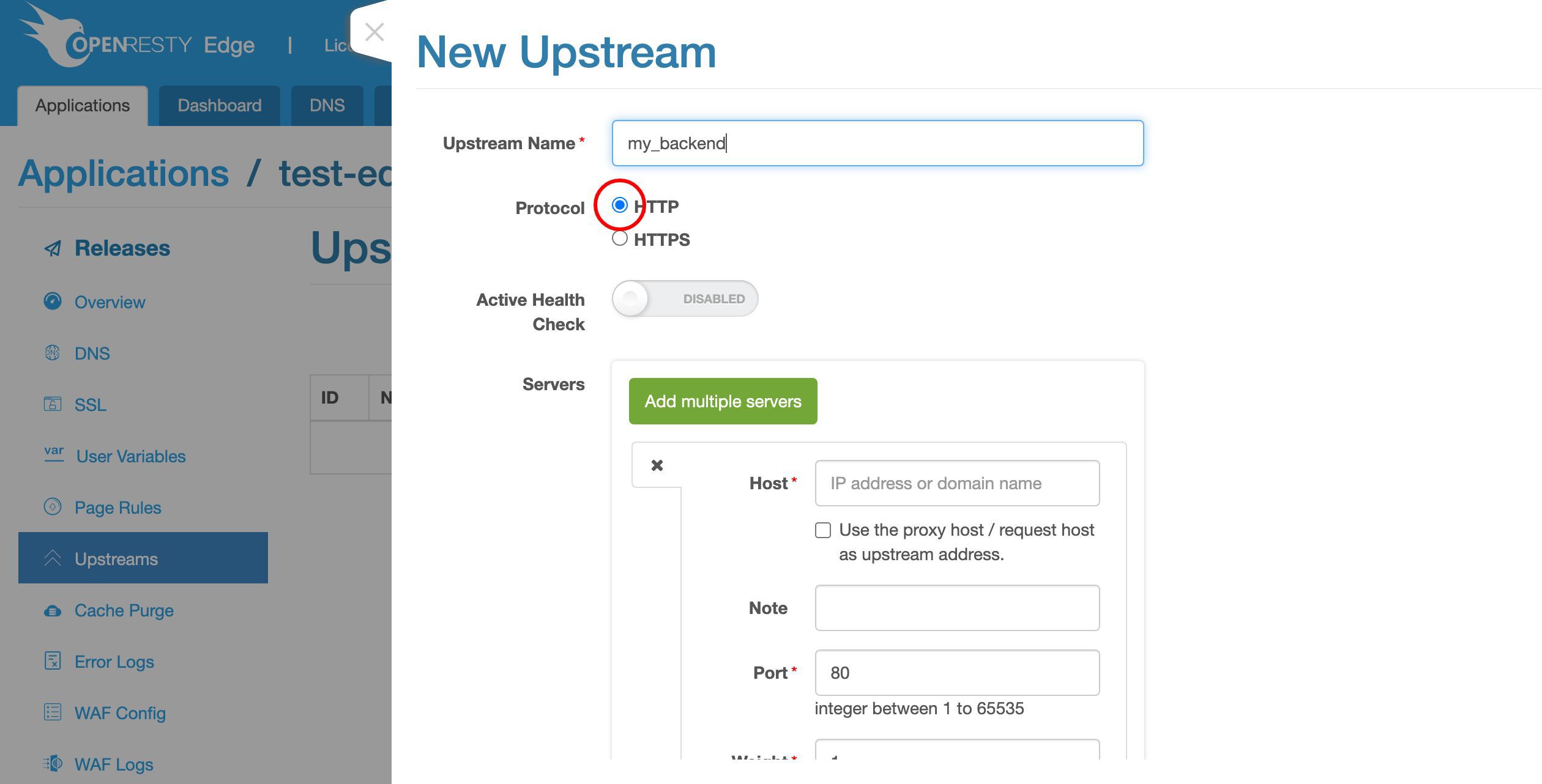

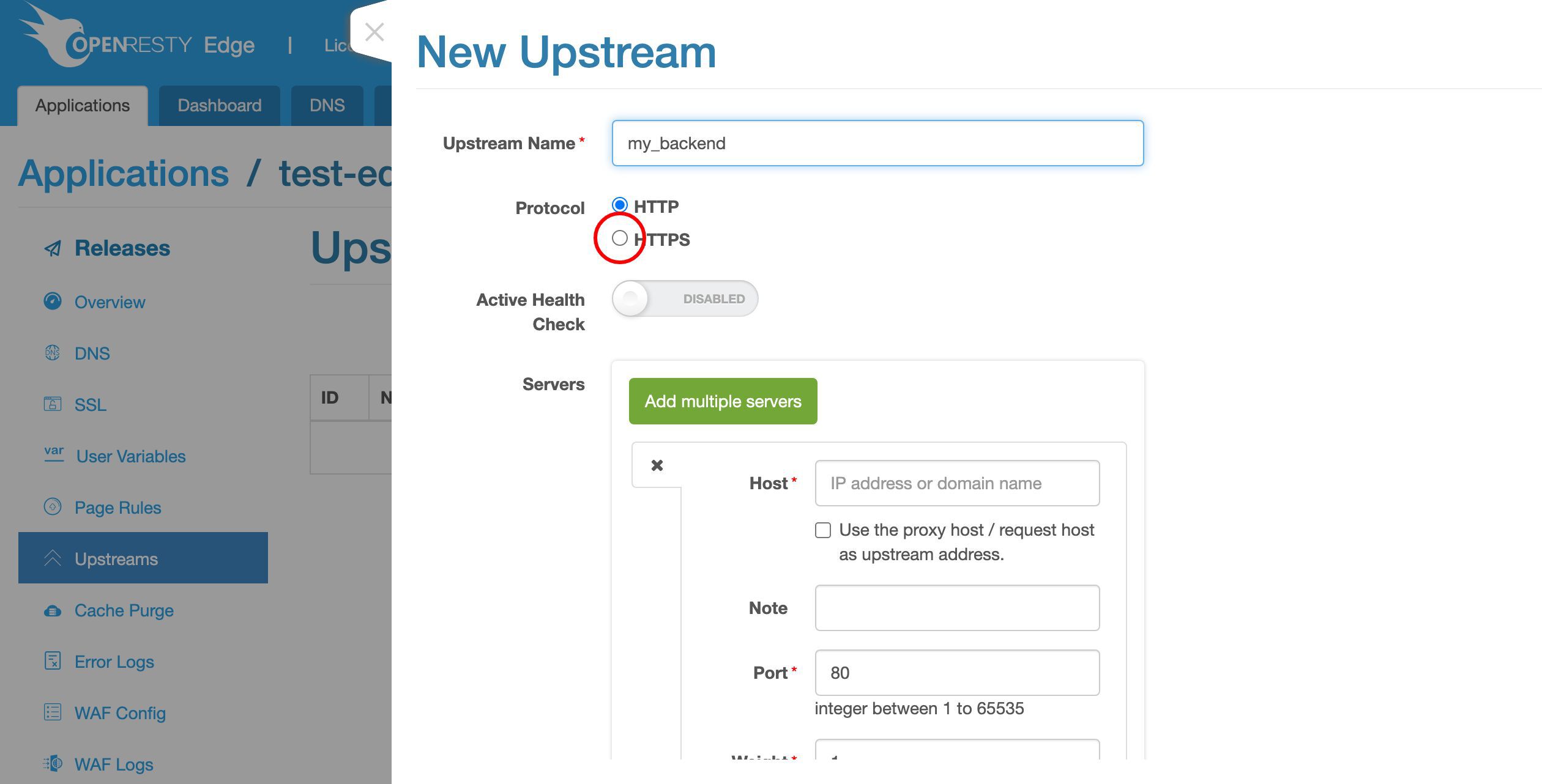

We click the "New Upstream" button to create a new upstream for our backend servers.

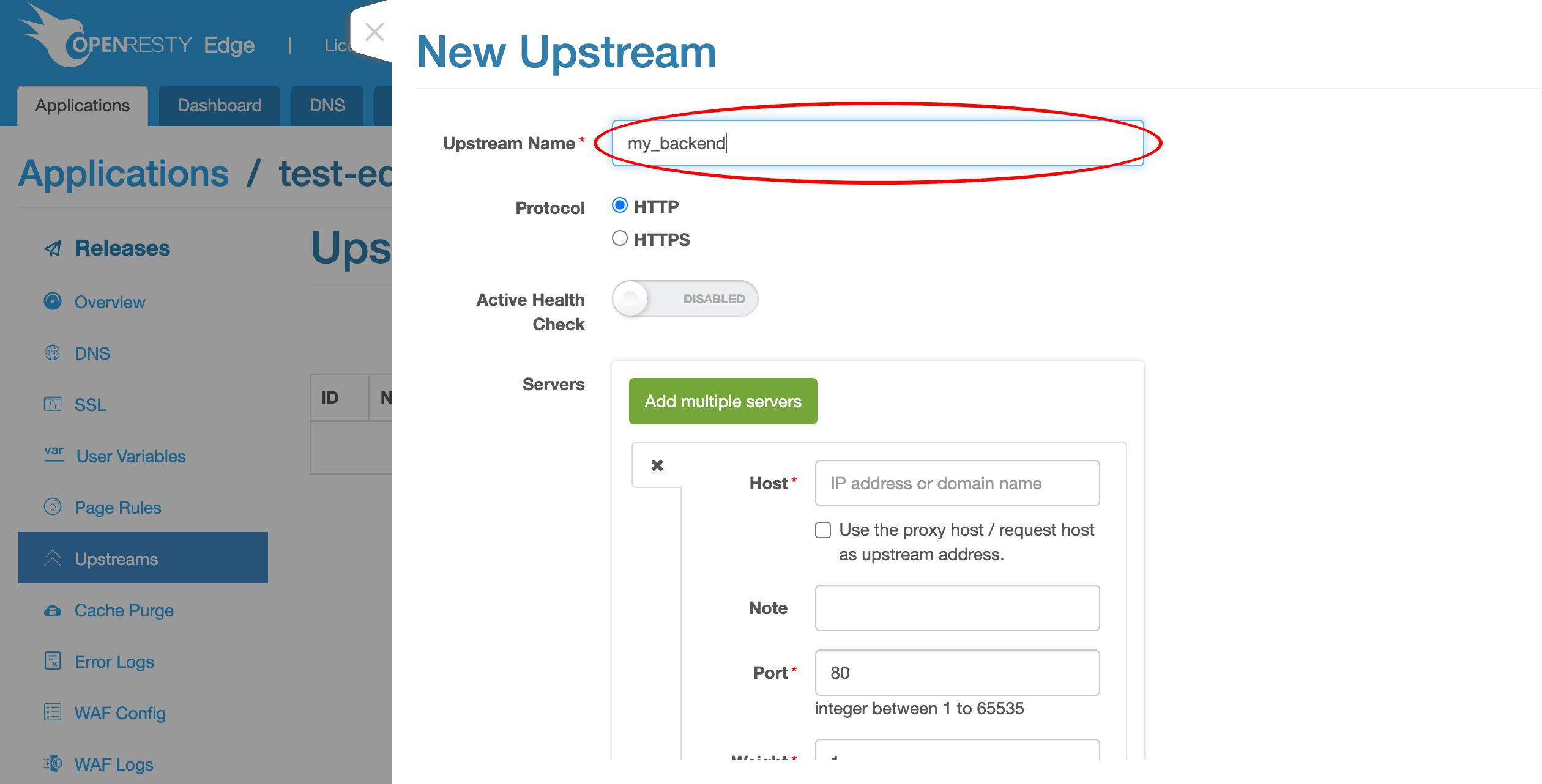

We give this upstream a name, for example my_backend.

For simplicity, we only use the HTTP protocol here.

Eventually, you may want to enable the HTTPS protocol as well.

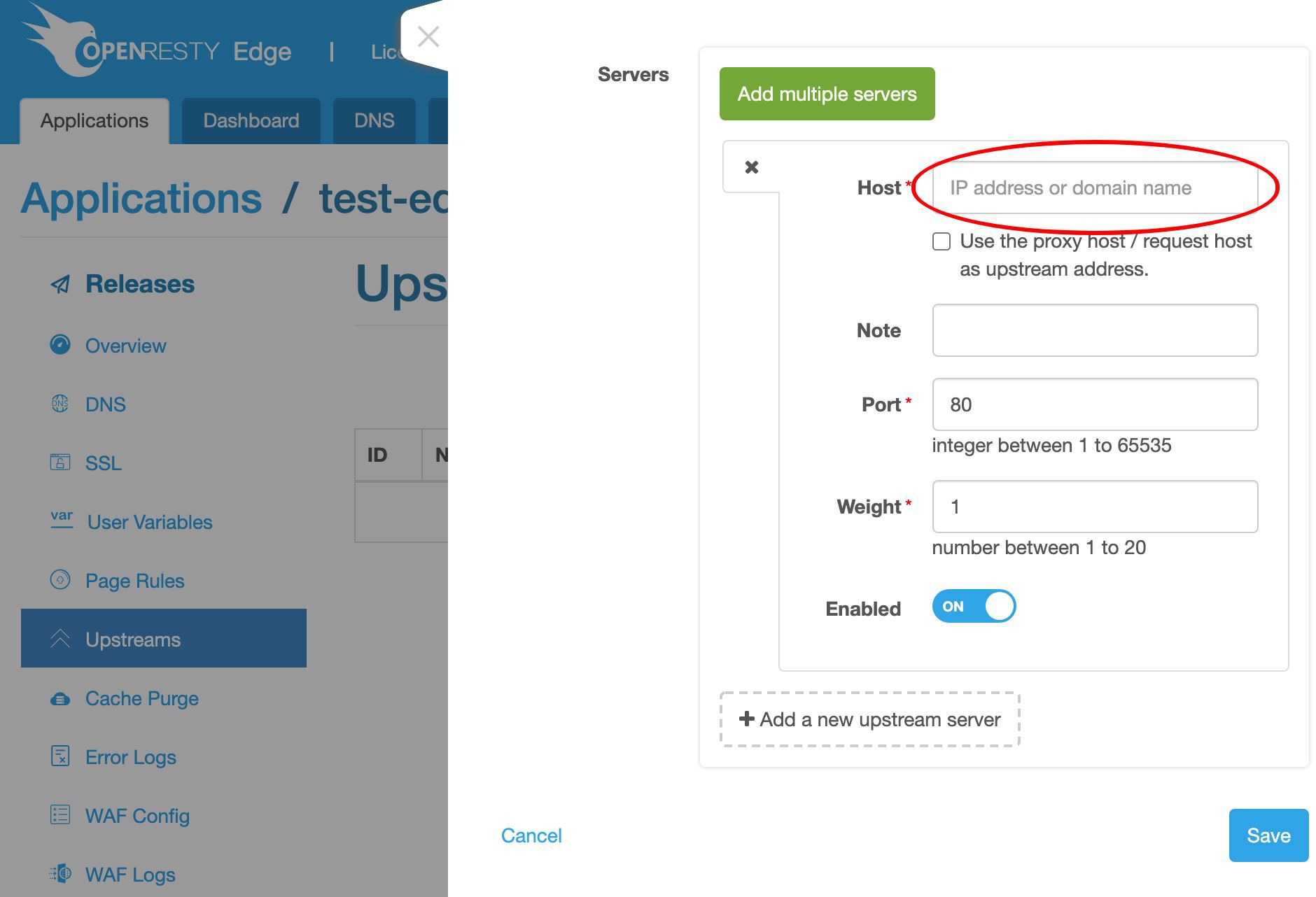

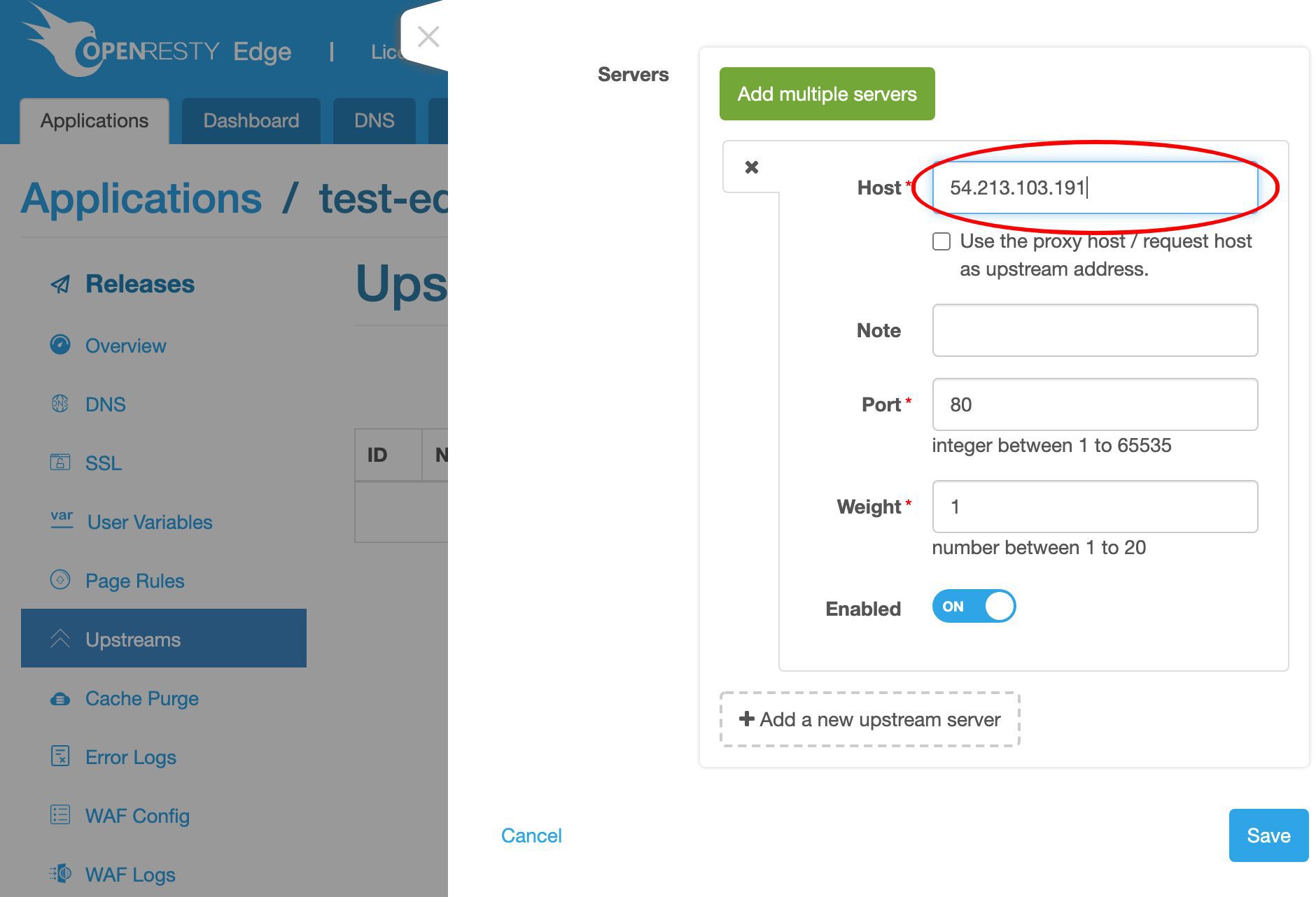

Now we need the IP address of the backend server.

I have prepared a sample backend server at the IP address 54.213.103.191.

It simply returns the default home page of the open-source OpenResty server software.

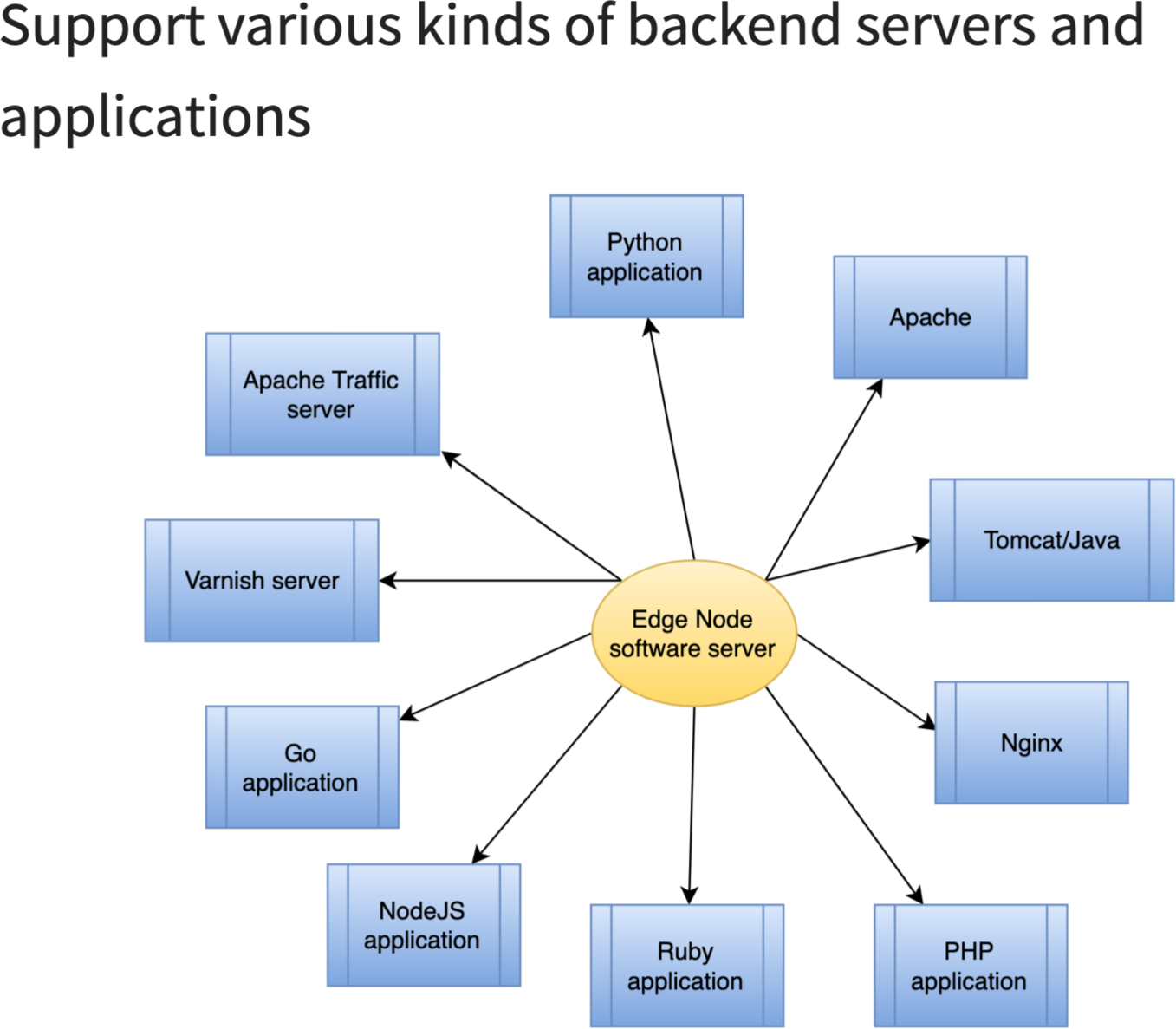

The backend can be any software that speaks HTTP.

Now we can fill in the host field for the backend server.

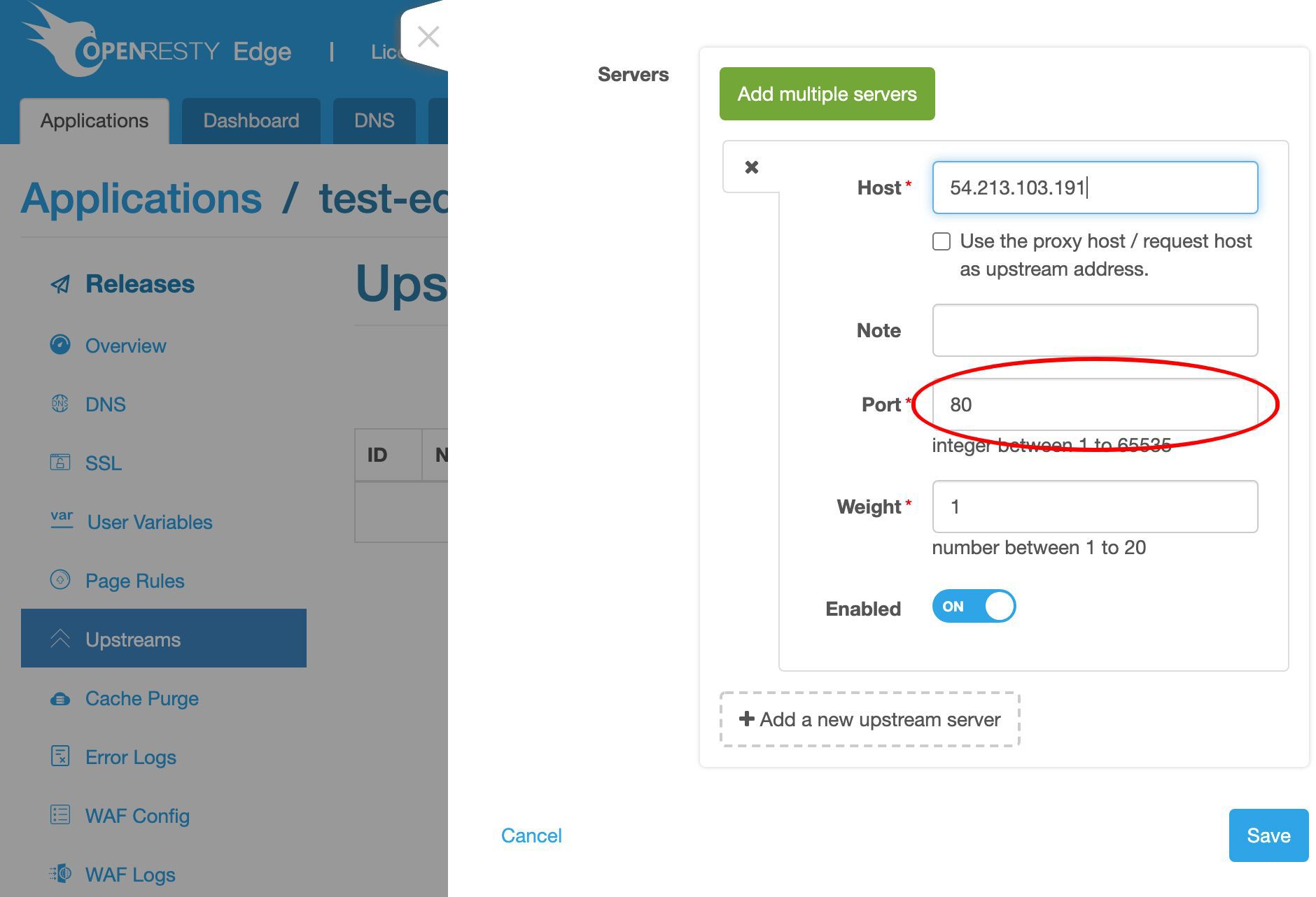

We leave the port at 80.

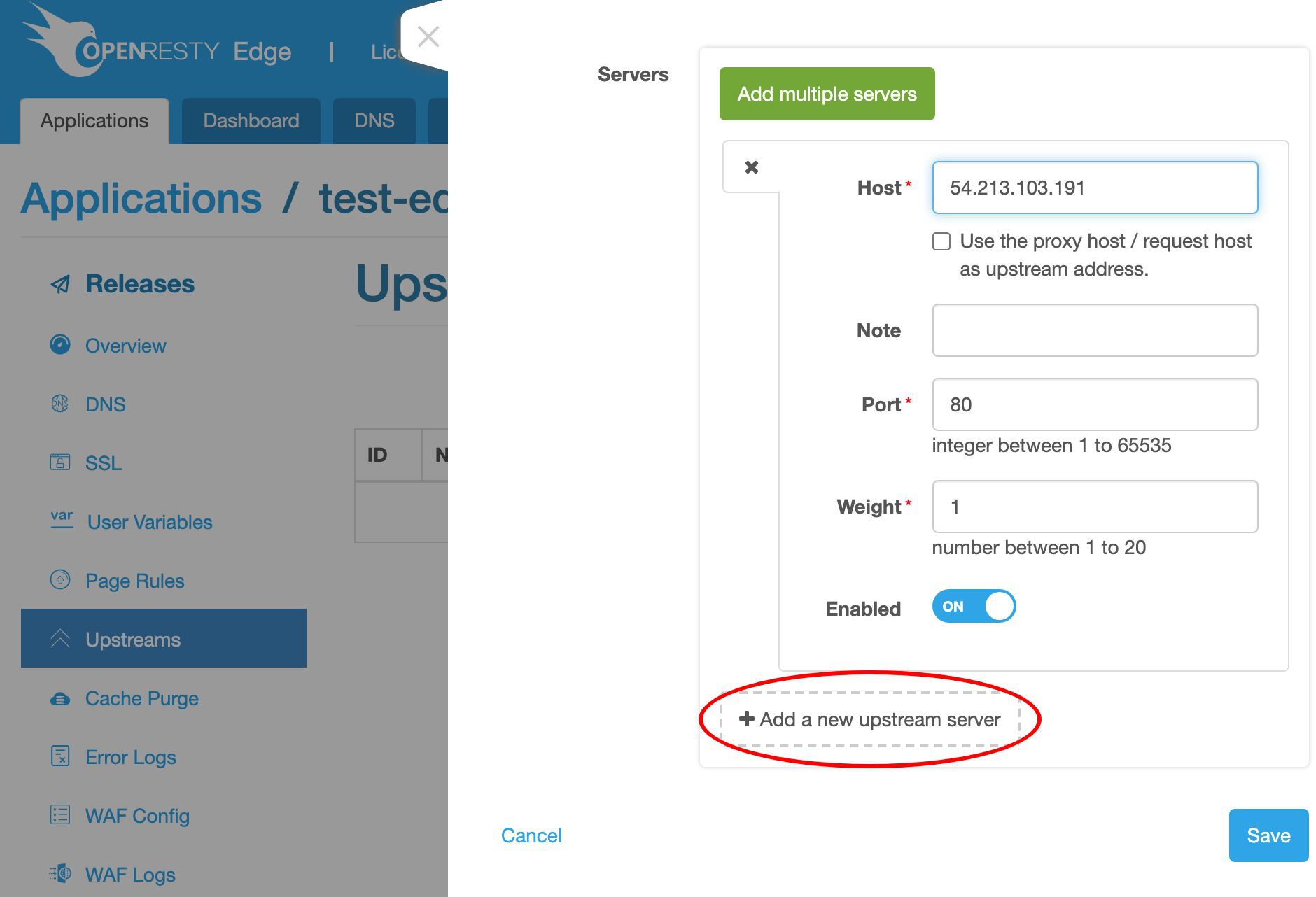

Later, you can add more servers to this upstream by clicking the "Add New Upstream Server" button.

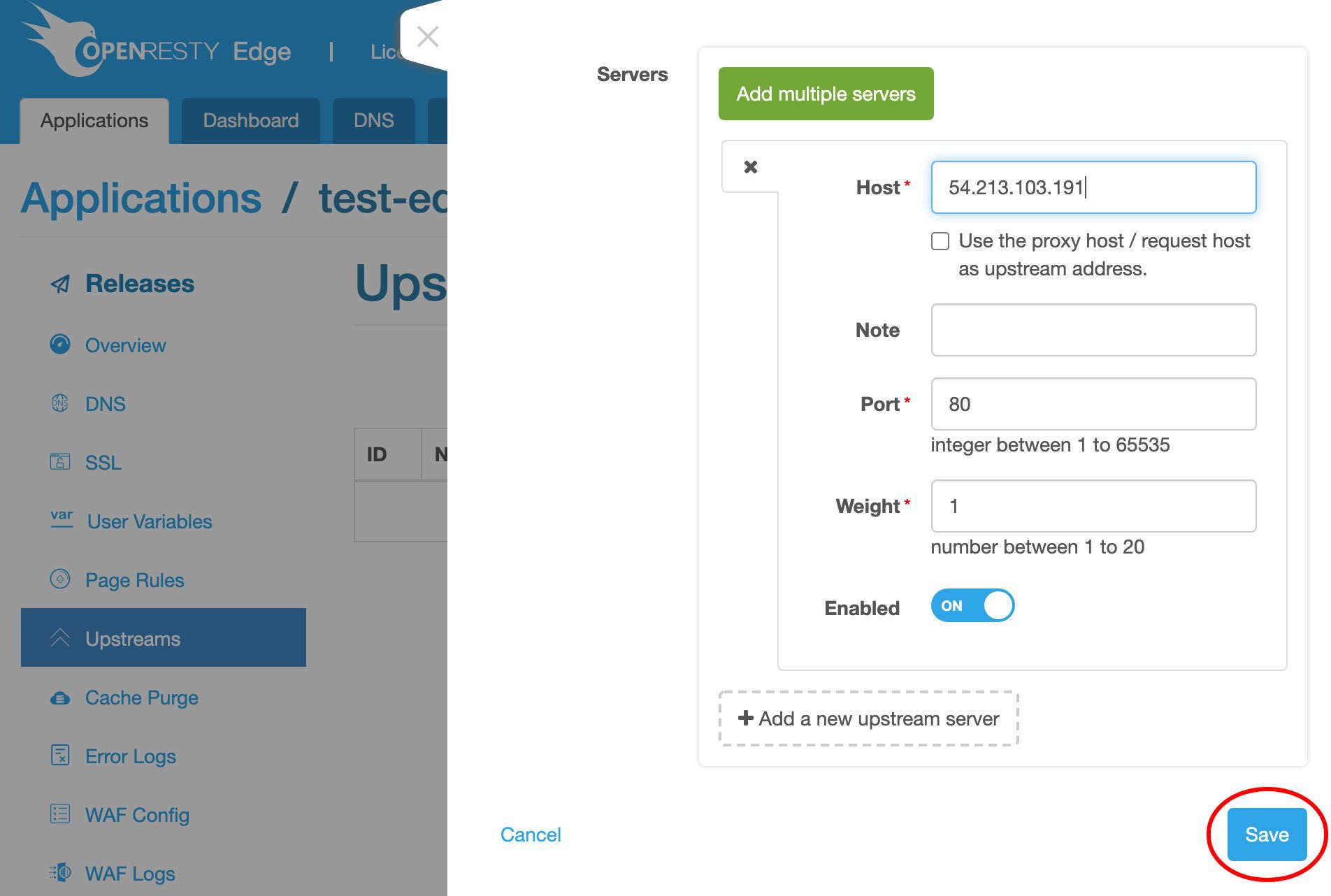

Now let's save this upstream.

We can see that the my_backend upstream has been created.

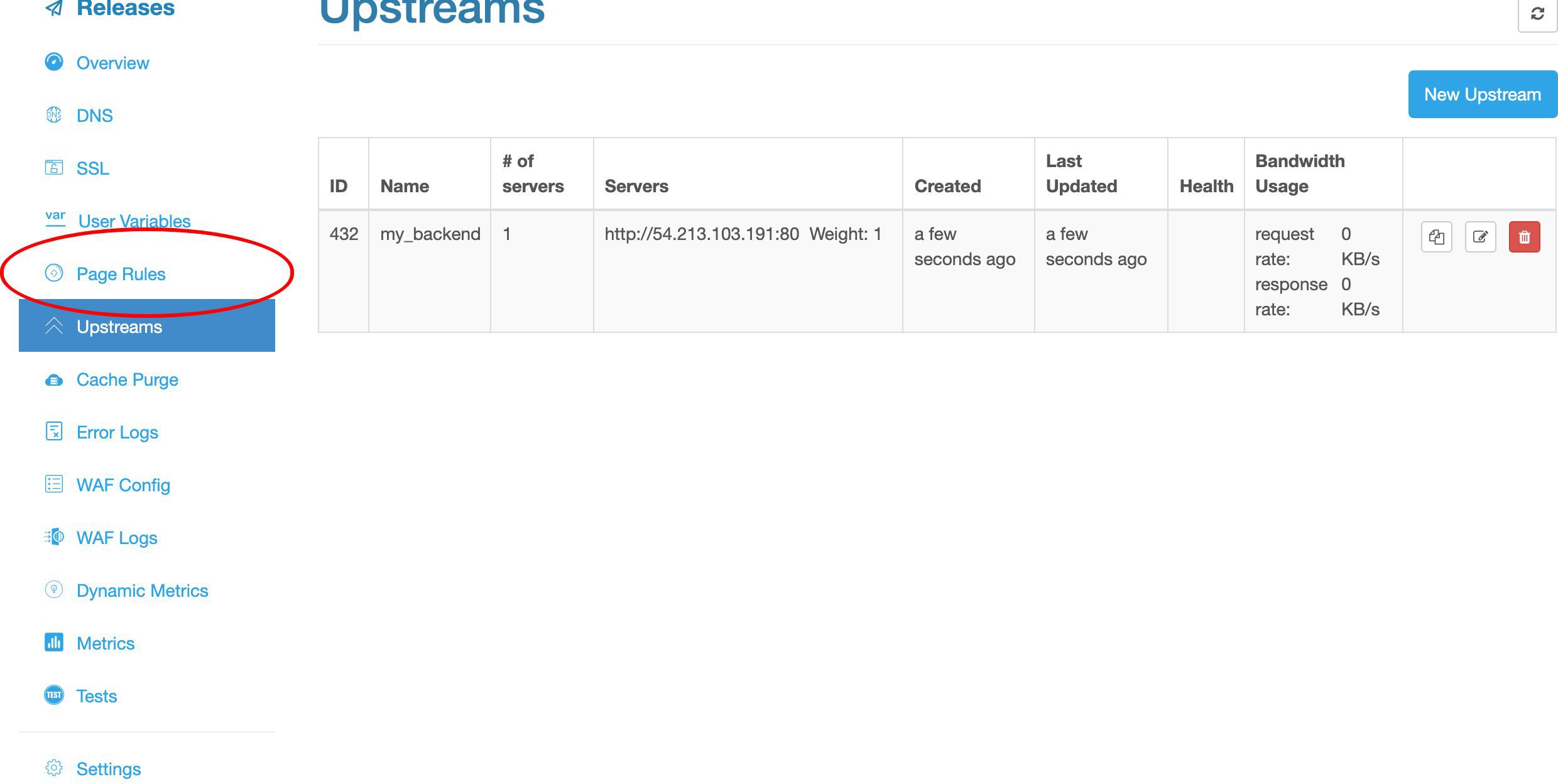

Creating a Page Rule That Uses the Upstream

Next, let's create a new page rule that actually uses this upstream. We click the Page Rules link in the left sidebar.

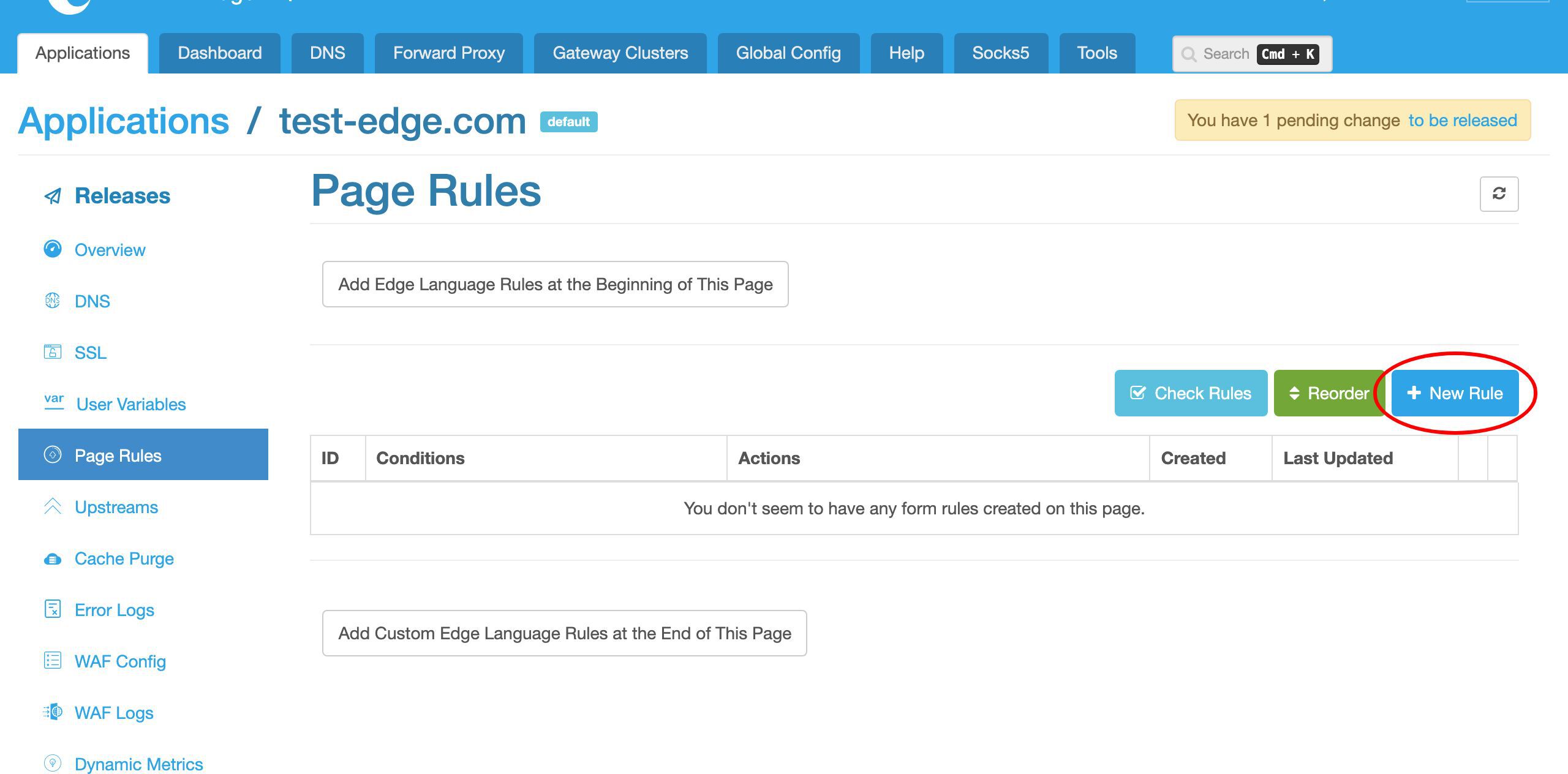

There are no page rules defined yet.

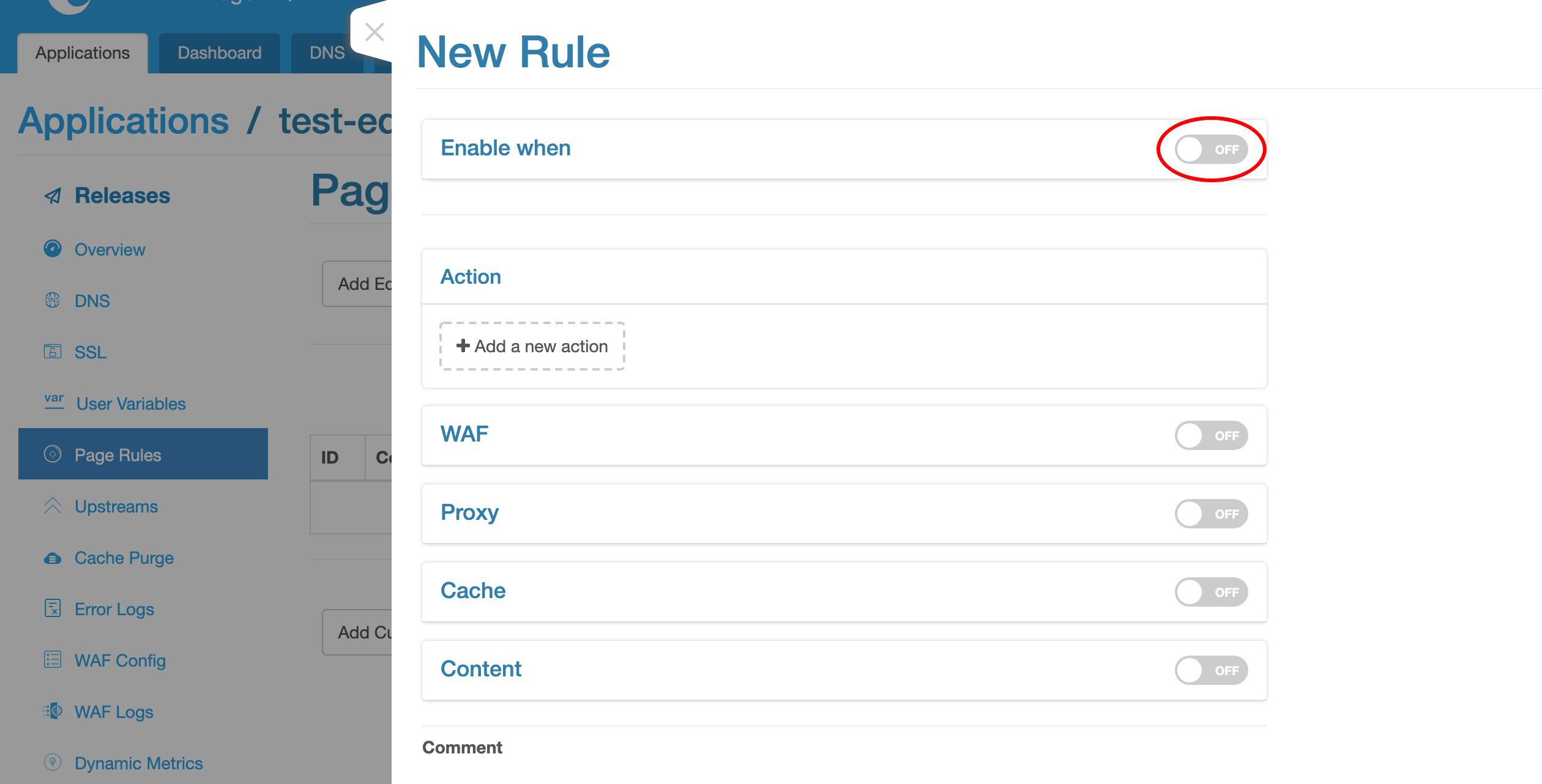

To create a new page rule, we click the "New Rule" button.

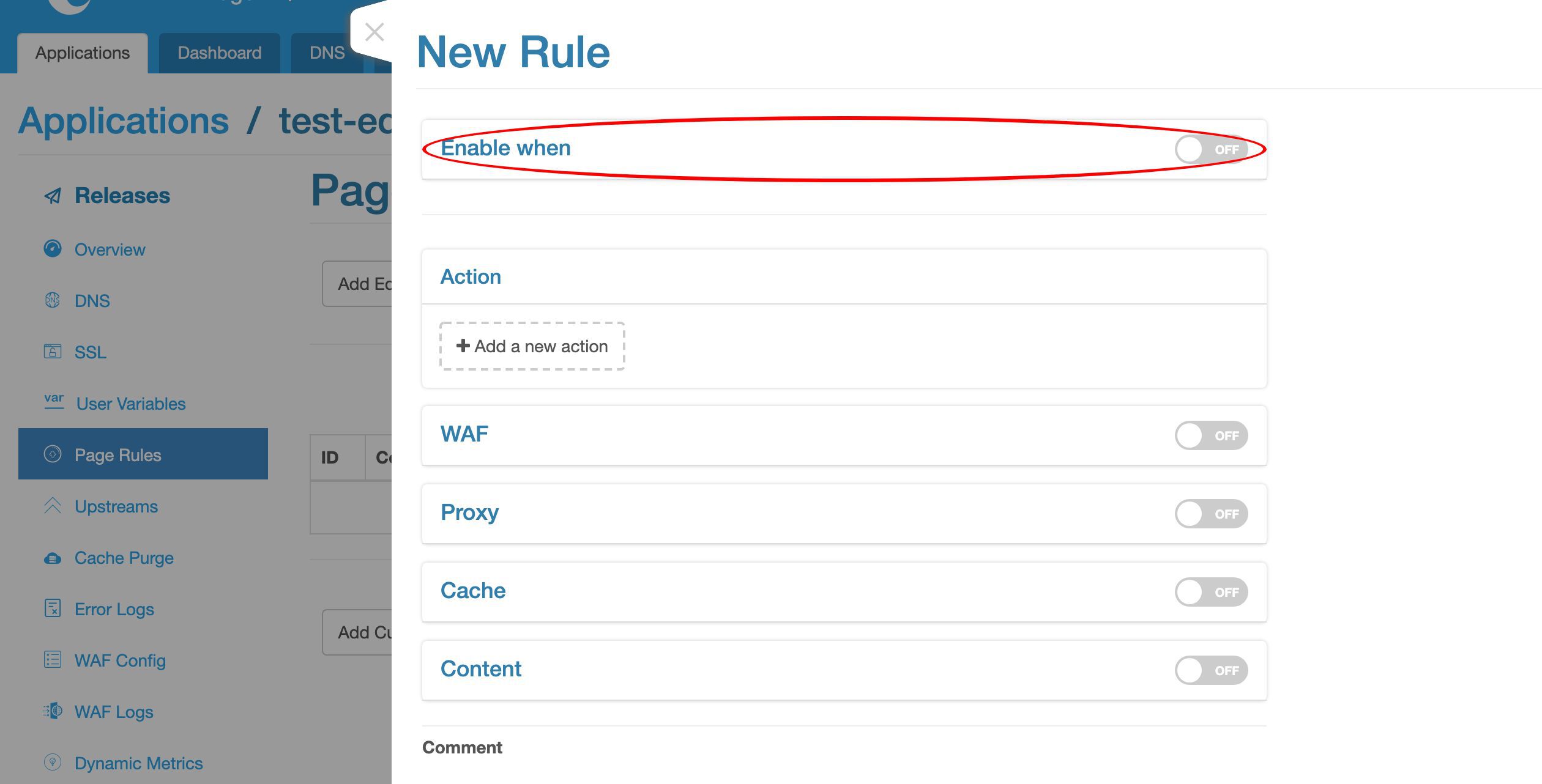

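We don't specify any condition for this page rule, so it applies to all incoming requests.

You could also add conditions to restrict the proxy page rule to specific requests only.

Here we leave conditions disabled.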
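The difference between a rule with no condition and a conditional rule can be sketched in a few lines of Python. This is a hypothetical model, not OpenResty Edge's rule engine: a `PageRule` whose `condition` is `None` matches every request, and `pick_upstream` assumes the first matching rule decides the upstream. The `api_backend` upstream and the `/api/` condition are made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Request:
    host: str
    path: str


@dataclass
class PageRule:
    # Hypothetical model: condition=None means "no condition", i.e. match all requests.
    upstream: str
    condition: Optional[Callable[[Request], bool]] = None

    def matches(self, req: Request) -> bool:
        return self.condition is None or self.condition(req)


def pick_upstream(rules, req):
    """Return the upstream of the first matching rule (assumed order), or None."""
    for rule in rules:
        if rule.matches(req):
            return rule.upstream
    return None


# The rule from this walkthrough: no condition, proxy everything to my_backend.
catch_all = PageRule(upstream="my_backend")
# A hypothetical conditional rule limited to paths under /api/.
api_only = PageRule(upstream="api_backend",
                    condition=lambda r: r.path.startswith("/api/"))

print(pick_upstream([catch_all], Request("test-edge.com", "/")))
print(pick_upstream([api_only, catch_all], Request("test-edge.com", "/api/v1")))
```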

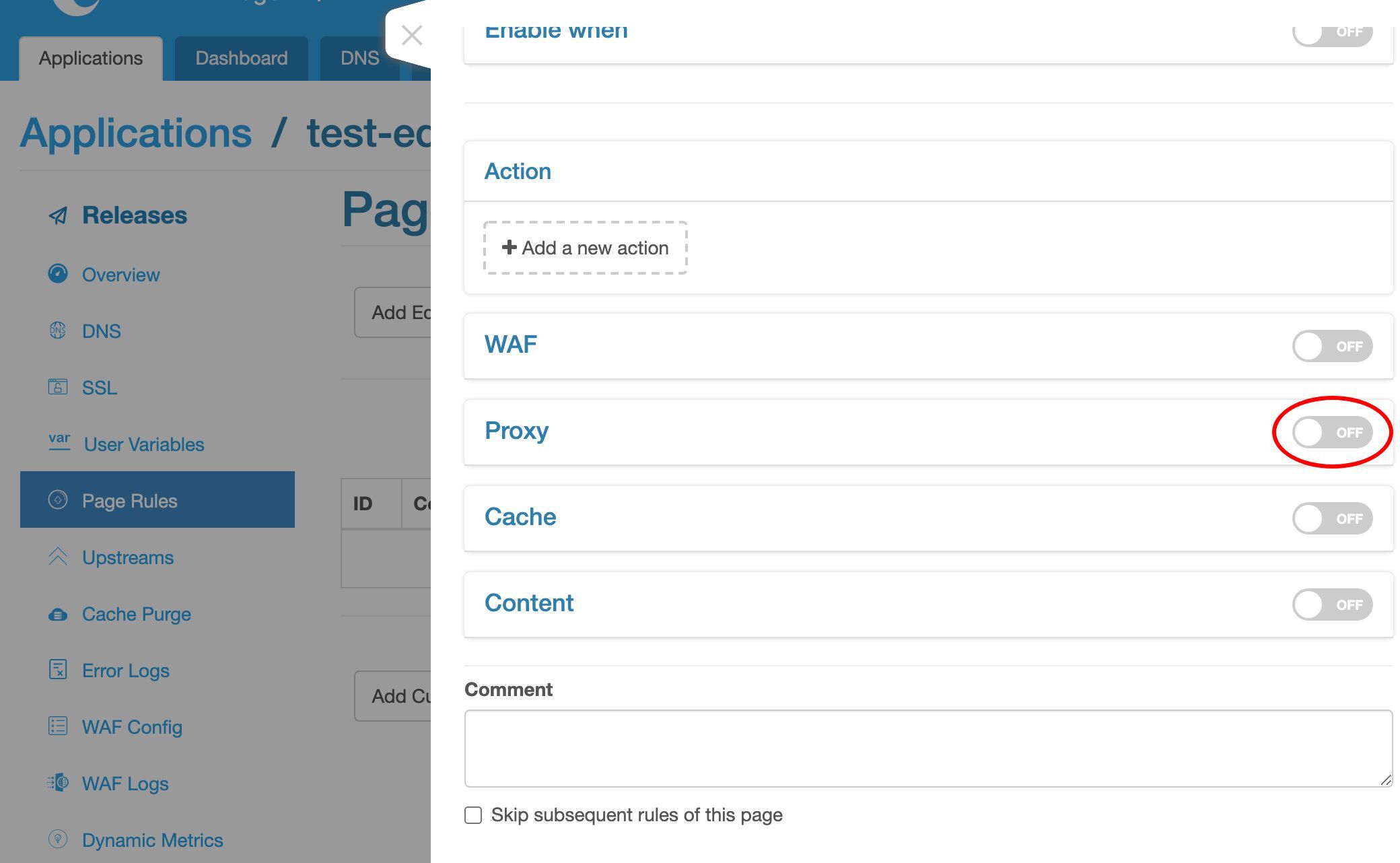

Now we add a proxy target.

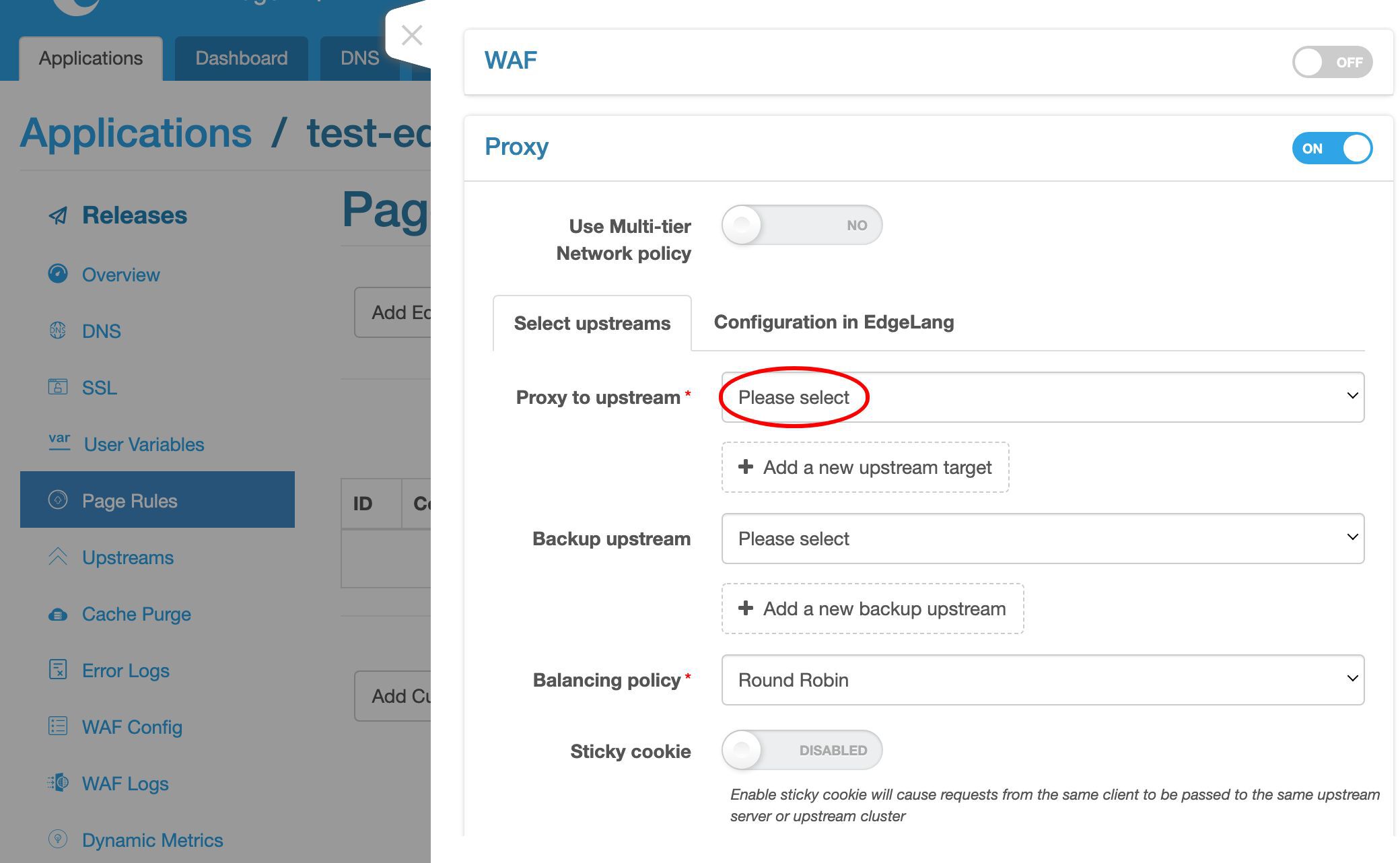

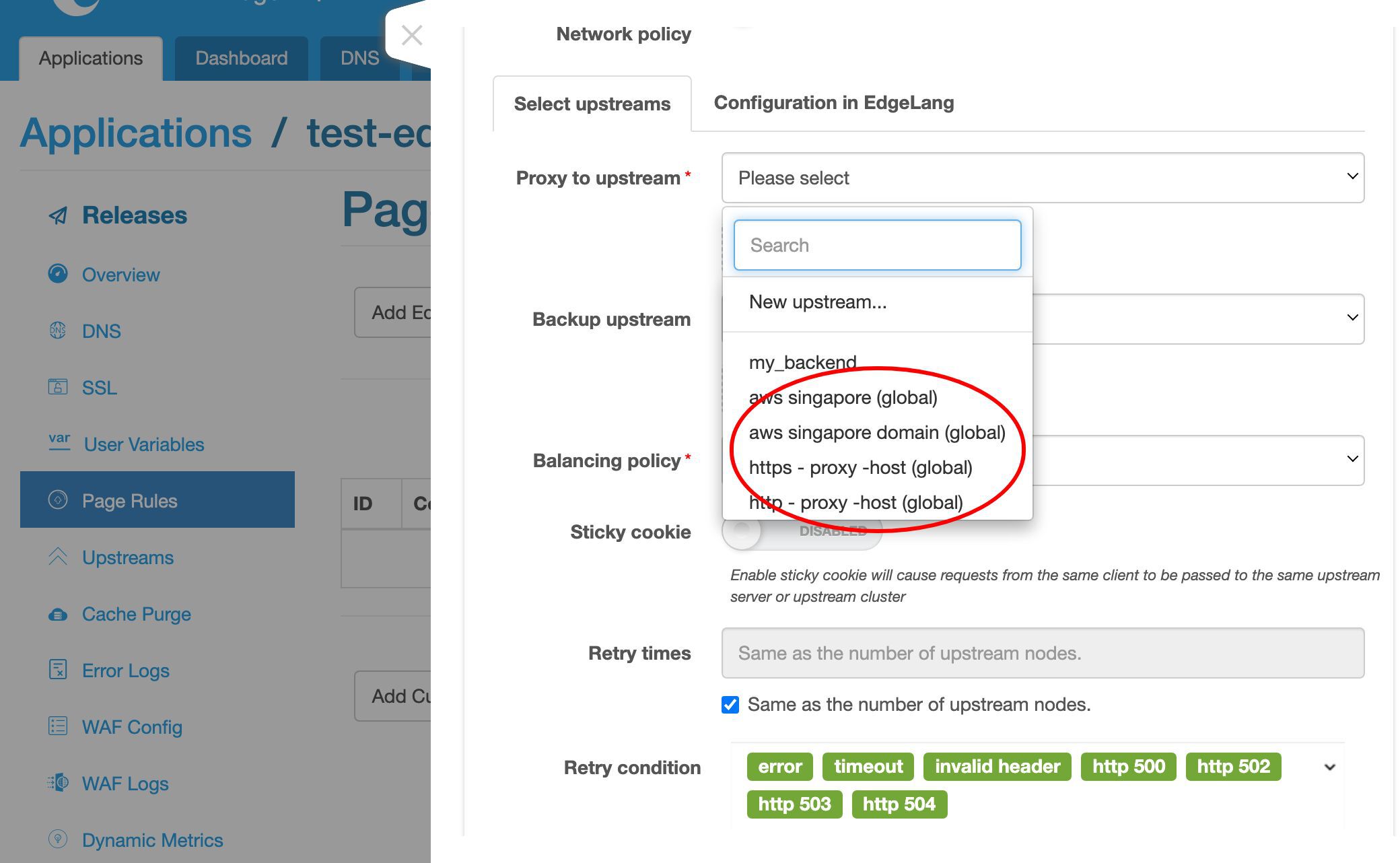

We select an upstream.

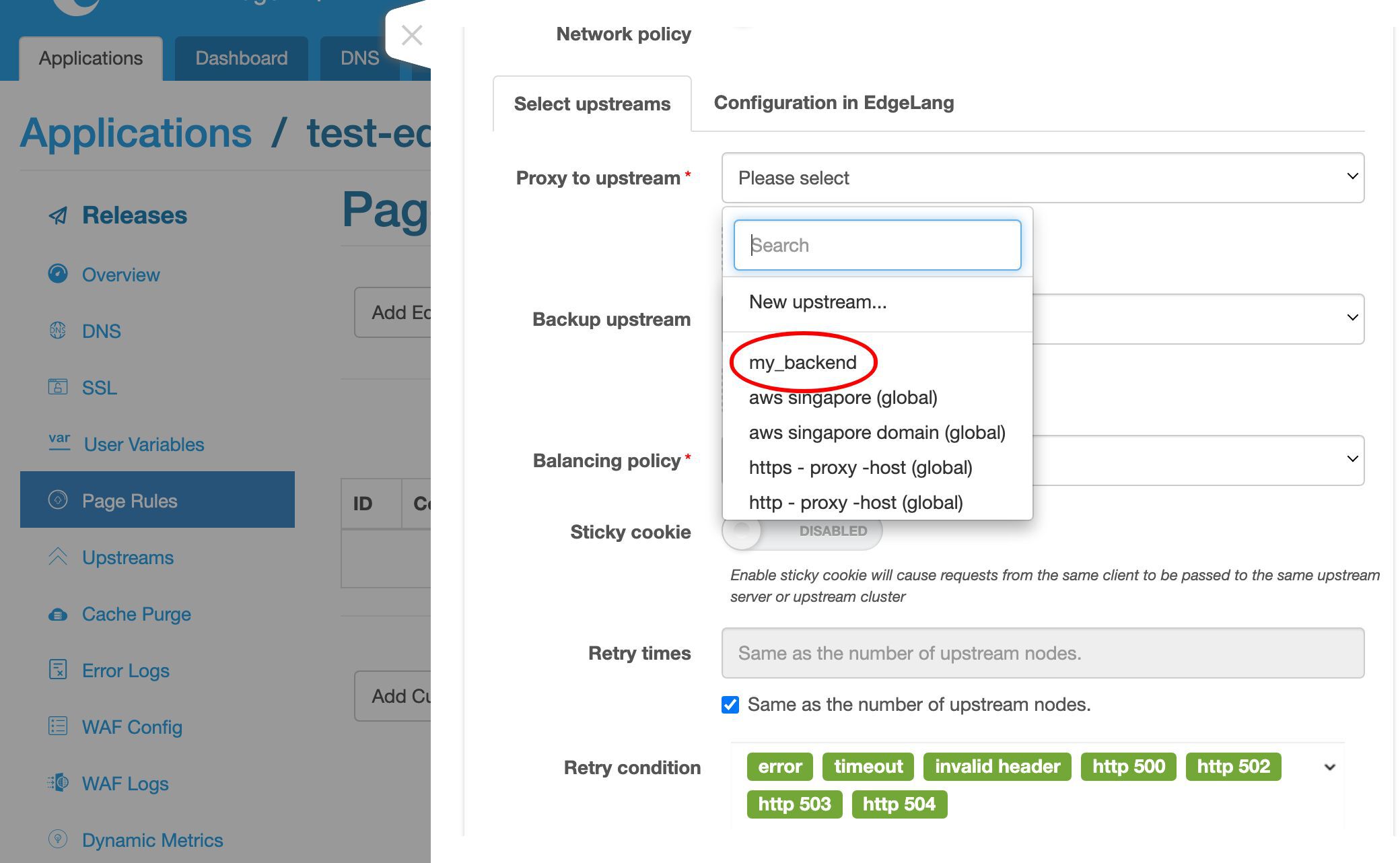

Here is the upstream we just created.

There are also some predefined global upstreams, which can be reused by all applications, including this one.

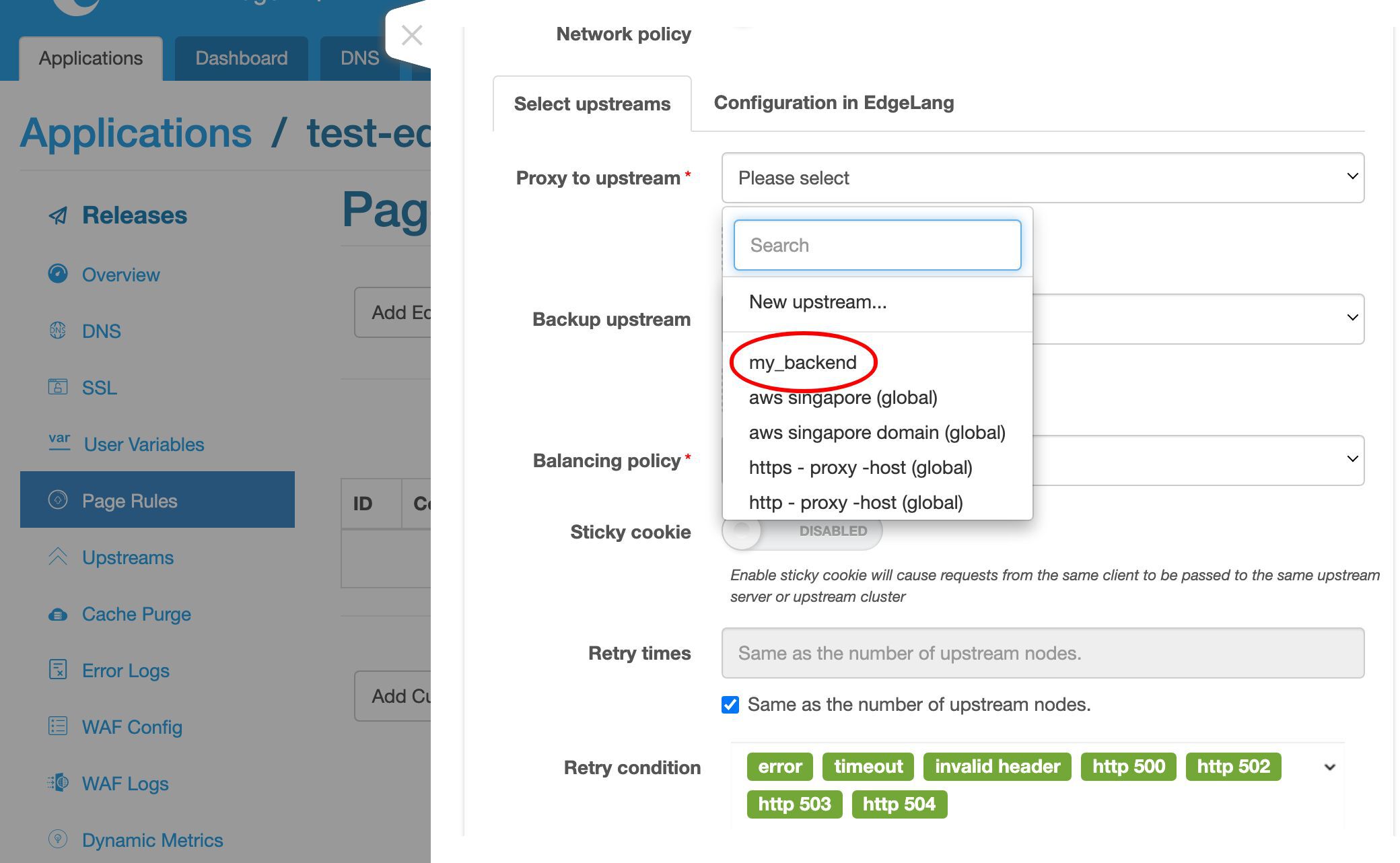

We select the my_backend upstream.

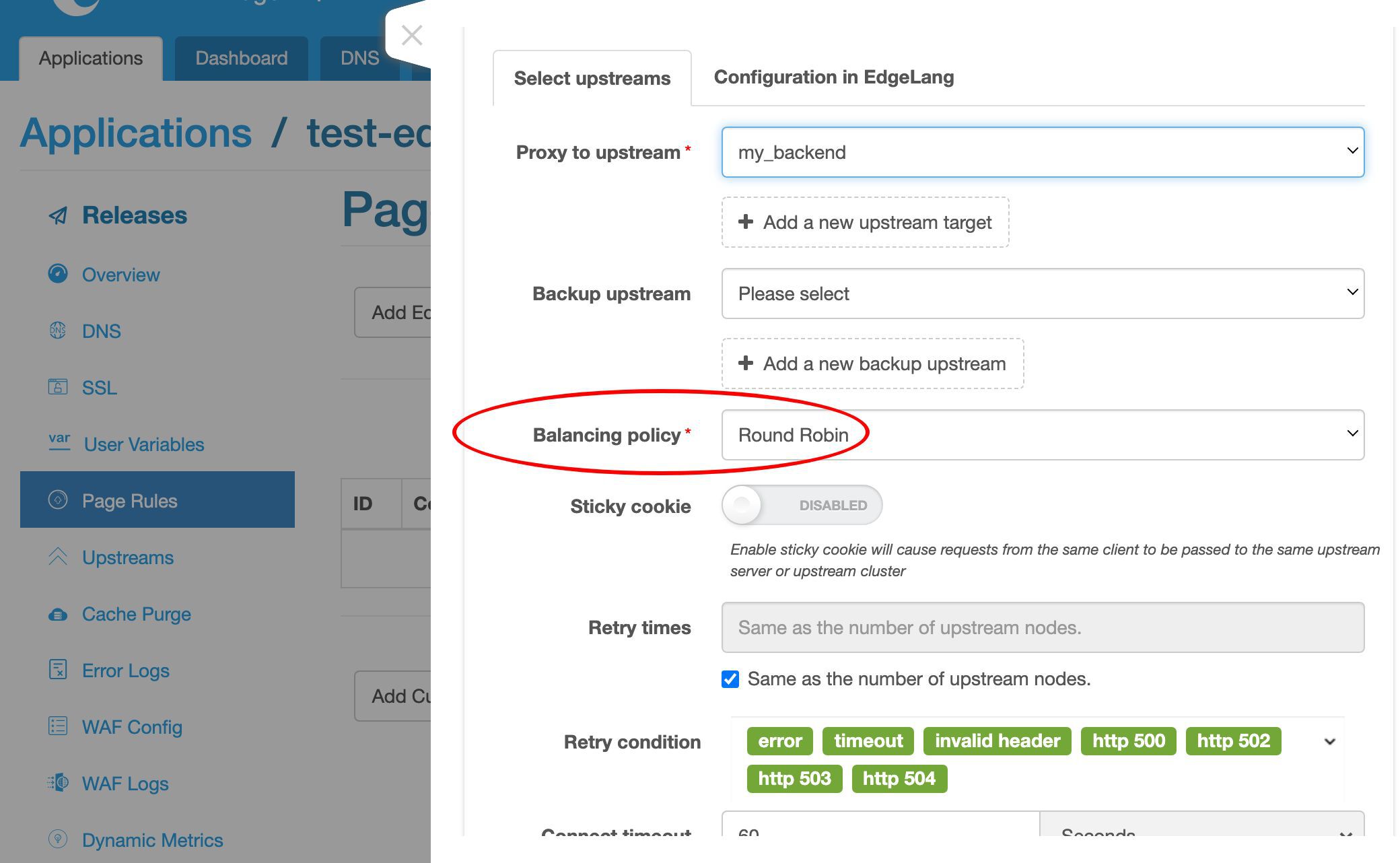

Since the upstream has only one server, the load-balancing strategy doesn't matter here.

We keep the default round-robin strategy.
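Round-robin simply hands out the upstream's servers in turn. The Python sketch below illustrates the general technique, not OpenResty Edge's internal implementation; the `RoundRobin` class is hypothetical, and the second server address (192.0.2.10, a documentation-only IP) is invented to show the alternation once more servers are added.

```python
import itertools


class RoundRobin:
    """Cycle through an upstream's servers in order (plain round-robin)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("an upstream needs at least one server")
        self._cycle = itertools.cycle(servers)

    def next_server(self):
        return next(self._cycle)


# my_backend currently has a single server, so every pick returns it.
my_backend = RoundRobin([("54.213.103.191", 80)])

# A hypothetical second server shows the alternation.
two = RoundRobin([("54.213.103.191", 80), ("192.0.2.10", 80)])
print([two.next_server()[0] for _ in range(4)])
# ['54.213.103.191', '192.0.2.10', '54.213.103.191', '192.0.2.10']
```

With one server, any strategy degenerates to "always pick that server", which is why the choice doesn't matter in this walkthrough.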

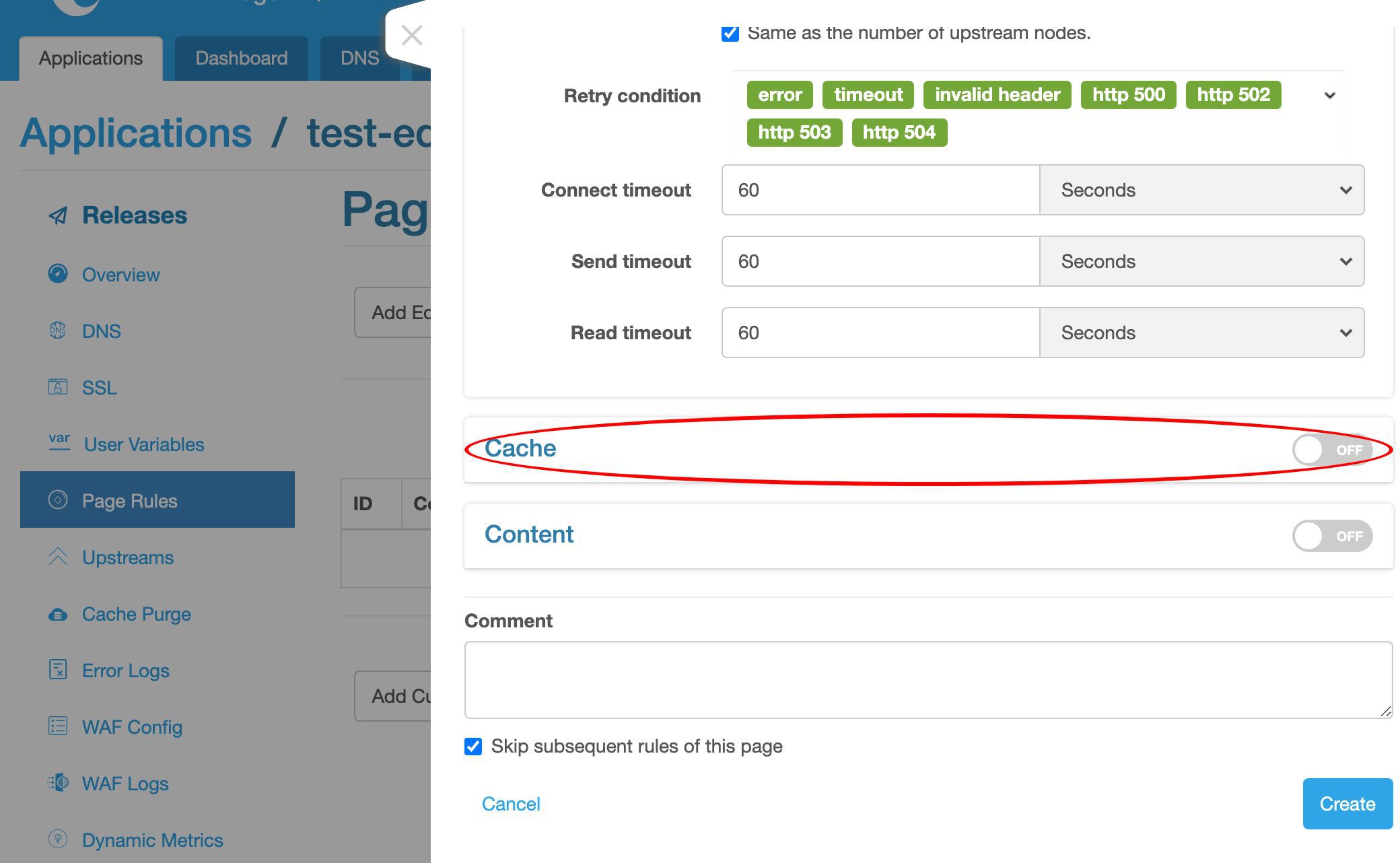

You may also want to enable response caching; I'll cover that in another video.

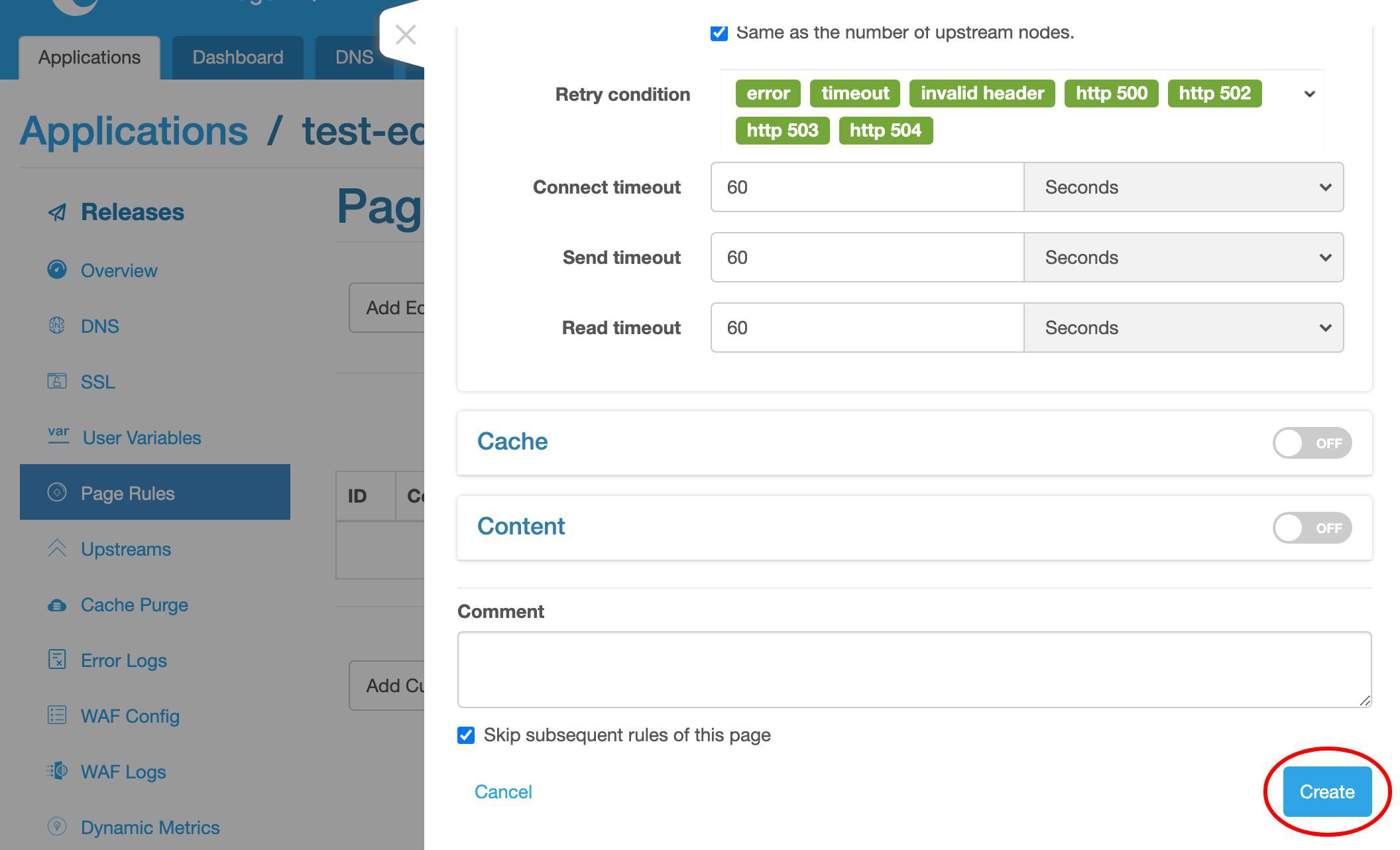

Finally, we create this rule.

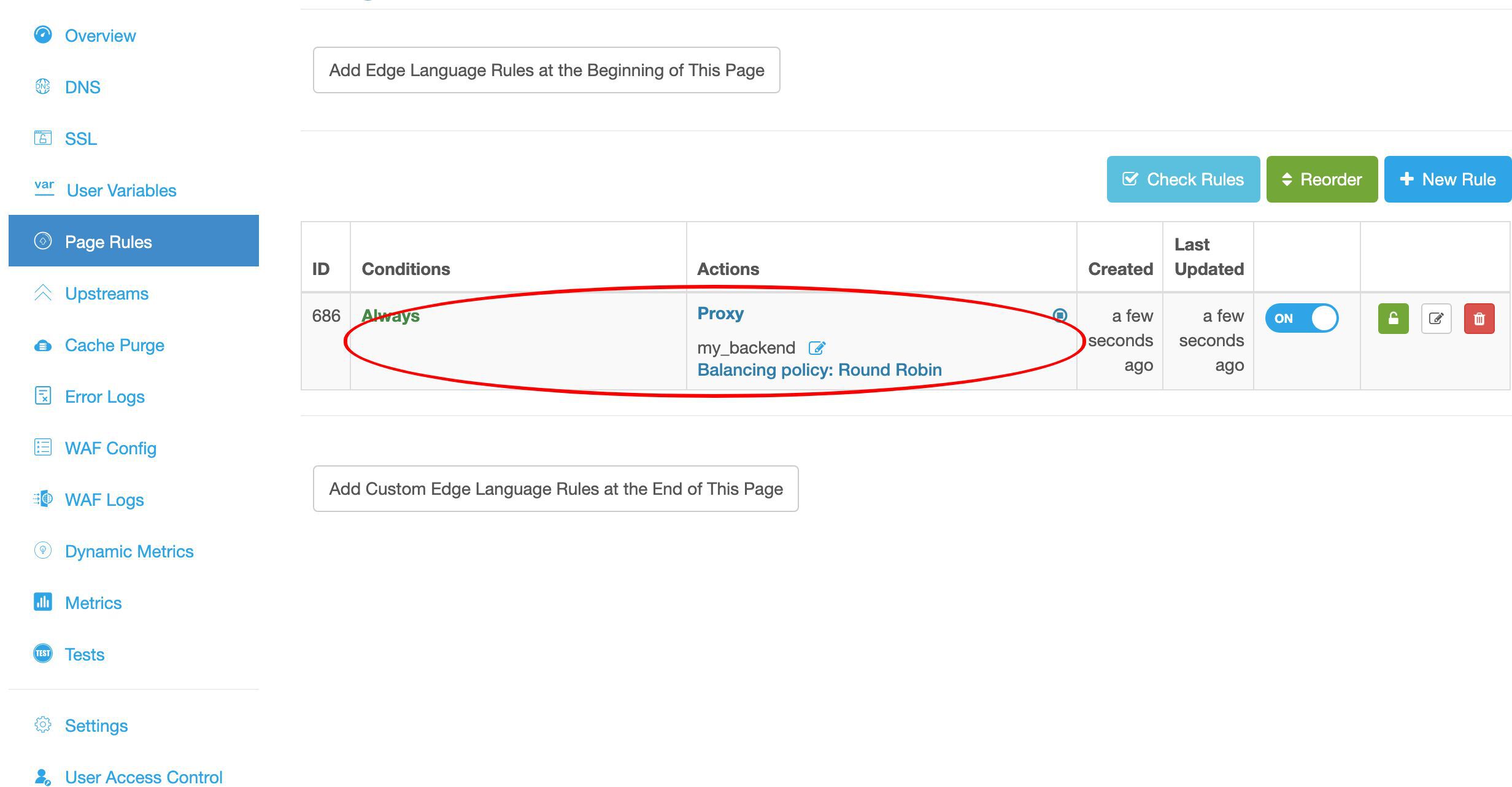

We can see the proxy page rule in the page rule list.

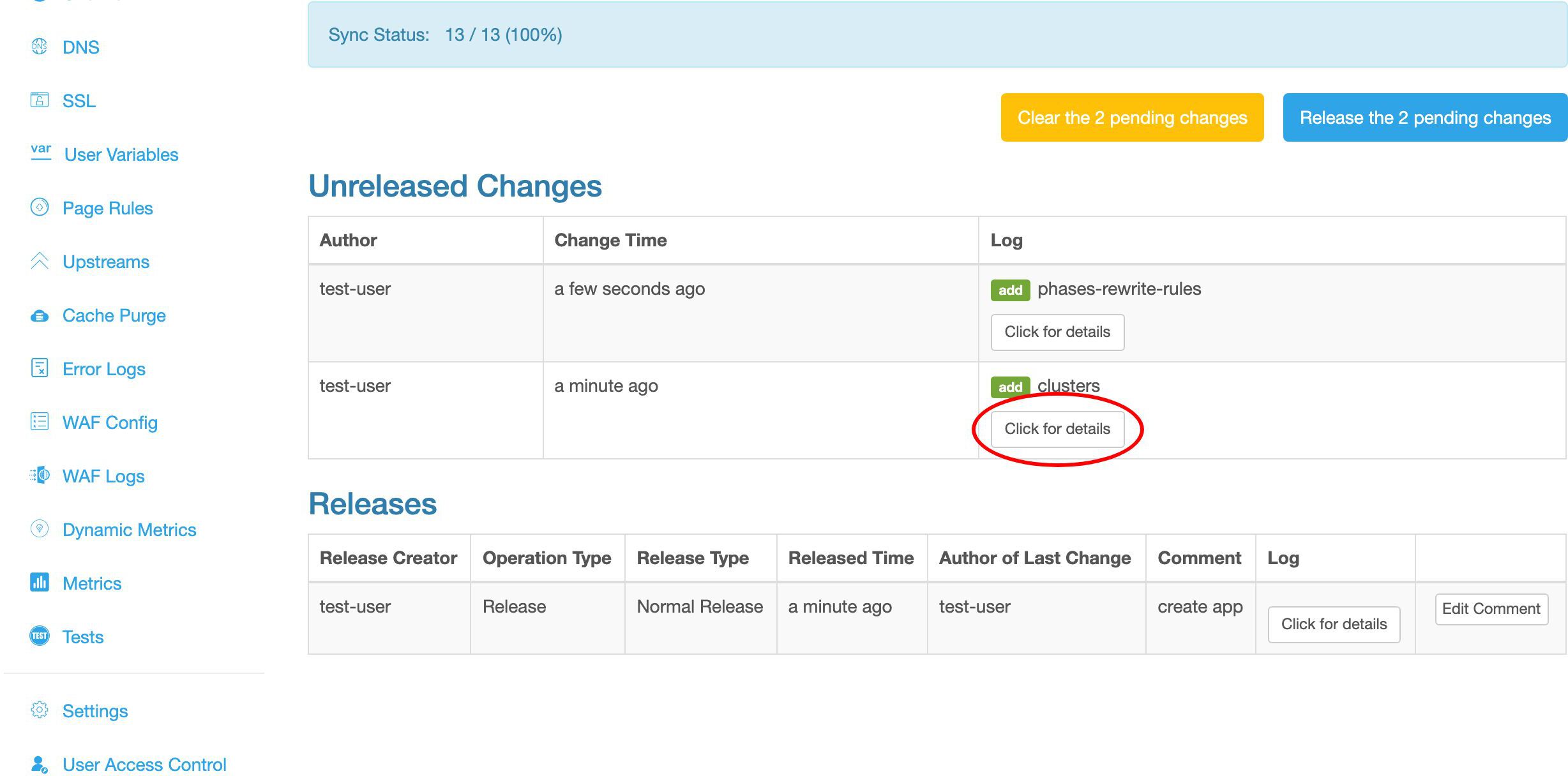

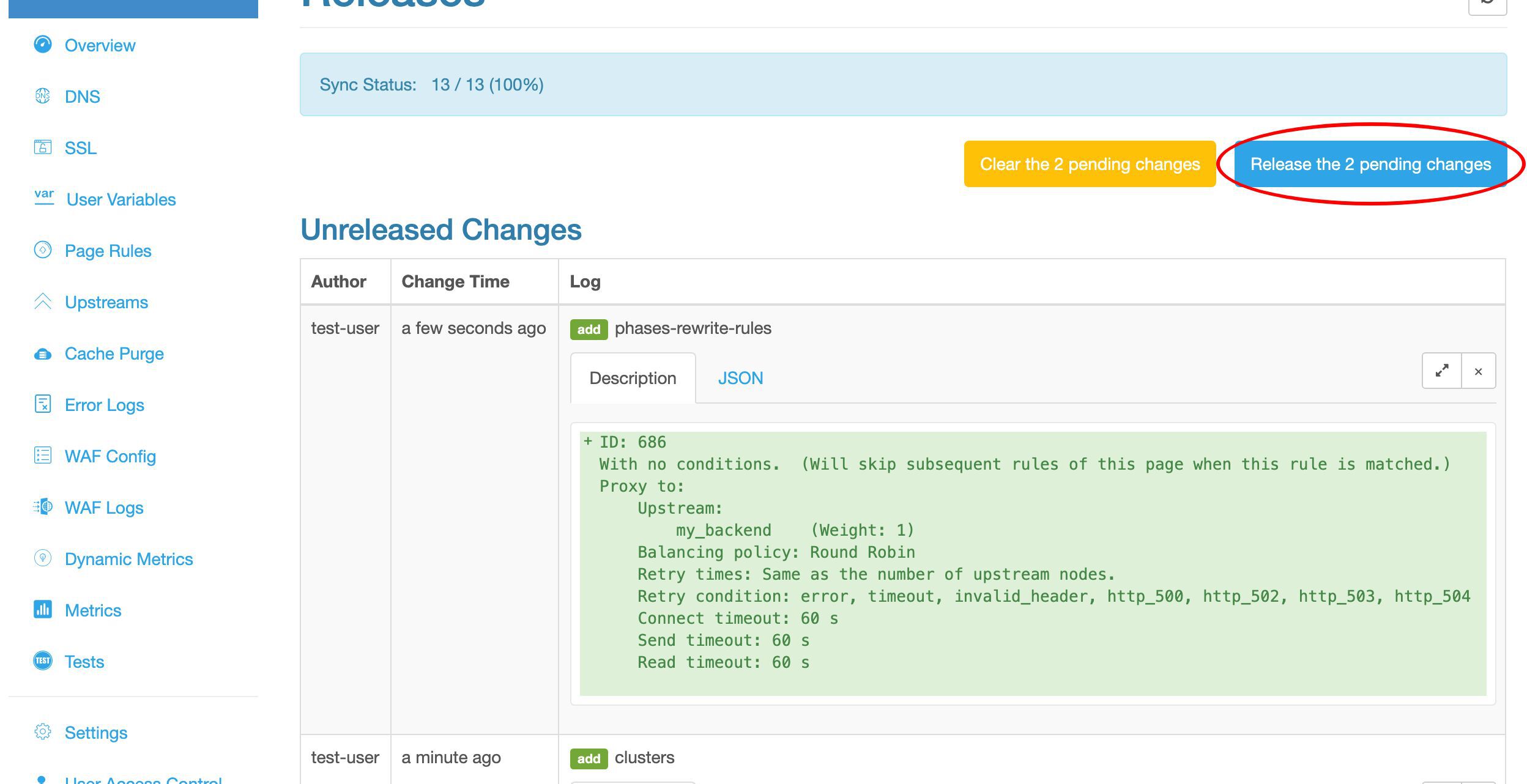

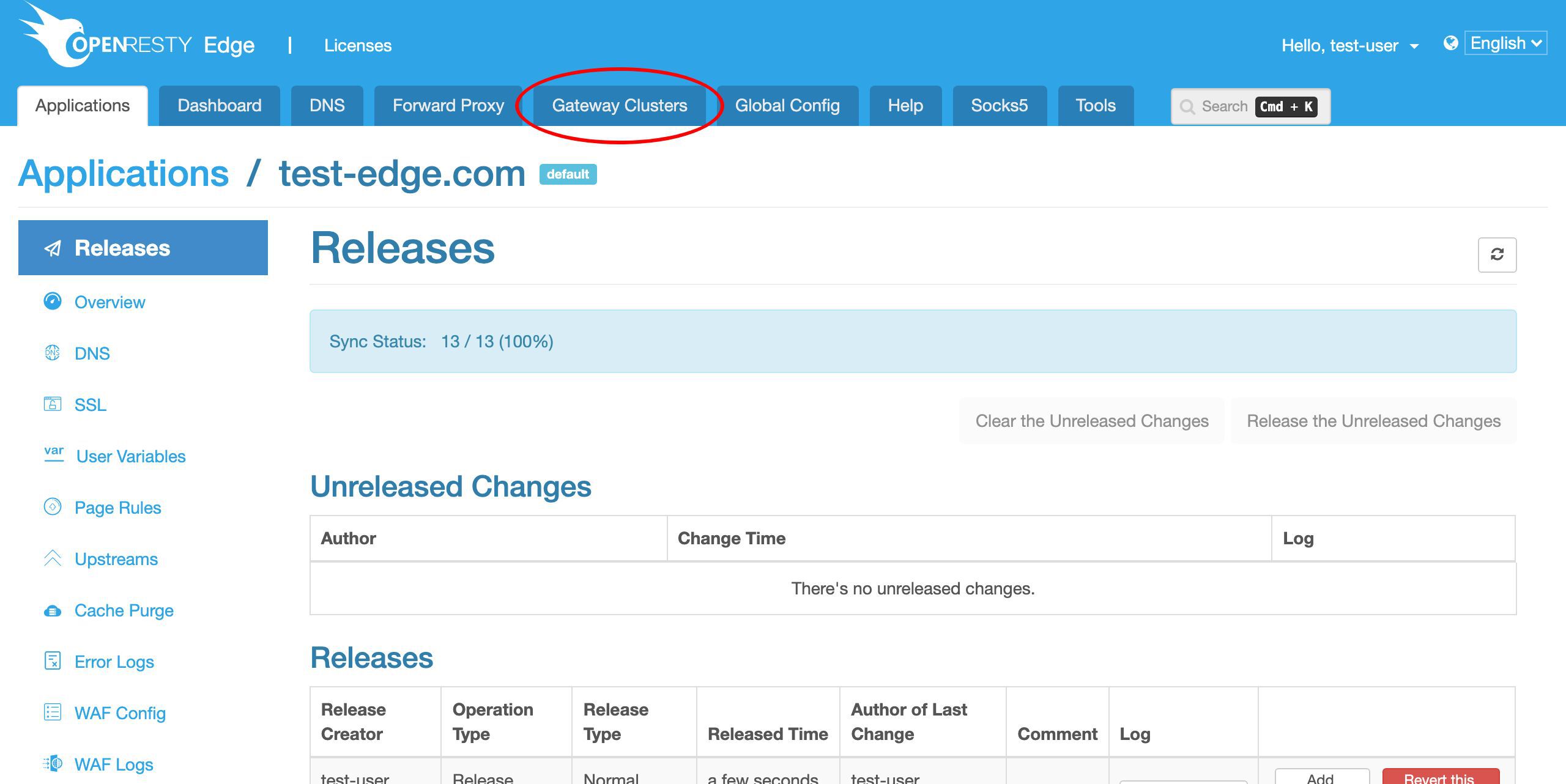

Releasing the Configuration

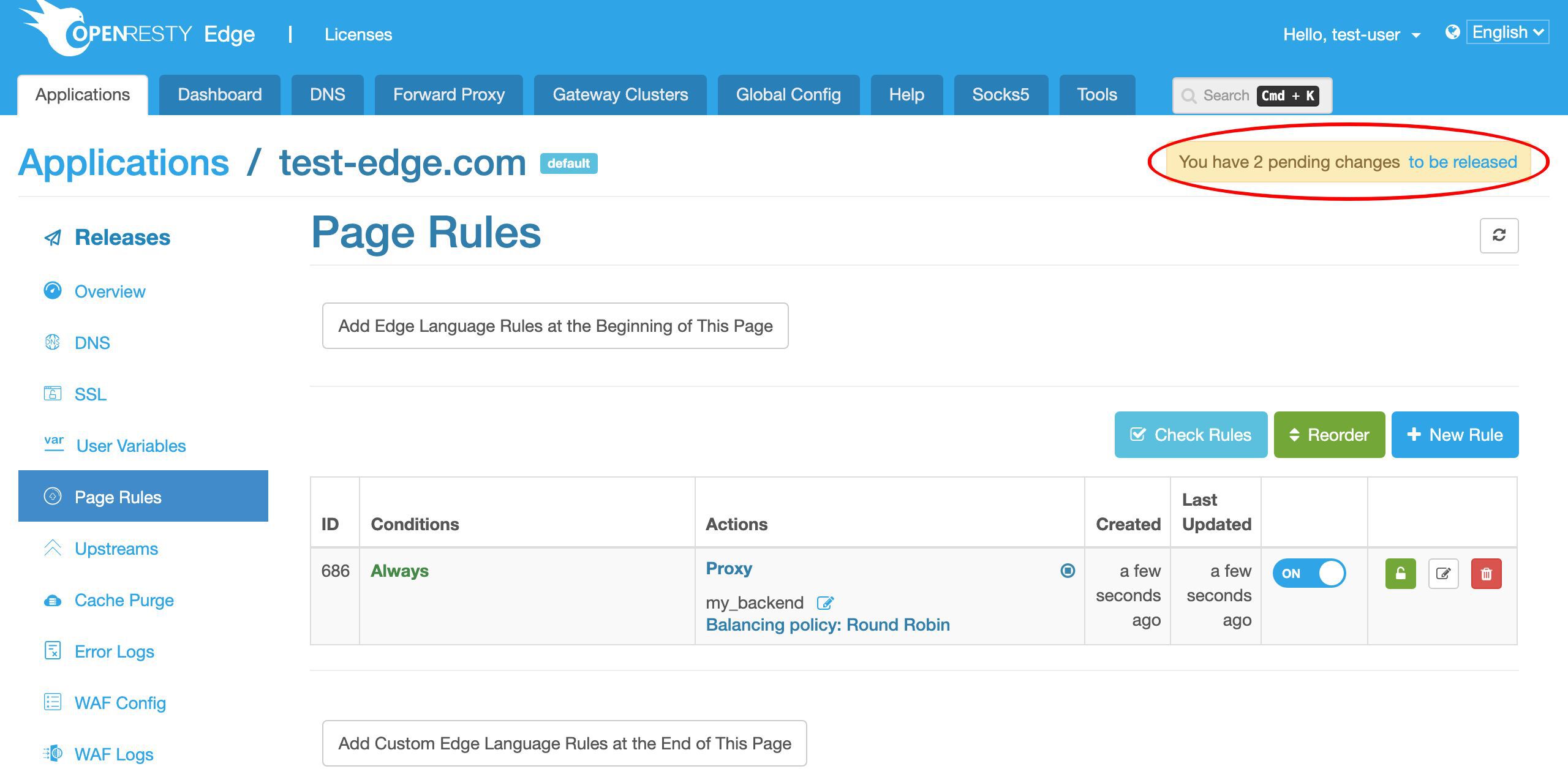

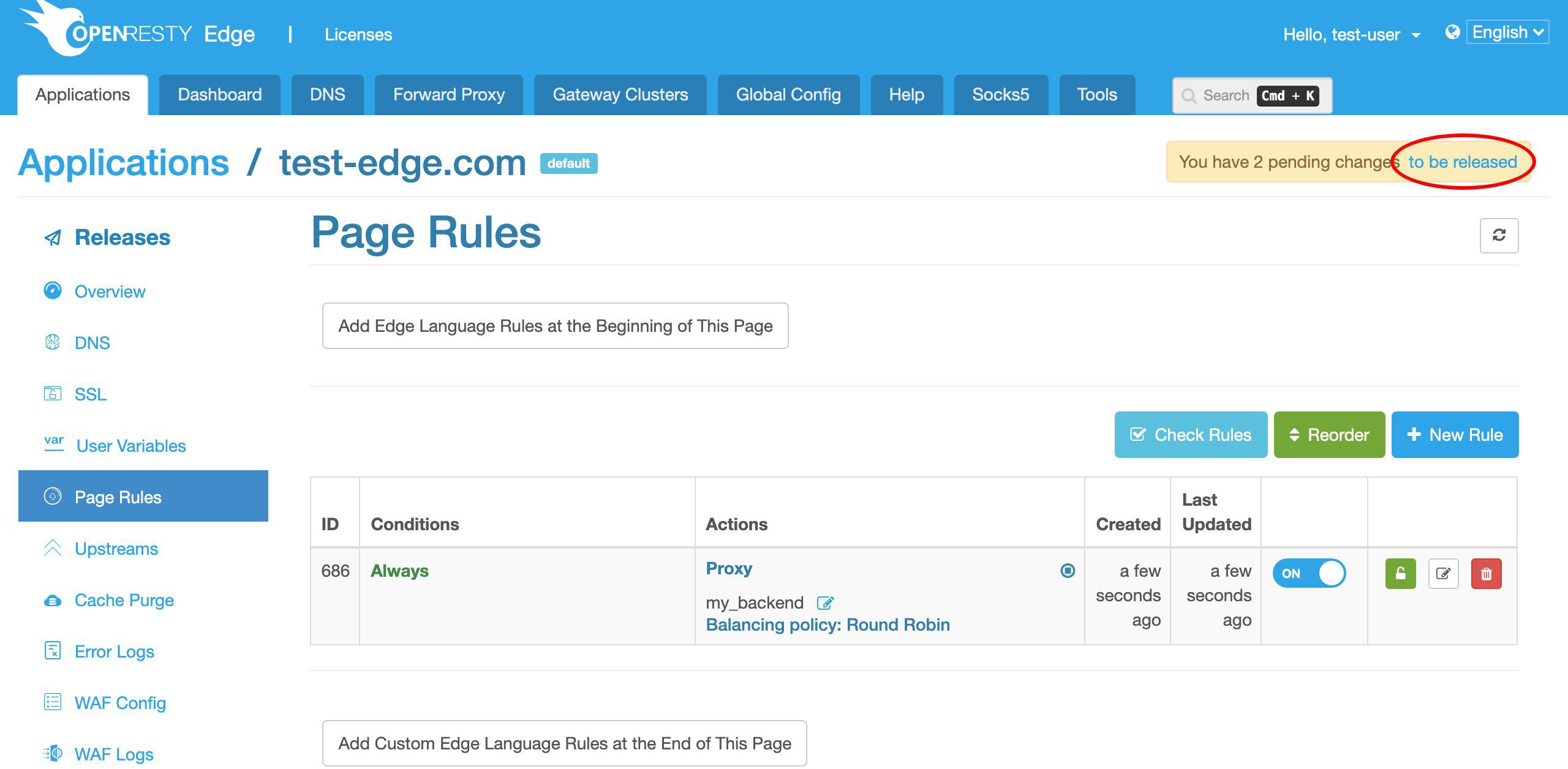

The final step is to release the new configuration. This pushes all pending changes to all gateway servers.

We can start the release by clicking the link here.

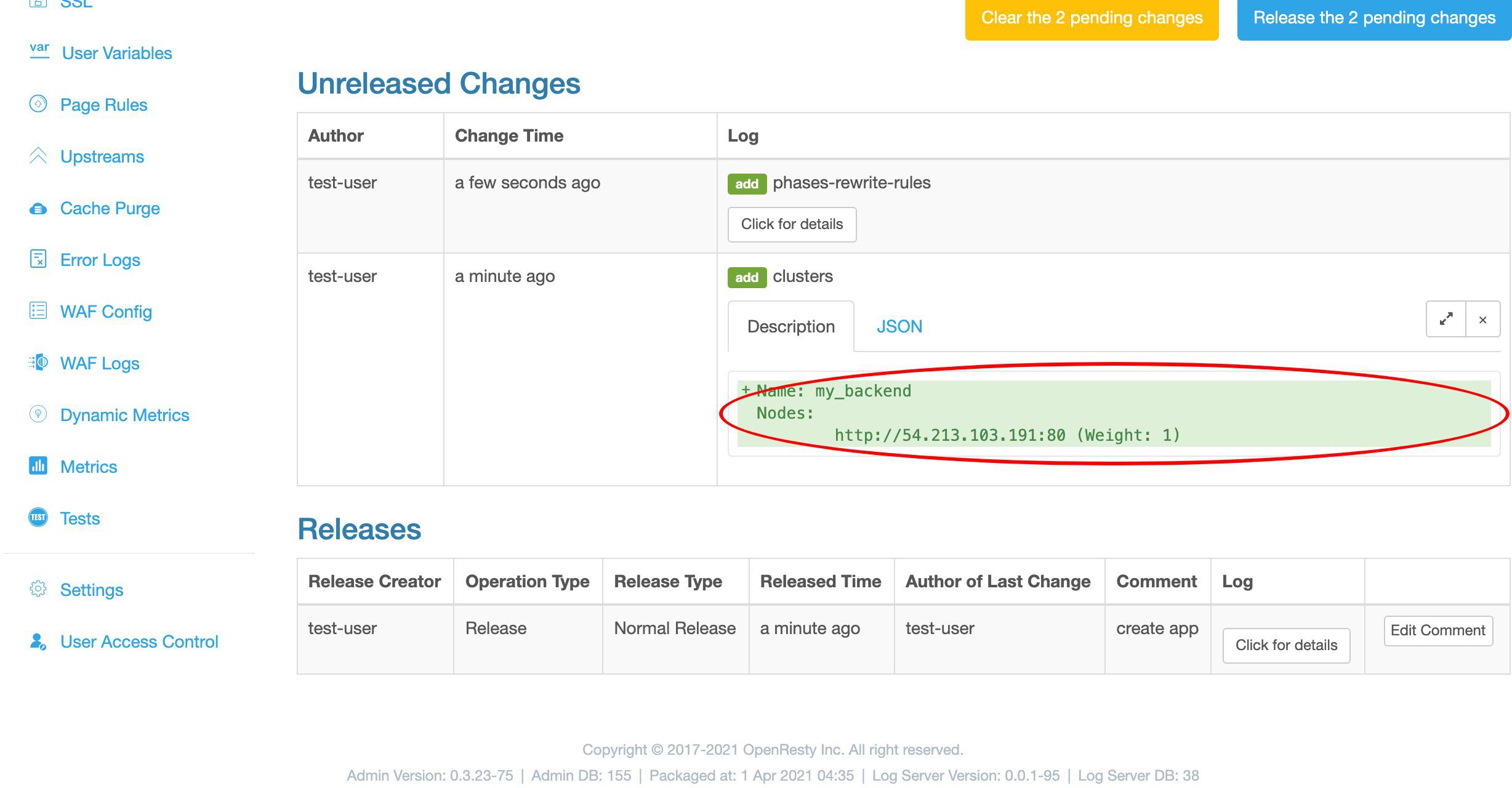

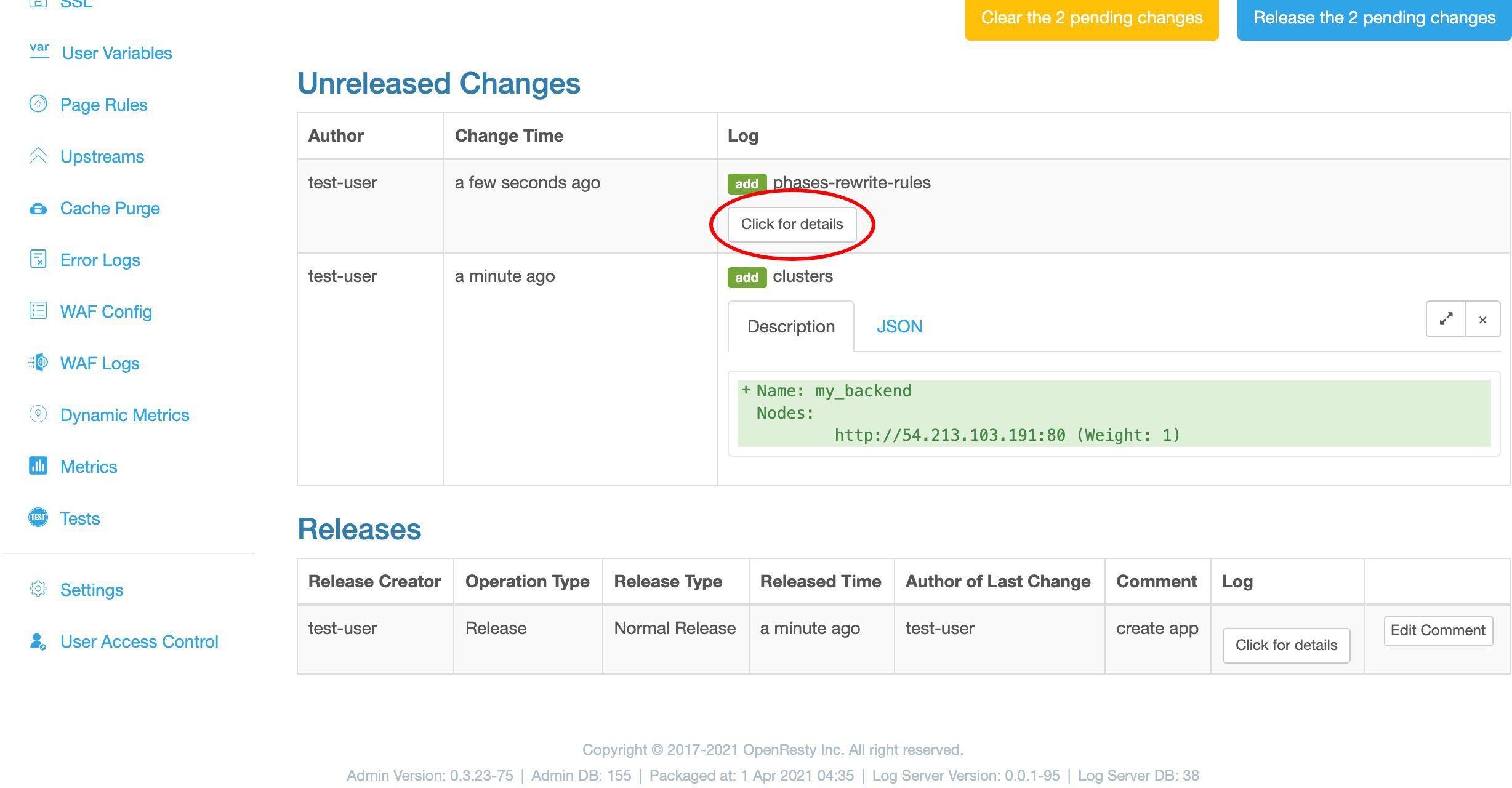

Before pushing, we get a chance to review the changes. This is the first change.

It's the my_backend upstream we added.

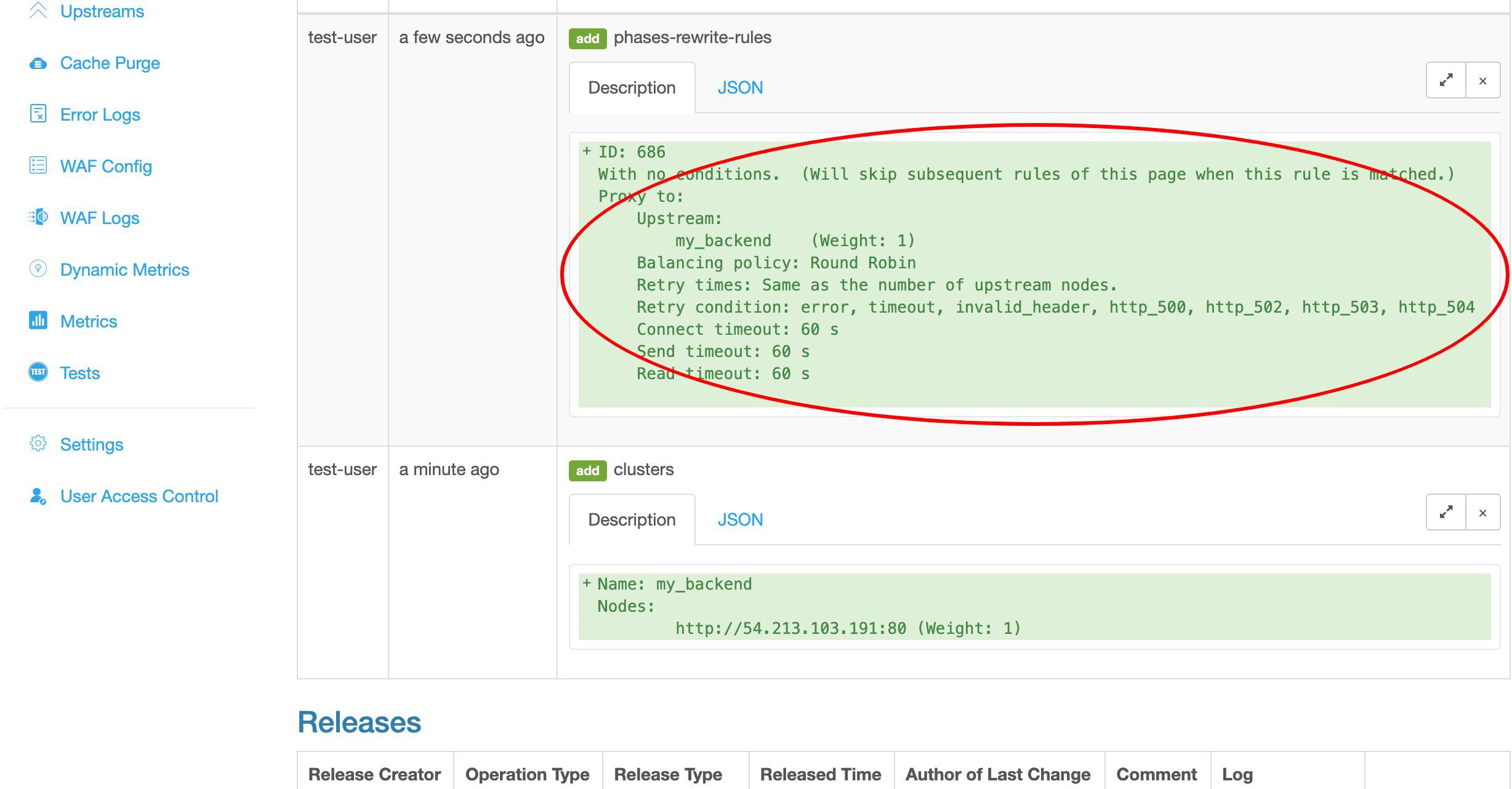

This is the second change.

It is indeed our proxy page rule.

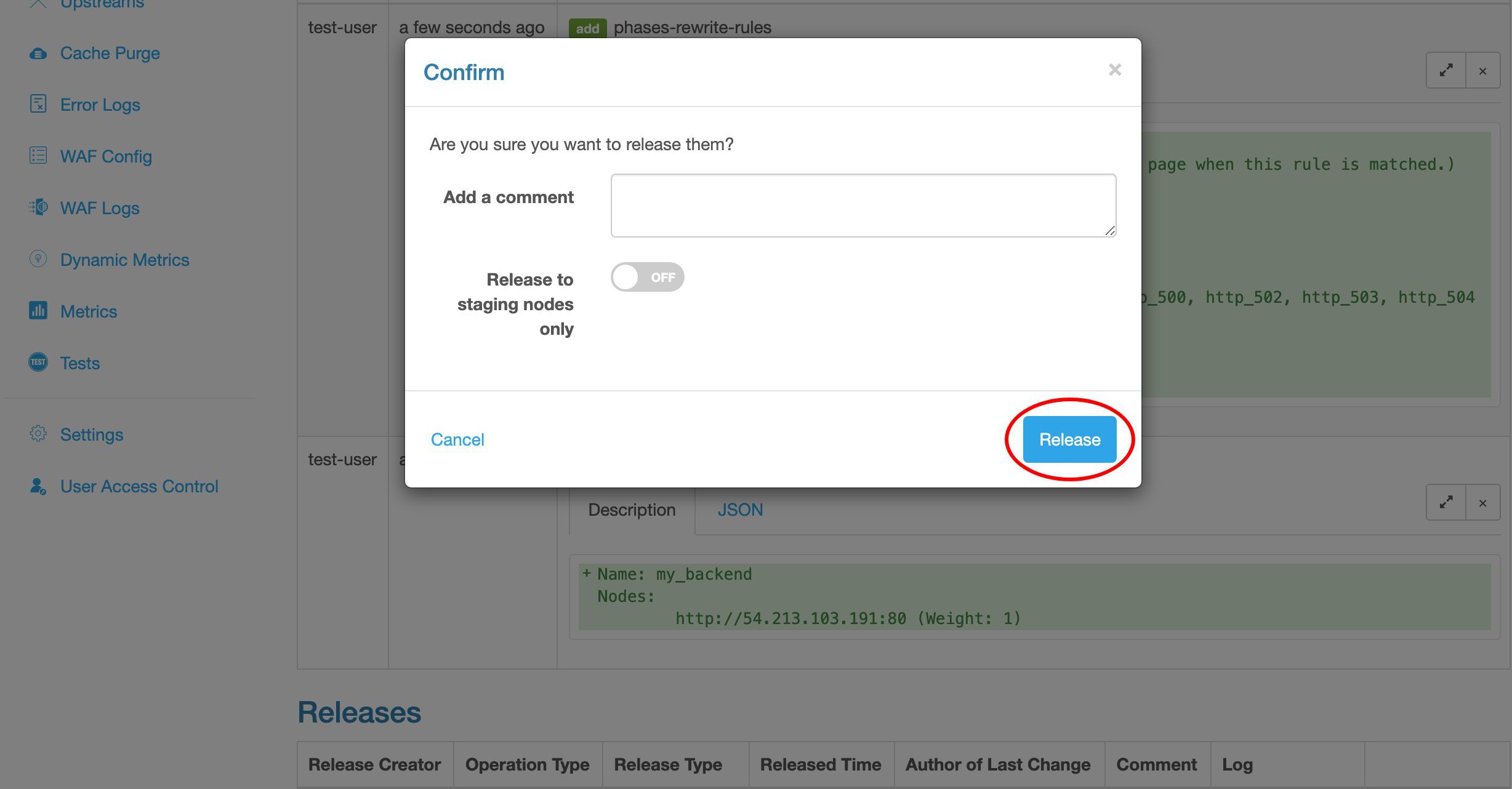

Now let's release the configuration update to all gateway servers.

Submit!

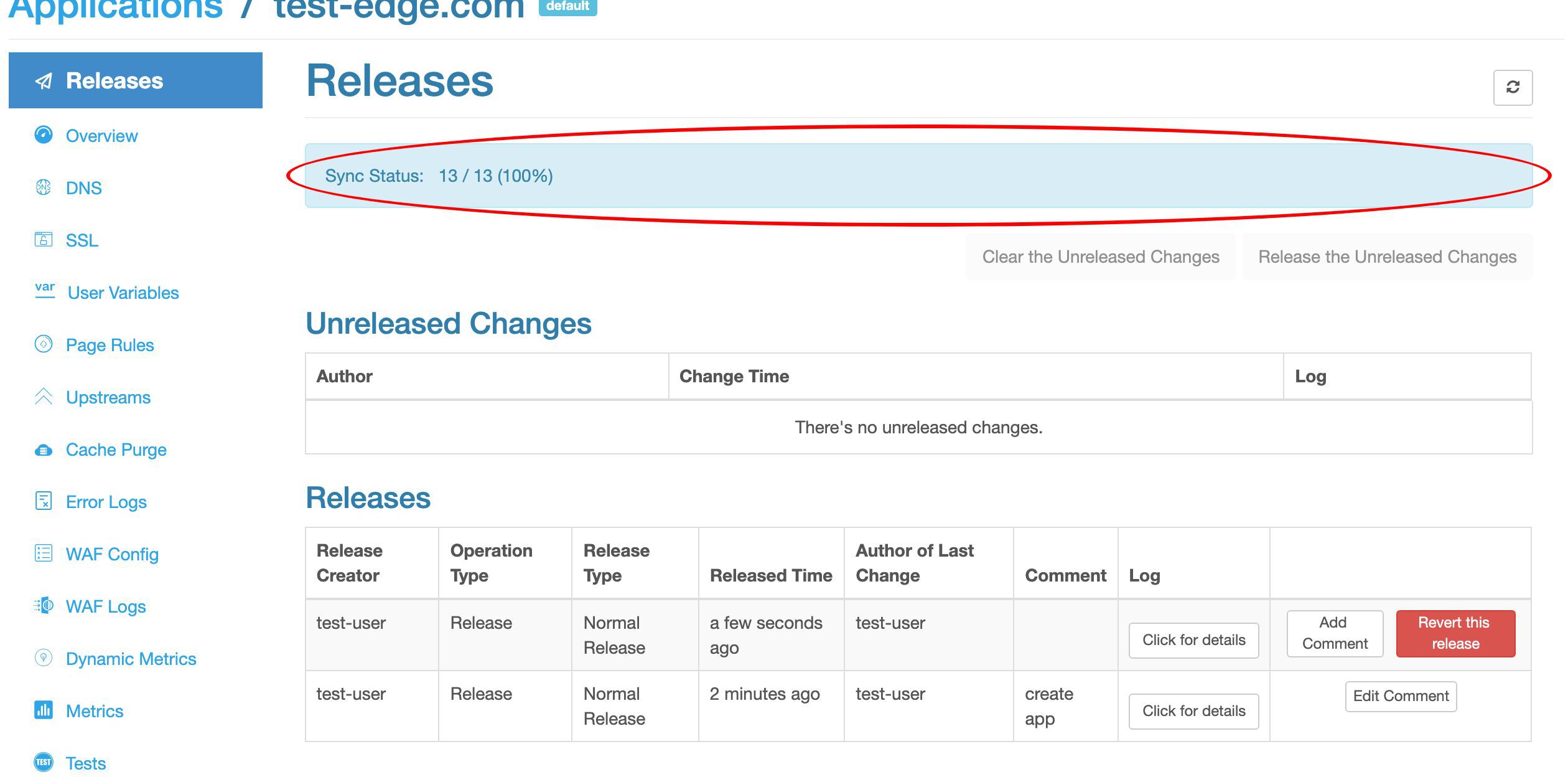

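We can watch the configuration sync progress in real time as the update is pushed across the entire gateway network.

The sync is complete. We can see that this sample deployment has 13 servers in its gateway network.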

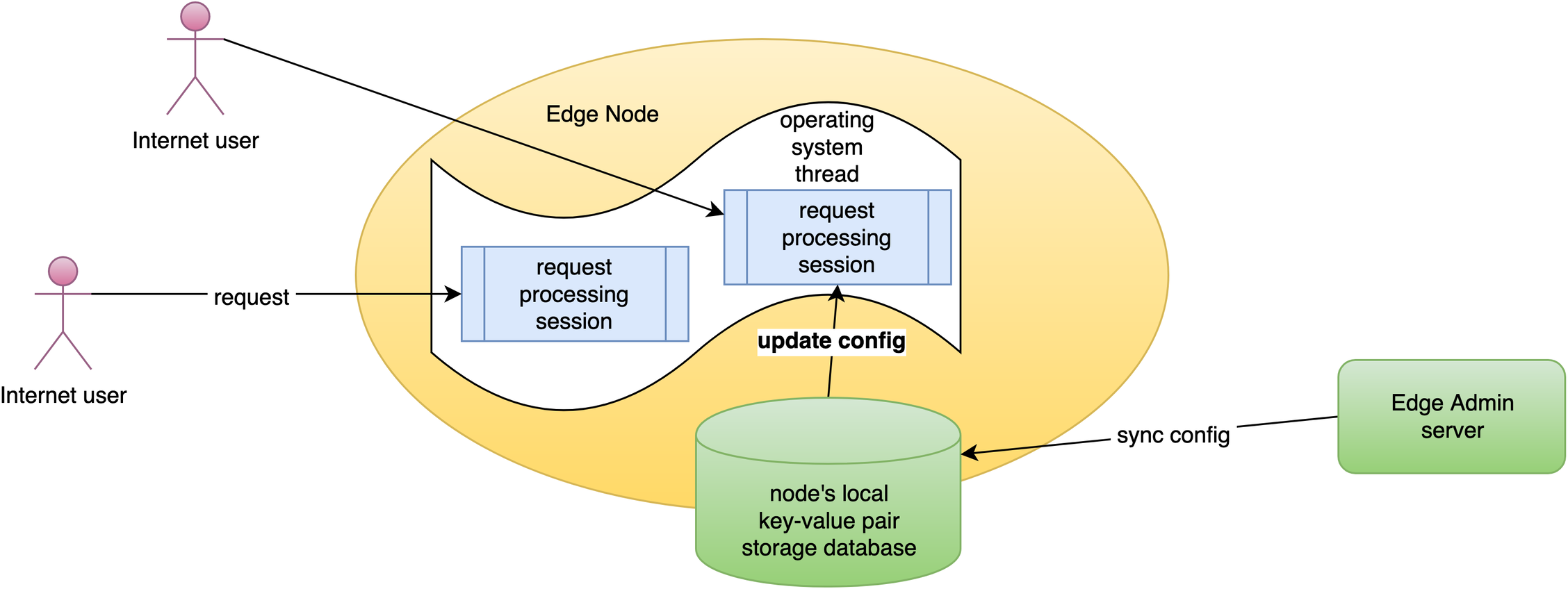

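Configuration is synced incrementally across the entire network.

Configuration is updated in real time, at the request level. Application-level configuration changes require no server reload, restart, or binary upgrade. This makes the system highly scalable, even when many different users publish changes frequently.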
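One common way to implement incremental sync is a versioned change log: the admin side appends each released change with a version number, and each gateway fetches only the entries newer than the last version it applied, updating its in-memory configuration without a reload. The Python sketch below illustrates that general idea only; the `ChangeLog` and `Gateway` classes, the config keys, and the gateway name `sfo-1` are hypothetical and do not describe OpenResty Edge's actual sync protocol.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeLog:
    """Admin side: an append-only list of (version, change) entries."""
    entries: list = field(default_factory=list)

    def release(self, change):
        version = len(self.entries) + 1
        self.entries.append((version, change))
        return version

    def since(self, version):
        # Only the changes a gateway has not applied yet.
        return [(v, c) for v, c in self.entries if v > version]


@dataclass
class Gateway:
    """Gateway side: applies new changes to its in-memory config, no reload."""
    name: str
    version: int = 0
    config: dict = field(default_factory=dict)

    def sync(self, log):
        pending = log.since(self.version)
        for version, (key, value) in pending:
            self.config[key] = value
            self.version = version
        return len(pending)


log = ChangeLog()
log.release(("upstream/my_backend", [("54.213.103.191", 80)]))
log.release(("page_rule/1", {"proxy": "my_backend"}))

gw = Gateway("sfo-1")
print(gw.sync(log))  # 2: both changes applied
print(gw.sync(log))  # 0: already up to date
```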

Testing

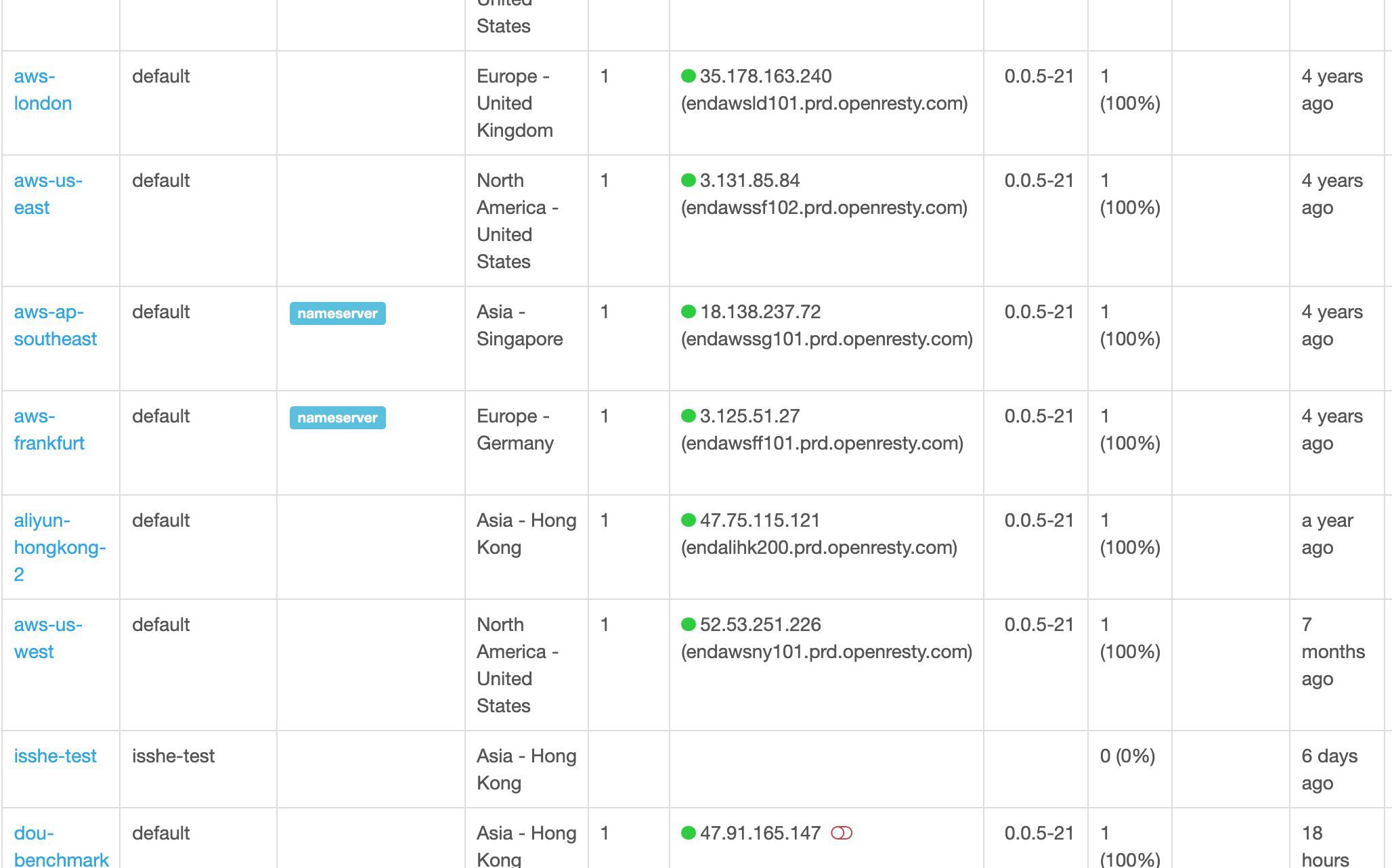

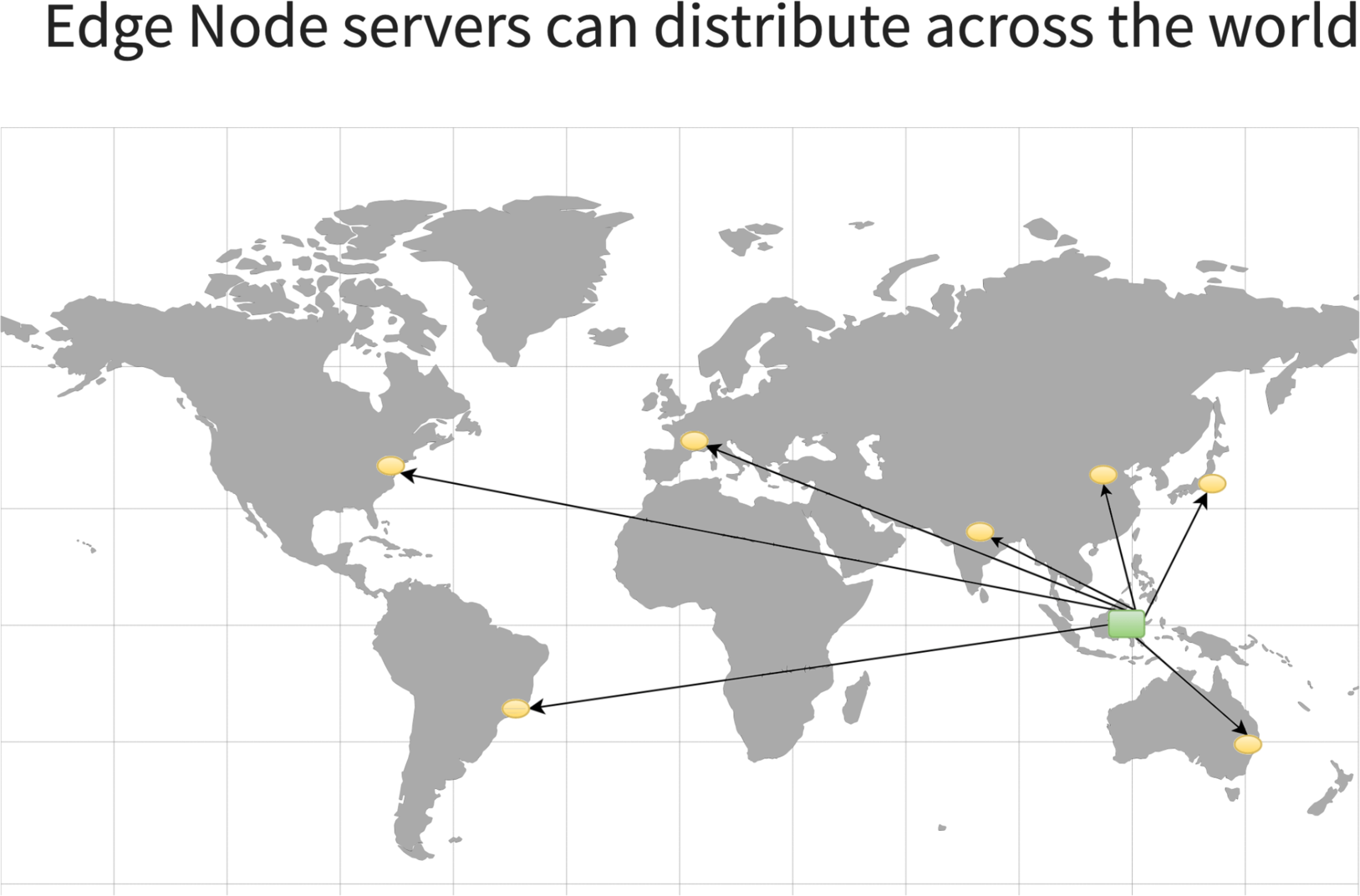

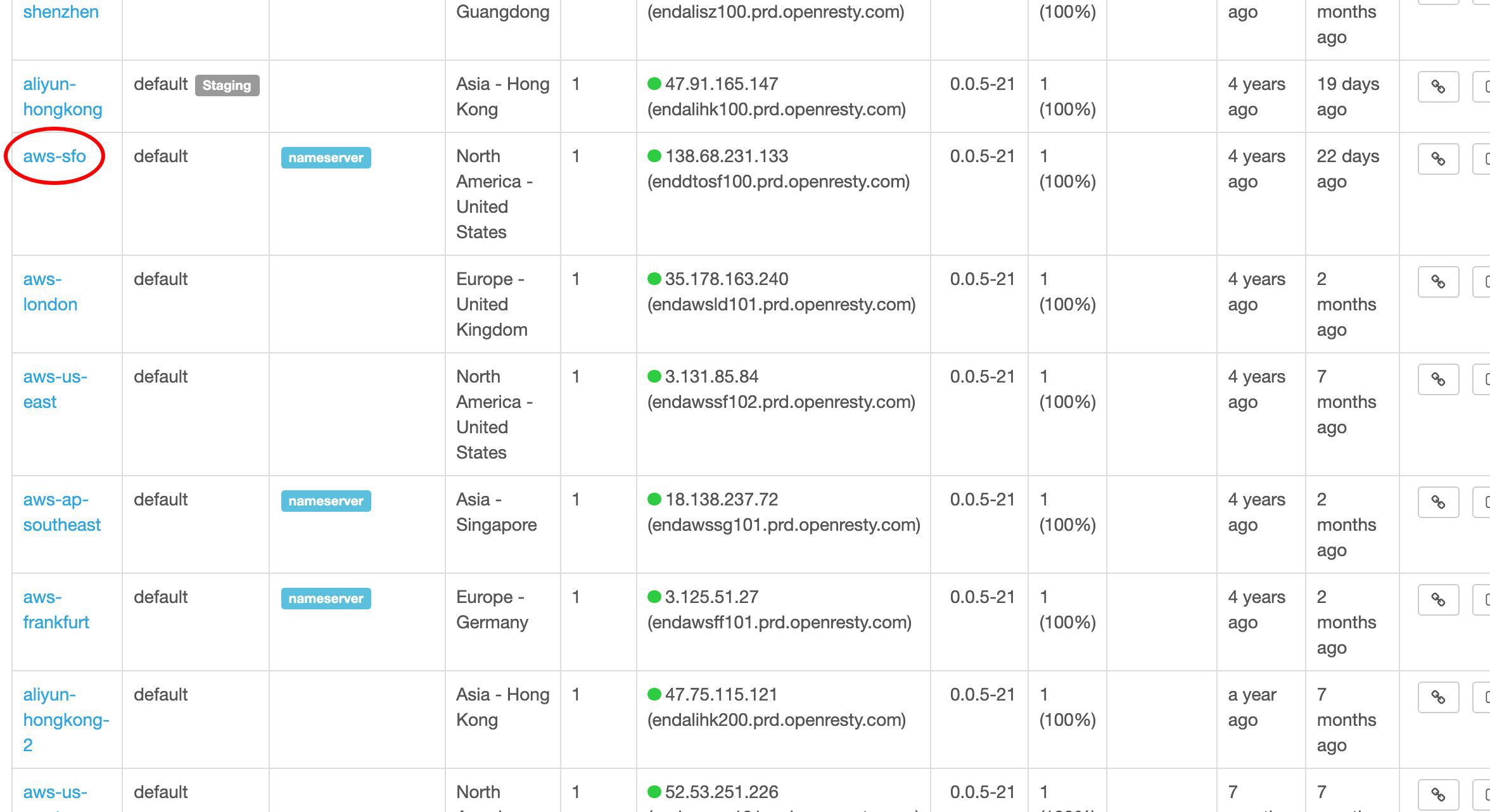

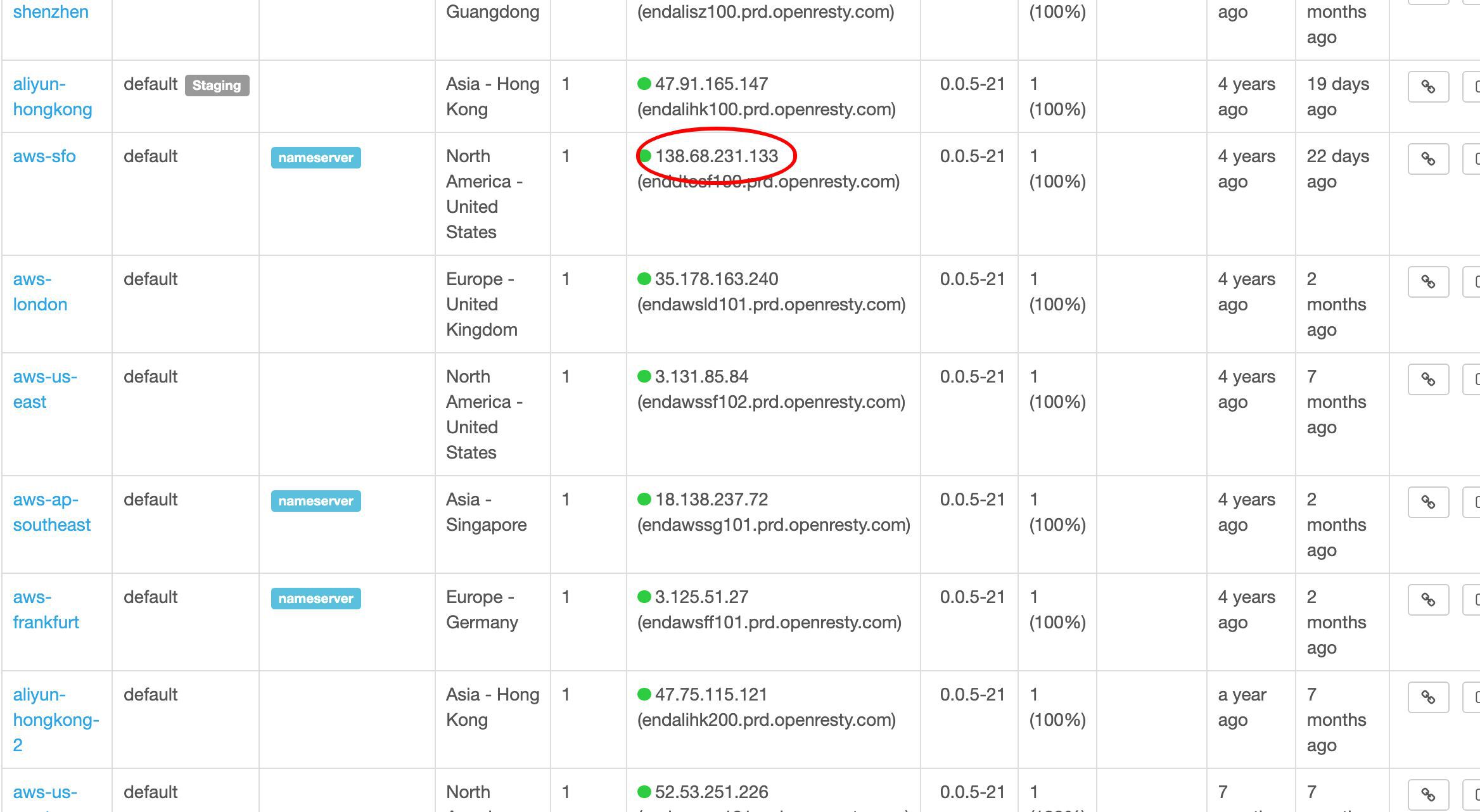

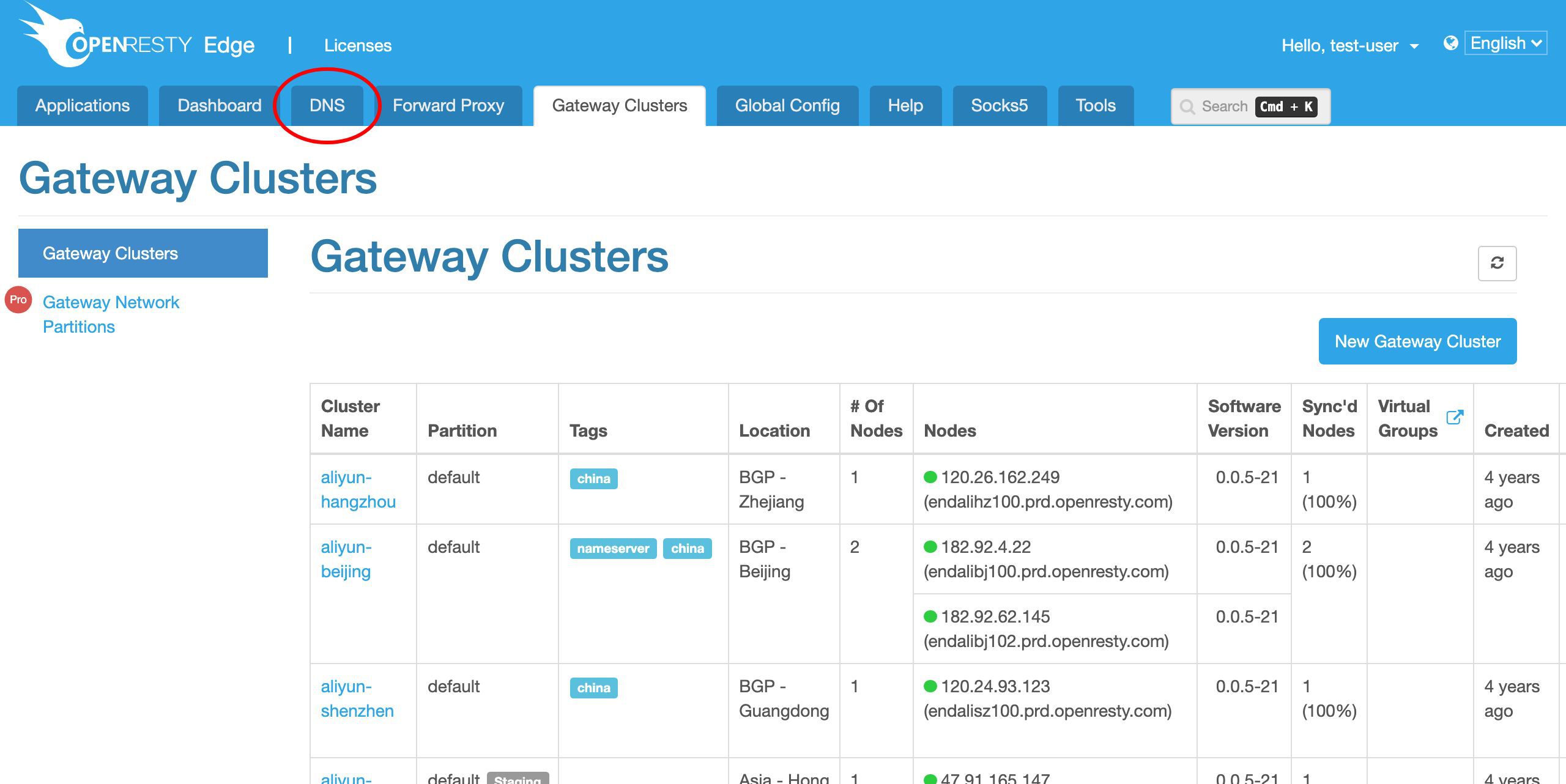

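We can also view all gateway servers grouped by cluster.

This sample deployment spans the globe.

Users are free to deploy gateway servers anywhere, including across different clouds and hosting providers.

This column shows the configuration sync status of each gateway server.

Let's pick a gateway server near San Francisco for testing.

This is its public IP address.

To test this server, we copy the IP address directly.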

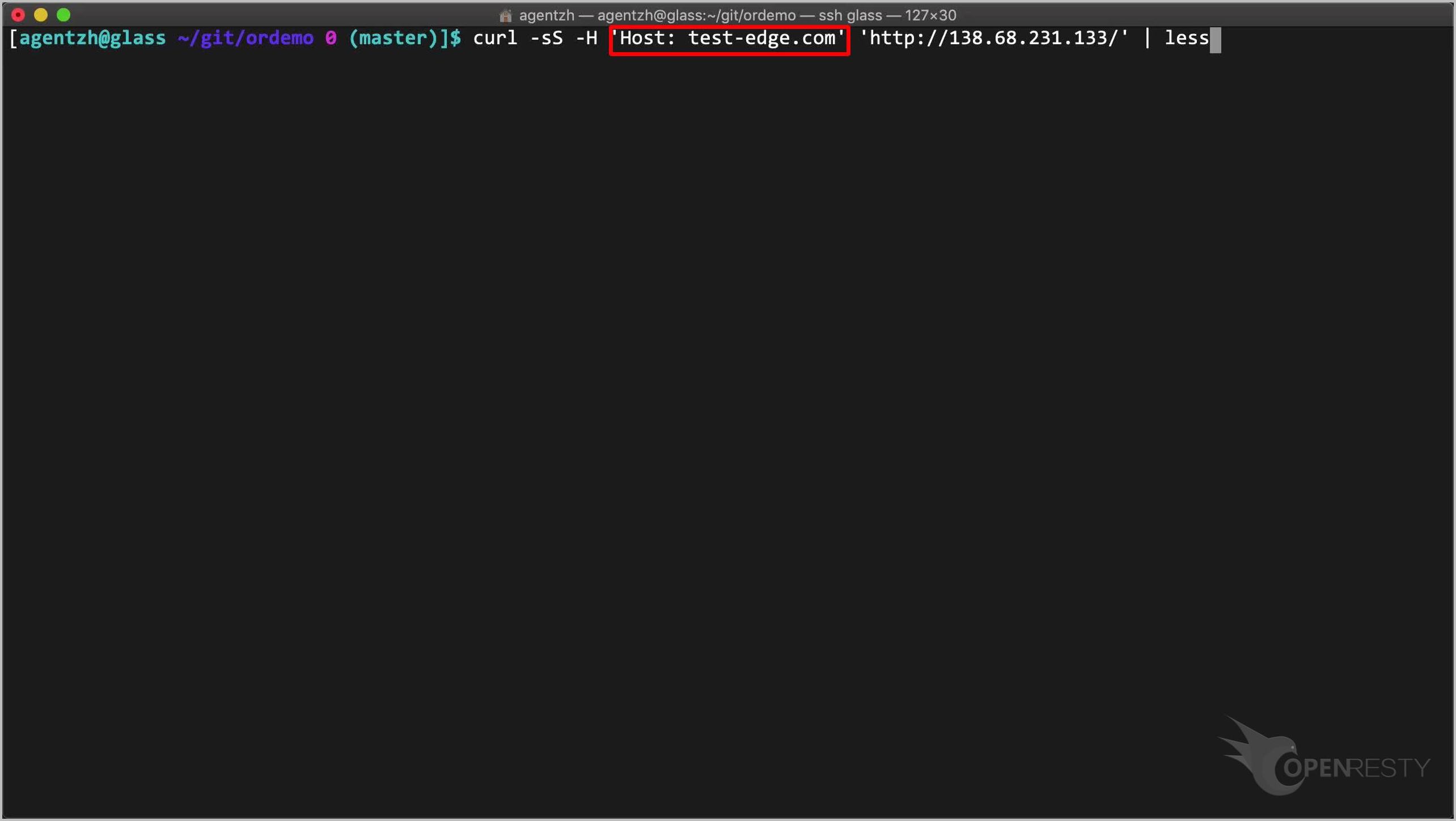

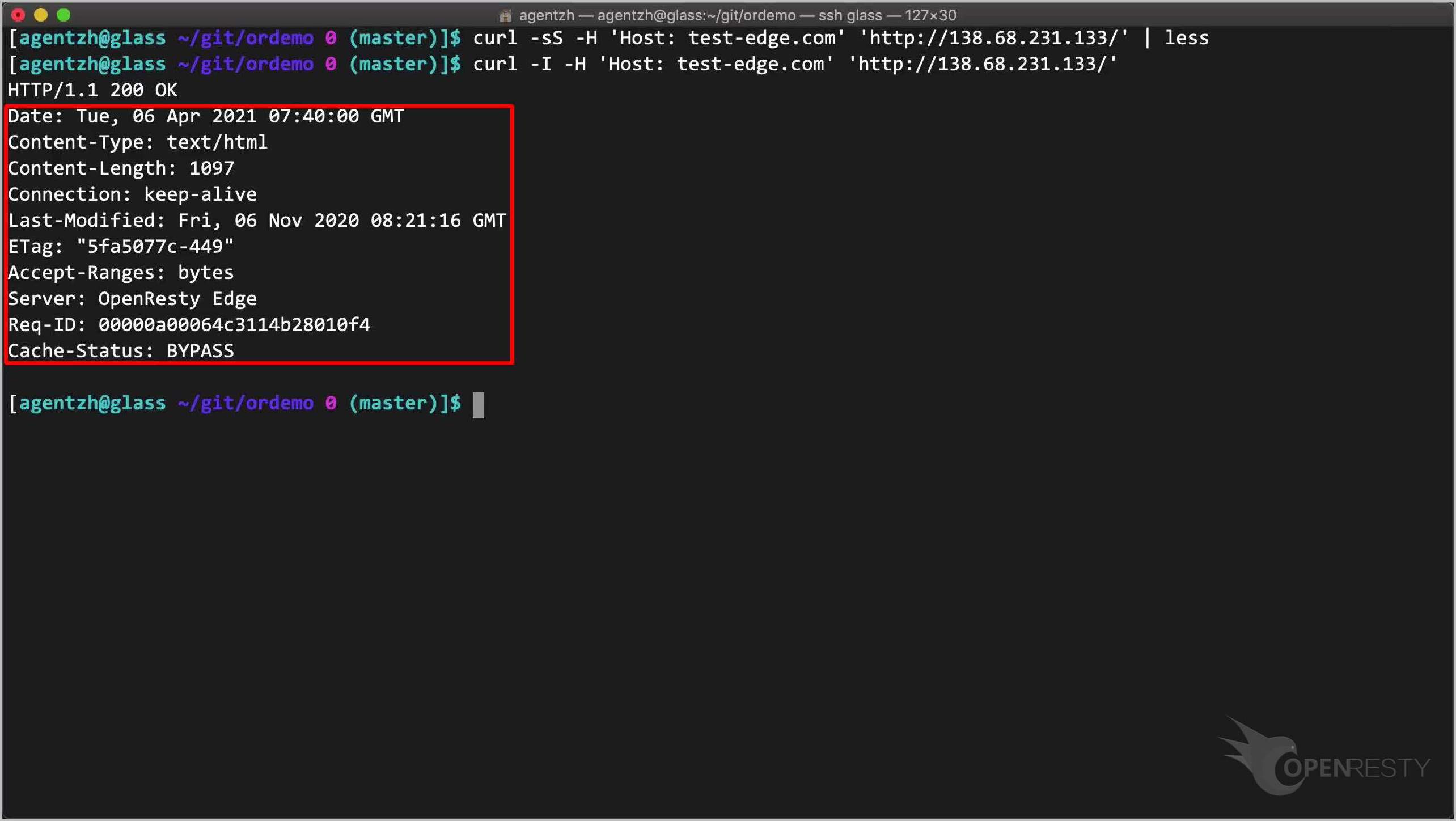

In the terminal, we can test this San Francisco gateway server with curl.

curl -sS -H 'Host: test-edge.com' 'http://138.68.231.133/' | less

Note that we specify the Host request header, because the same server serves many different virtual hosts.
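Why the Host header matters can be reproduced locally with Python's standard library: one server on one IP address and port returns different content depending on the Host value, much like `curl -H 'Host: ...'` against a shared gateway IP. This is a self-contained illustration of name-based virtual hosting, not the gateway itself; the `VHOSTS` table and the `other.example` host are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical virtual hosts served from one IP address and port.
VHOSTS = {
    "test-edge.com": b"hello from test-edge.com\n",
    "other.example": b"hello from other.example\n",
}


class VHostHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Choose the virtual host from the Host header (port suffix stripped).
        host = (self.headers.get("Host") or "").split(":", 1)[0].lower()
        body = VHOSTS.get(host)
        if body is None:
            self.send_response(404)
            body = b"unknown host\n"
        else:
            self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def fetch(port, host_header):
    """Like curl -H 'Host: ...' against 127.0.0.1: same IP, different Host."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    conn.request("GET", "/", headers={"Host": host_header})
    resp = conn.getresponse()
    result = (resp.status, resp.read())
    conn.close()
    return result


server = HTTPServer(("127.0.0.1", 0), VHostHandler)  # port 0: pick a free port
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

results = {h: fetch(port, h)
           for h in ("test-edge.com", "other.example", "unknown.example")}
server.shutdown()
server.server_close()
print(results)
```

When a `Host` header is passed explicitly, `http.client` does not add its own, so the server sees exactly the value we chose, just as curl sends the value given with `-H`.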

Now we send the request.

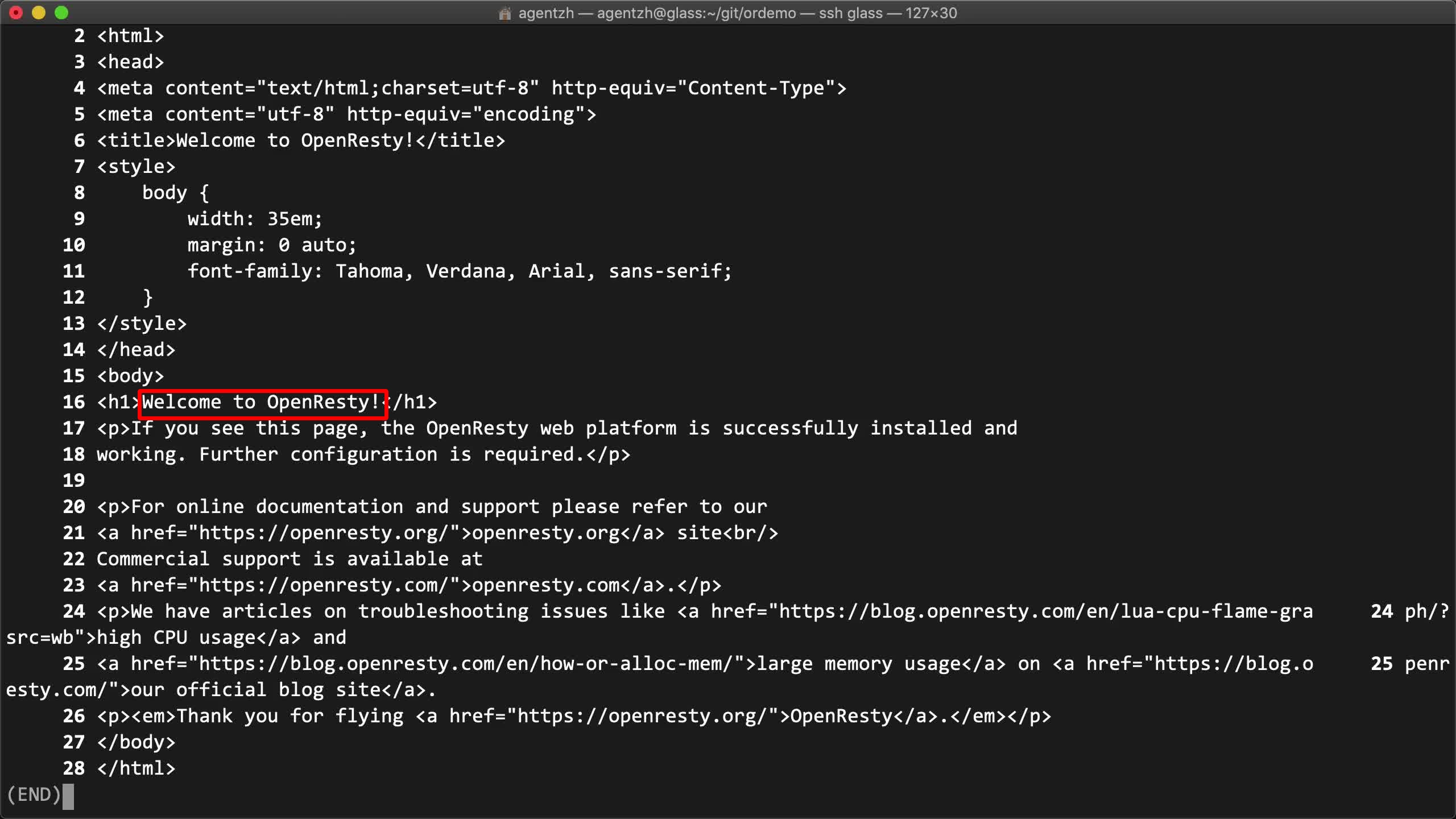

The result is as expected! We get the default OpenResty home page, just as if we had accessed the backend server directly.

We can also use curl's -I option to check the response headers.

curl -I -H 'Host: test-edge.com' 'http://138.68.231.133/'

Some of these response headers are generated by the OpenResty Edge gateway software.

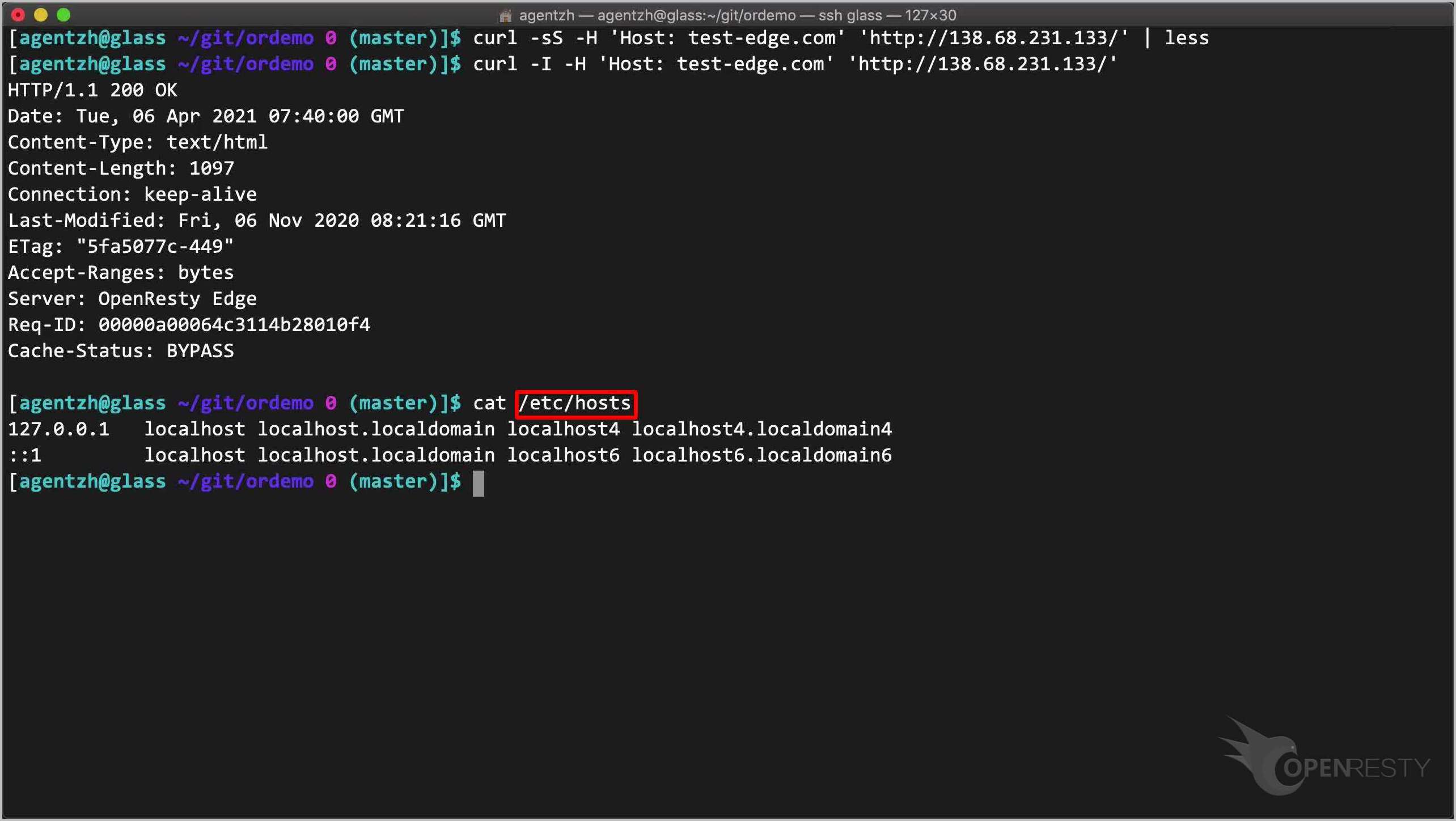

Alternatively, you can bind the IP address to the hostname in your local /etc/hosts file. Then you can point your web browser directly at this domain name.
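Each /etc/hosts entry is just an IP address followed by one or more hostnames, with `#` starting a comment. The Python sketch below formats such an entry and looks a name up in hosts-file text. It works on a string so it never touches your real /etc/hosts (editing that file usually requires root). The helper names `hosts_entry` and `lookup` are hypothetical.

```python
def hosts_entry(ip, *names):
    """Format one /etc/hosts line, e.g. '138.68.231.133 test-edge.com'."""
    return " ".join([ip, *names])


def lookup(hosts_text, name):
    """Return the first IP mapped to `name` in hosts-file text, or None."""
    name = name.lower()
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        if name in (n.lower() for n in names):
            return ip
    return None


sample = (
    "127.0.0.1   localhost\n"
    "# gateway server near San Francisco\n"
    + hosts_entry("138.68.231.133", "test-edge.com") + "\n"
)

print(lookup(sample, "test-edge.com"))  # 138.68.231.133
print(lookup(sample, "example.org"))    # None
```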

cat /etc/hosts

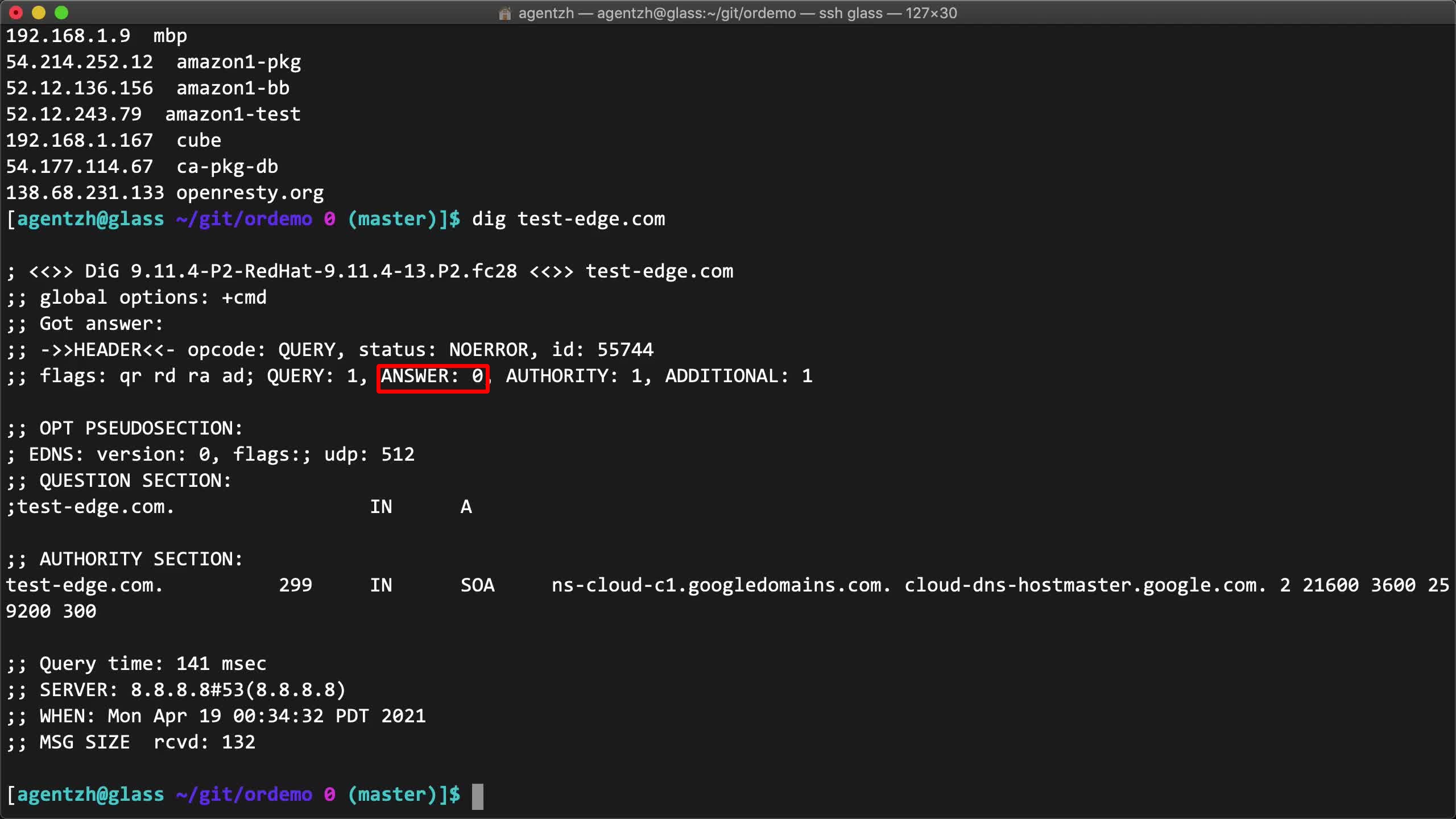

In a real setup, you need DNS records on your DNS name servers that point the domain name to the gateway servers' IP addresses.

dig test-edge.com

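We haven't set up any DNS records for this domain name yet; I'll demonstrate that in another video.

OpenResty Edge can also act as an authoritative DNS server network at the same time.

Of course, this is optional; users can keep using third-party DNS name servers.

That's all for today.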

About OpenResty Edge

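OpenResty Edge is a versatile gateway software designed for microservices and distributed traffic architectures, developed in-house by our company. It integrates traffic management, private CDN construction, API gateway, security protection, and other features, making it easy to build, manage, and protect modern applications. OpenResty Edge offers industry-leading performance and scalability and can meet the demands of high-concurrency, high-load scenarios. It supports traffic scheduling for containerized applications such as K8s and can manage a massive number of domain names, easily meeting the needs of large websites and complex applications.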

About the Author

Zhang Yichun (章亦春) is the founder of the open-source OpenResty® project and the CEO and founder of OpenResty Inc.

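Zhang Yichun (GitHub ID: agentzh) was born in Jiangsu, China, and now lives in the San Francisco Bay Area. He was one of the early advocates and leaders of open-source technology and culture in China, and has worked at internationally renowned tech companies such as Cloudflare, Yahoo!, and Alibaba. A pioneer of "edge computing", "dynamic tracing", and "machine coding", he has over 22 years of programming experience and over 16 years of open-source experience. As the leader of an open-source project whose users span more than 40 million domain names worldwide, he founded the high-tech company OpenResty Inc. in the heart of Silicon Valley, building on the OpenResty® open-source project. The company's flagship products, OpenResty XRay (a non-invasive fault analysis and troubleshooting tool based on dynamic tracing technology) and OpenResty Edge (optimized for microservices and distributed traffic, a versatile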

Translations

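This article is available in the original English version and in a Japanese translation. Readers are welcome to contribute translations into other languages; as long as a translation is complete with no omissions, we will consider adopting it. Thank you very much!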