Managing Traffic to Kubernetes (K8s) Upstreams with OpenResty Edge

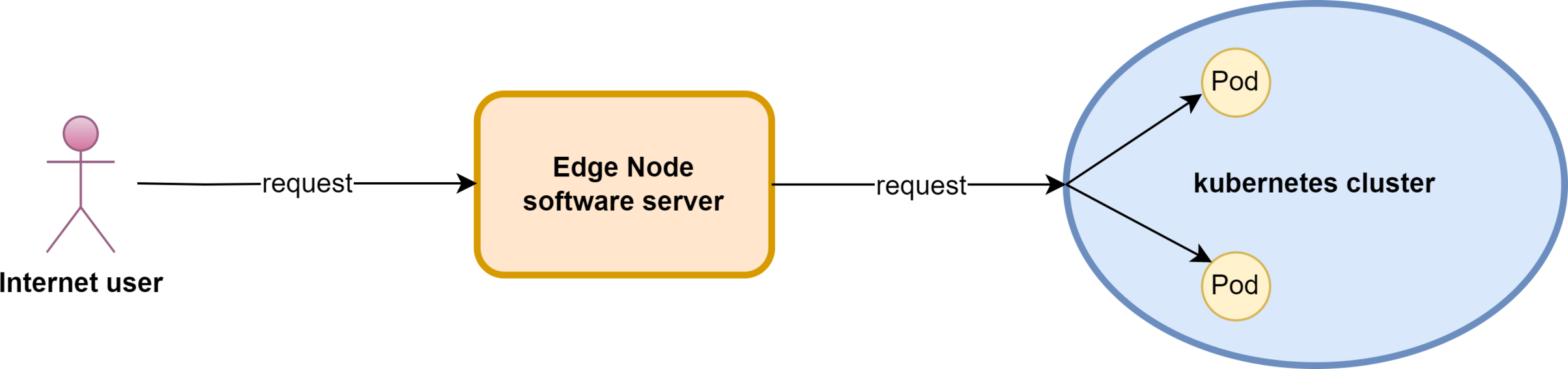

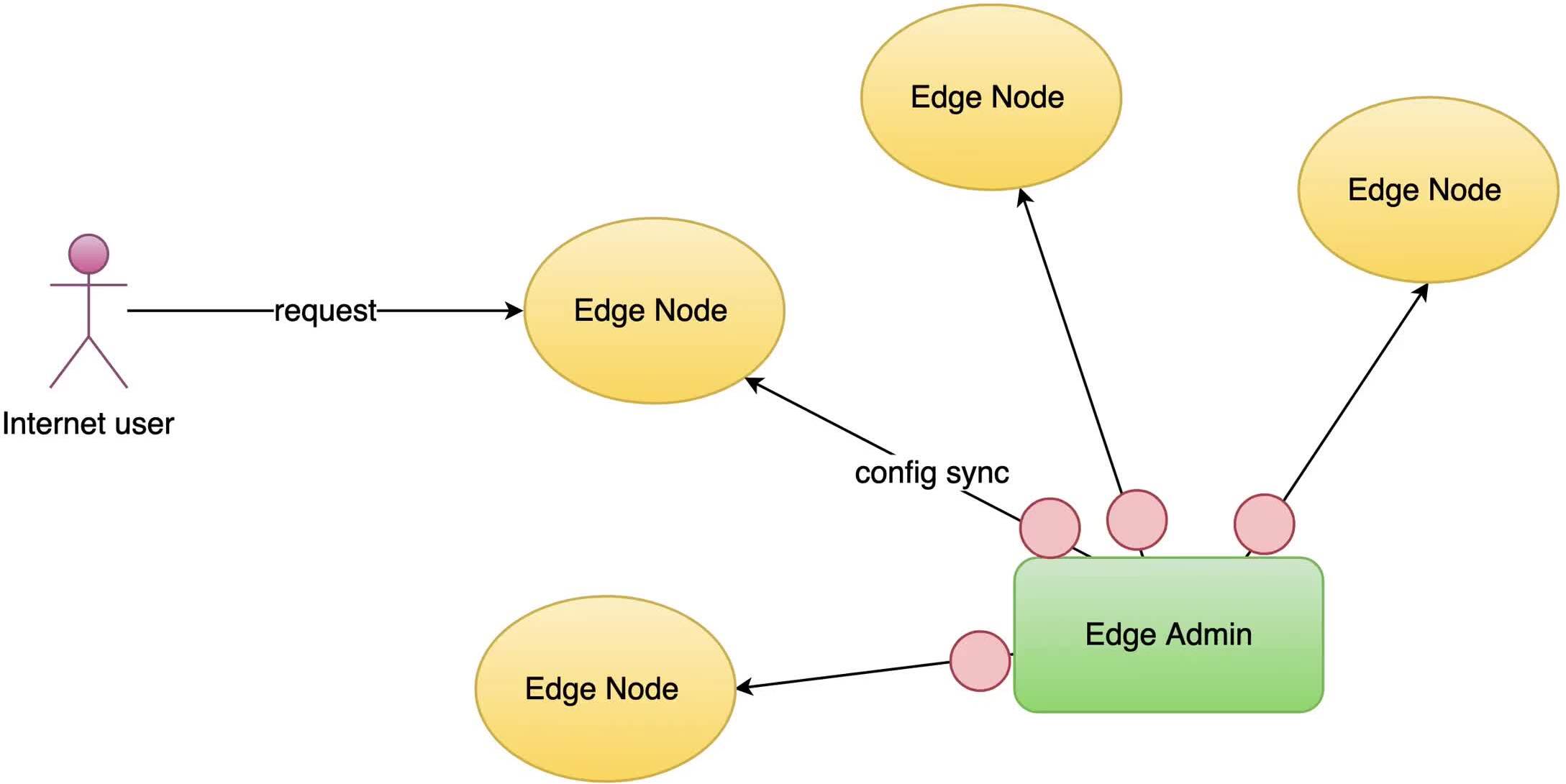

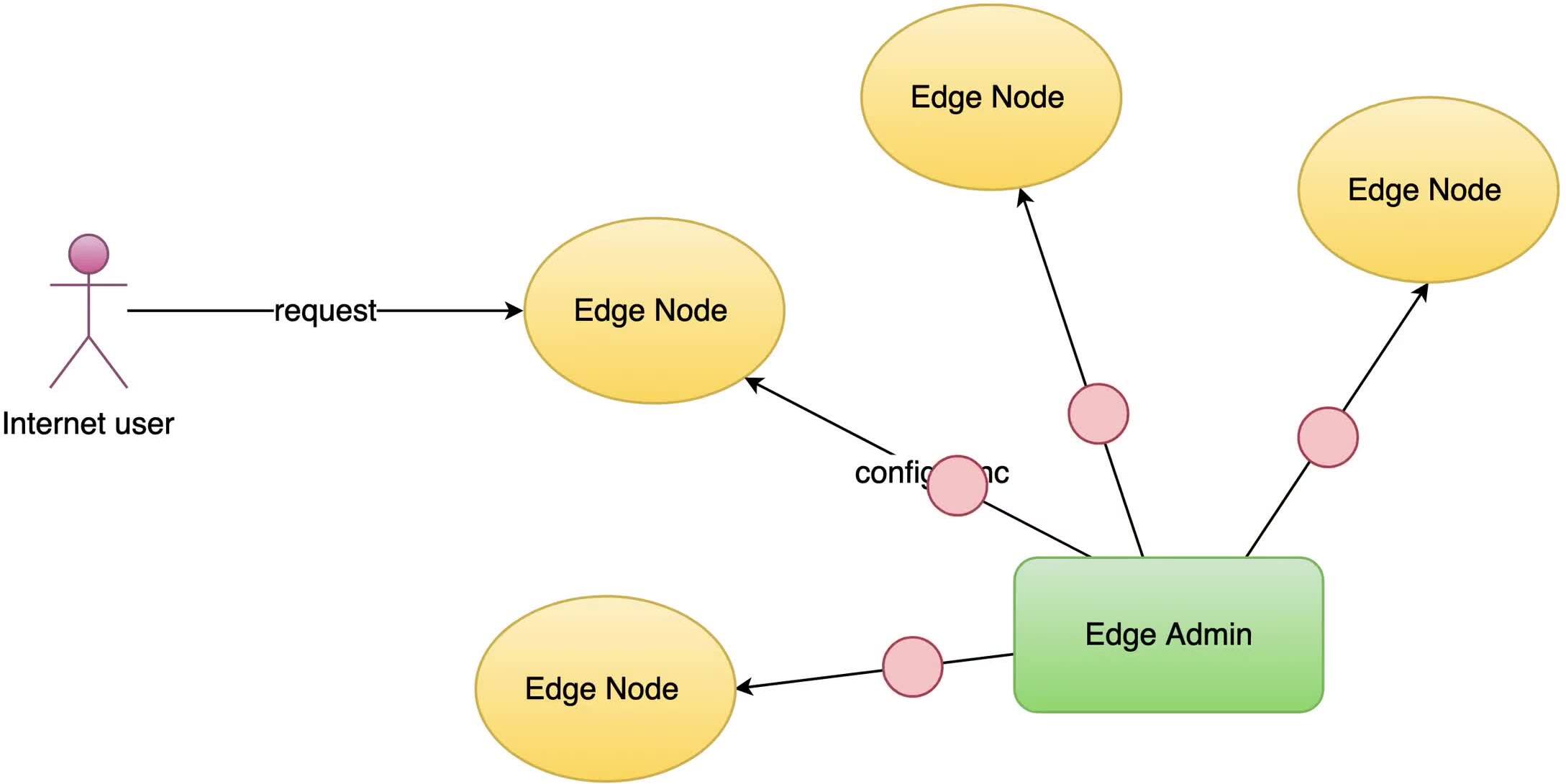

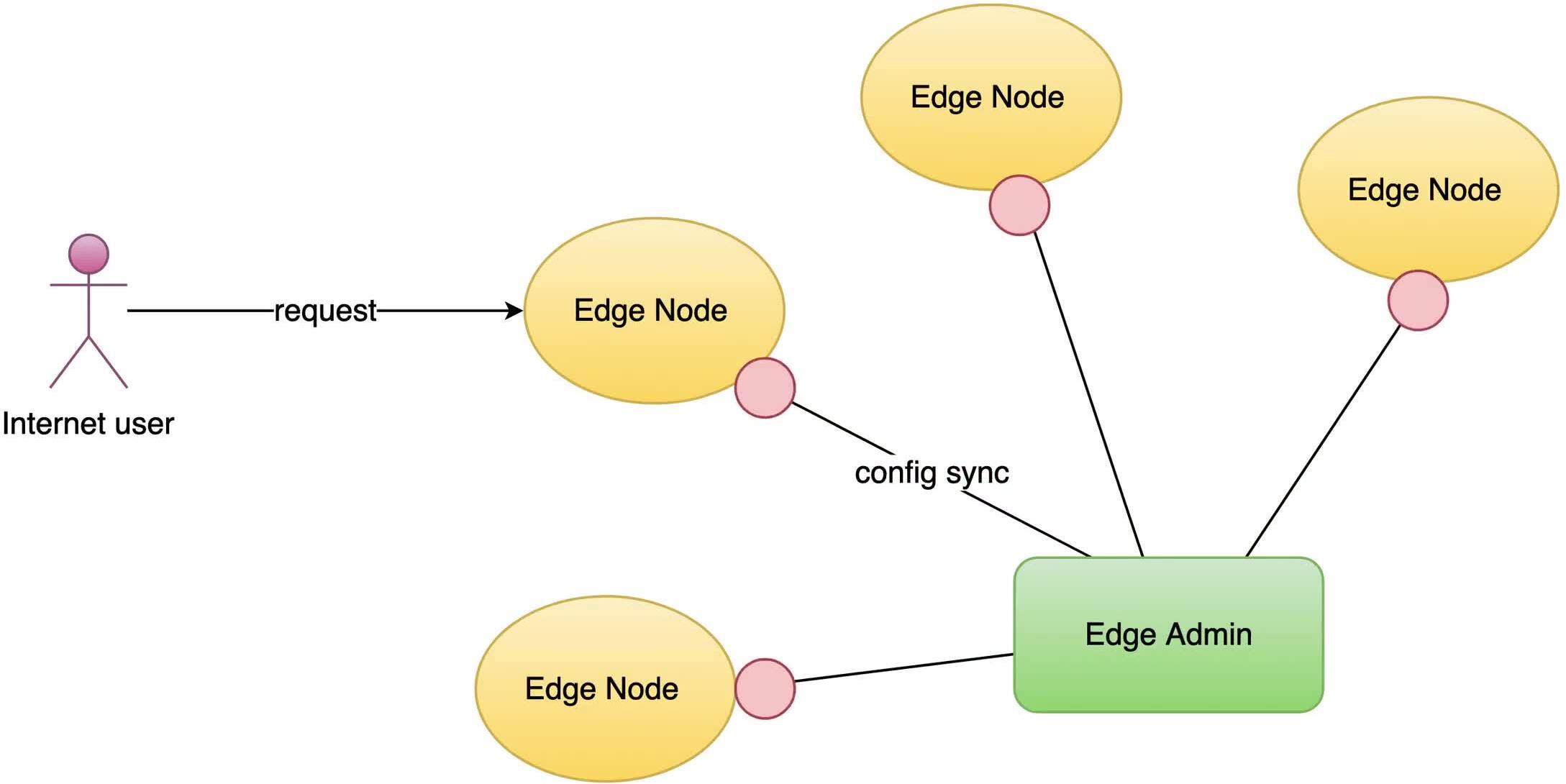

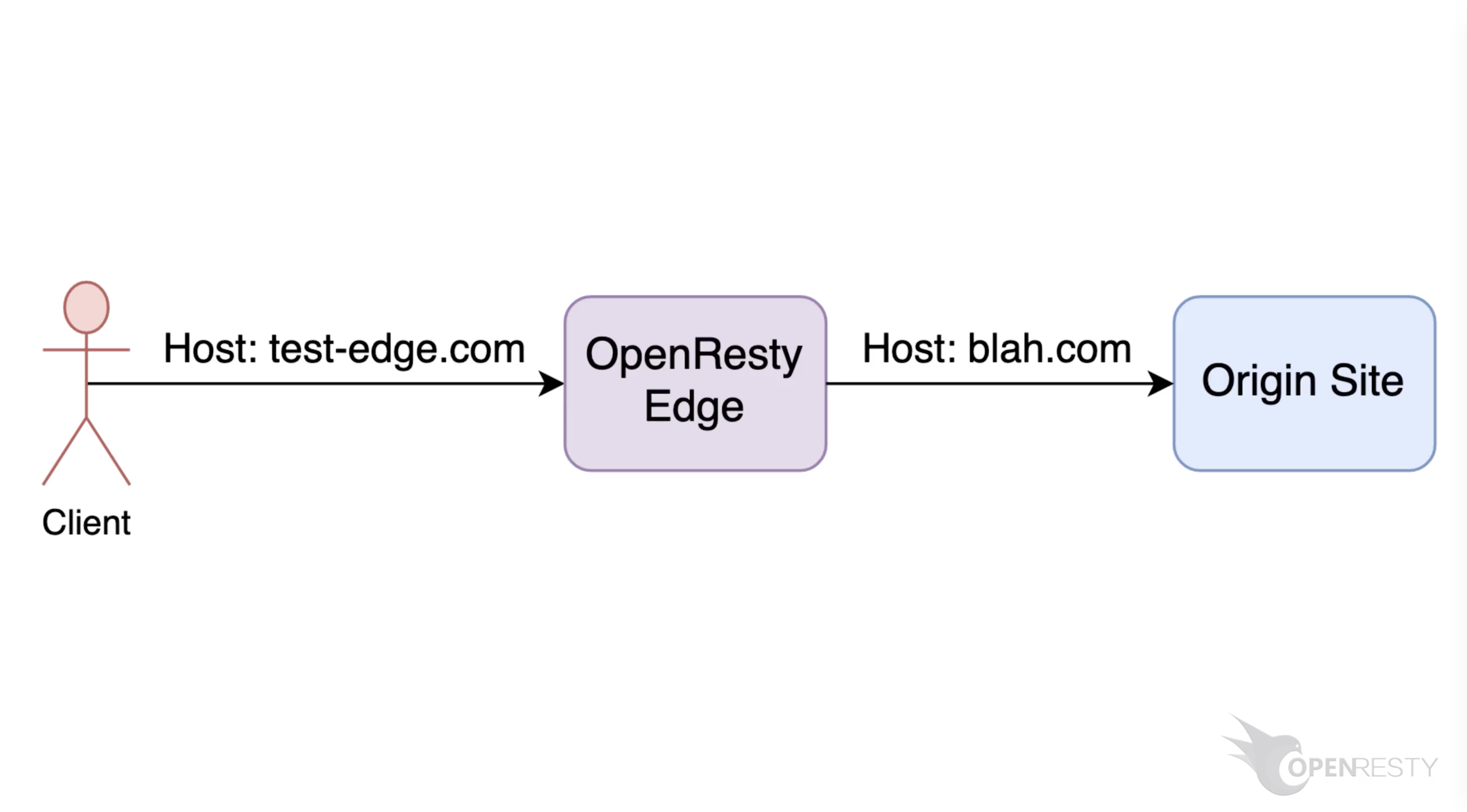

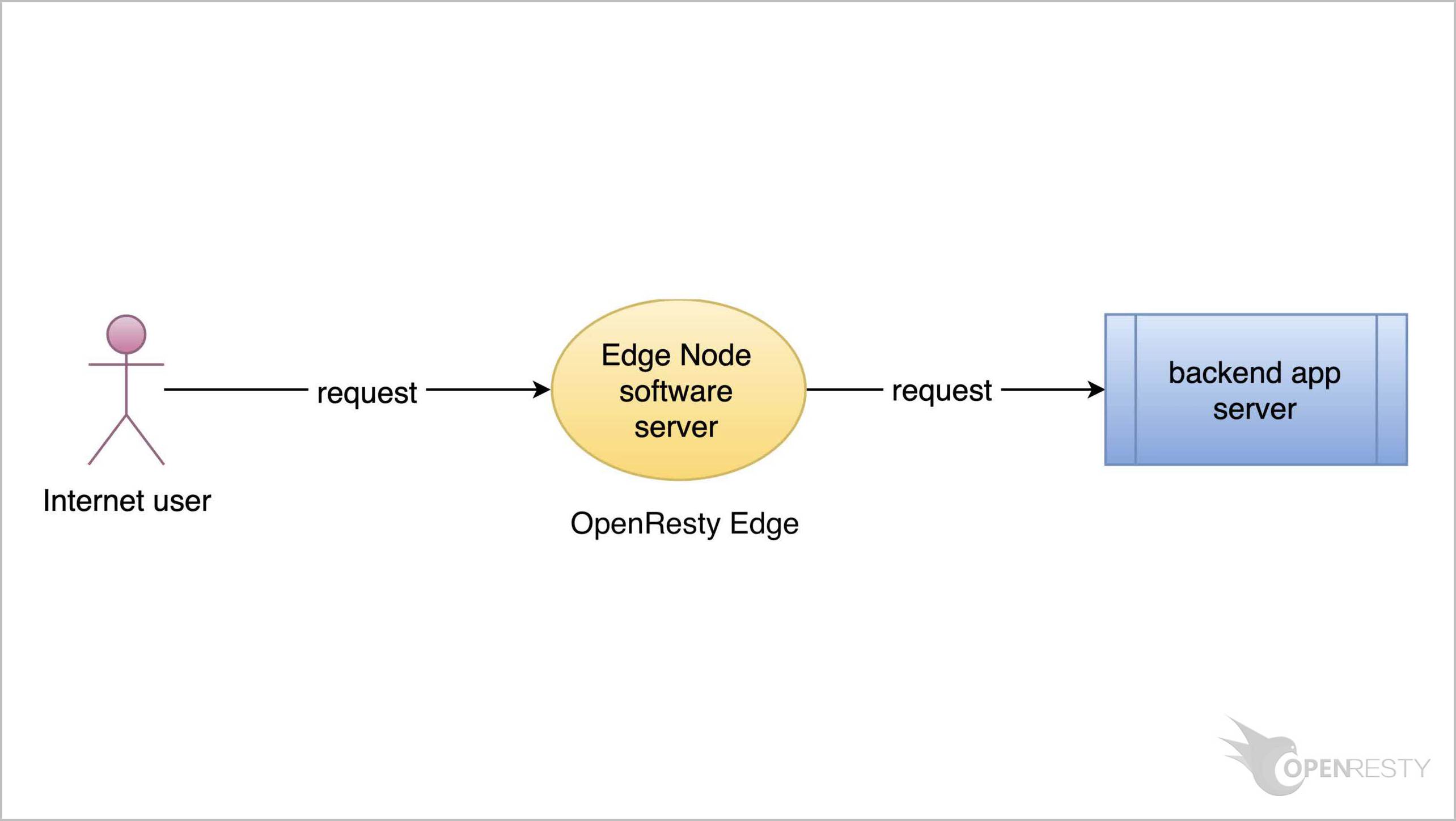

In this tutorial, I will show you how to use OpenResty Edge as a powerful ingress controller for Kubernetes clusters. In other words, we will look at how OpenResty Edge can manage the traffic of backend applications that run in Kubernetes containers.

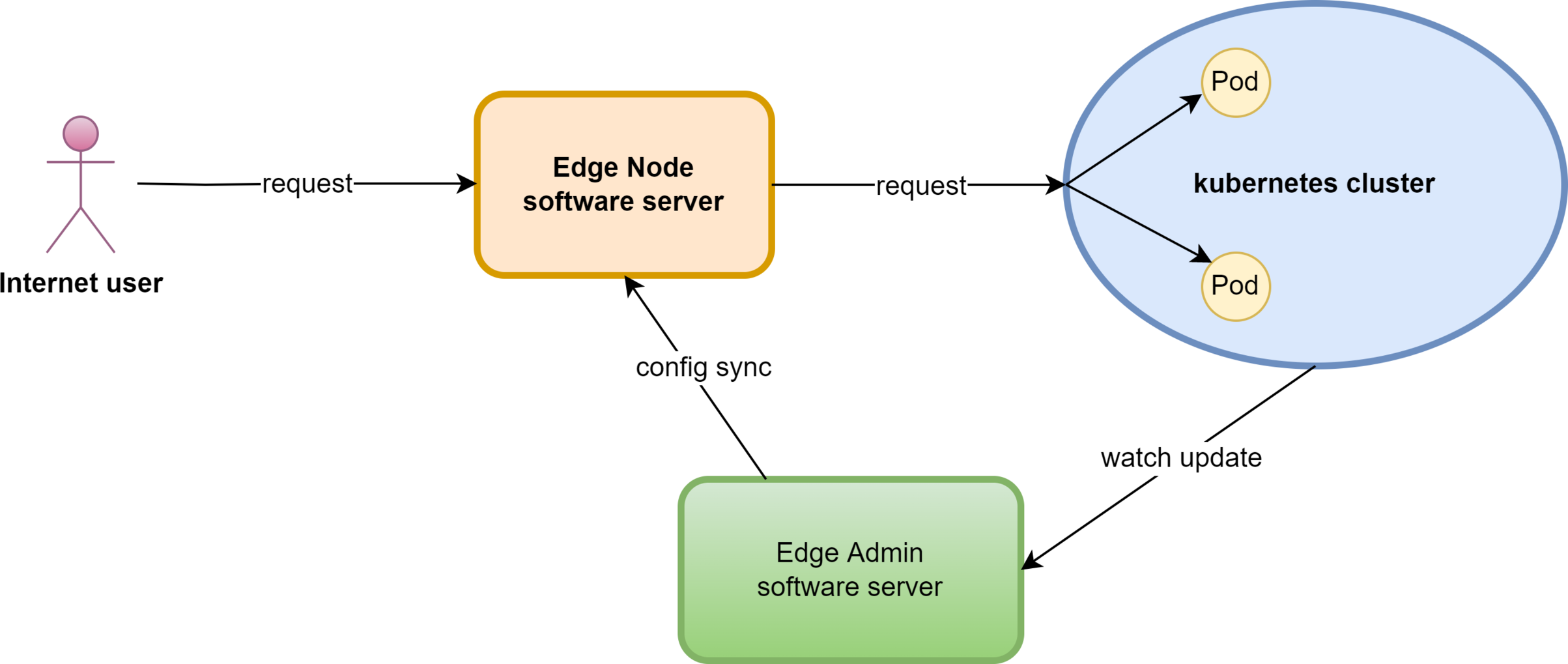

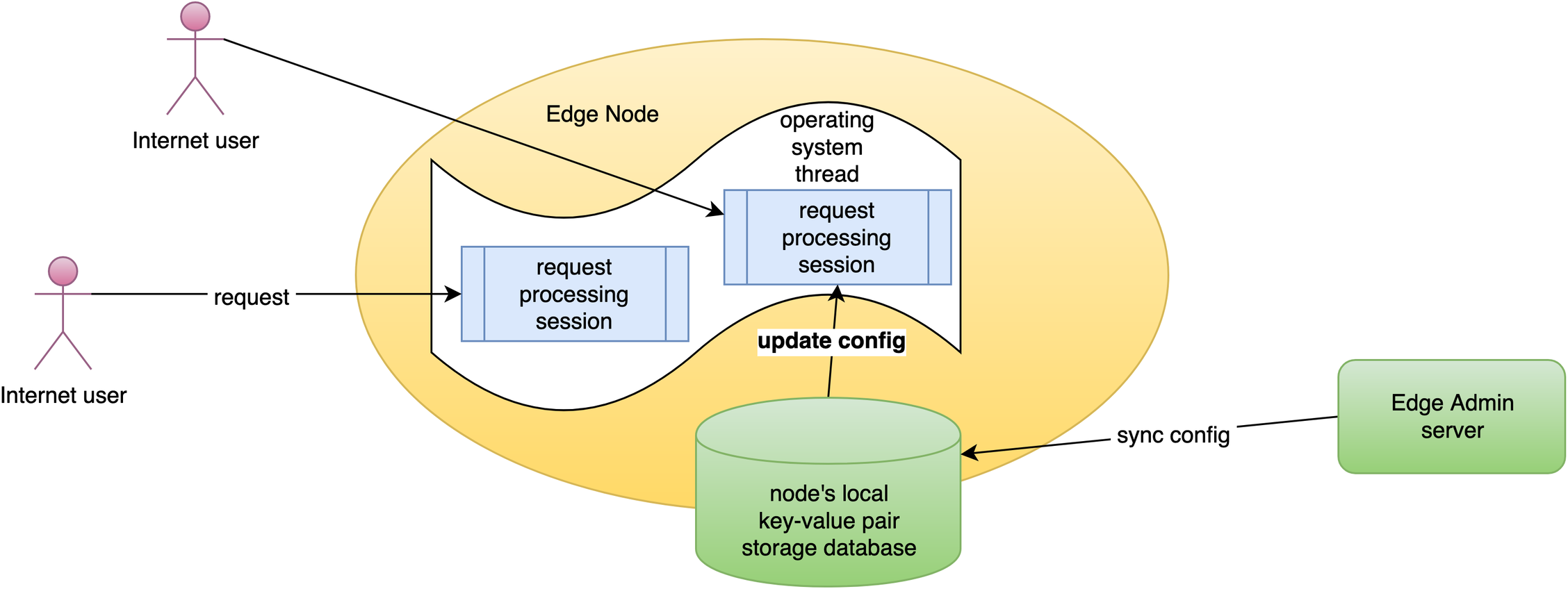

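In this tutorial, we will create a Kubernetes upstream inside an Edge application. The Edge gateway servers can run either inside or outside the Kubernetes cluster. The Edge Admin server continuously monitors the Kubernetes cluster through its API server, responds to Kubernetes nodes (or containers) going online or offline, and updates the upstream server list automatically.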
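The update step described above boils down to comparing the previous list of backend addresses with the current one. The tutorial does not describe how Edge Admin implements this, so the following is only a minimal shell sketch of the idea; `diff_upstreams` is a hypothetical helper that takes two space-separated lists of `ip:port` strings and prints which addresses would be added (`+`) or removed (`-`).

```shell
# Hypothetical helper: diff_upstreams "OLD_LIST" "NEW_LIST"
# Each list is a space-separated set of ip:port strings.
# Prints "+addr" for each address only in NEW (came online)
# and "-addr" for each address only in OLD (went offline).
diff_upstreams() {
  old="$1"
  new="$2"
  for addr in $new; do
    case " $old " in
      *" $addr "*) ;;        # already known, nothing to do
      *) echo "+$addr" ;;    # new endpoint: add to the upstream
    esac
  done
  for addr in $old; do
    case " $new " in
      *" $addr "*) ;;        # still present
      *) echo "-$addr" ;;    # endpoint gone: remove from the upstream
    esac
  done
}
```

For example, `diff_upstreams "10.244.1.5:80 10.244.2.7:80" "10.244.2.7:80 10.244.3.9:80"` prints `+10.244.3.9:80` followed by `-10.244.1.5:80` (the addresses are made up).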

Creating and Using a Kubernetes Upstream

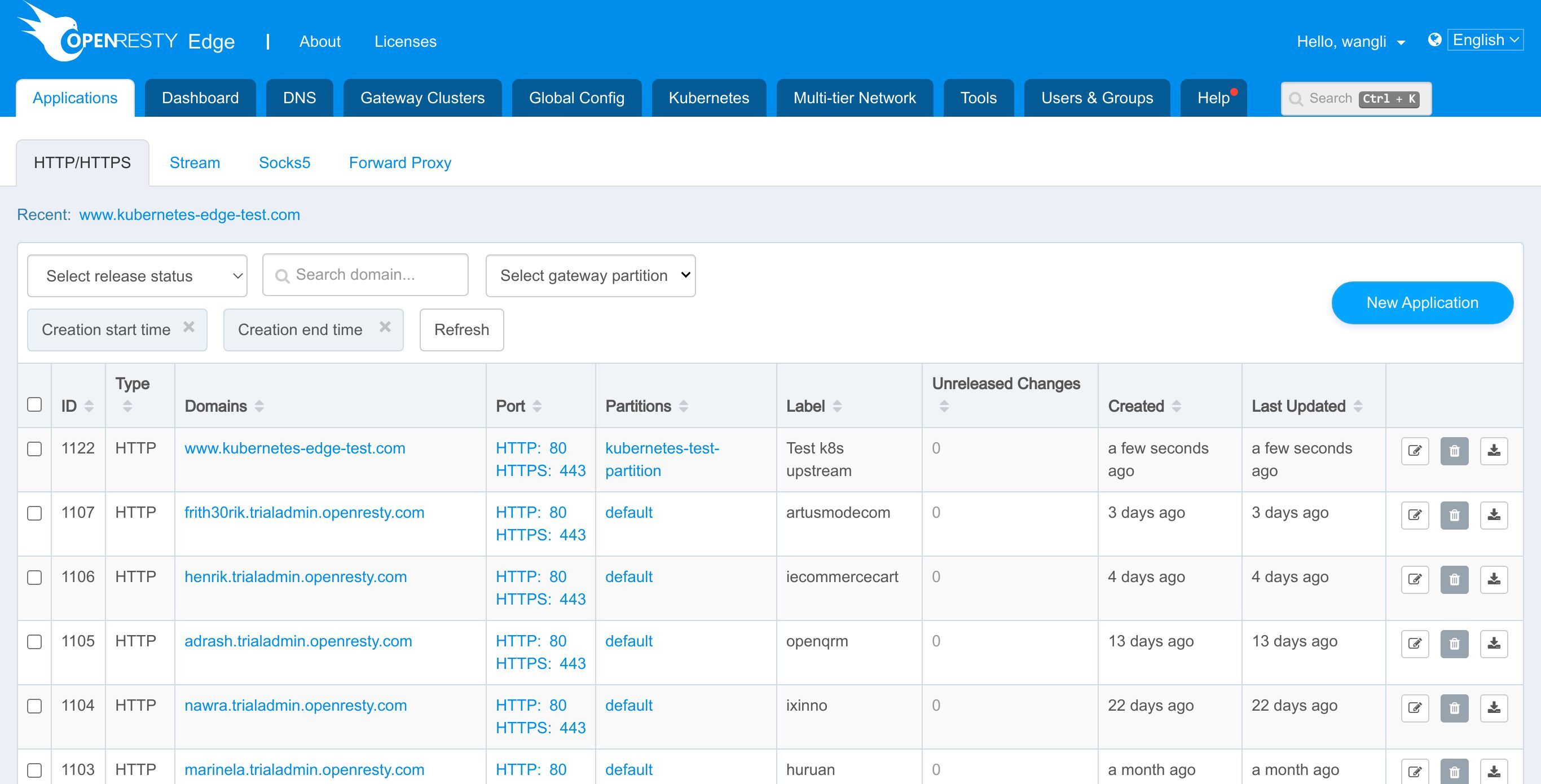

Let's open the OpenResty Edge Admin web console. This is a sample deployment of the console; each user has their own local deployment.

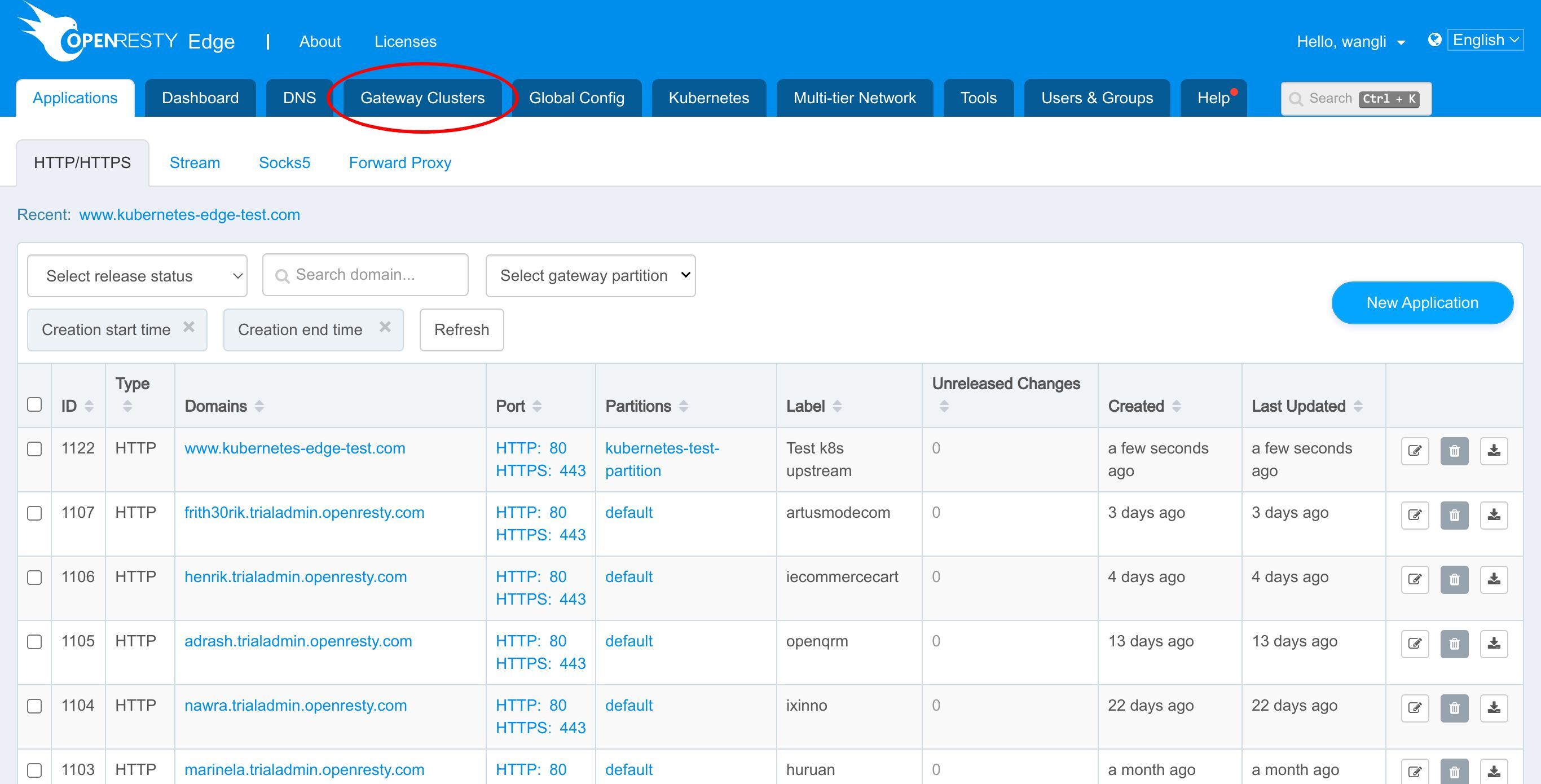

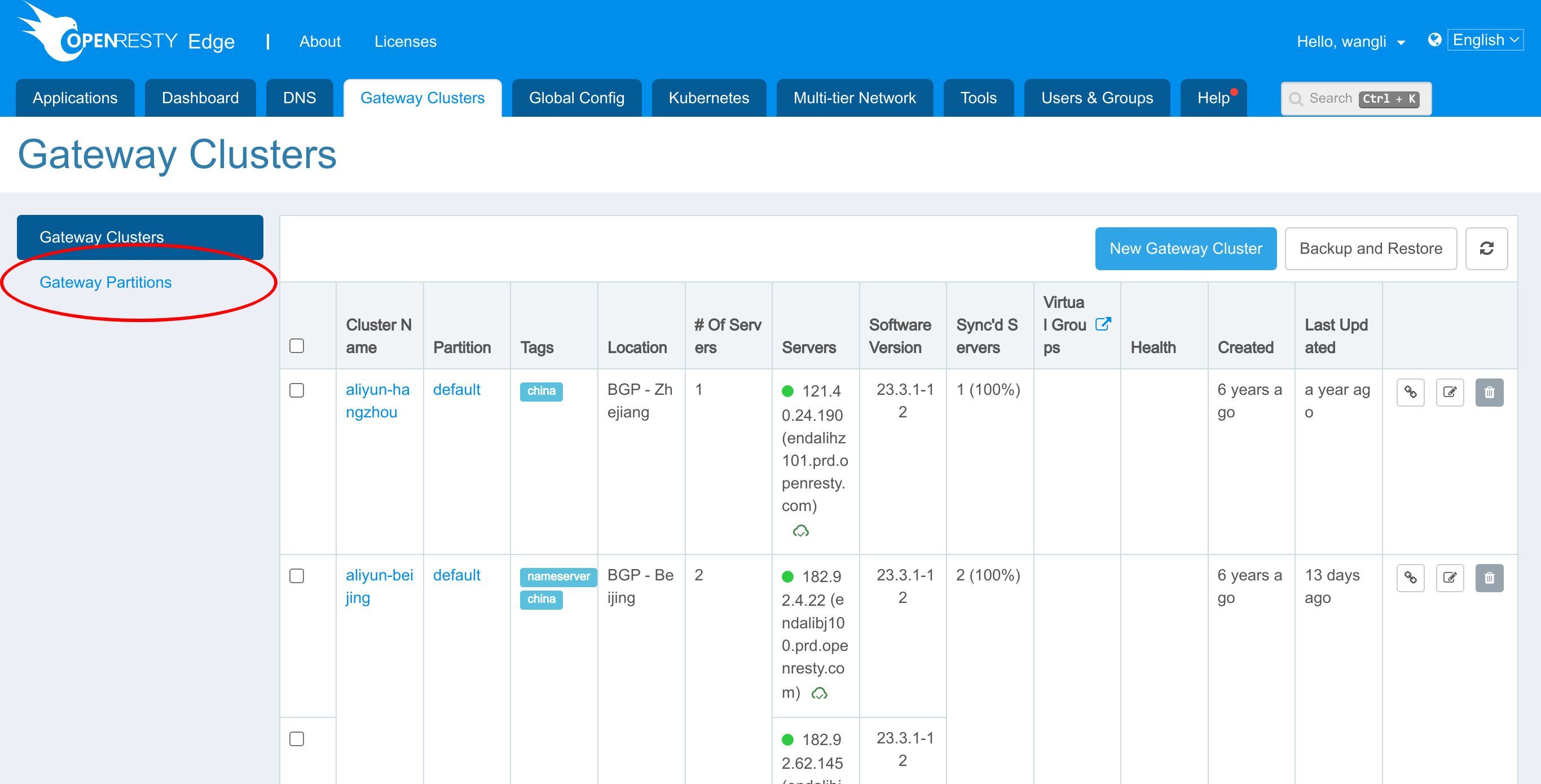

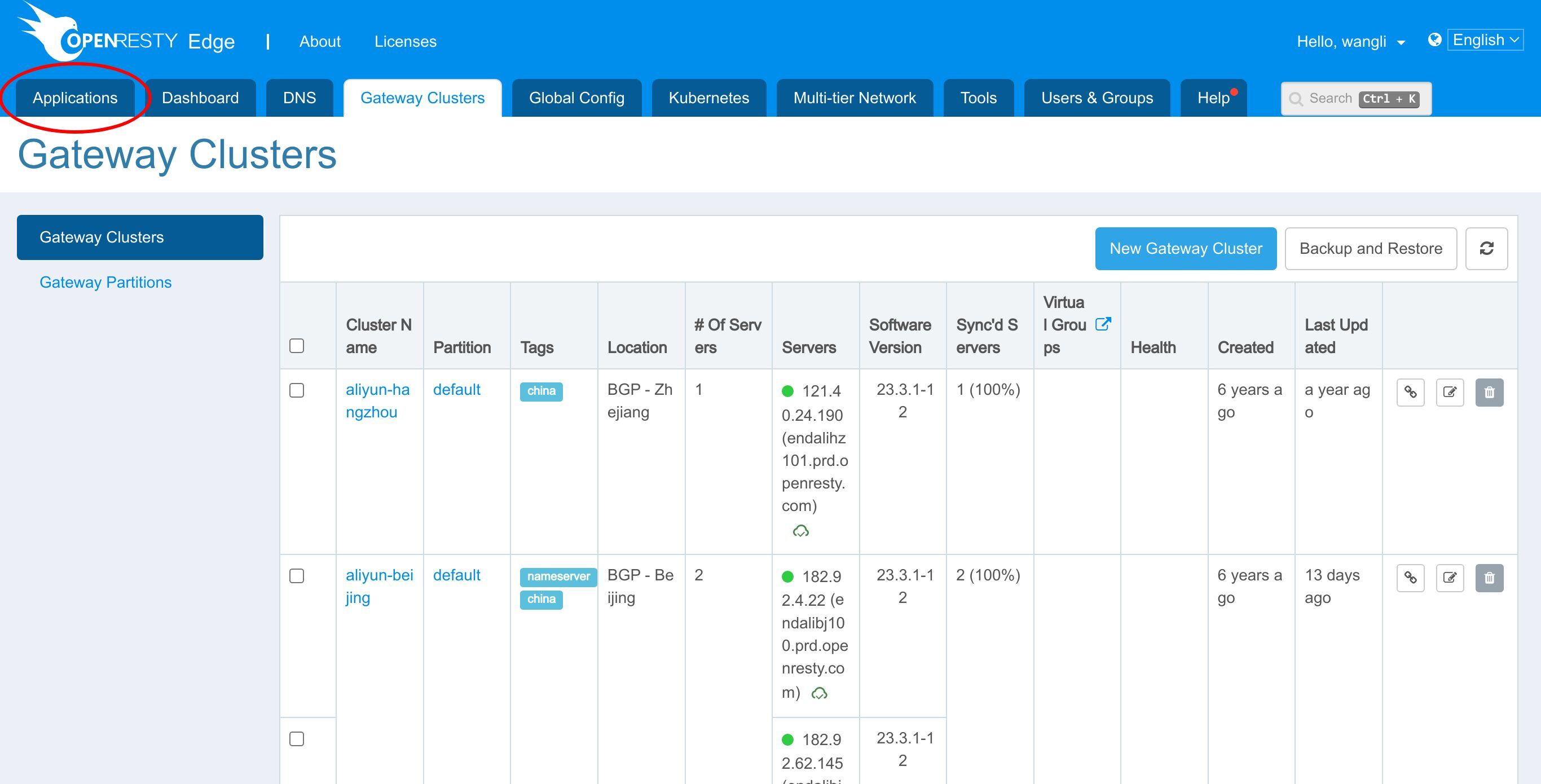

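First, we need to set up gateway servers that can connect to the Kubernetes services, in a dedicated gateway partition.

Go to the Gateway Partitions page.

We have already created a gateway partition named kubernetes-test-partition.

Inside this partition there is a gateway cluster named kubernetes-test-cluster.

We need a separate gateway partition because not every gateway server can connect to the Kubernetes services.

Go to the kubernetes-test-cluster cluster.

A gateway server is already defined in this gateway cluster. We need to make sure that this gateway server can connect to the Kubernetes cluster.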
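One way to check this is to send a request from the gateway server's host directly to a backend address in the cluster. This sketch assumes the upstream servers will be pod addresses and that the gateway host has a network route to them; `check_reachable` is a hypothetical helper, and the address you pass it is a placeholder.

```shell
# Hypothetical helper, run on the gateway server host.
# Prints the HTTP status code returned by TARGET (e.g. a pod IP:port).
# If no connection is made within 3 seconds, curl prints 000 and exits non-zero.
check_reachable() {
  target="${1:?usage: check_reachable IP:PORT}"
  curl -sS -o /dev/null -w '%{http_code}\n' --connect-timeout 3 "http://$target/"
}
```

For example, `check_reachable 10.244.1.5:80` (a made-up pod address) should print an HTTP status code such as `200` if the gateway can reach that pod.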

Adding a Kubernetes Cluster

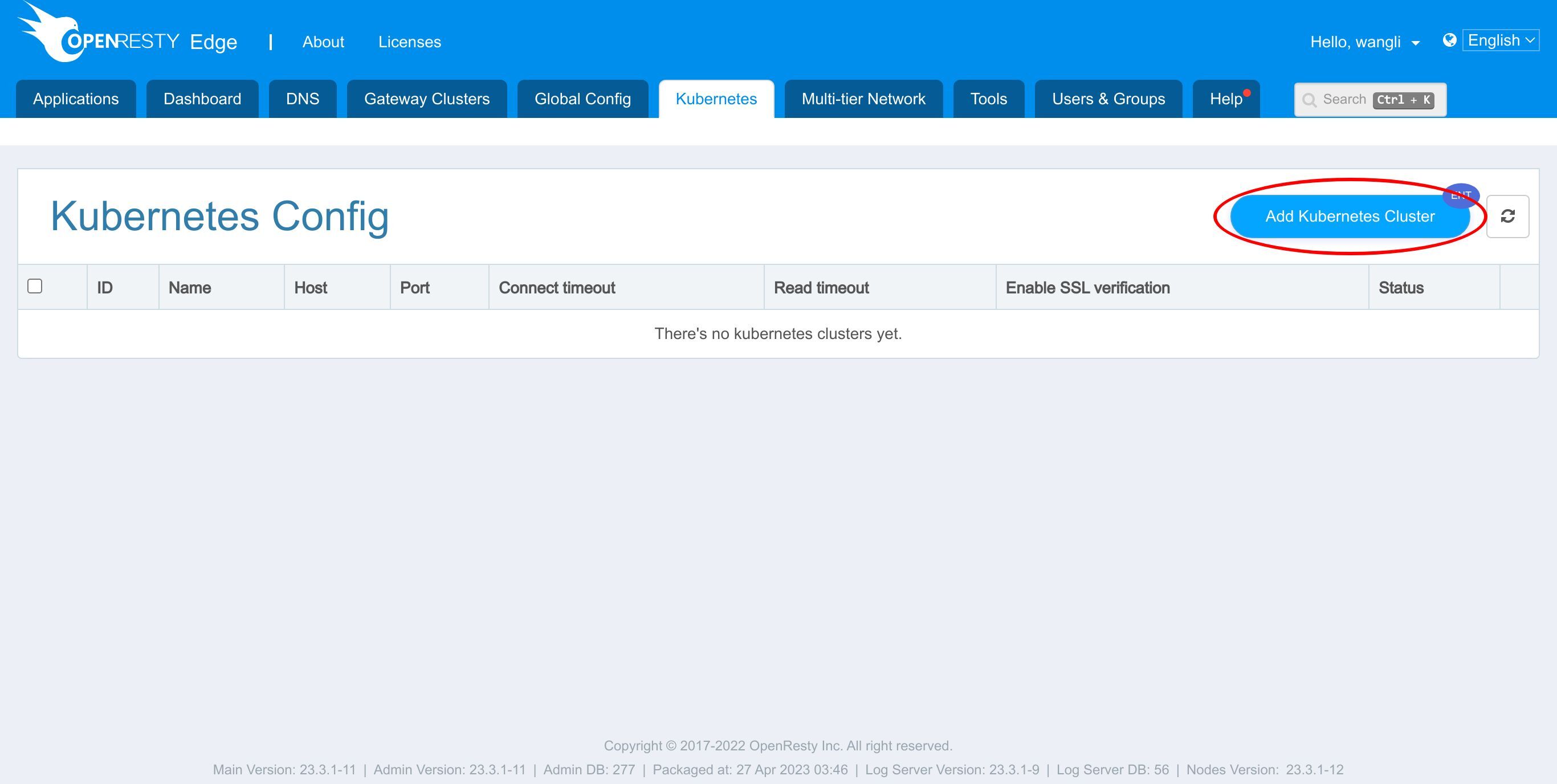

Before we can create a new Kubernetes upstream in an Edge application, we first need to register the Kubernetes cluster in the global scope.

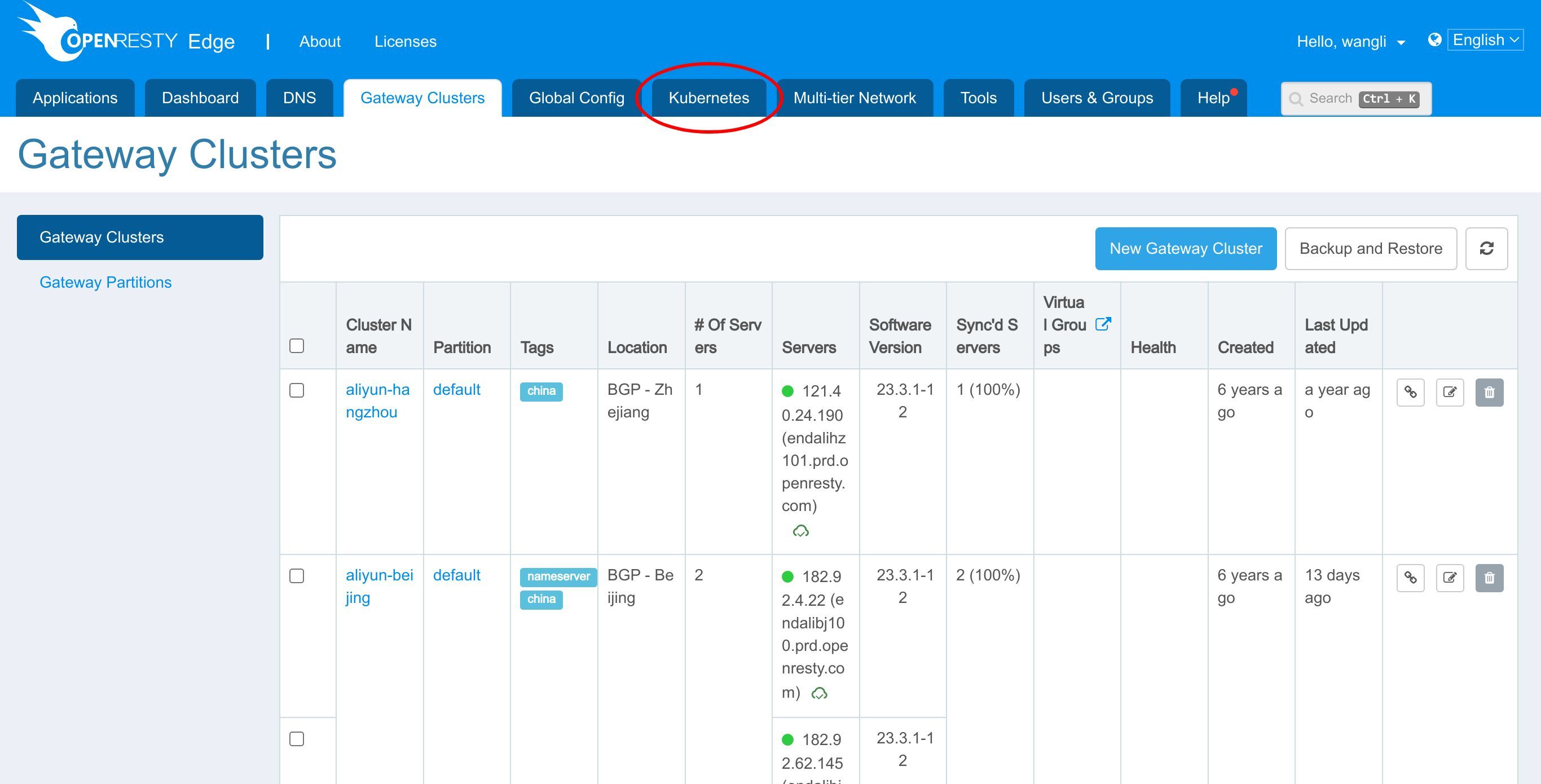

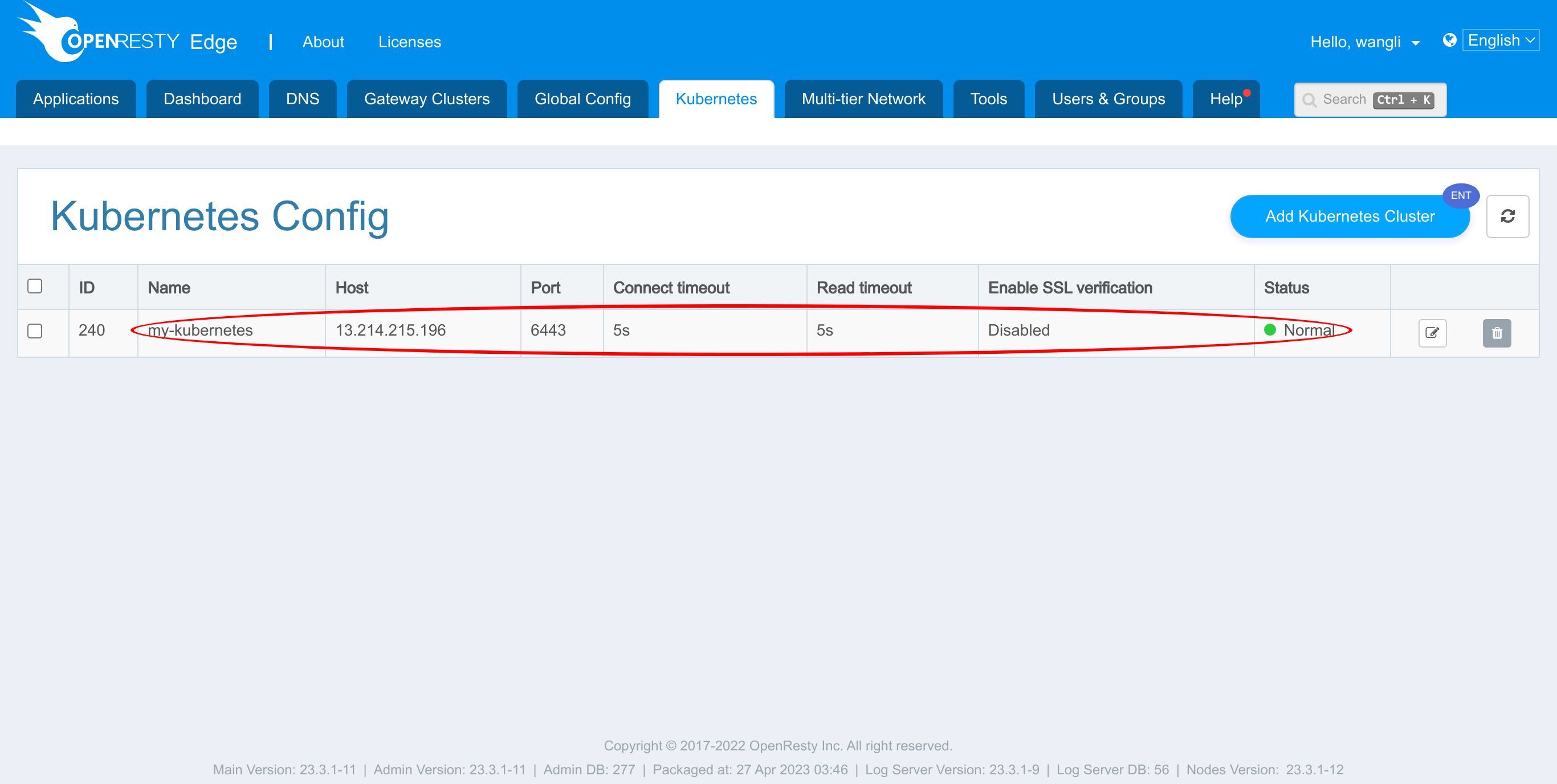

Go to the Kubernetes page.

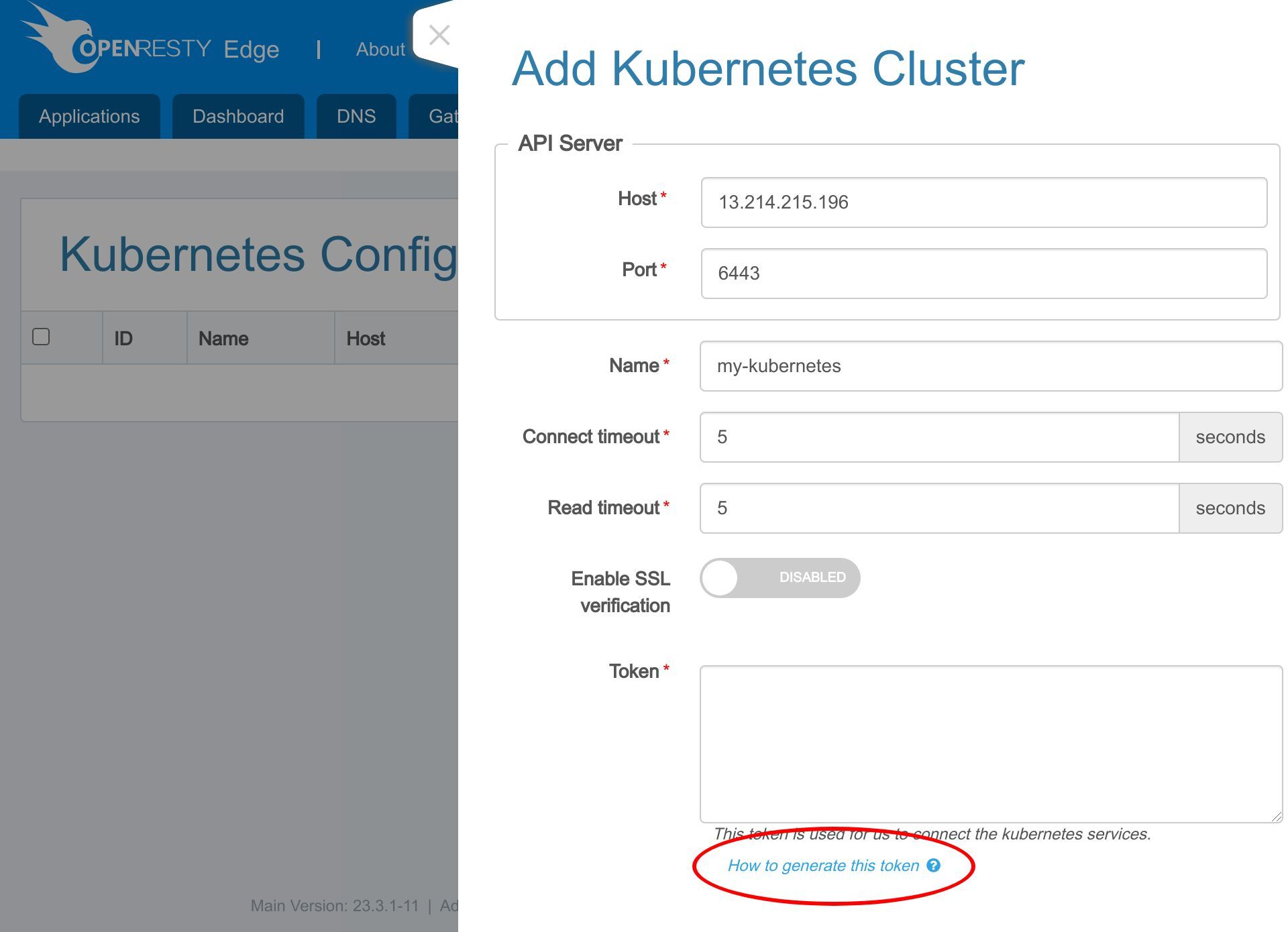

Click this button to add a new Kubernetes cluster.

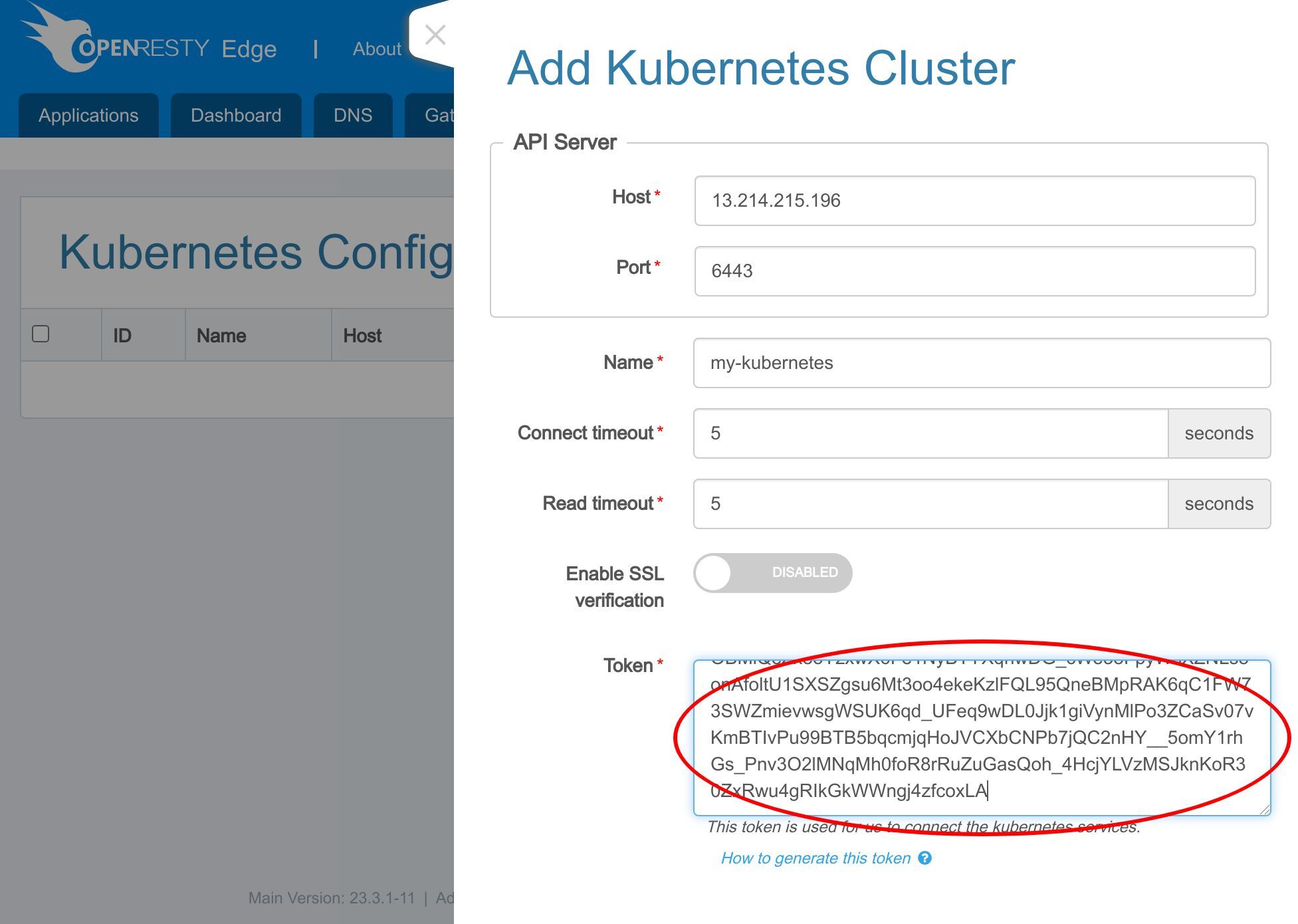

To receive change notifications, the OpenResty Edge Admin must establish a connection to the Kubernetes API server over HTTPS.

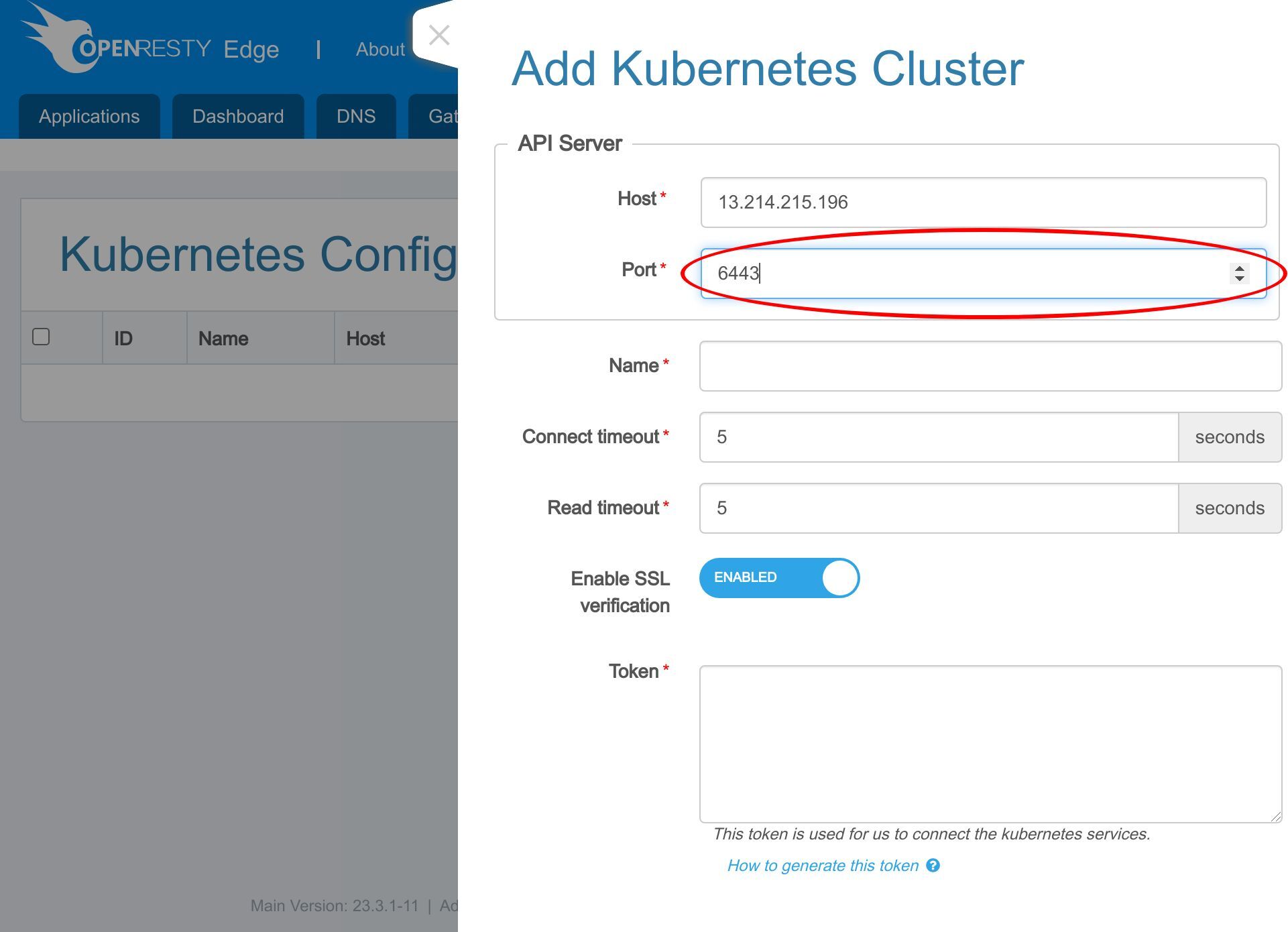

Enter the hostname or IP address of the Kubernetes API server.

Next, enter its port number.

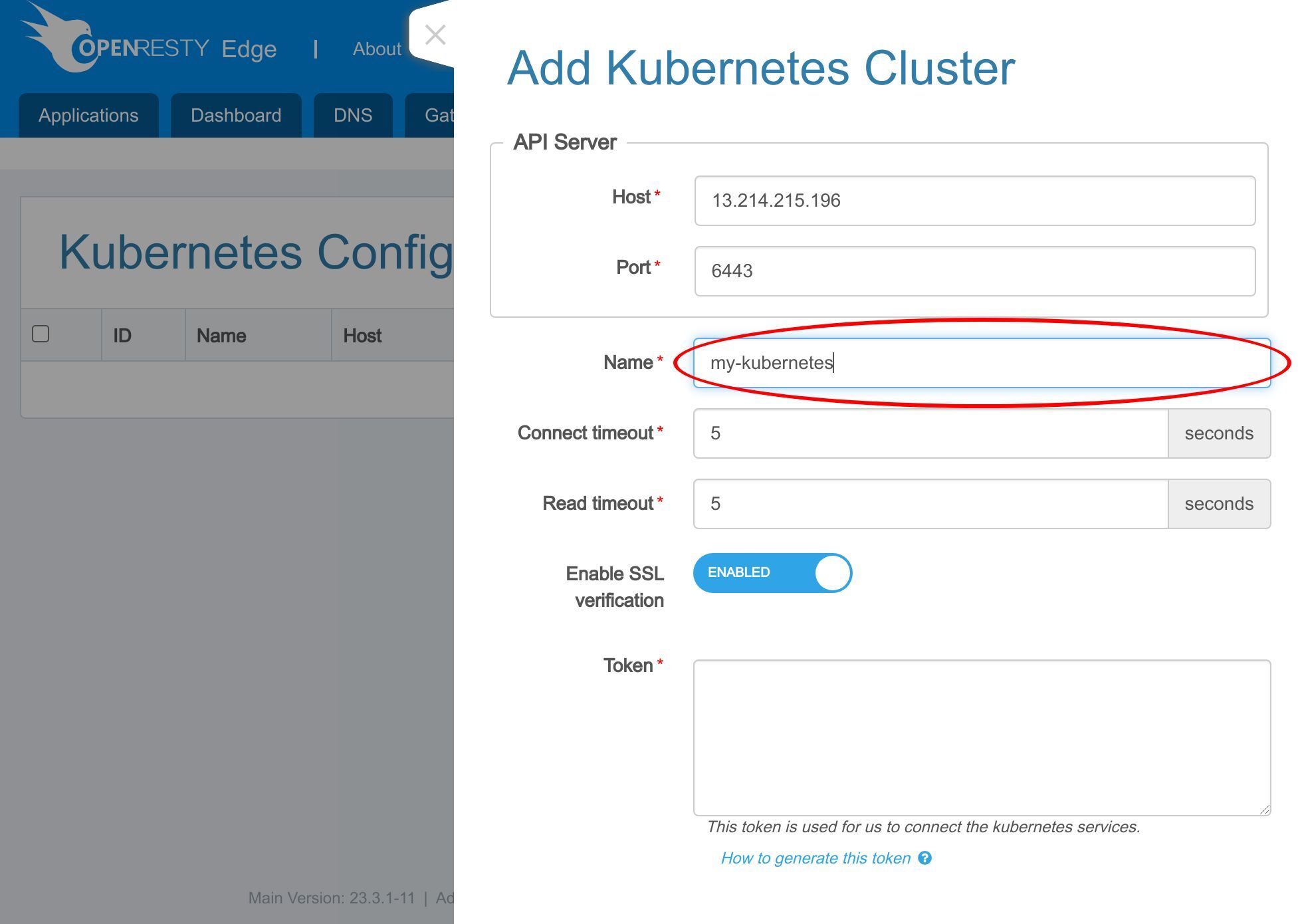

Then enter a name for this Kubernetes cluster.

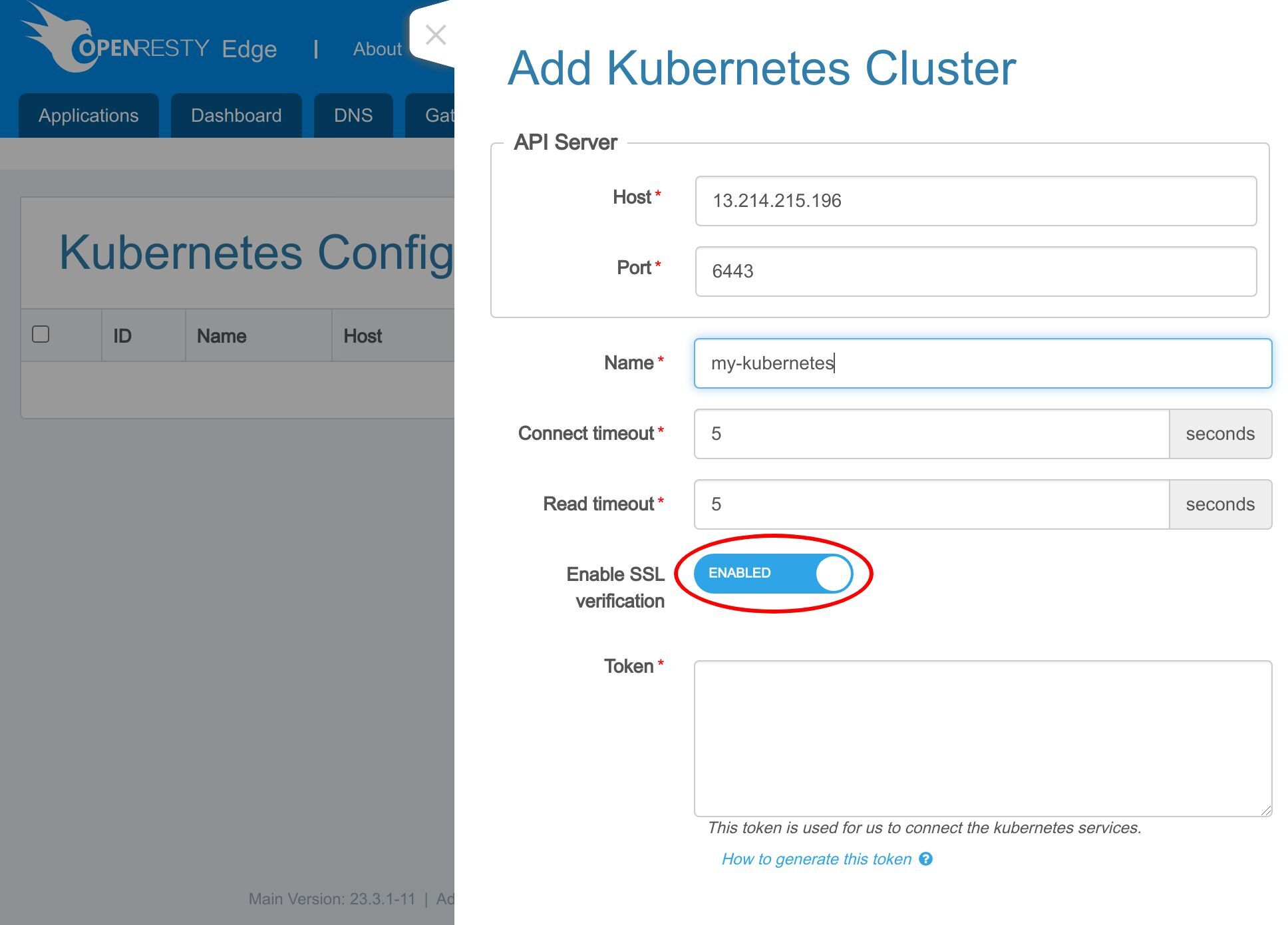

Disable SSL certificate verification, since the certificate of this Kubernetes API server is self-signed.

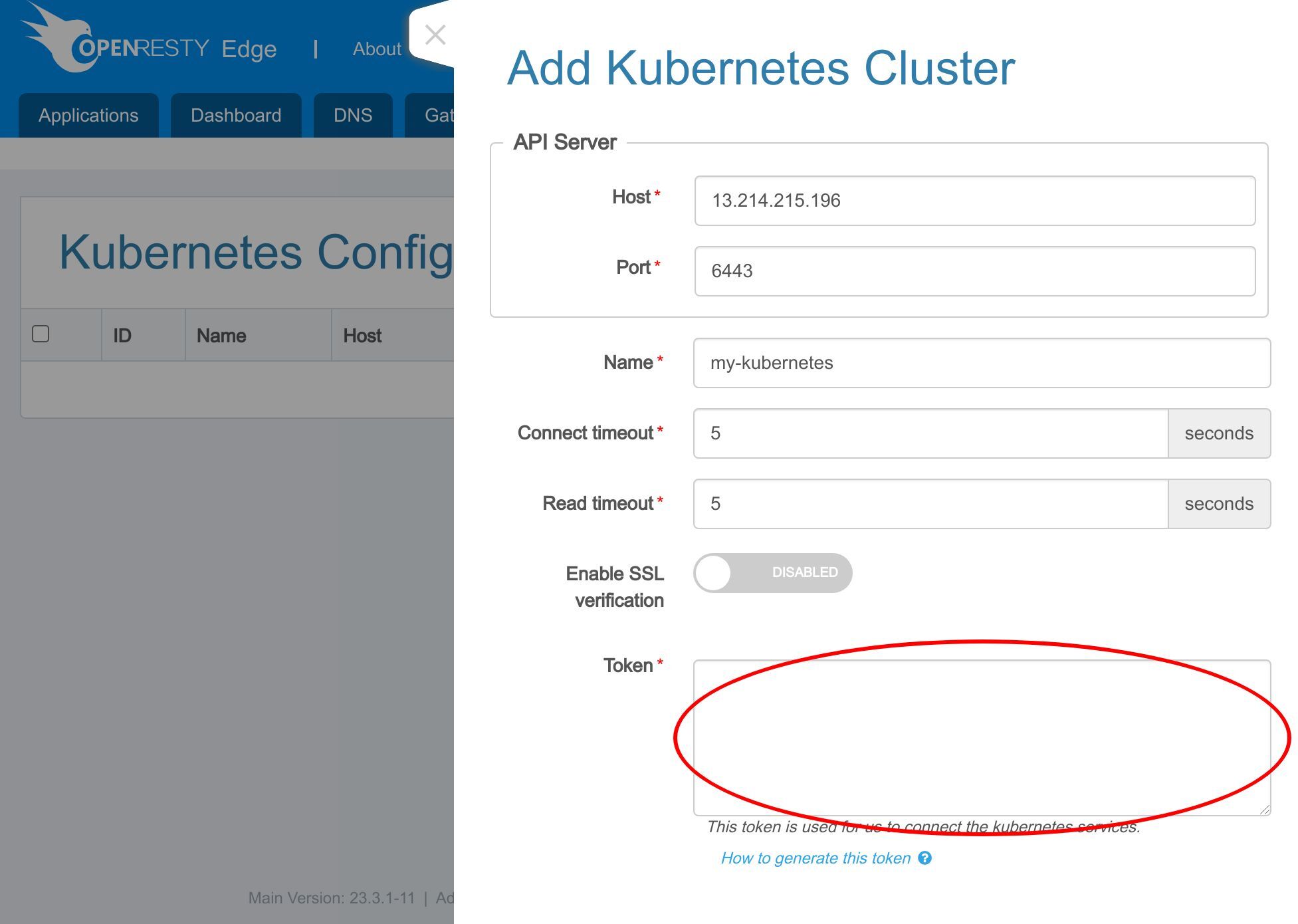

We also need a token to access the Kubernetes API server, and this token must have sufficient permissions.
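Before entering these values in the form, it can help to check them from a shell. The sketch below sends a single read request to the API server with `curl`; `-k` skips certificate verification, mirroring the disabled SSL check above. `K8S_API_HOST`, `K8S_API_PORT`, and `K8S_TOKEN` are placeholder variable names, and 6443 is only a common kube-apiserver default, so your port may differ.

```shell
# Placeholders (hypothetical values): set these for your own cluster.
K8S_API_HOST="${K8S_API_HOST:-k8s-api.example.com}"
K8S_API_PORT="${K8S_API_PORT:-6443}"

# Build the API URL for a given path.
k8s_api_url() {
  printf 'https://%s:%s%s\n' "$K8S_API_HOST" "$K8S_API_PORT" "$1"
}

# List namespaces with the bearer token; -k skips TLS verification,
# matching the self-signed certificate case described above.
check_k8s_api() {
  curl -sS -k \
    -H "Authorization: Bearer ${K8S_TOKEN:?set K8S_TOKEN first}" \
    "$(k8s_api_url /api/v1/namespaces)"
}
```

With a valid token, `check_k8s_api` returns a JSON `NamespaceList`; a 401 response points to an invalid token, and a 403 to missing permissions.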

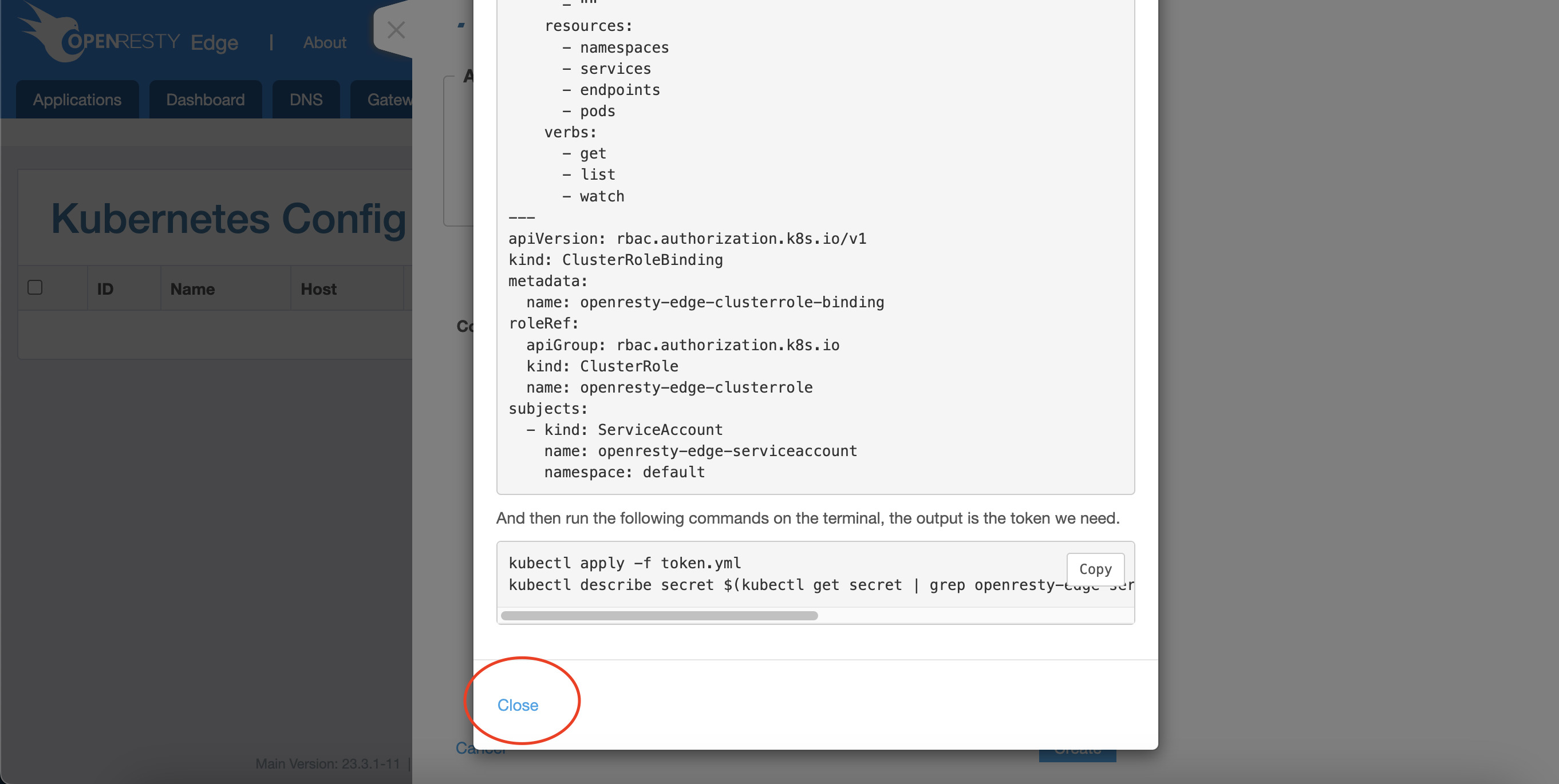

Click the “How to generate this token” link to open a popup.

It explains how to generate a token from your own Kubernetes deployment. Let's walk through the process.

Close this popup.

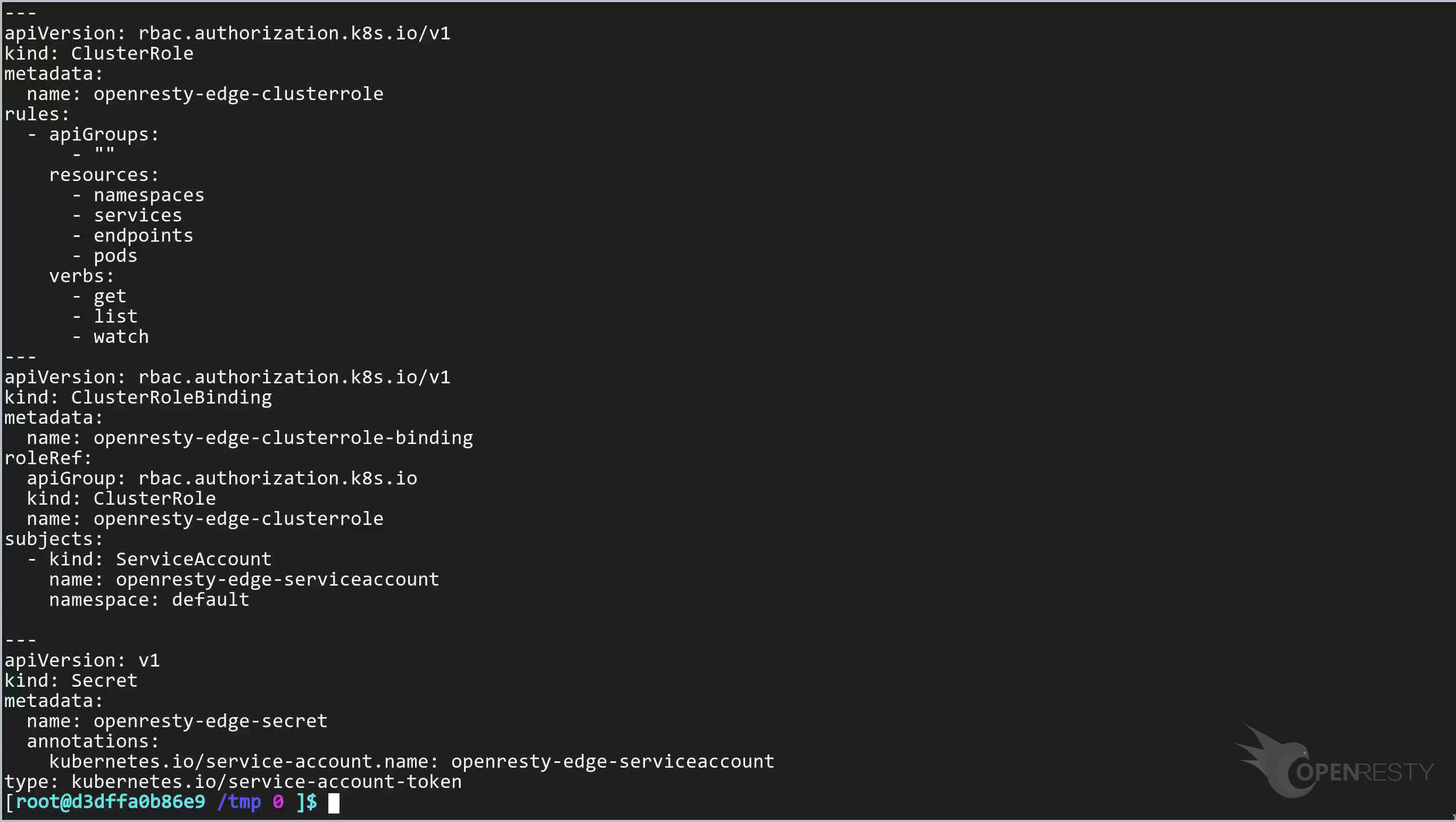

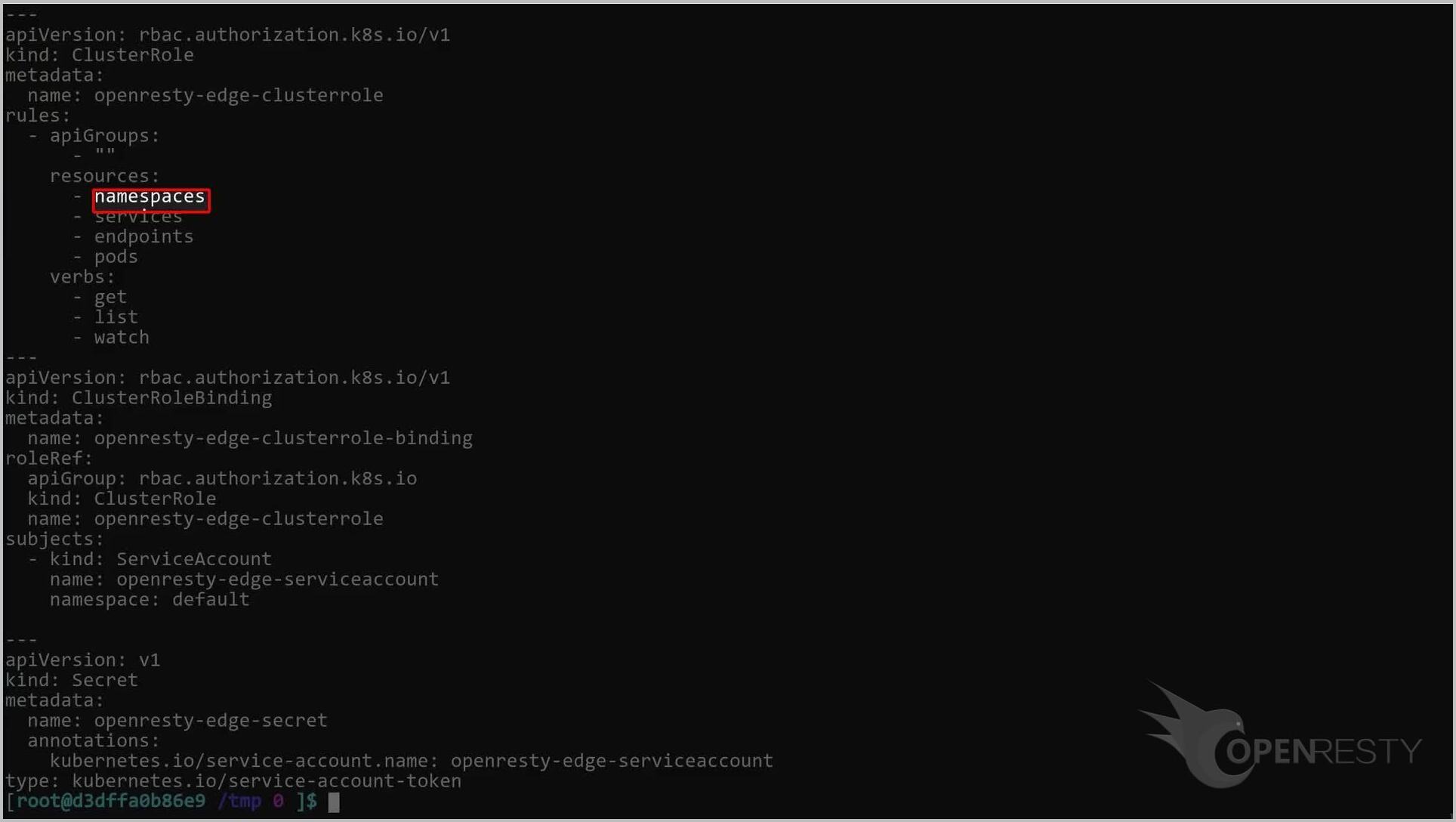

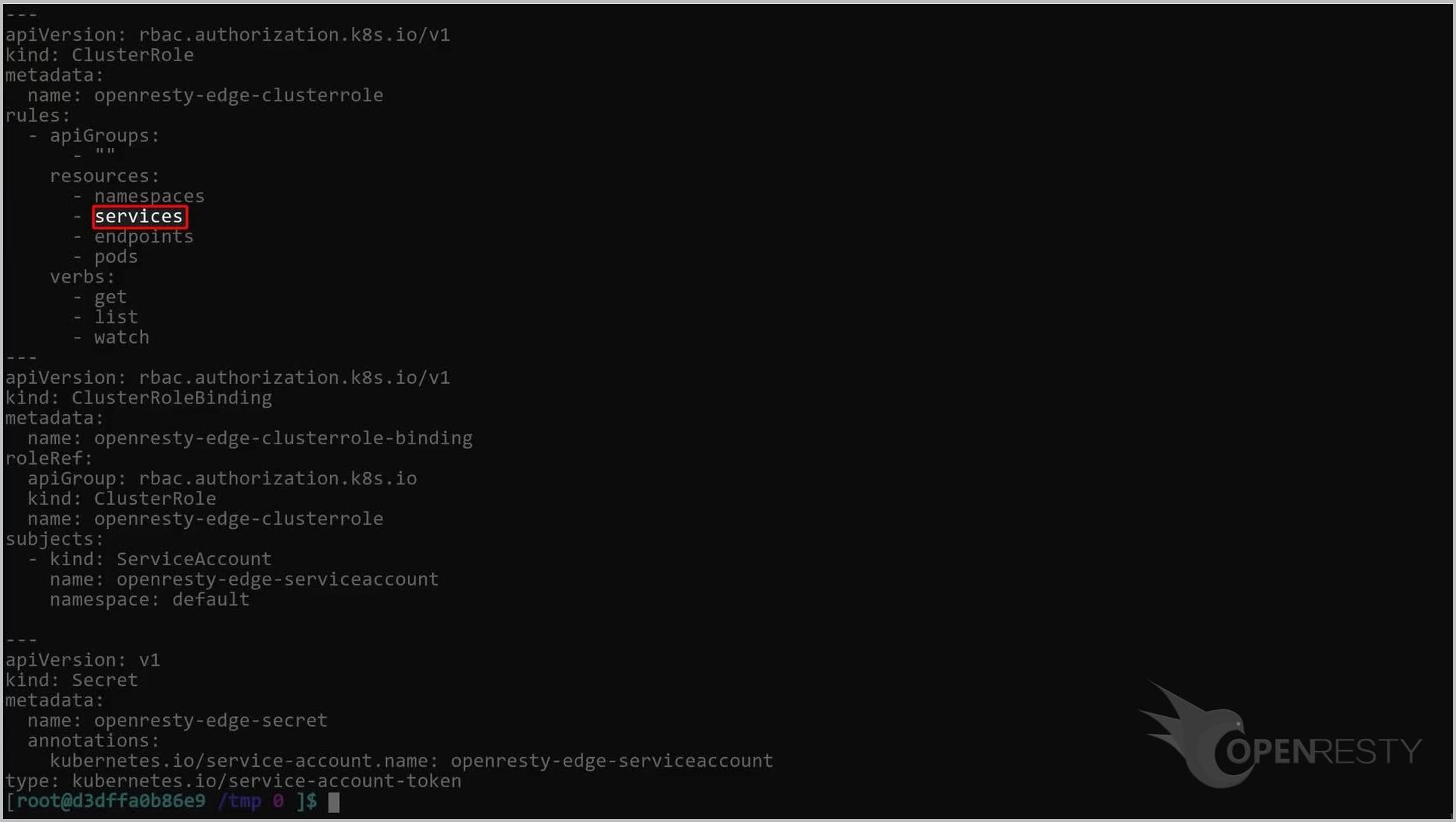

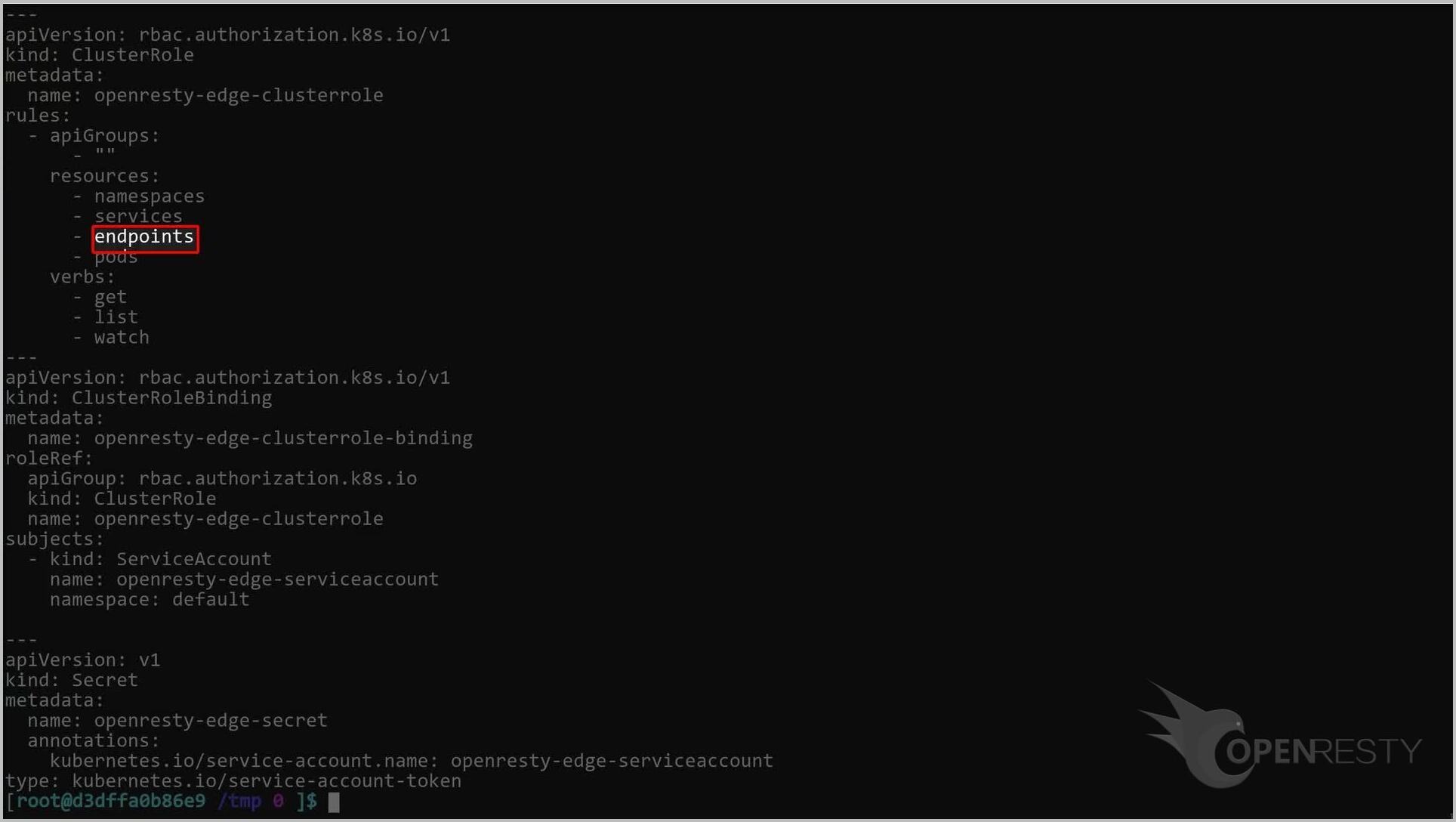

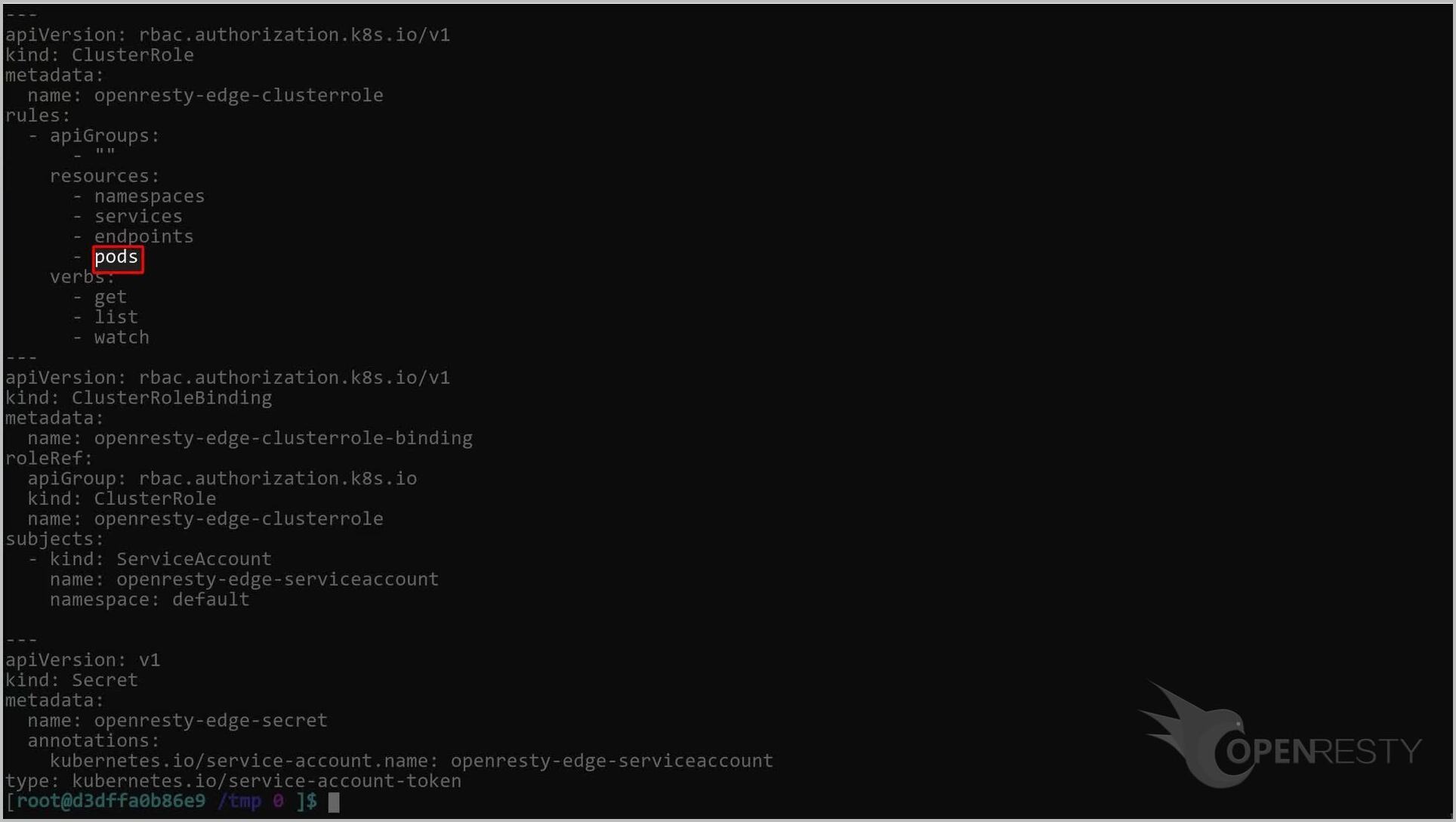

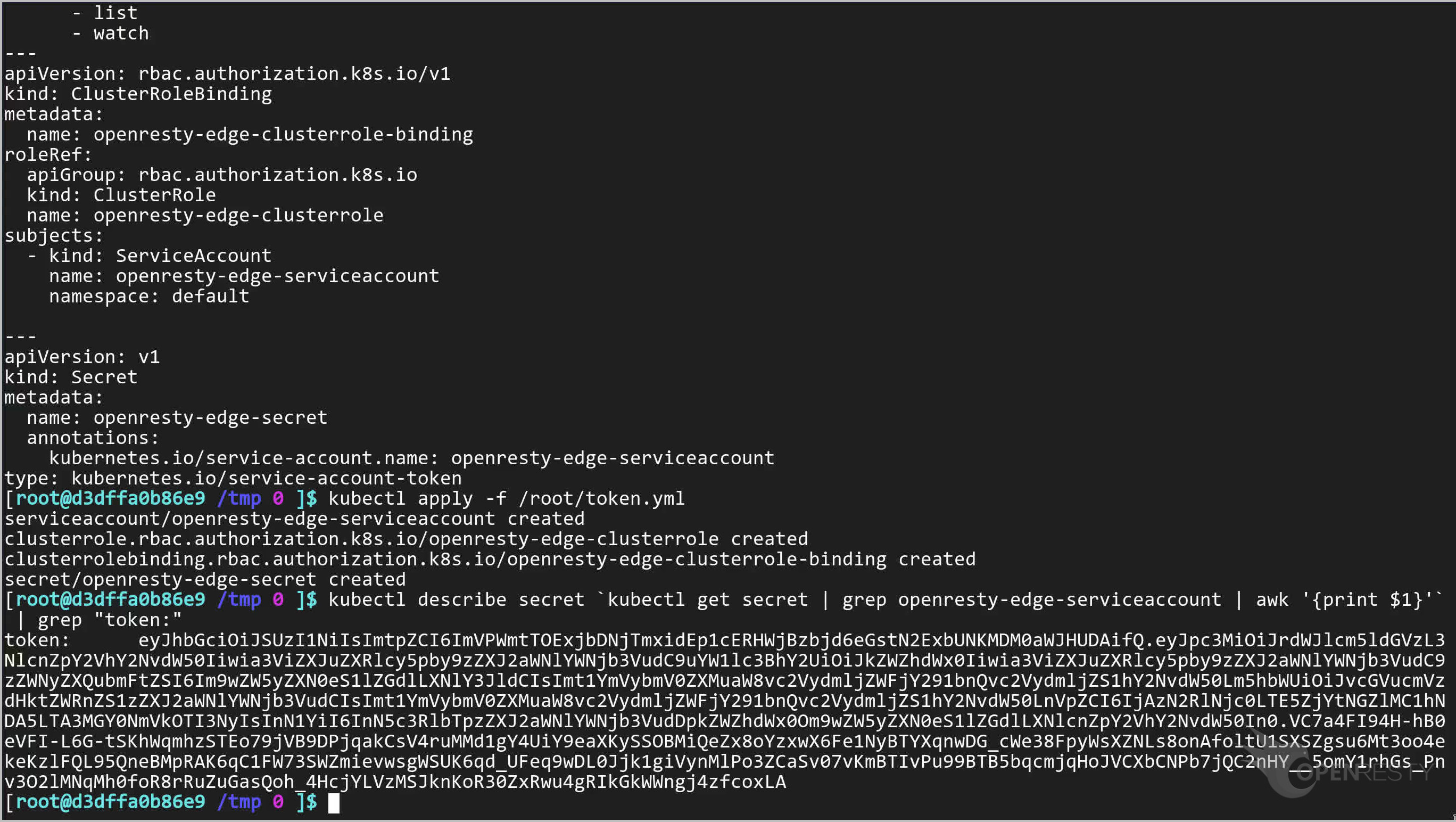

We need to prepare a configuration file named token.yml; a sample of this file is shown below. We use it to create an account with read permission on Namespace, Service, Endpoints, and Pod objects.
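The sample file itself is not reproduced in the text here, so the following is a hedged reconstruction of what such a manifest typically contains, not a copy of the original. It defines a ServiceAccount, a ClusterRole granting `get`, `list`, and `watch` on namespaces, services, endpoints, and pods, a ClusterRoleBinding, and a Secret of type `kubernetes.io/service-account-token` (since Kubernetes 1.24, such token Secrets are no longer generated automatically for ServiceAccounts). The name `edge-reader` and the `default` namespace are assumptions. The snippet writes `token.yml` to the current directory, whereas the tutorial's command uses `/root/token.yml`.

```shell
# Write a read-only RBAC manifest; all names here are hypothetical.
cat > token.yml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: edge-reader
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: edge-reader
rules:
  - apiGroups: [""]
    resources: ["namespaces", "services", "endpoints", "pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: edge-reader
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edge-reader
subjects:
  - kind: ServiceAccount
    name: edge-reader
    namespace: default
---
apiVersion: v1
kind: Secret
metadata:
  name: edge-reader-token
  namespace: default
  annotations:
    kubernetes.io/service-account.name: edge-reader
type: kubernetes.io/service-account-token
EOF
```

The `watch` verb matters here: it is what allows a client to subscribe to changes instead of polling.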

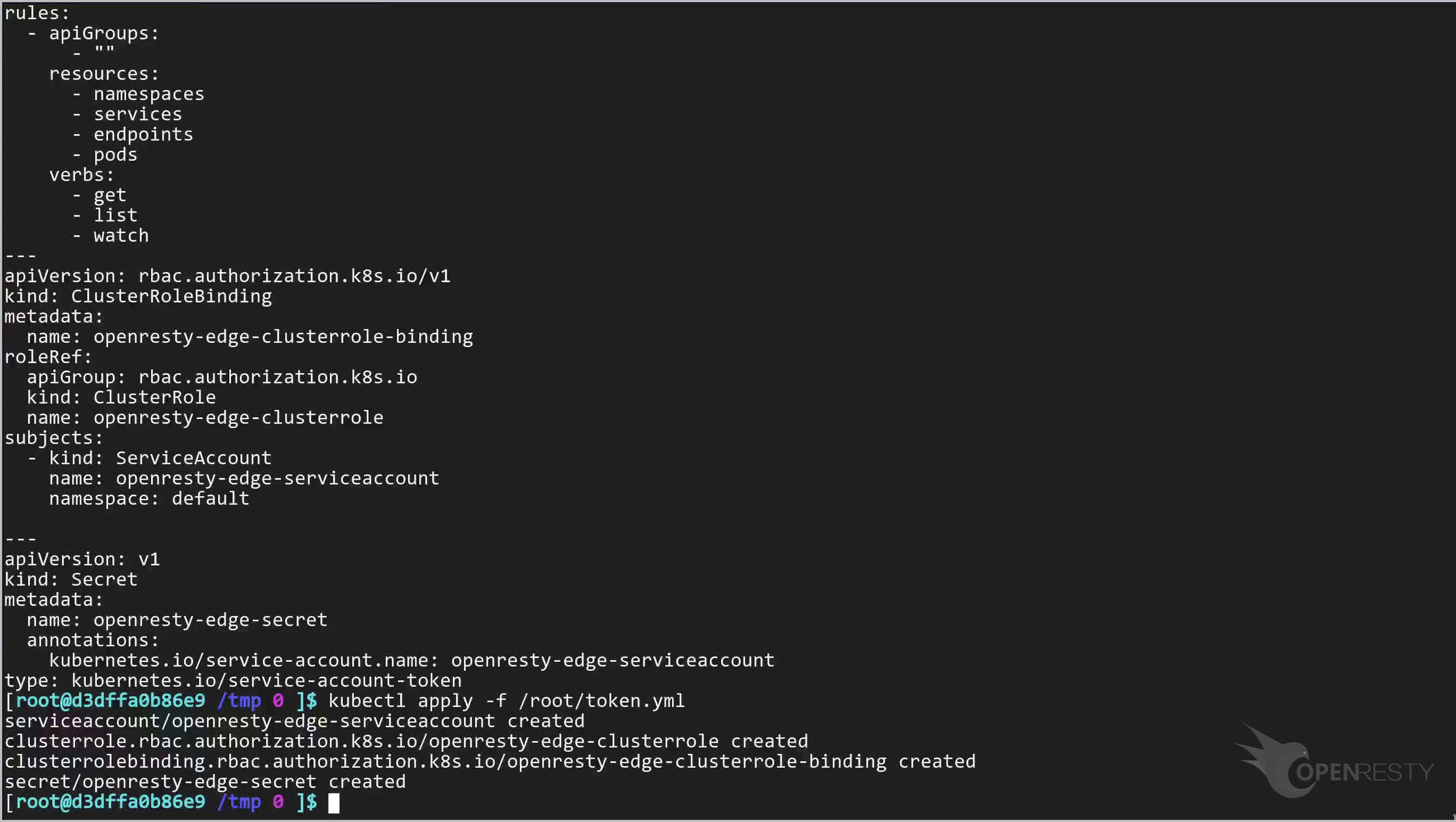

Create the account with the following command:

kubectl apply -f /root/token.yml

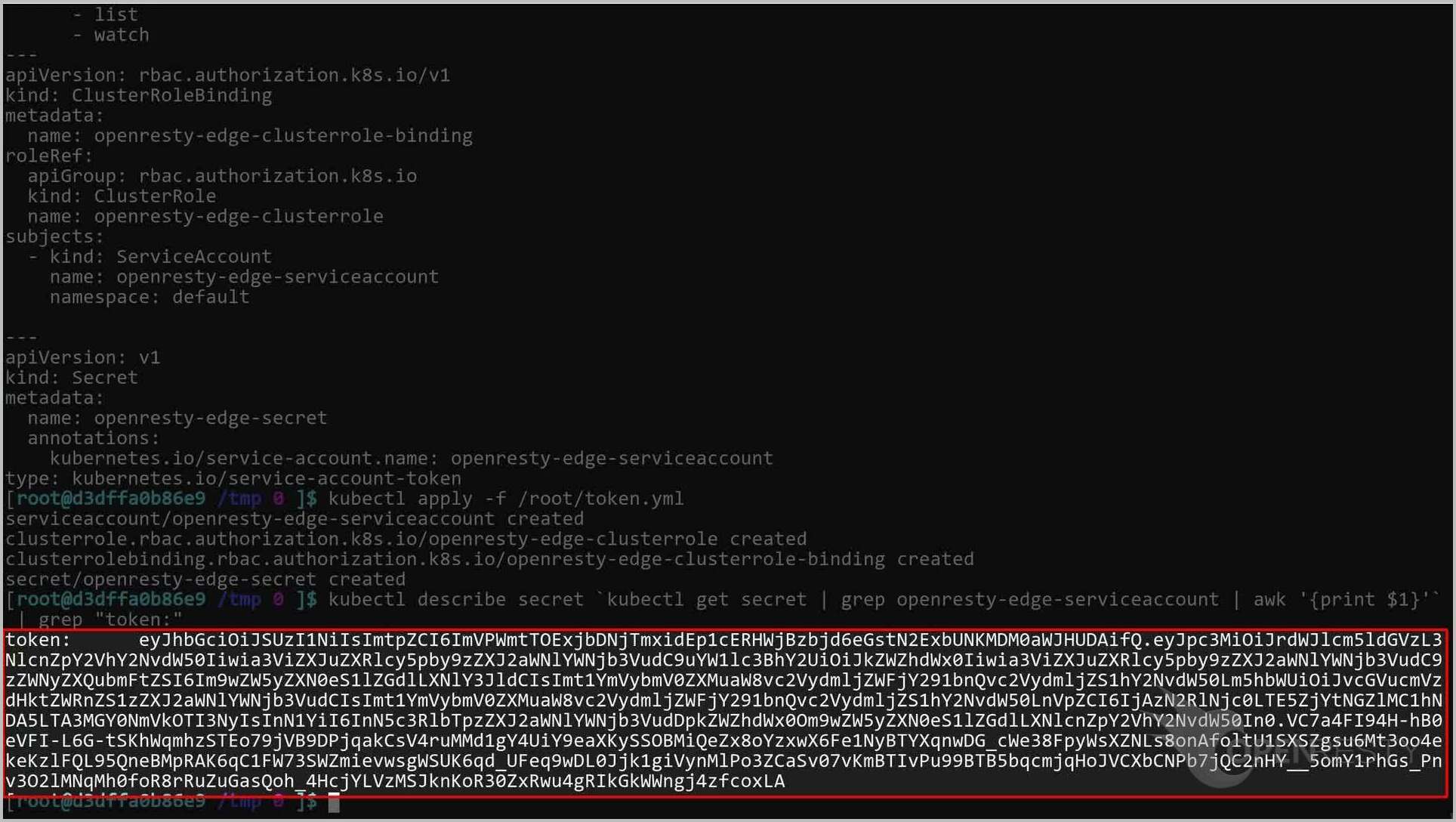

Finally, retrieve the account's token with the following command.

The text after “token:” is the token we need.

We now have a token for accessing the Kubernetes API server.
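The exact command from the demo is not given in the text. Assuming the token lives in a Secret of type `kubernetes.io/service-account-token` named `edge-reader-token` in the `default` namespace (hypothetical names), either approach below should show it: `kubectl describe secret` prints a `token:` line, while `jsonpath` plus `base64 -d` extracts the value directly. `extract_token` is a hypothetical helper that picks out the value after `token:`.

```shell
# Hypothetical Secret name/namespace; adjust them to your manifest.
SECRET_NAME="${SECRET_NAME:-edge-reader-token}"
SECRET_NS="${SECRET_NS:-default}"

# Print the value that follows "token:" in `kubectl describe secret` output.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Option 1: parse the human-readable describe output.
show_token_describe() {
  kubectl describe secret "$SECRET_NAME" -n "$SECRET_NS" | extract_token
}

# Option 2: read the base64-encoded data field and decode it.
show_token_jsonpath() {
  kubectl get secret "$SECRET_NAME" -n "$SECRET_NS" \
    -o jsonpath='{.data.token}' | base64 -d
}
```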

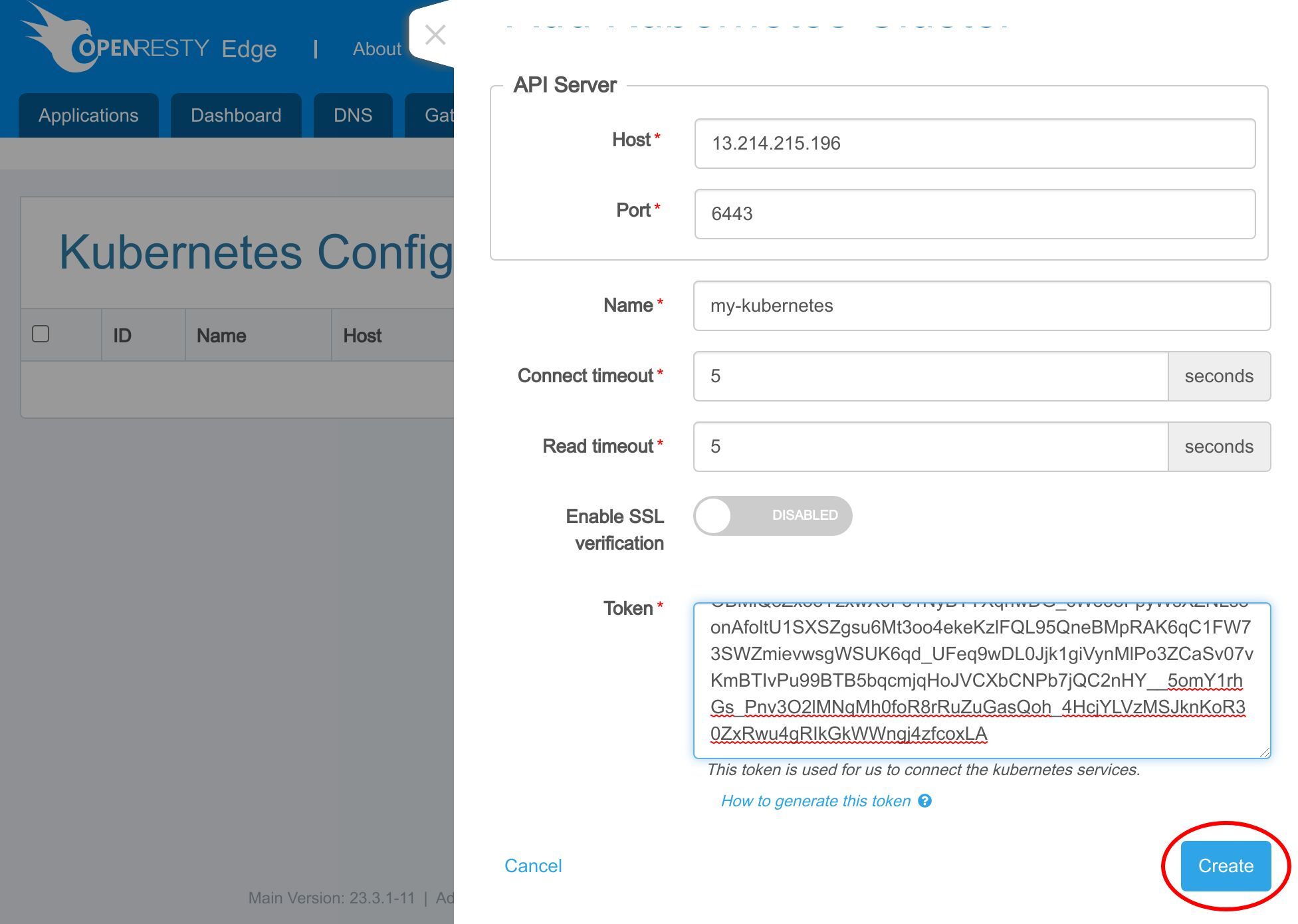

Paste the API token we just generated here.

Click the “Create” button, and we're done.

Creating a Kubernetes Upstream

At this point, we have registered the Kubernetes cluster in the global scope. Next, we will create an upstream in an Edge application that uses this cluster.

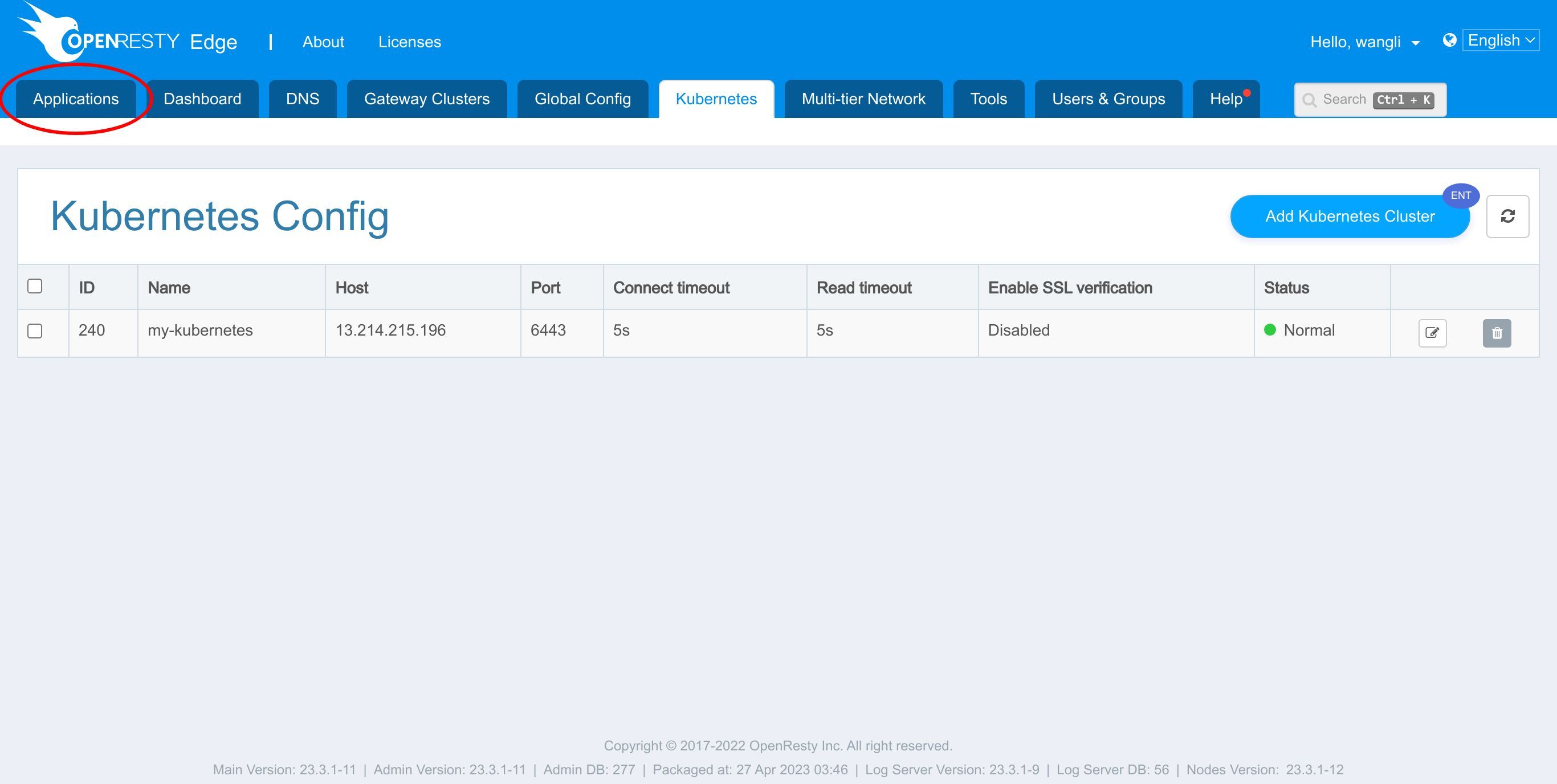

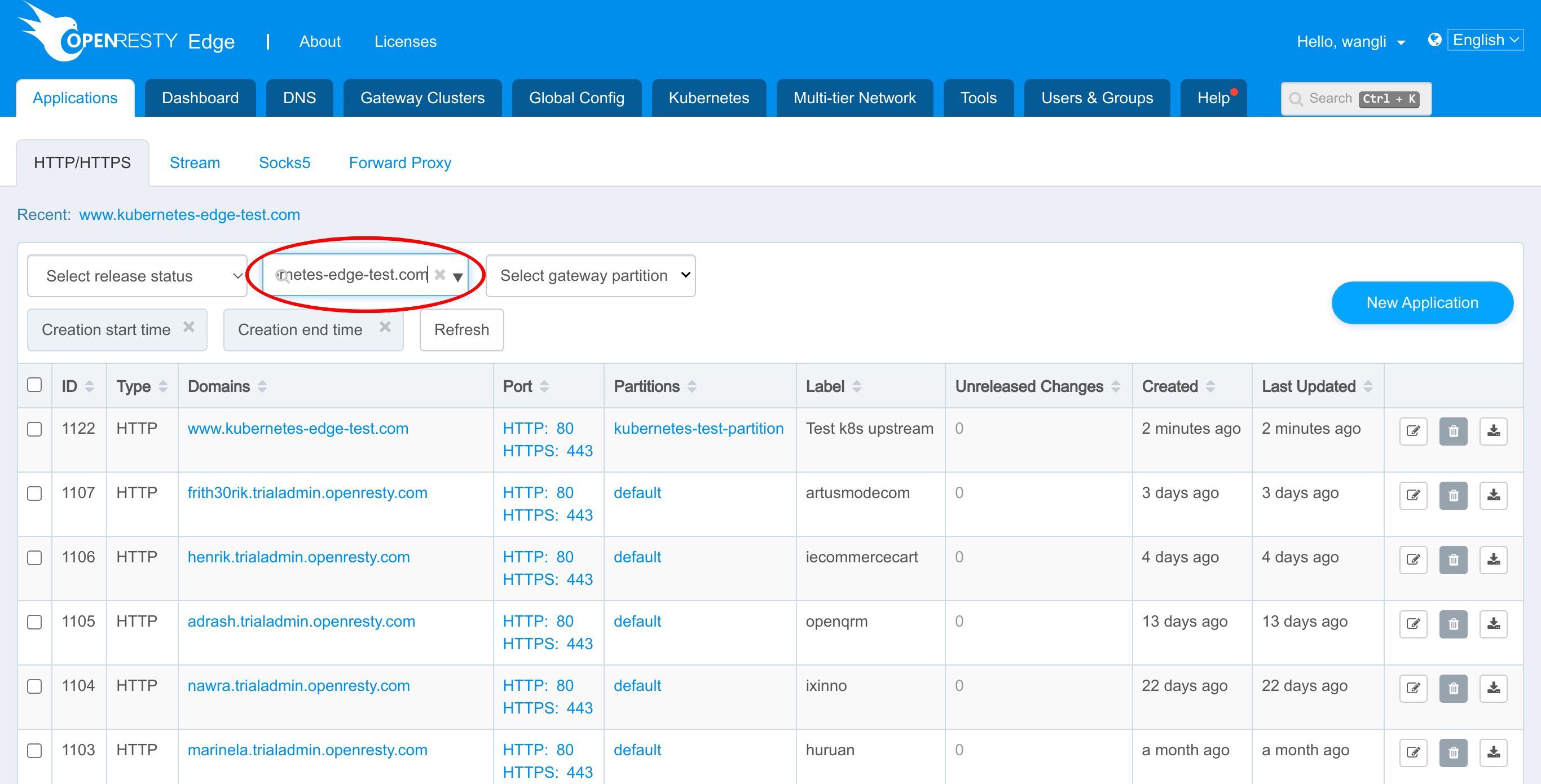

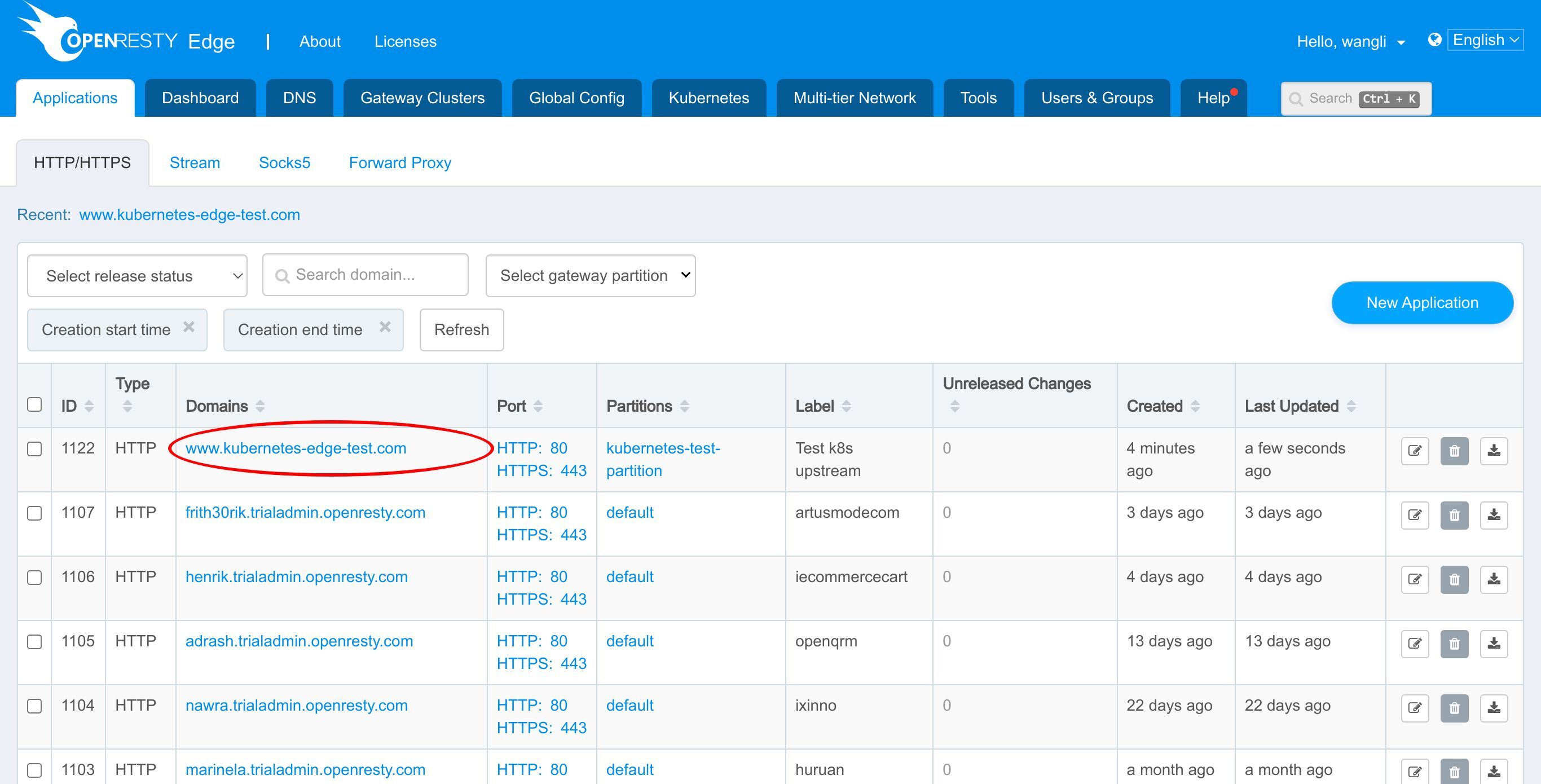

Go to the application list page.

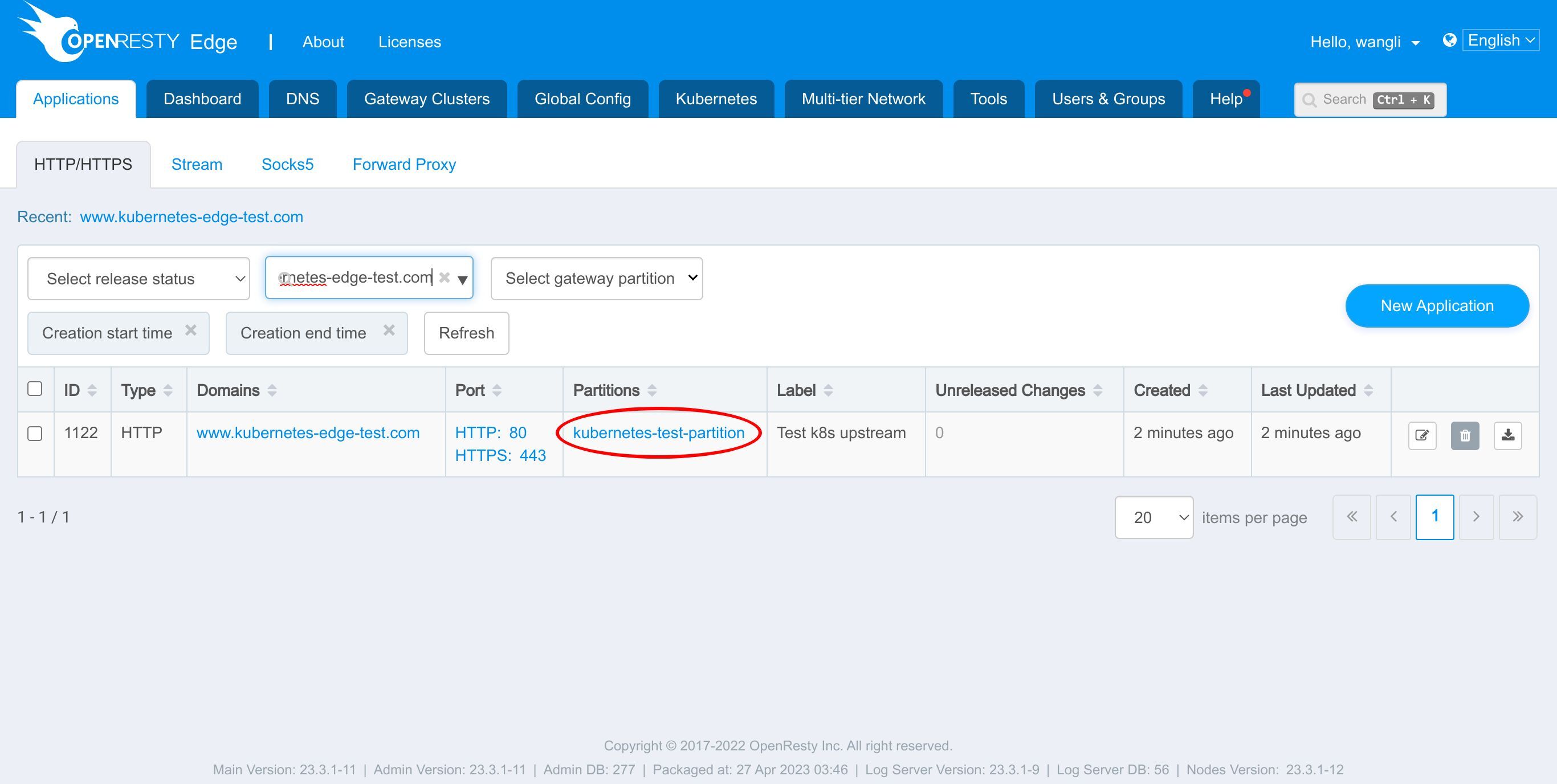

We have already prepared an Edge application named www.kubernetes-edge-test.com.

This application is mapped to the gateway partition we saw earlier.

Let's open this application.

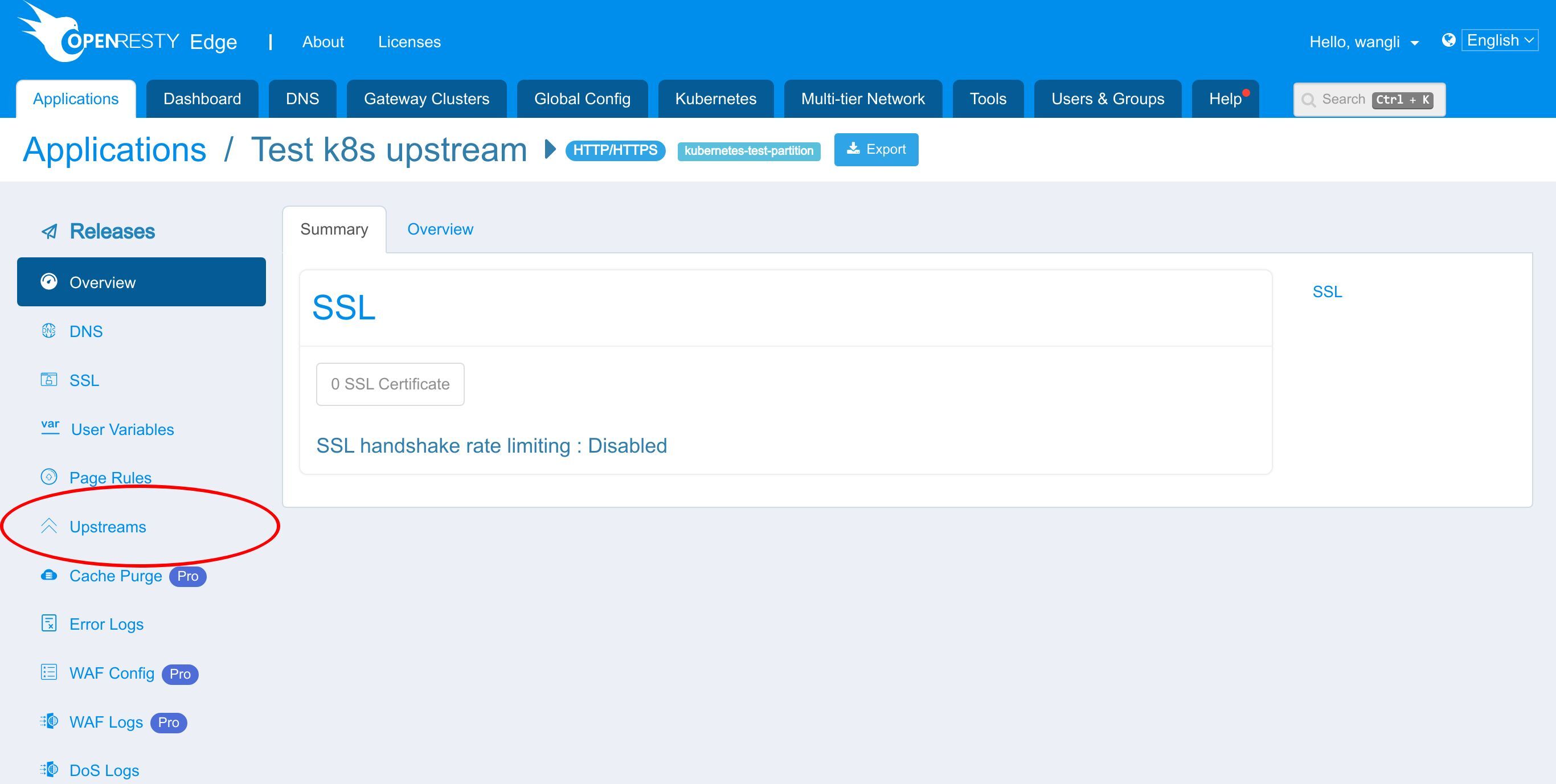

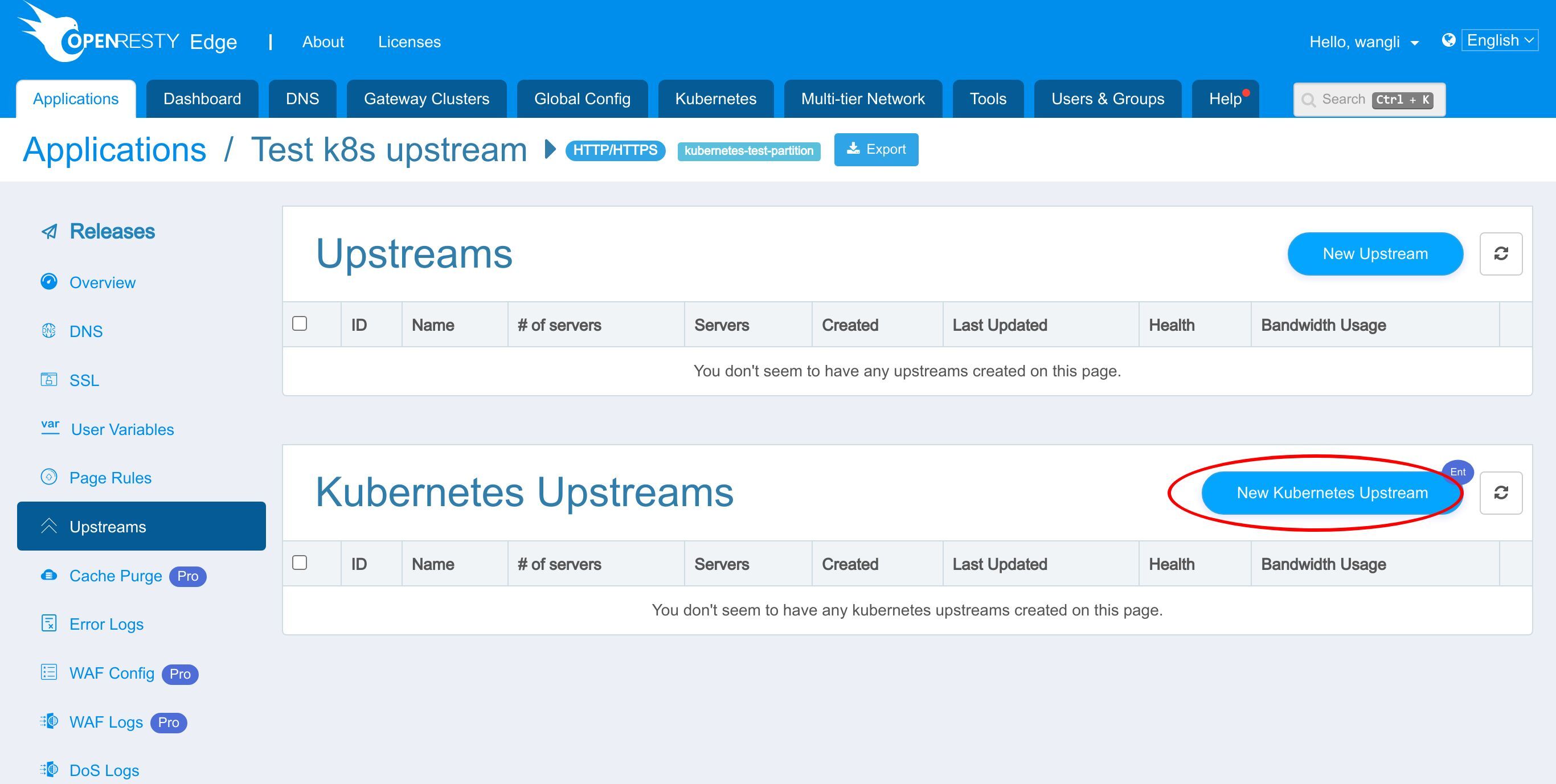

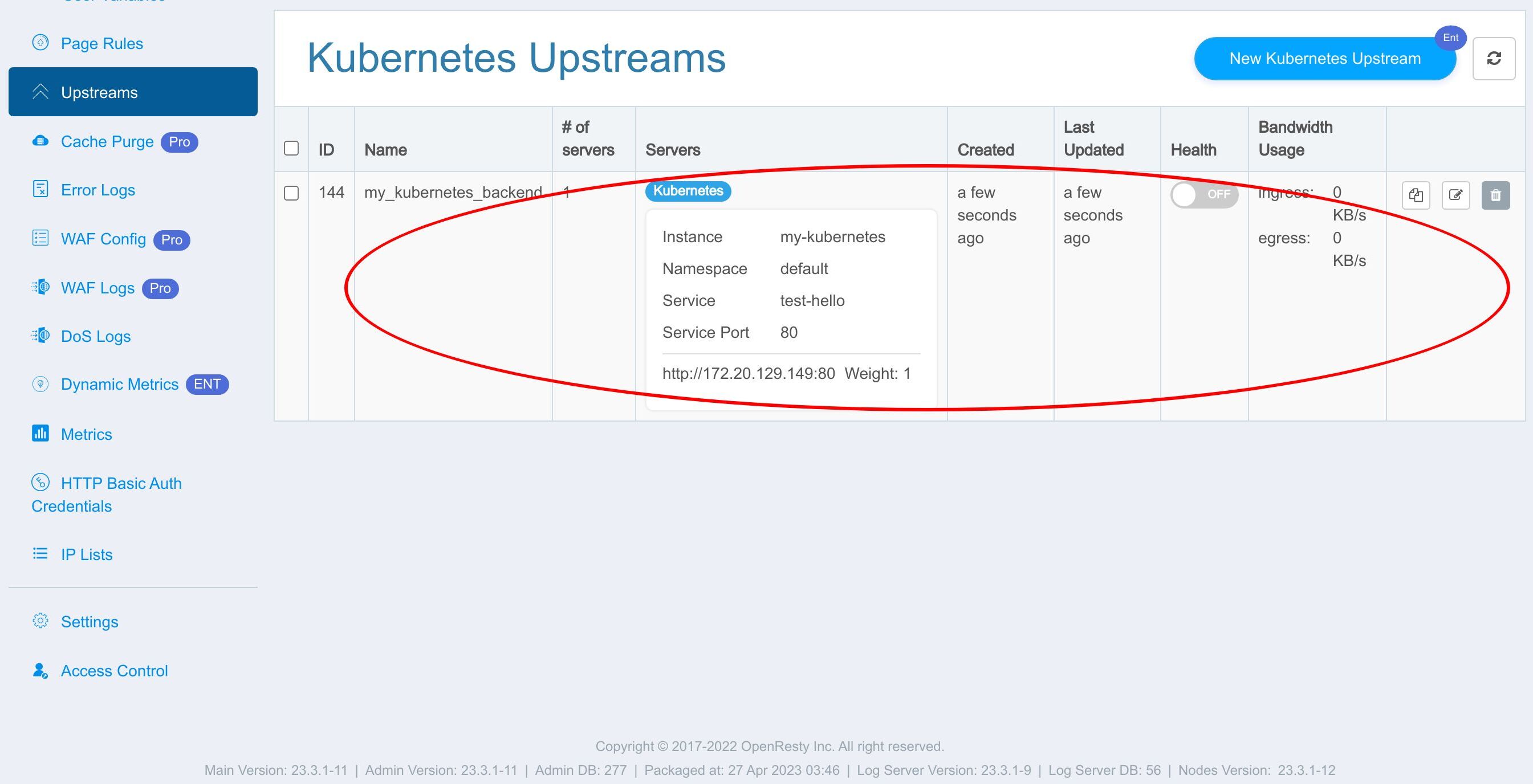

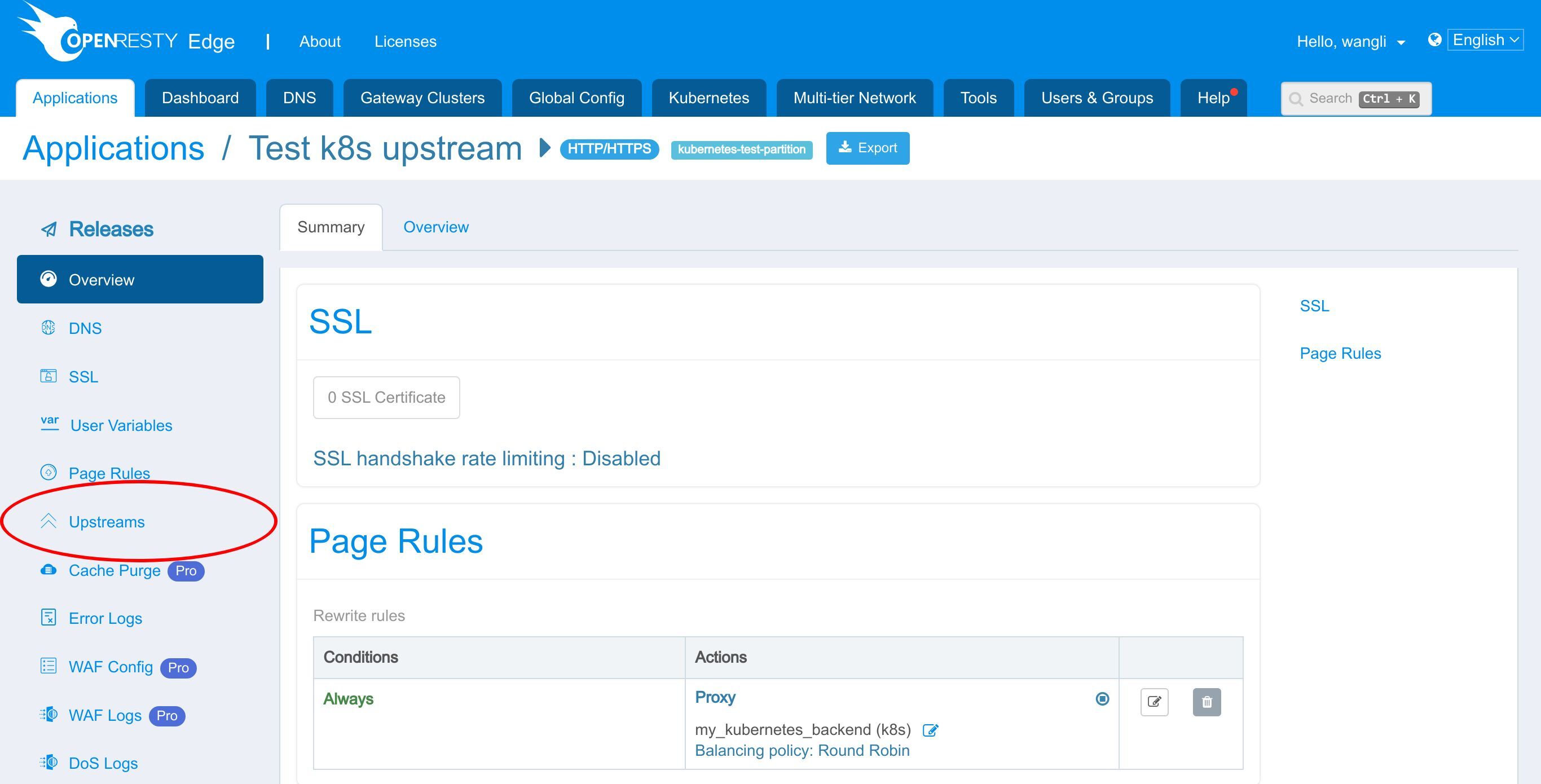

Go to the “Upstreams” page.

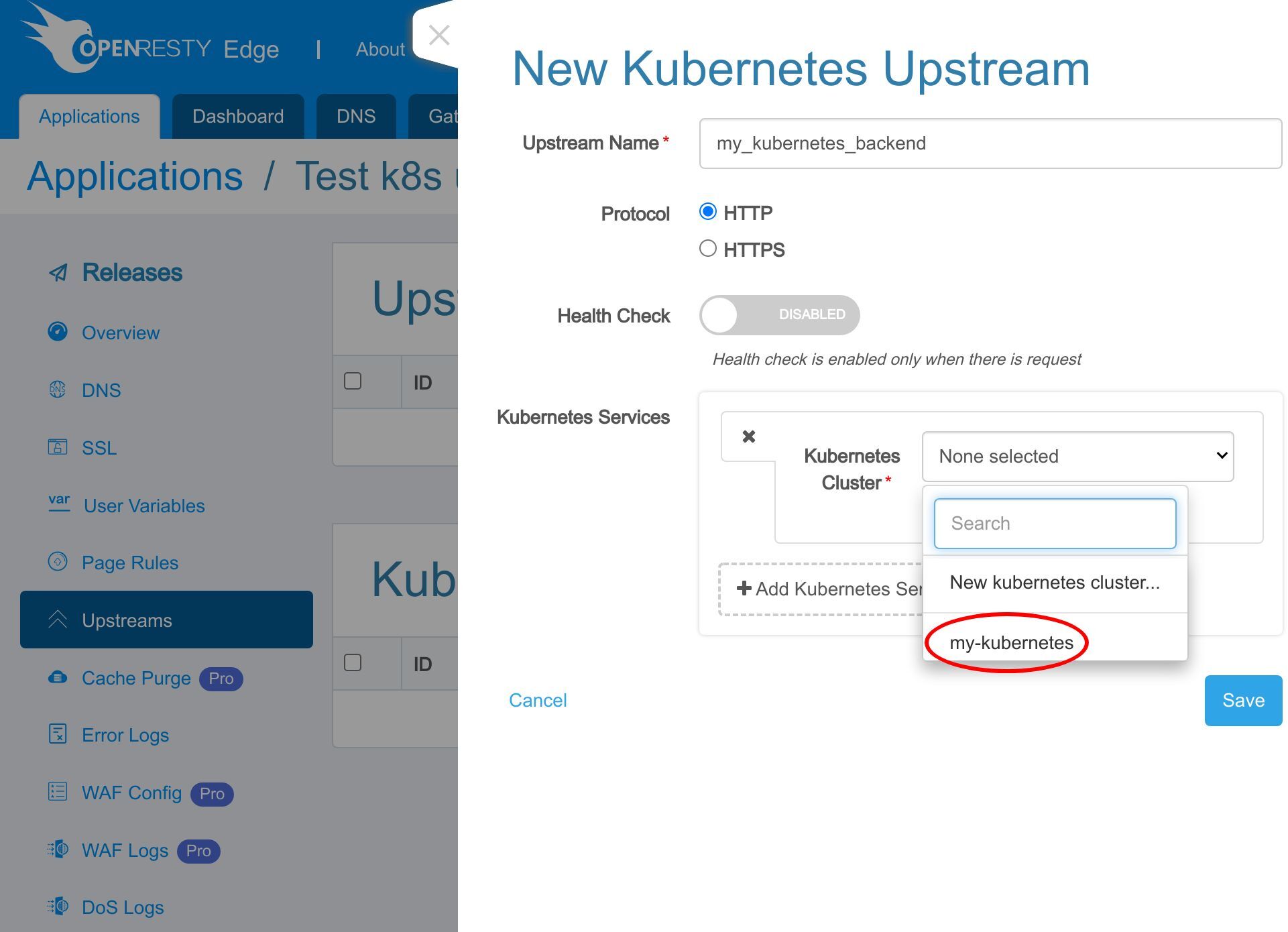

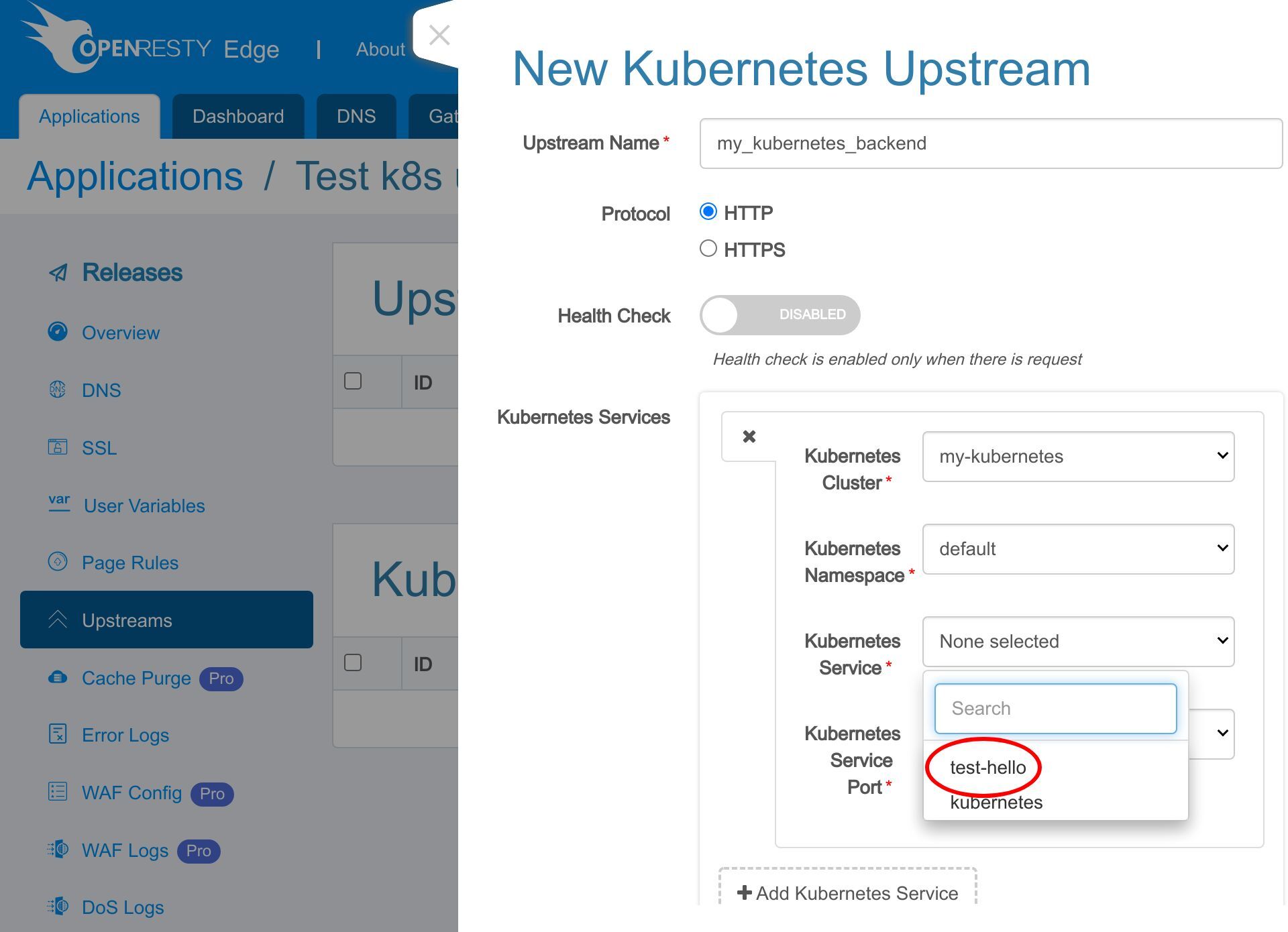

Click the button to create a new Kubernetes upstream.

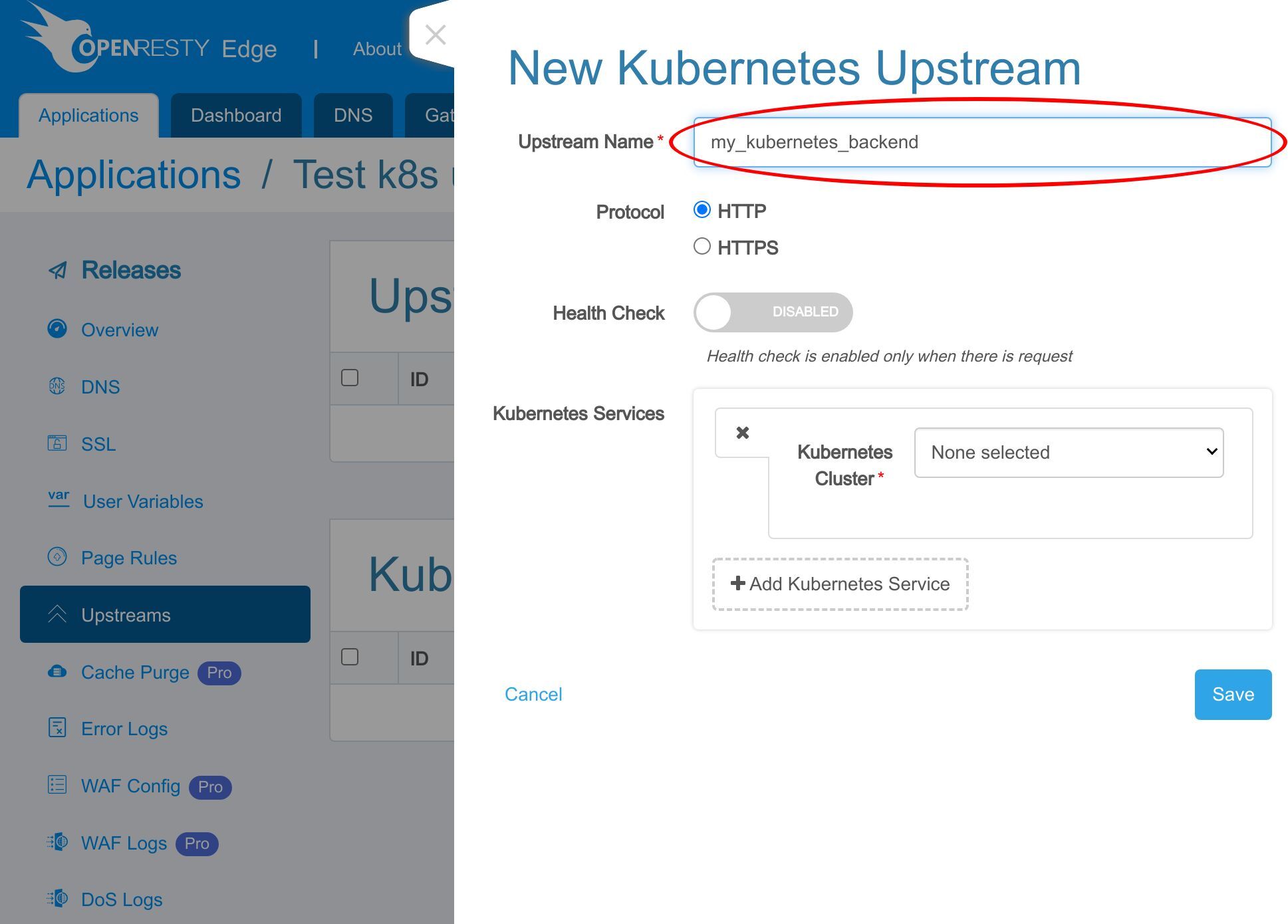

Enter “my kubernetes backend” as the name of the Kubernetes upstream.

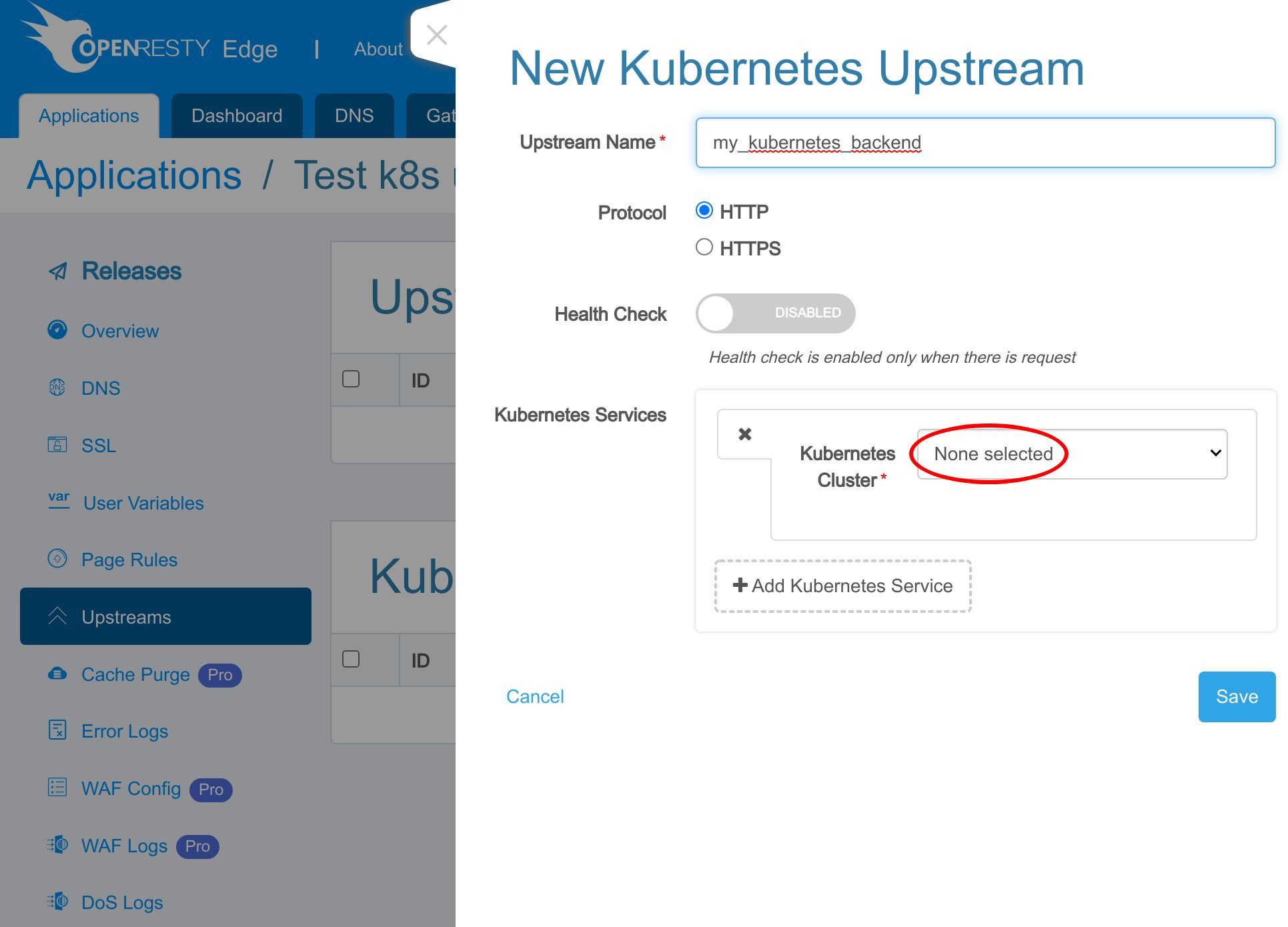

Select the target Kubernetes cluster.

Choose the Kubernetes cluster we added earlier.

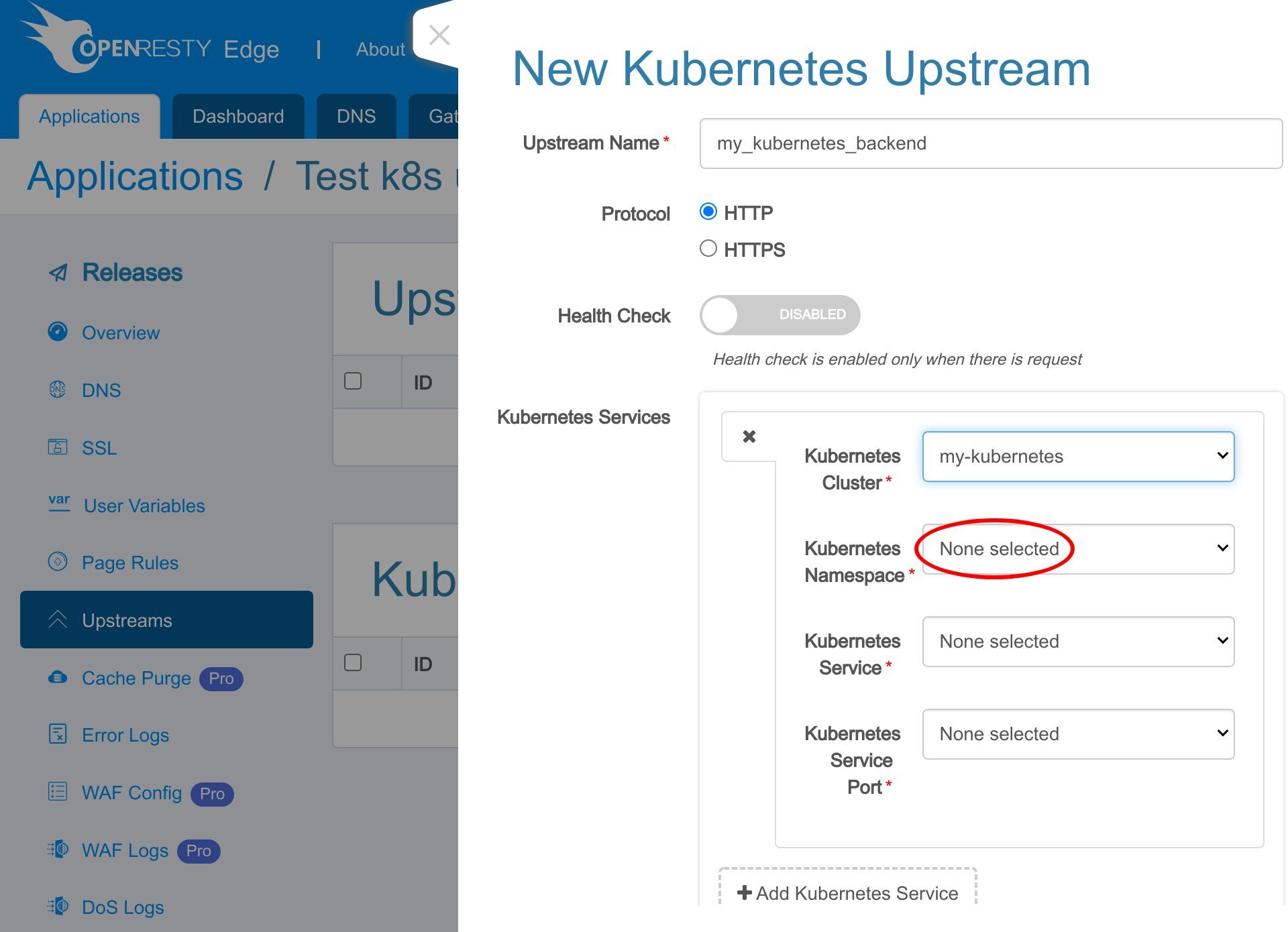

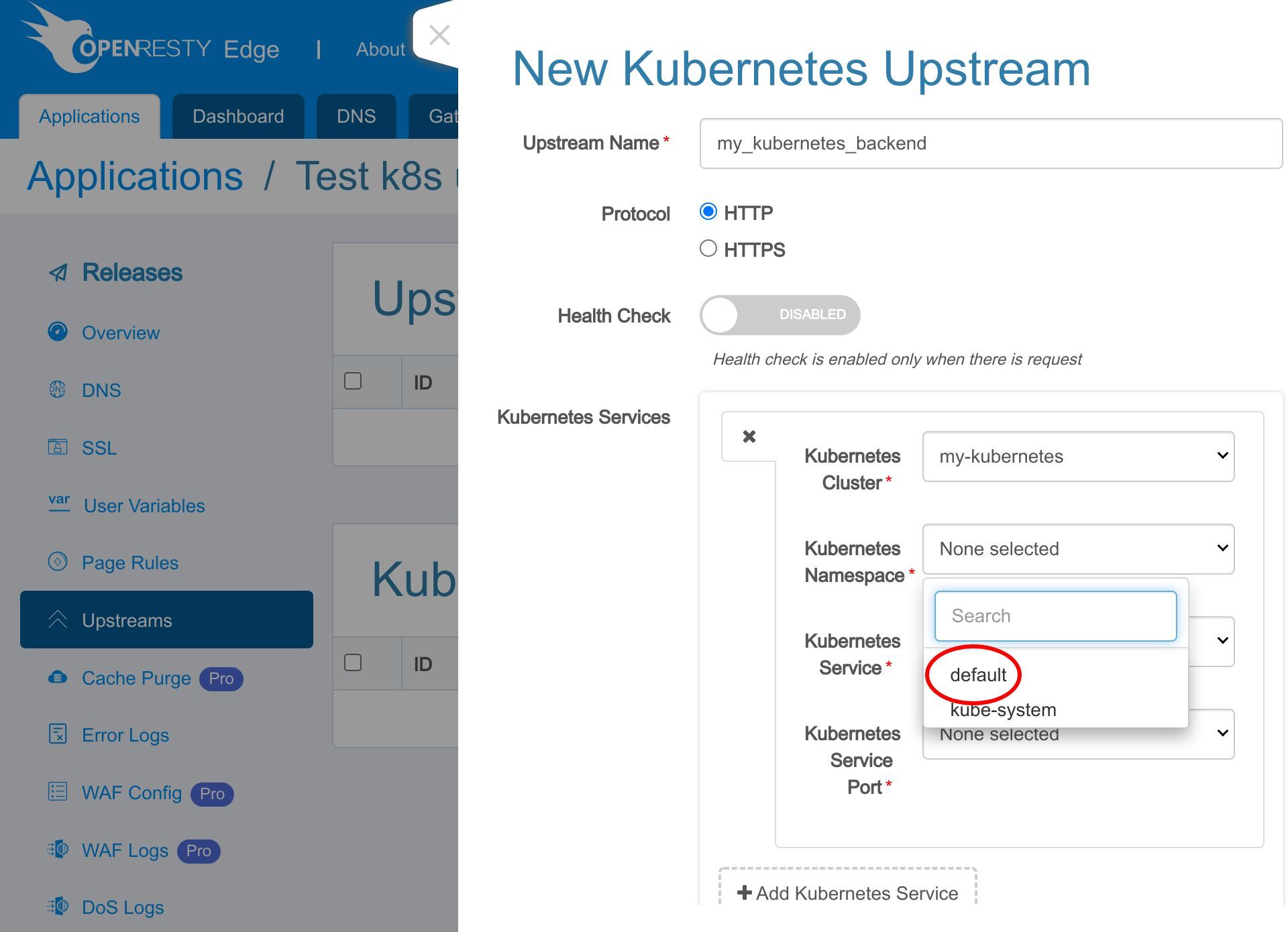

Select the target Kubernetes namespace.

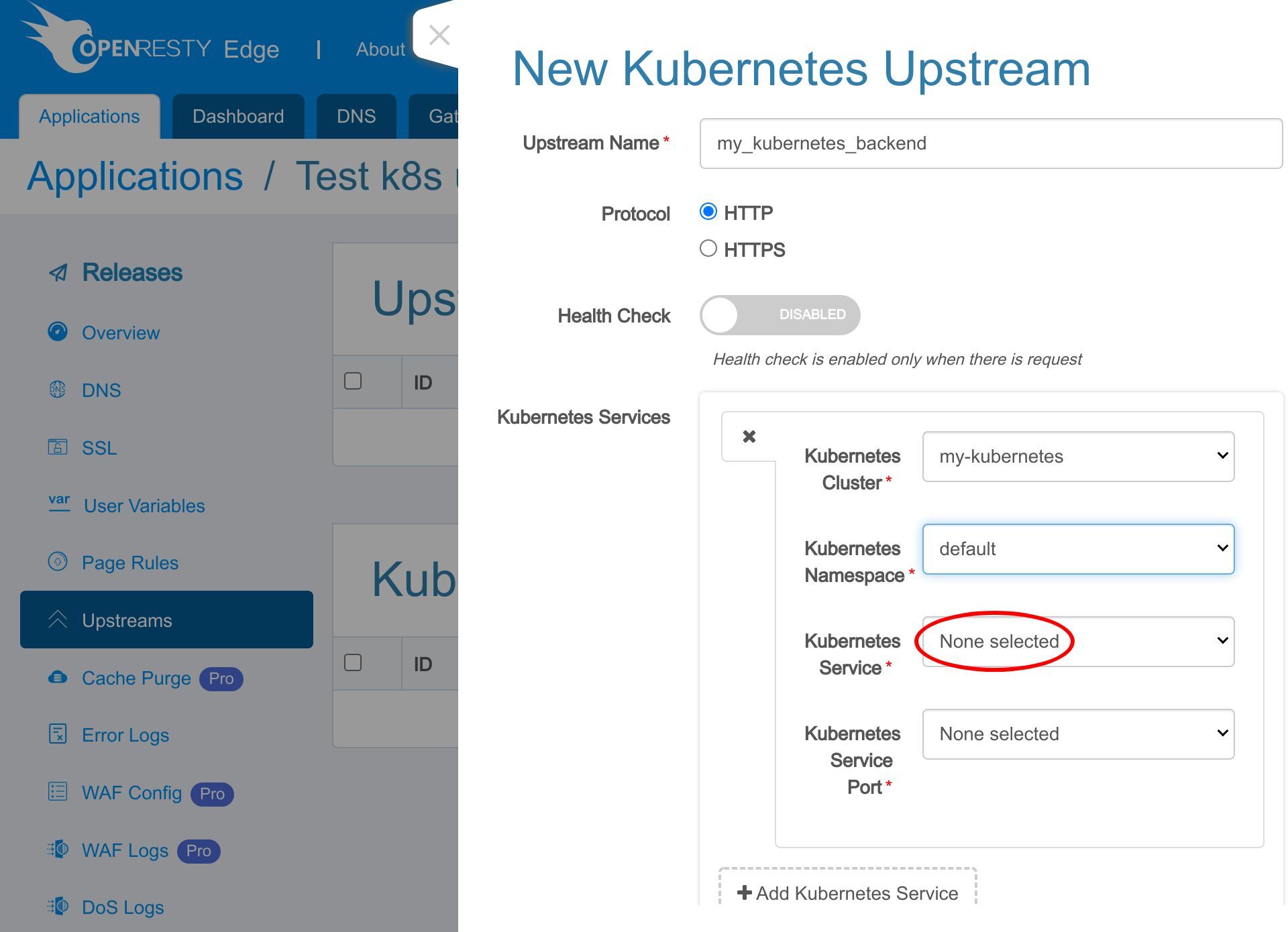

Choose the namespace named “default”. Other namespaces can be selected as well.

Select a Kubernetes service from the list.

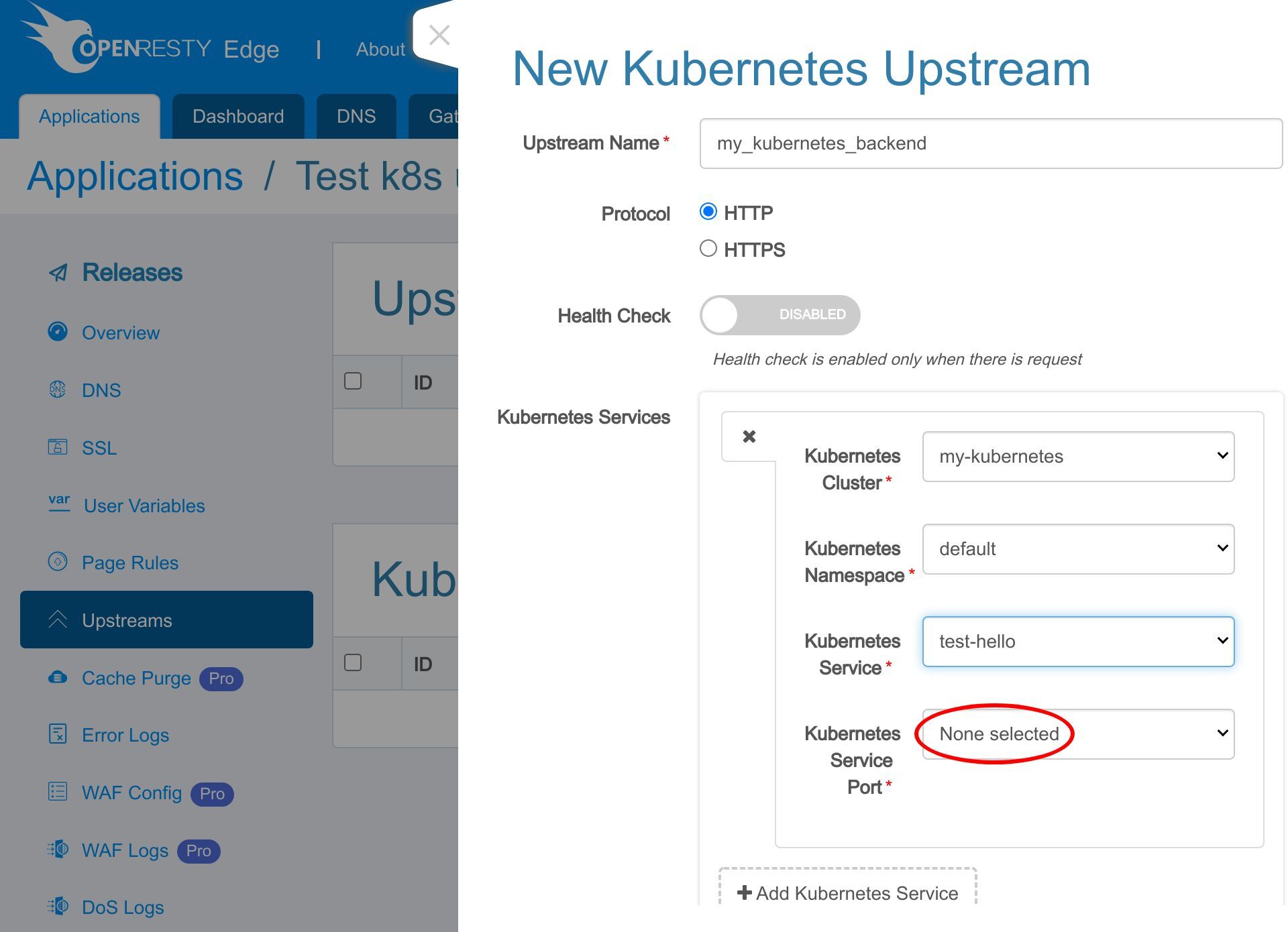

Select the “test-hello” service.

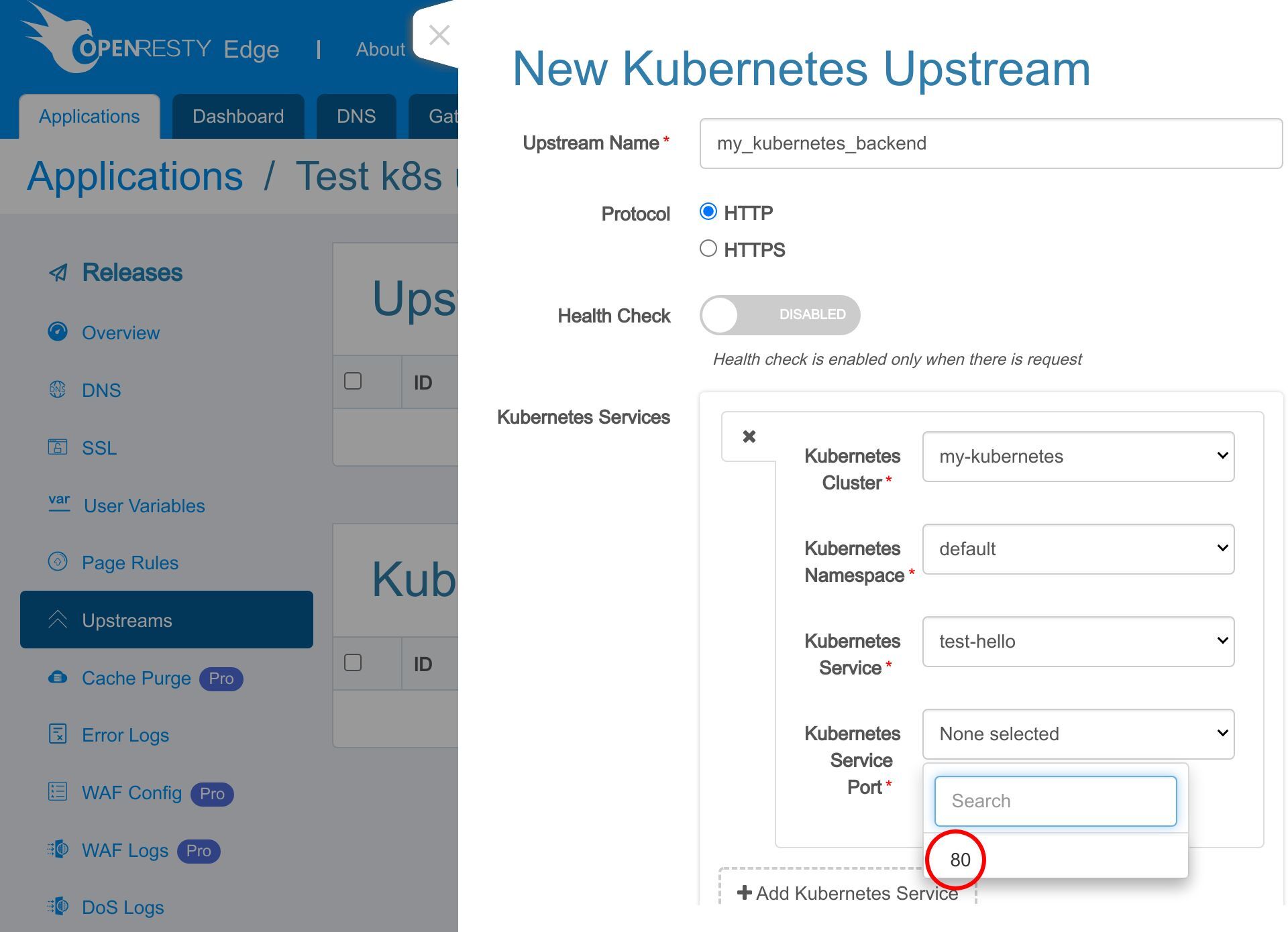

Then select the service port.

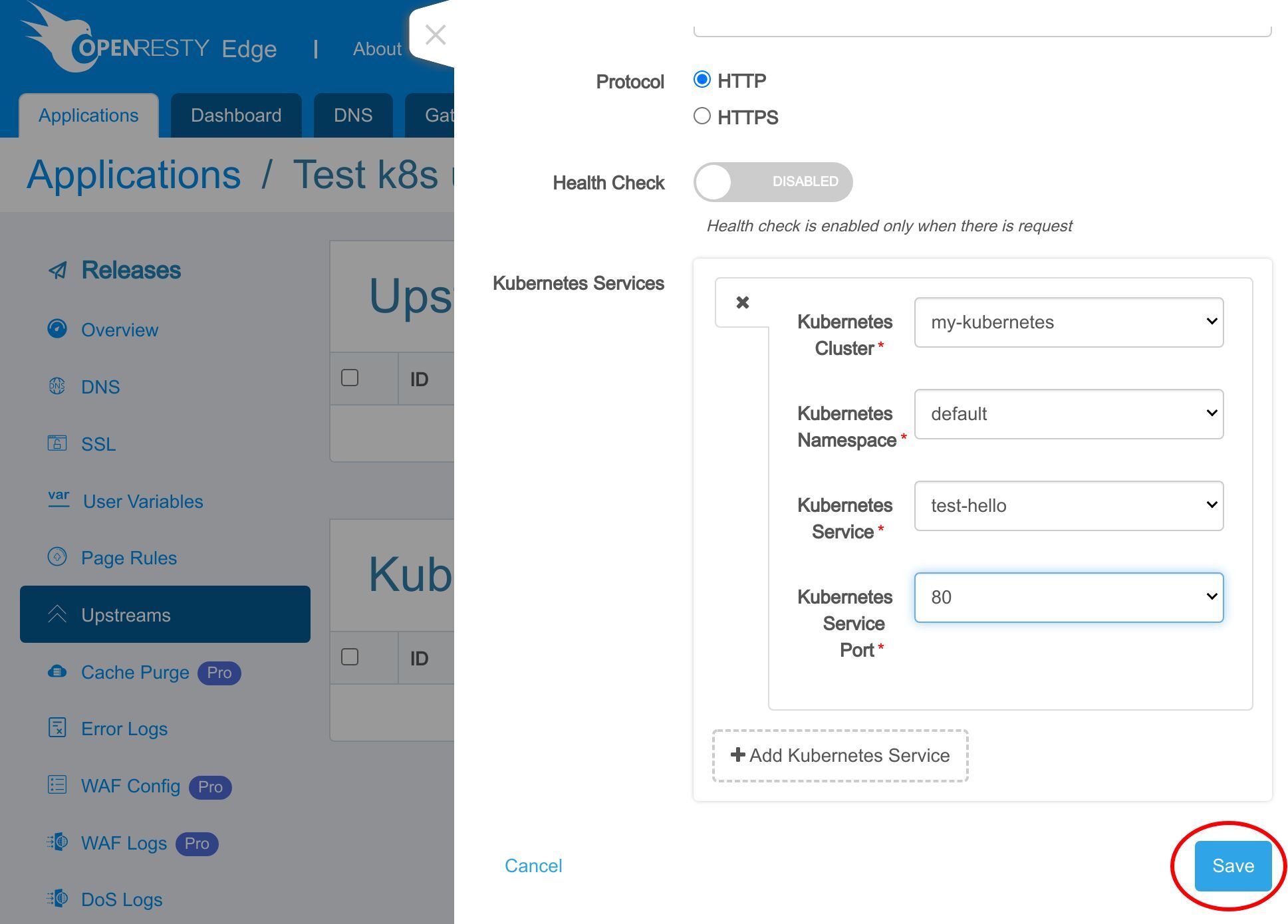

In this example, it is port 80.

Save the new upstream.
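Each dropdown in this form presumably reflects an object that `kubectl` can show as well. The helper below is a read-only sketch for cross-checking the choices made here (namespace `default`, service `test-hello`, port 80) from the command line; `show_upstream_choices`, `NS`, and `SVC` are hypothetical names, and nothing in Edge is changed.

```shell
# Read-only cross-check of the values picked in the upstream form.
# NS and SVC default to the tutorial's choices; override as needed.
show_upstream_choices() {
  ns="${NS:-default}"
  svc="${SVC:-test-hello}"
  kubectl get namespaces            # candidates for the namespace dropdown
  kubectl get services -n "$ns"     # services in that namespace
  # Ports exposed by the chosen service (80 in this example)
  kubectl get service "$svc" -n "$ns" -o jsonpath='{.spec.ports[*].port}'
  echo
}
```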

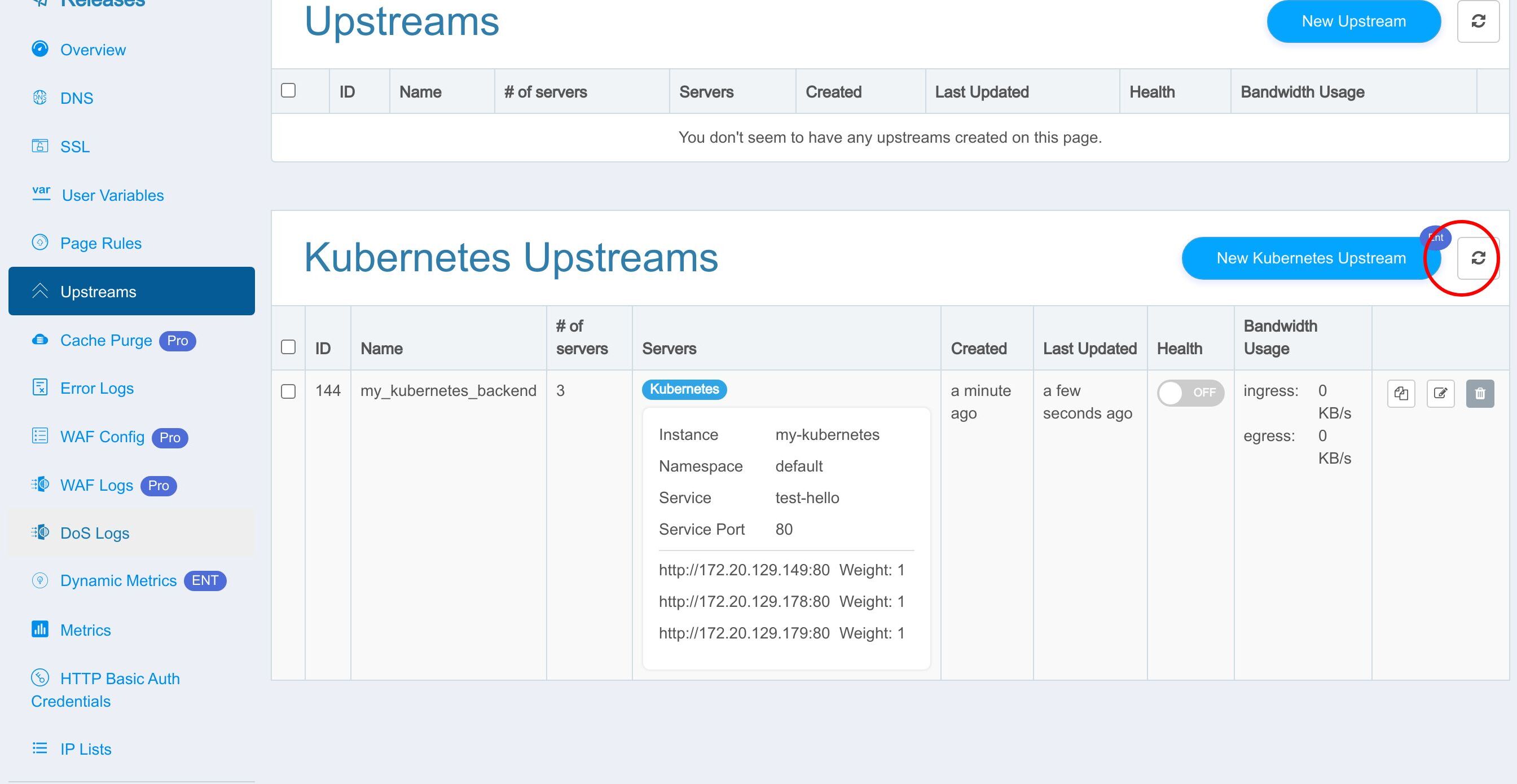

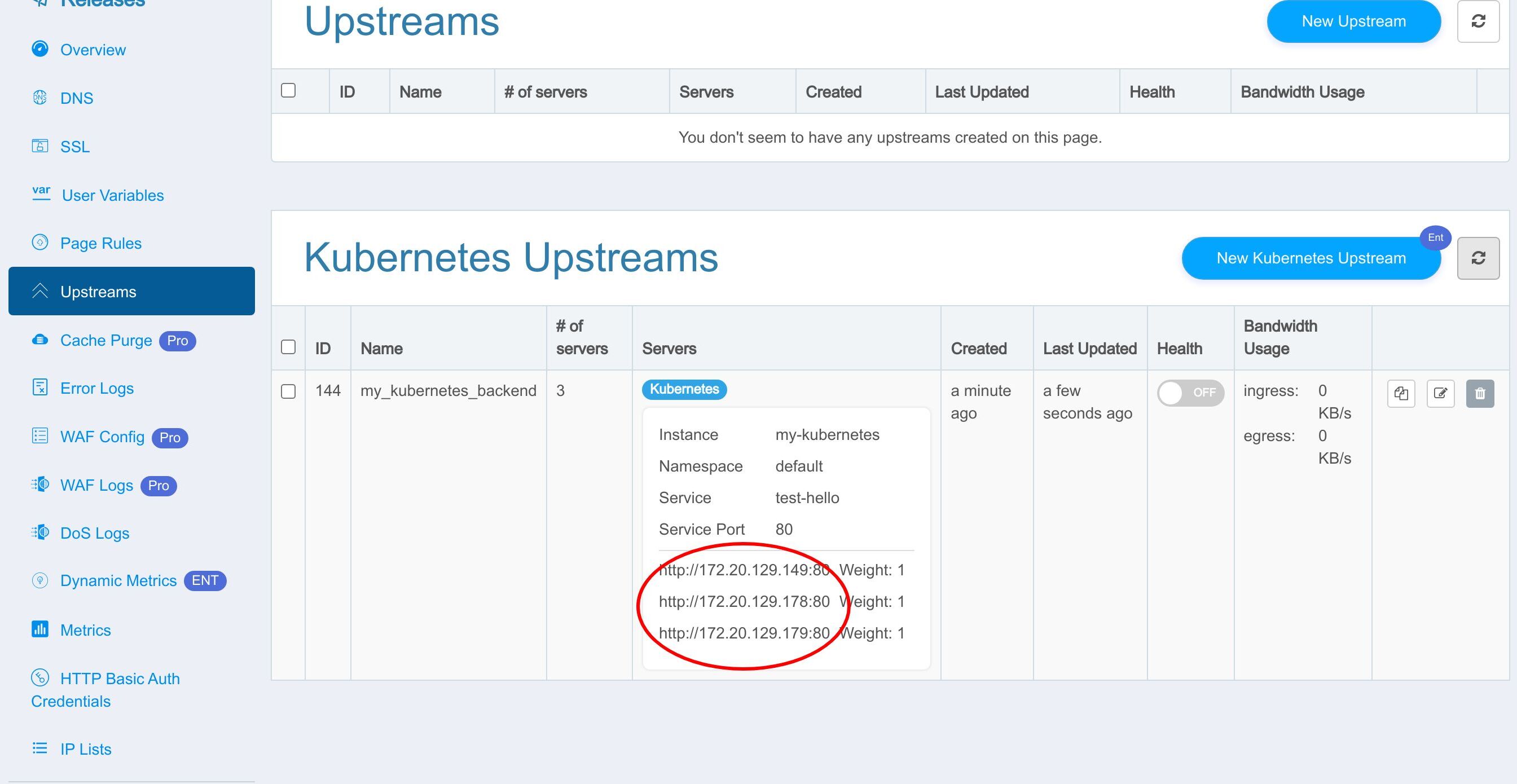

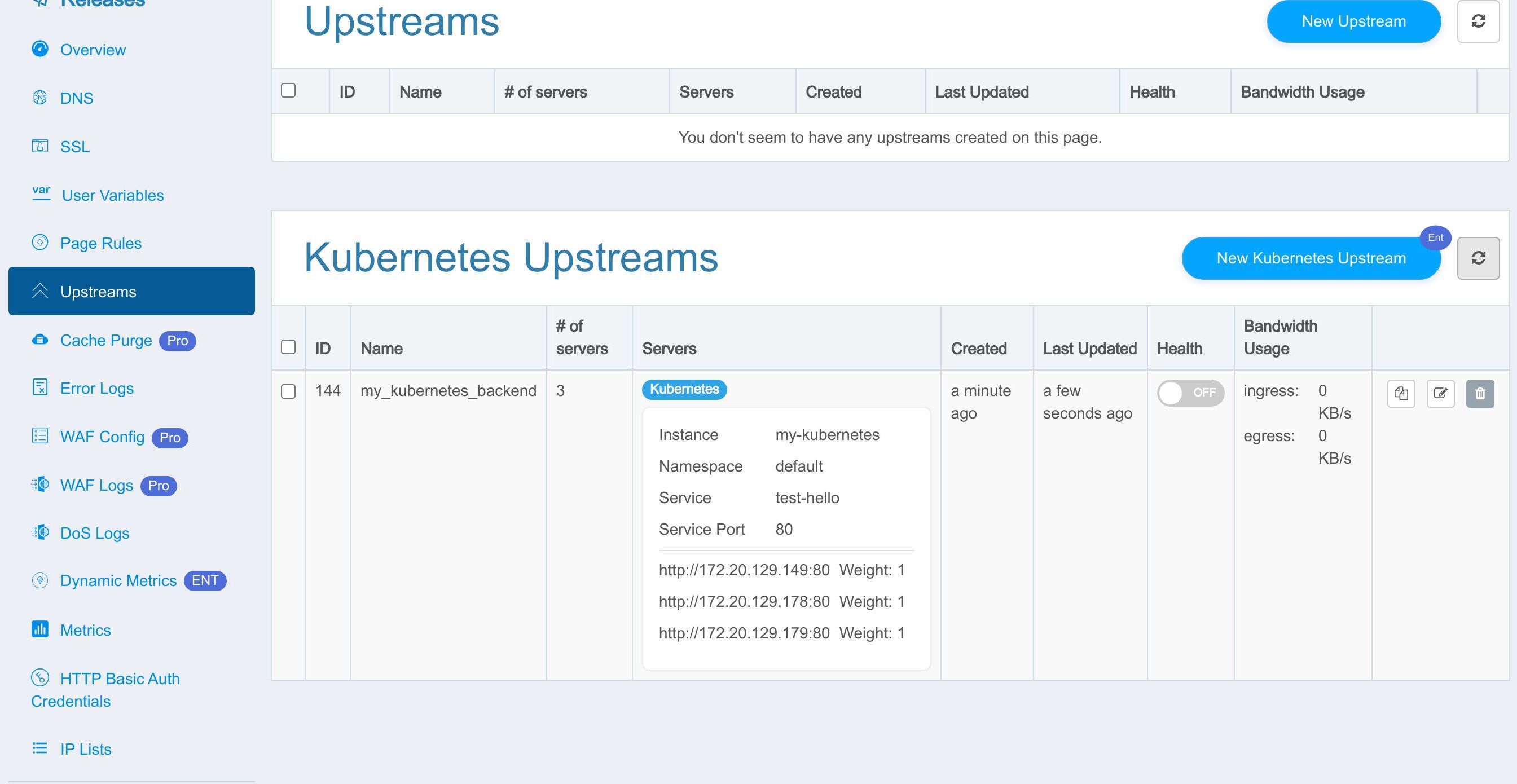

The new Kubernetes upstream has been created successfully.

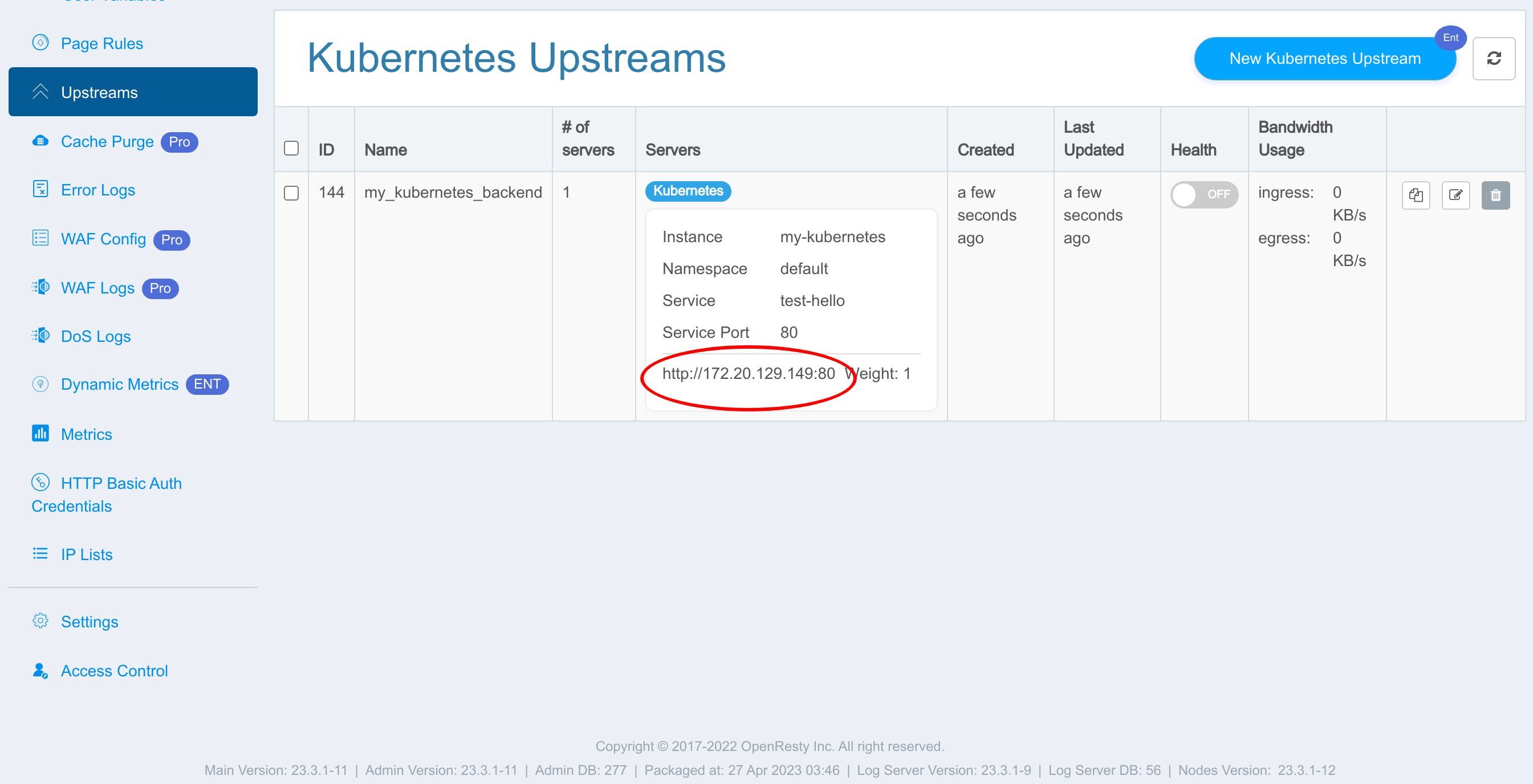

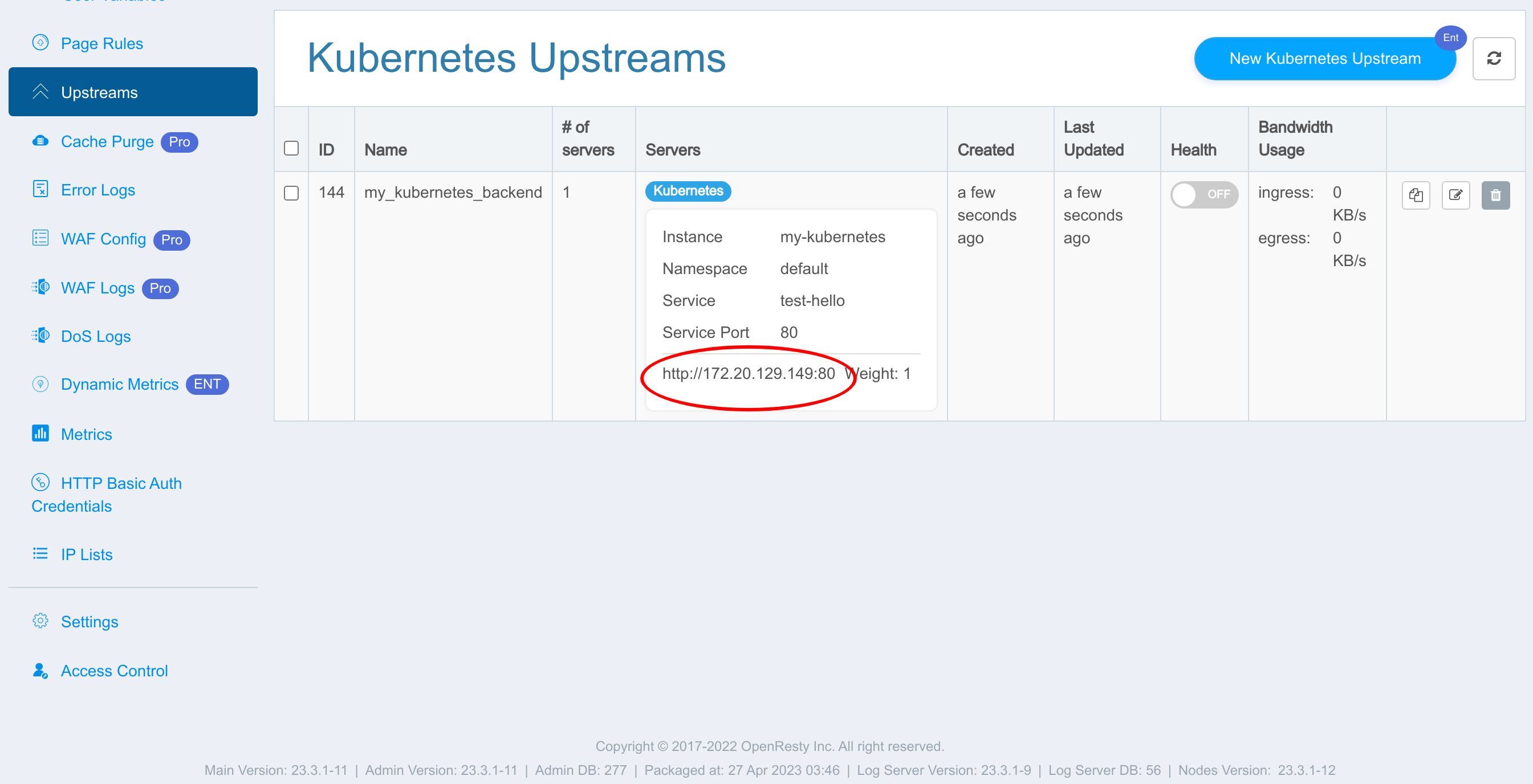

The addresses of the upstream servers are shown here.

These are the Kubernetes nodes or containers, synchronized automatically from the Kubernetes cluster.
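To compare these addresses with what Kubernetes itself reports, you can list the ready addresses in the service's Endpoints object. This sketch assumes the upstream servers correspond to those Endpoints (pod IP plus target port), which the tutorial does not state explicitly; note that the target port on the pods may differ from the service port 80 selected above. `to_ip_port` and `list_endpoint_addrs` are hypothetical helpers.

```shell
# Turn a whitespace-separated list of IPs on stdin into "ip:port" lines.
to_ip_port() {
  port="$1"
  tr -s ' ' '\n' | sed -e '/^$/d' -e "s/\$/:$port/"
}

# Print ip:port for each ready address of a service's Endpoints object.
# Pods that are not ready appear under notReadyAddresses and are skipped.
list_endpoint_addrs() {
  ns="${1:-default}"
  svc="${2:-test-hello}"
  ips="$(kubectl get endpoints "$svc" -n "$ns" -o jsonpath='{.subsets[*].addresses[*].ip}')"
  port="$(kubectl get endpoints "$svc" -n "$ns" -o jsonpath='{.subsets[0].ports[0].port}')"
  printf '%s\n' "$ips" | to_ip_port "$port"
}
```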

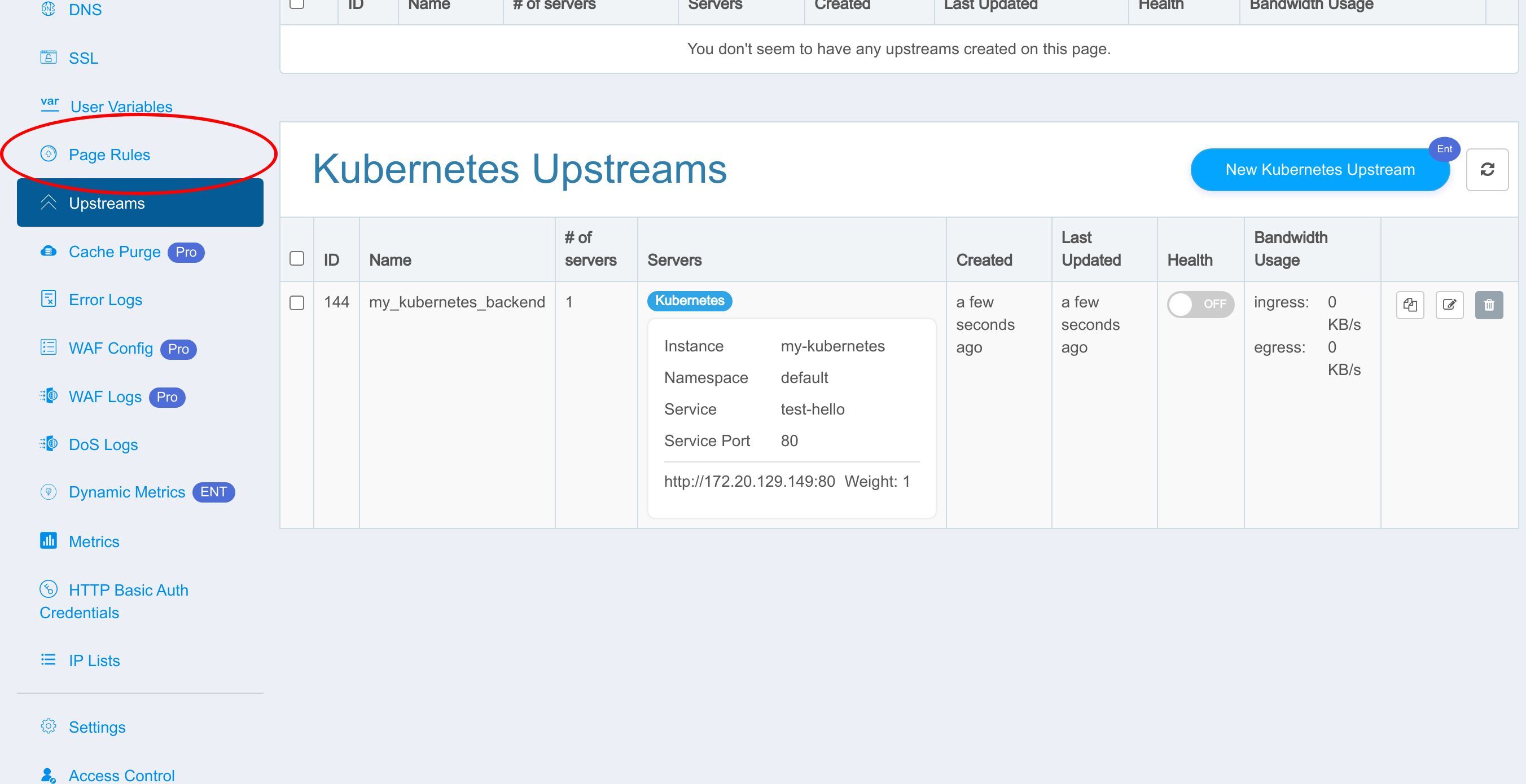

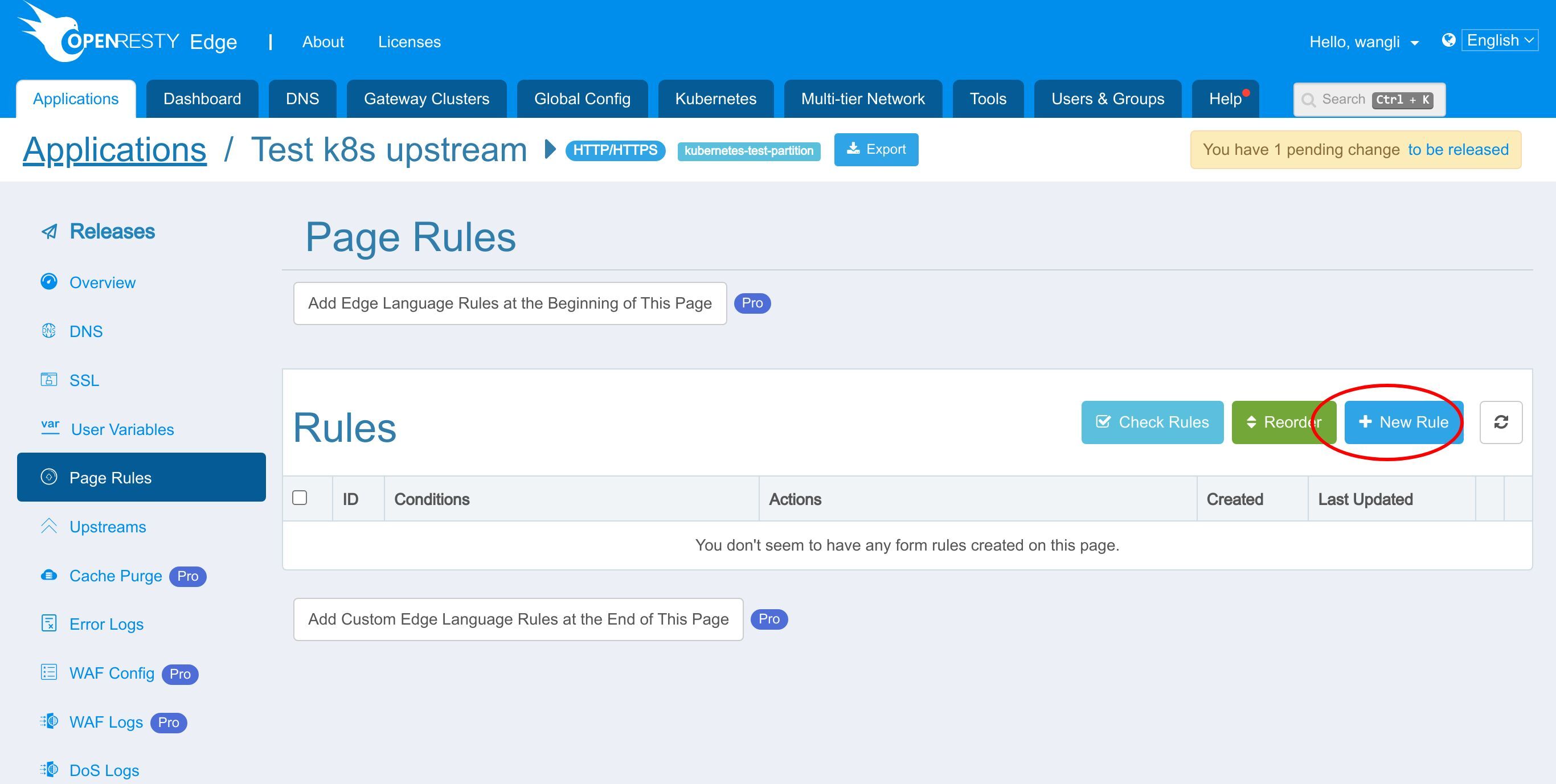

Creating a Page Rule That Uses the Kubernetes Upstream

As with a regular upstream, we need to create a new page rule that uses this new Kubernetes upstream.

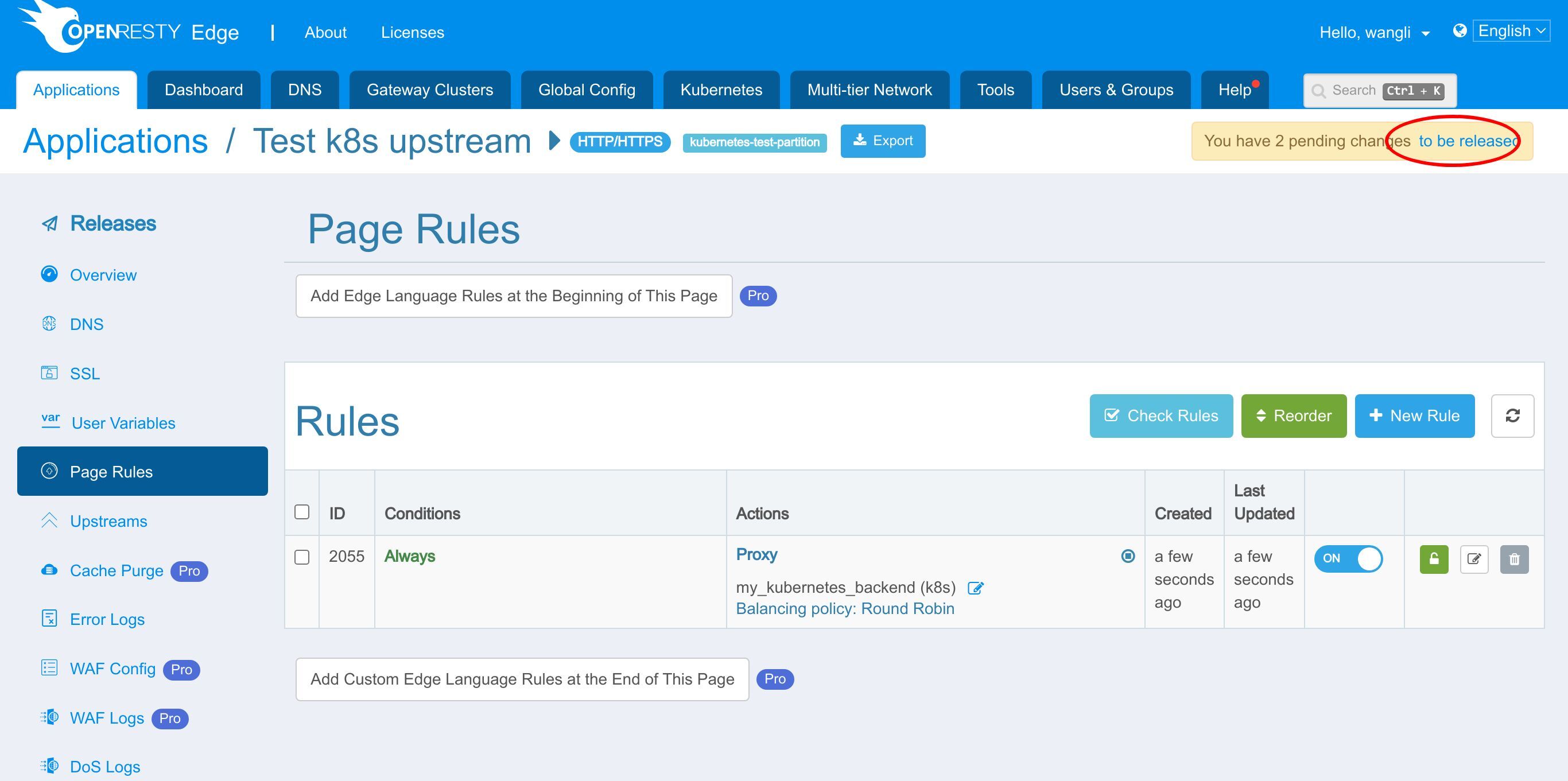

Go to the Page Rules page.

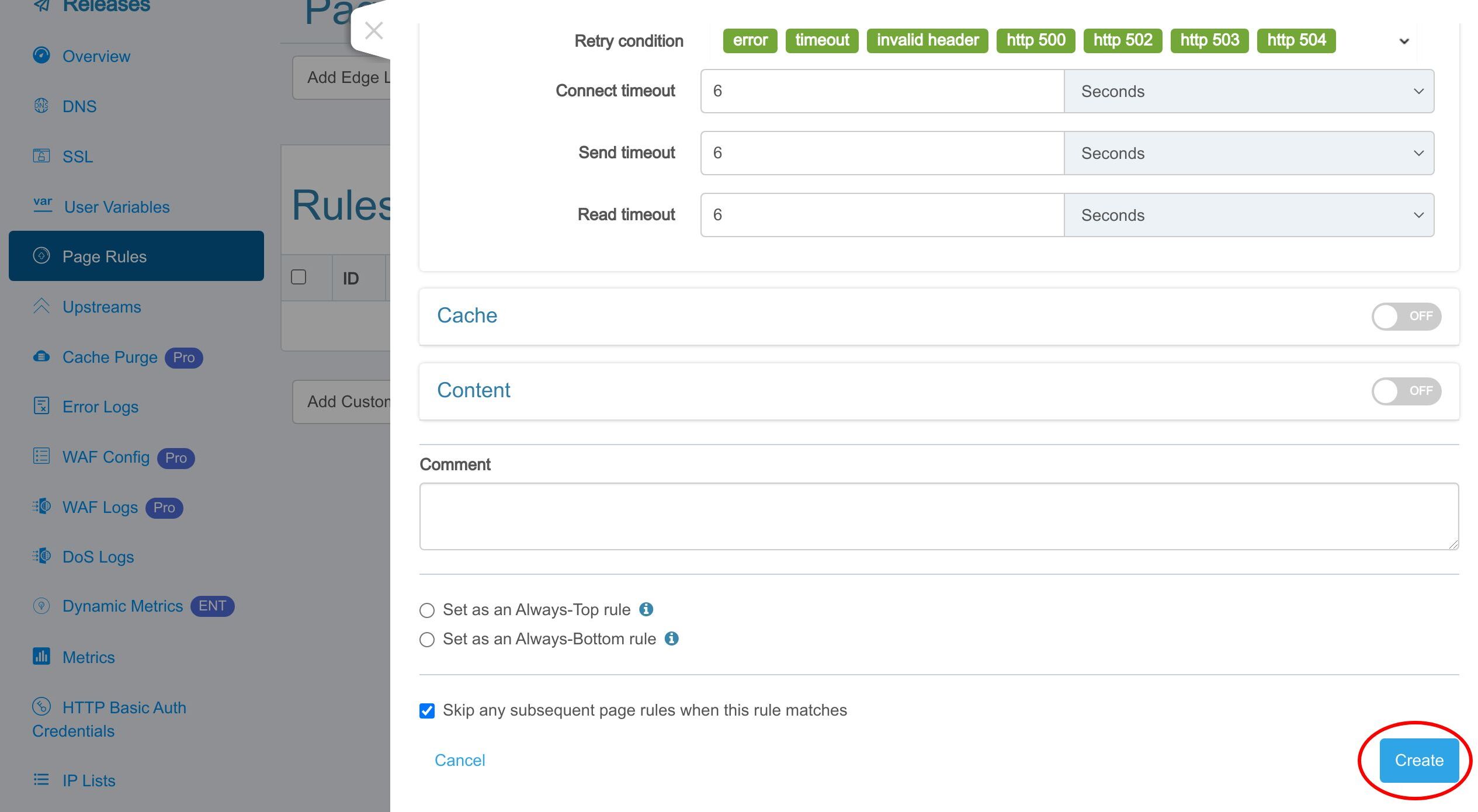

Click this “New Rule” button.

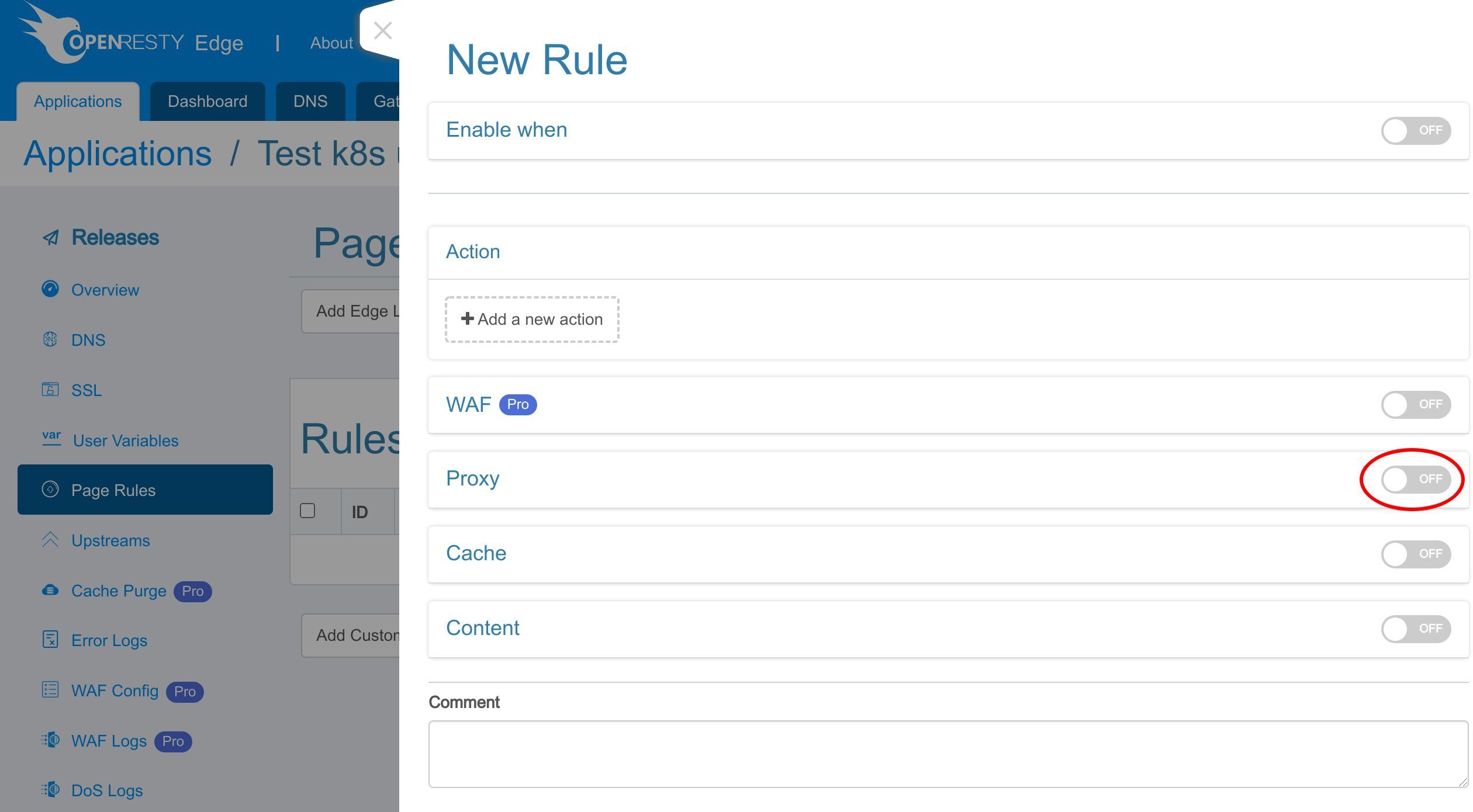

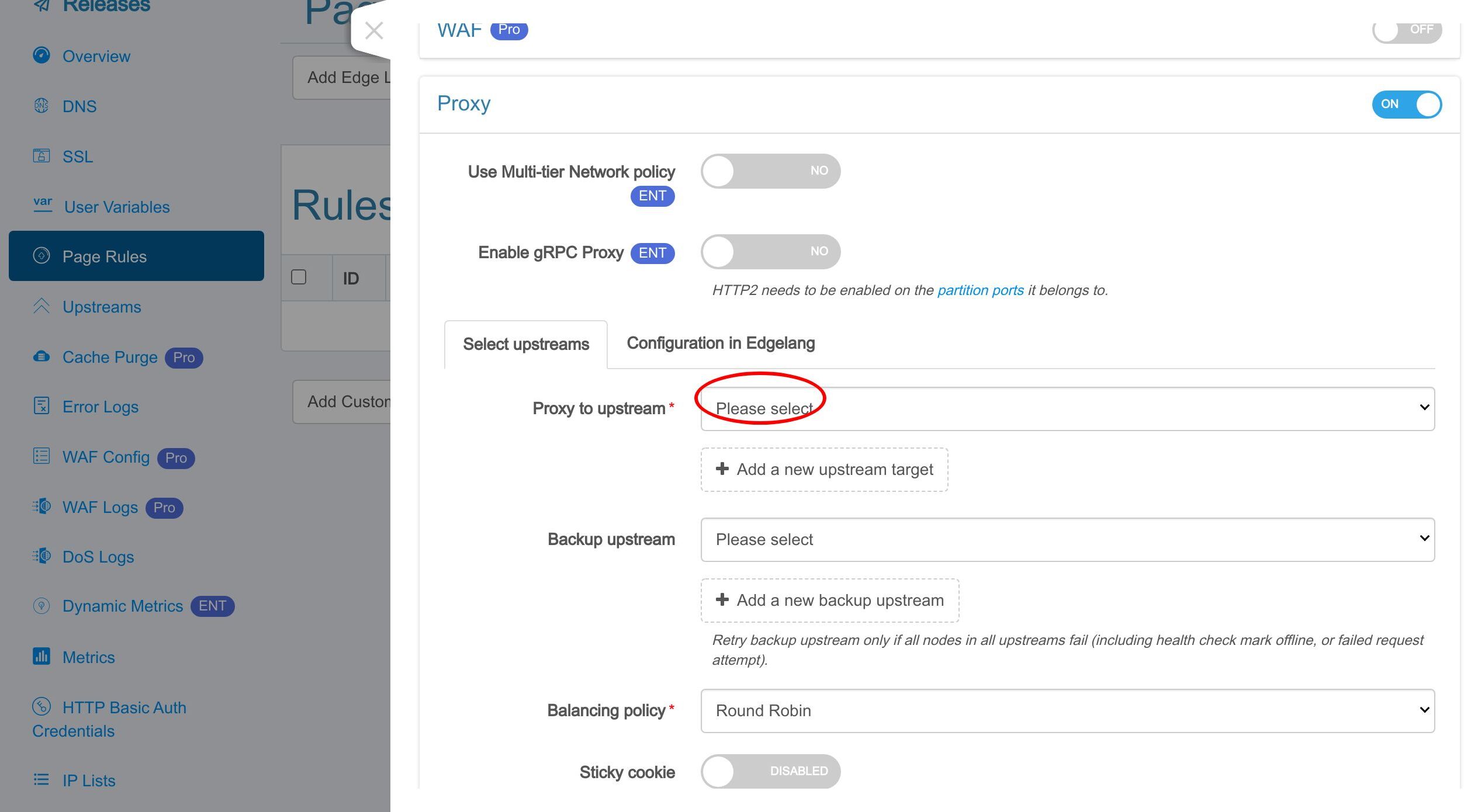

Enable the Proxy action.

Click this “Proxy to upstream” dropdown list.

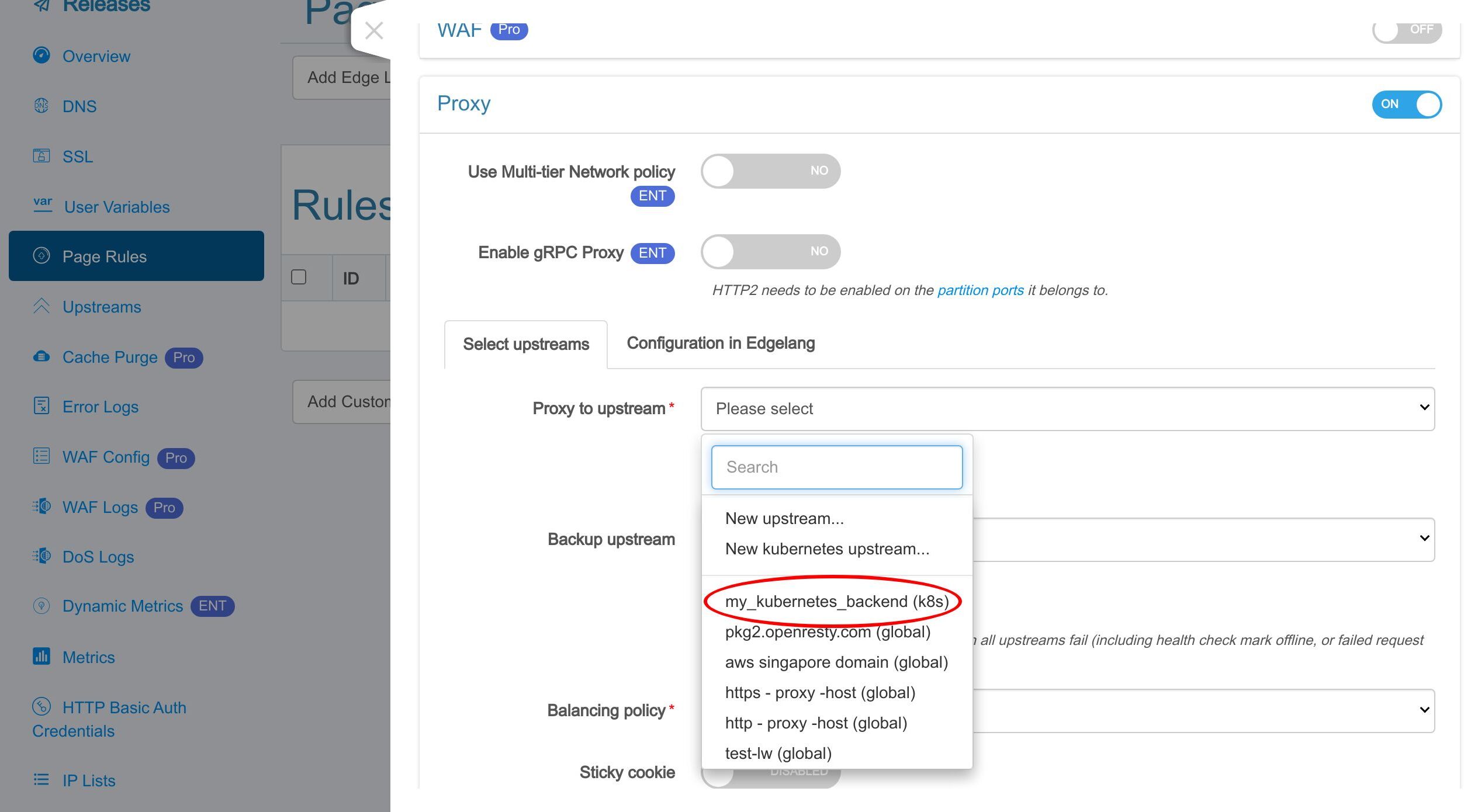

Select the Kubernetes upstream “my kubernetes backend” that we created earlier.

Save this page rule.

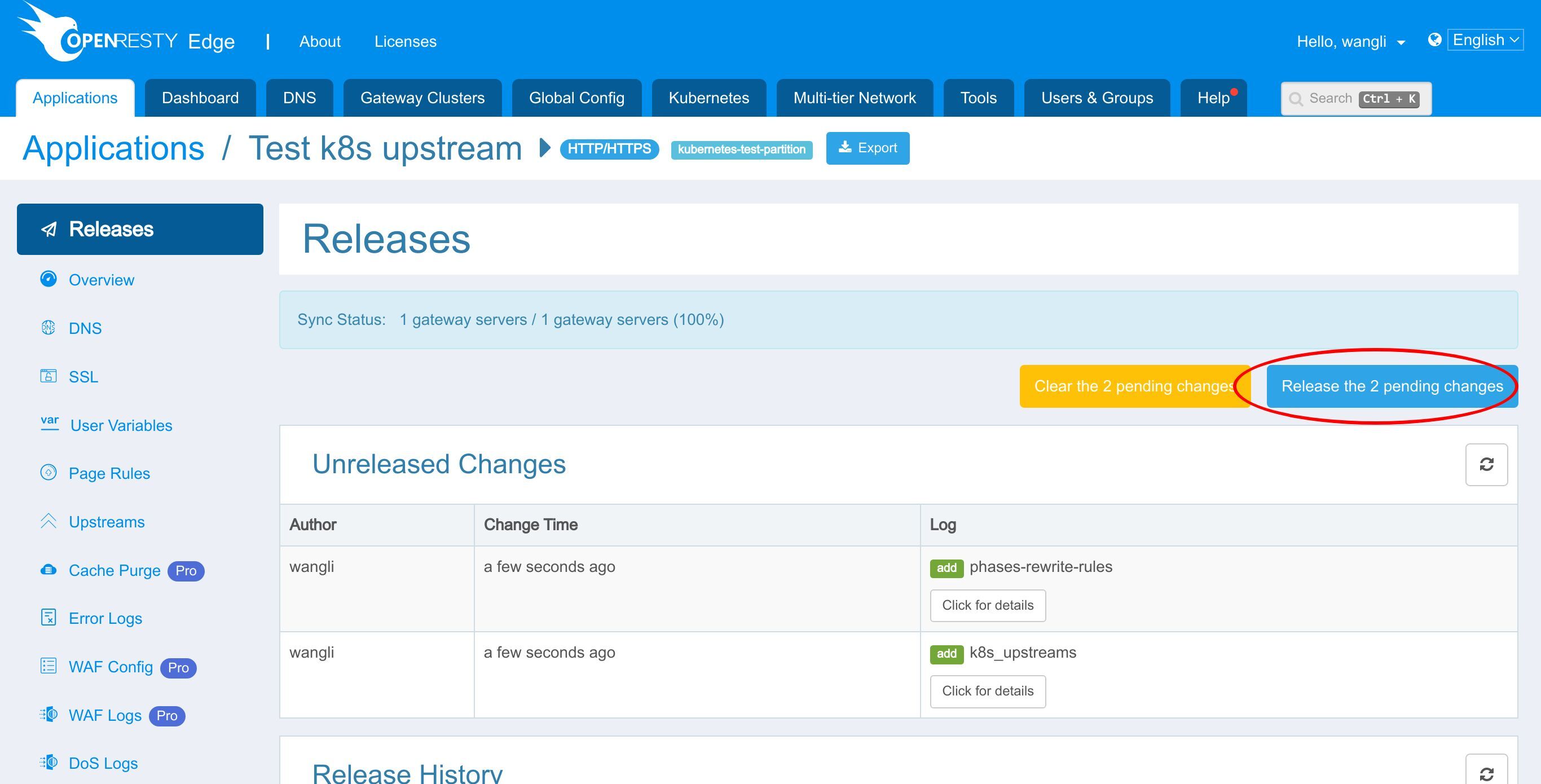

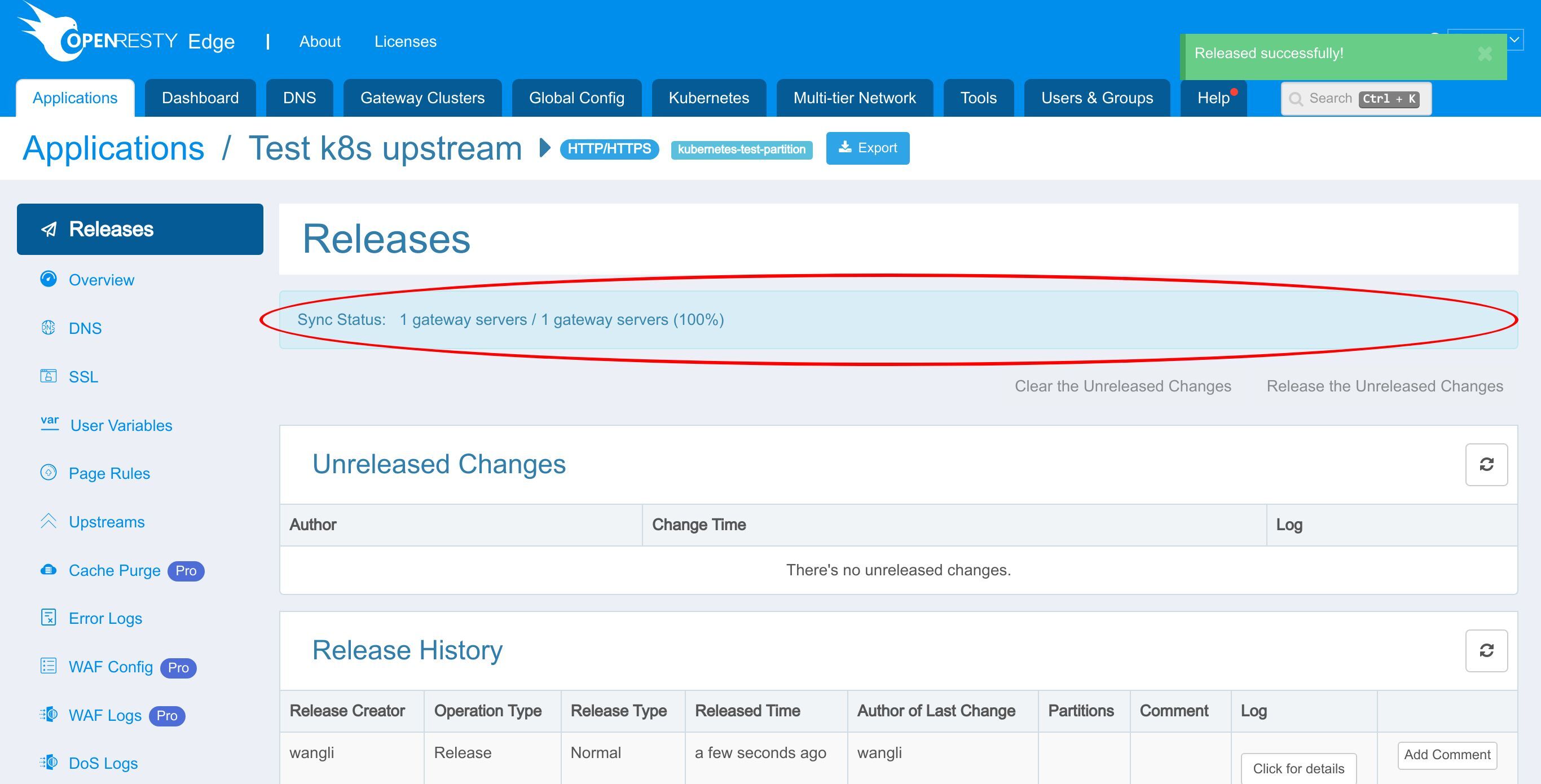

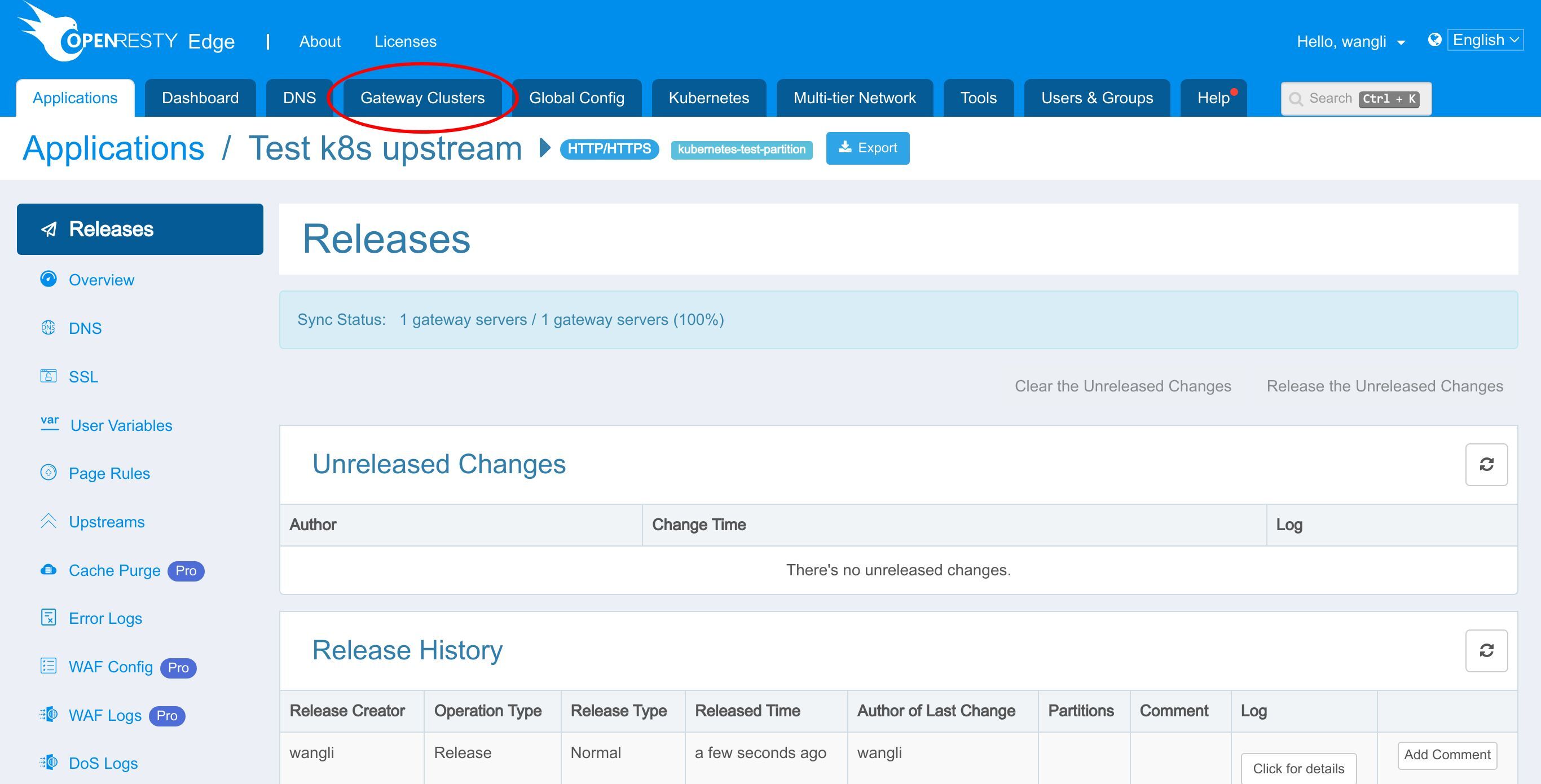

As usual, we need to release a new version to push out the changes we just made.

Click this button.

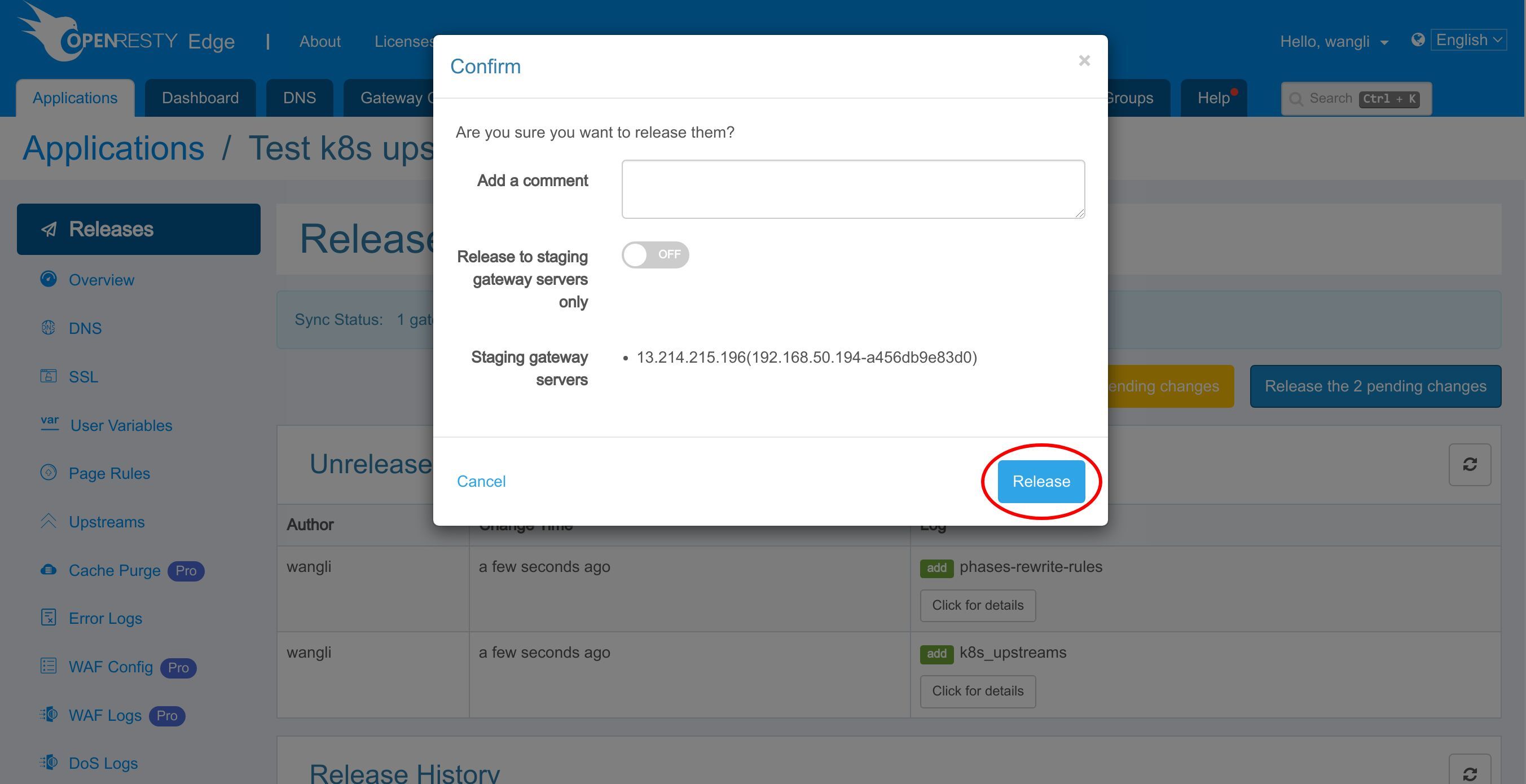

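Release it!

The synchronization is complete.

The new page rule has now been pushed to all gateway clusters and servers.

These configuration changes do not require a server reload, restart, or binary upgrade, which makes them very efficient and scalable.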

Testing

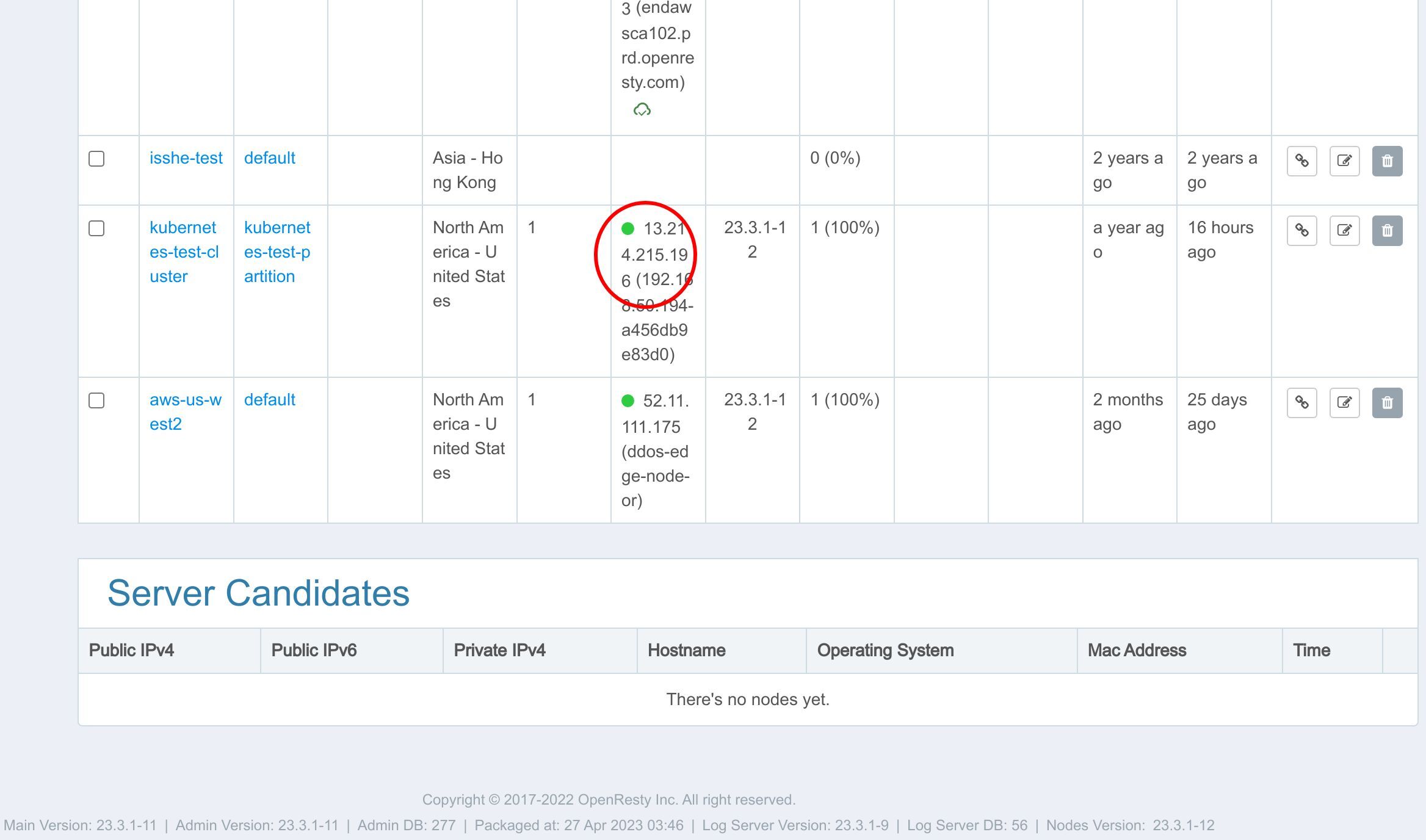

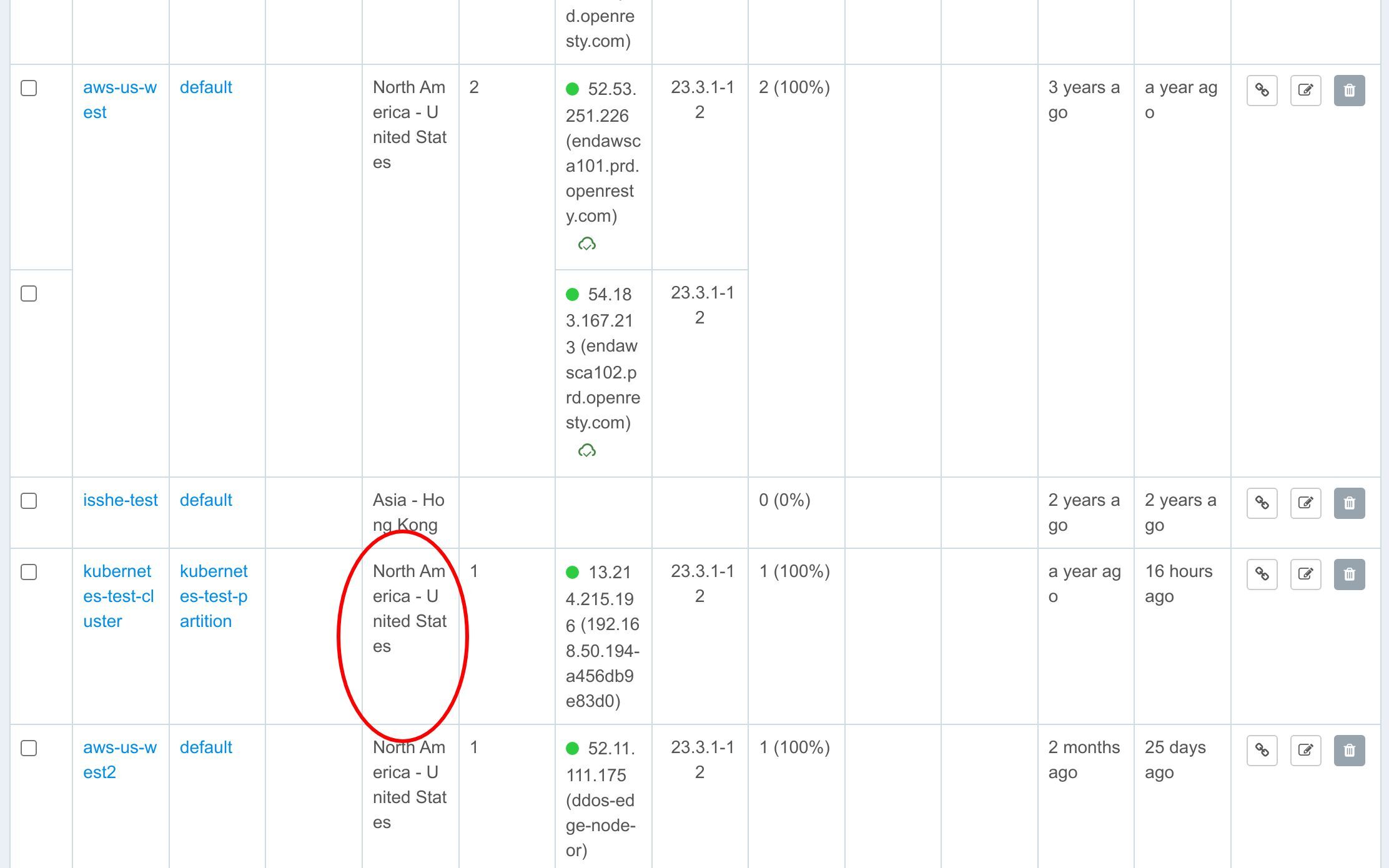

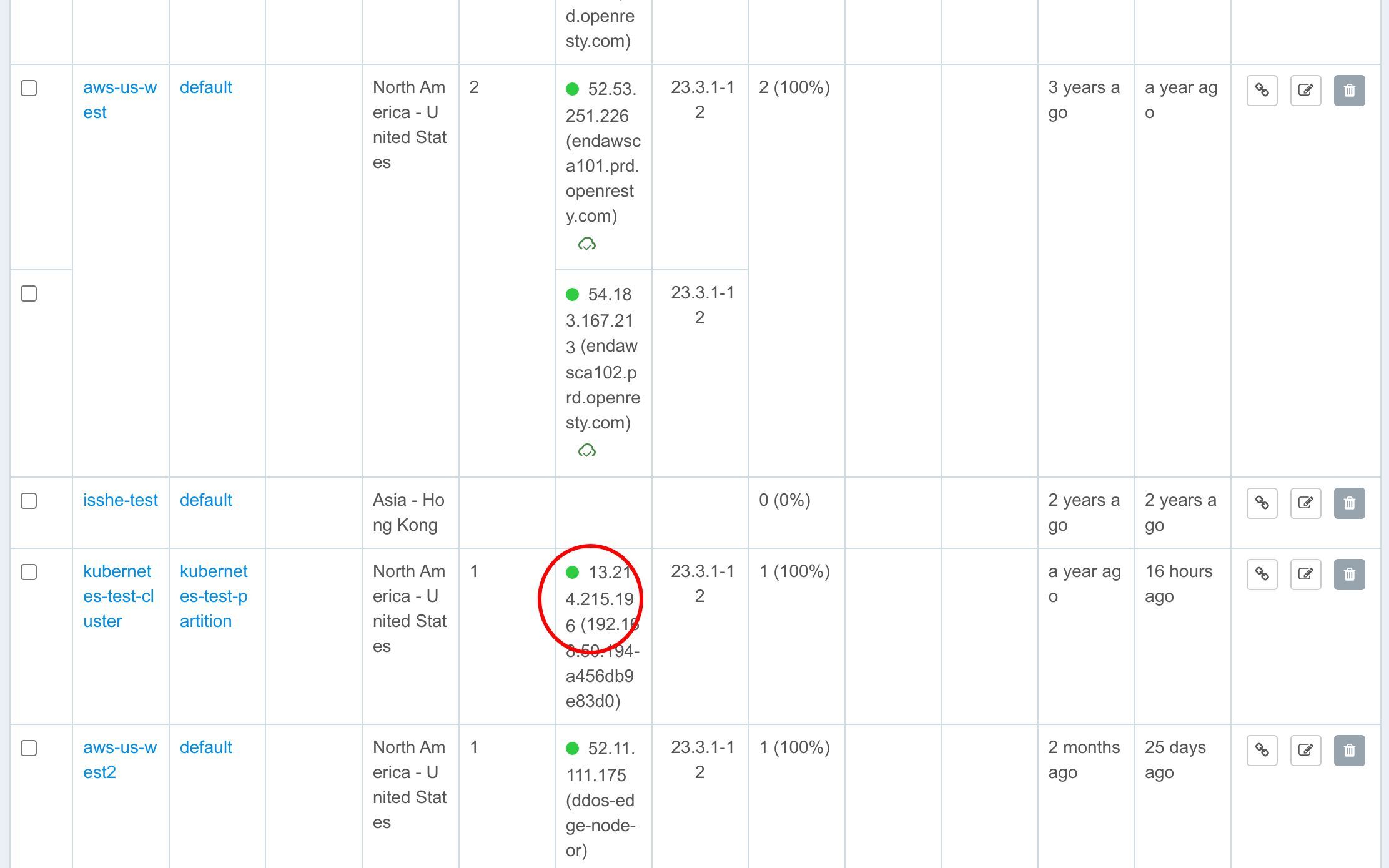

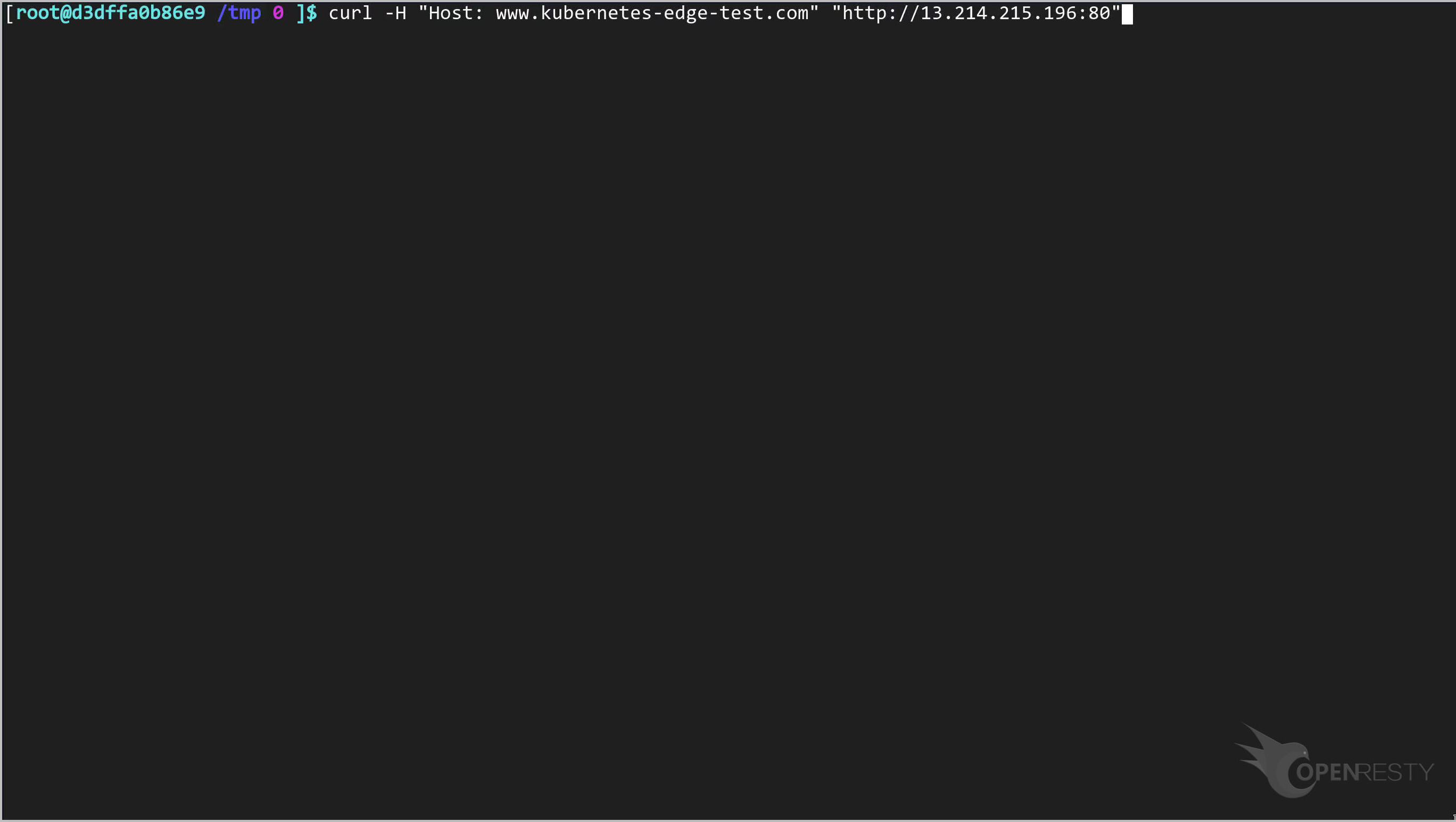

Next, to run a real test, we find an Edge gateway server in the corresponding partition. Go to the Gateway Clusters page.

Find the corresponding gateway server.

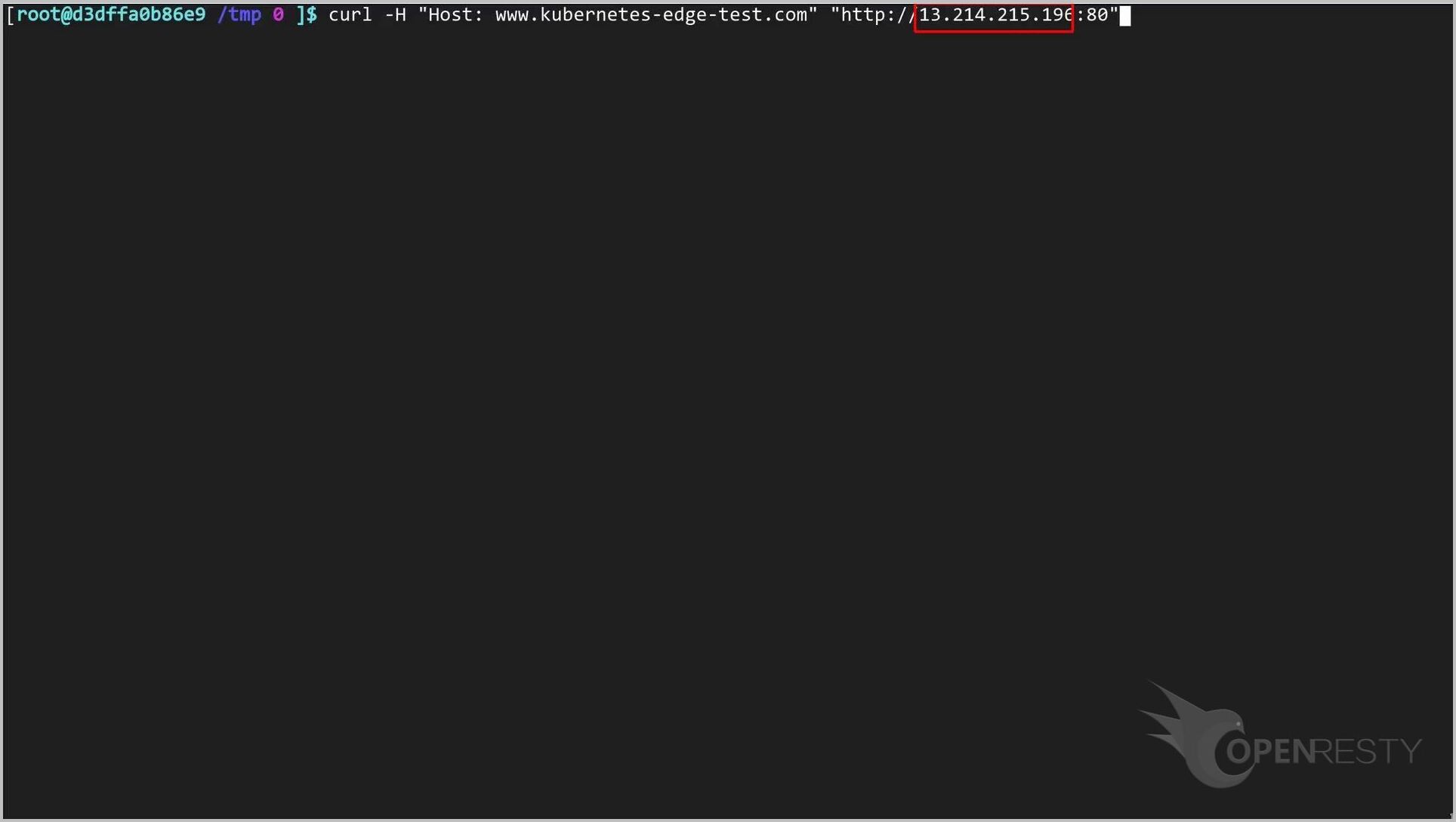

Note that its public IP address ends in .196.

Copy this IP address so we can use it on the command line.

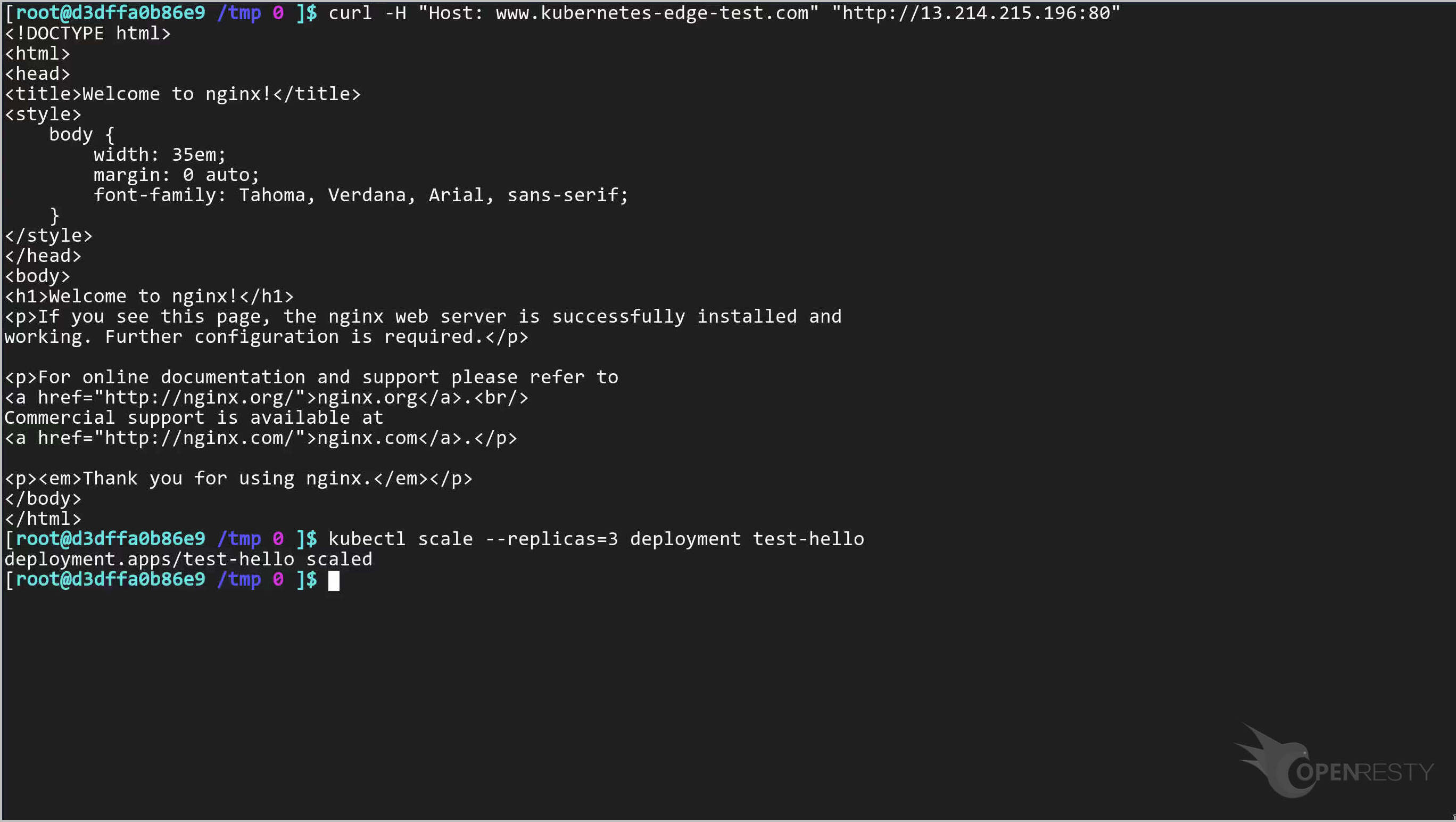

In a terminal, let's access the Kubernetes service through this gateway server.

Note that we are using the gateway server IP address we copied earlier.

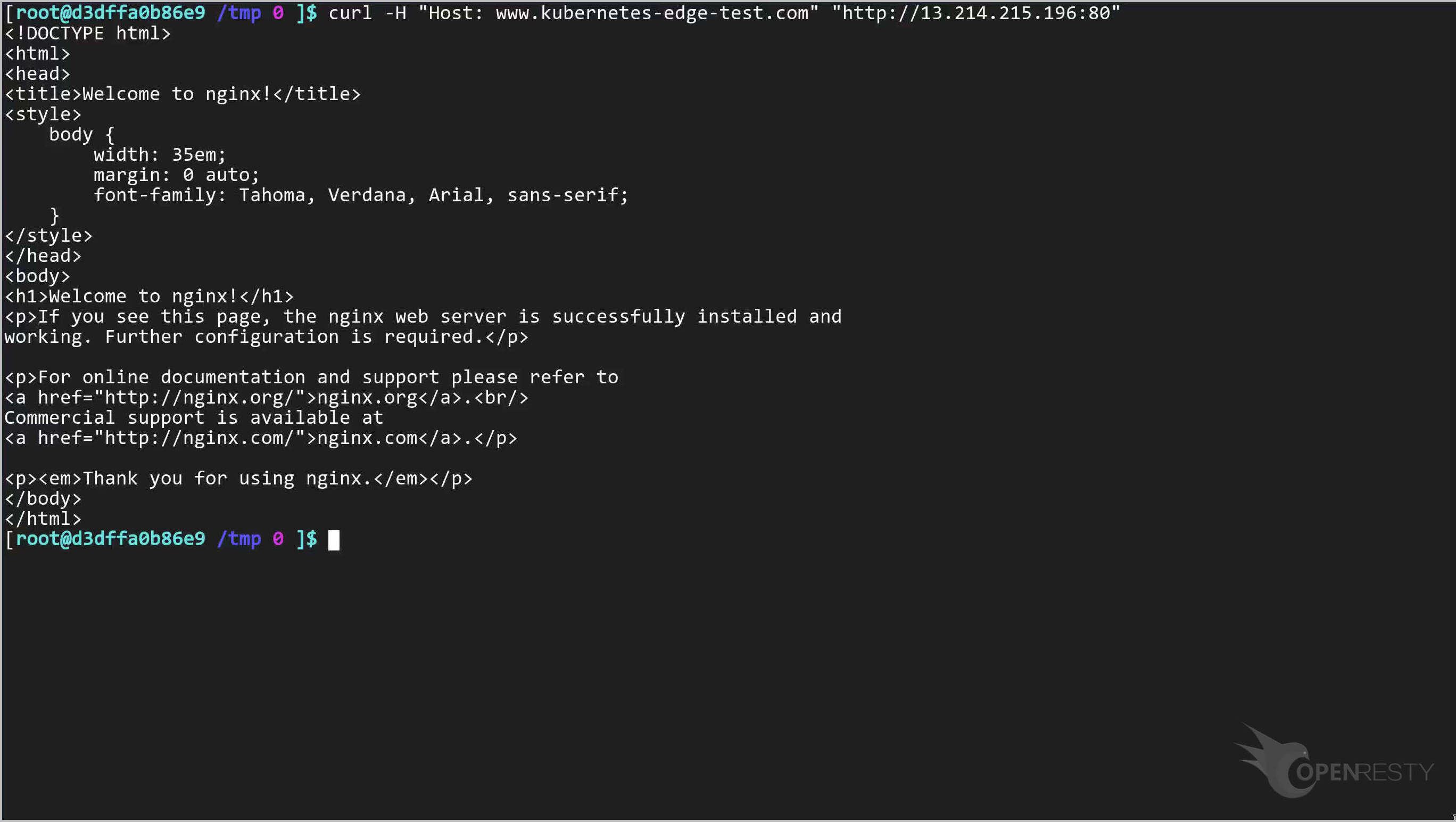

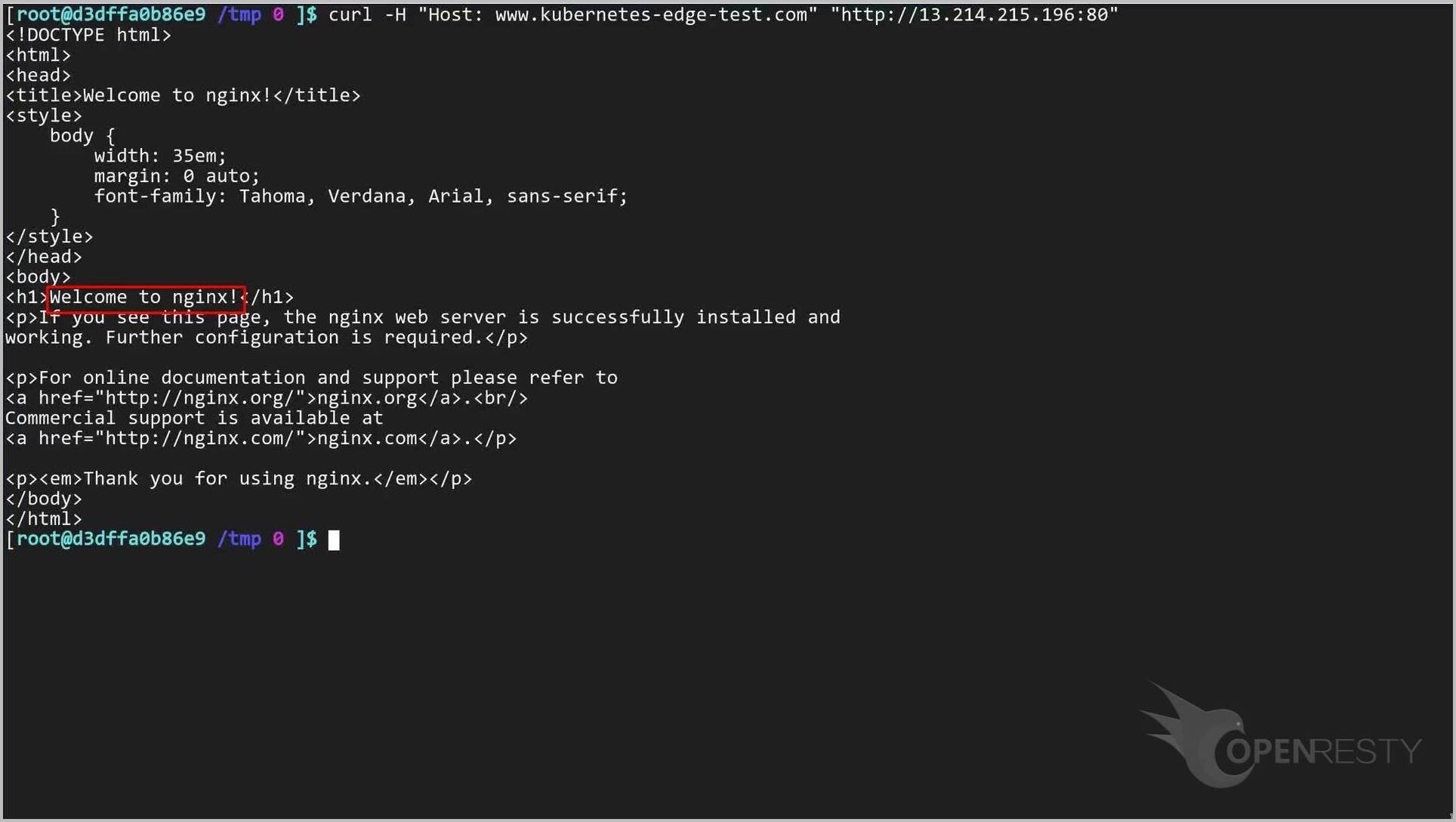

Run this command.

The request to the service succeeds. In the rest of this tutorial, we will change the configuration of the Kubernetes service.
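The exact command is not given in the text. A common way to send a request through one specific gateway while presenting the application's domain name is `curl --resolve`, which pins the hostname to the gateway's IP without touching DNS or `/etc/hosts`. `probe_gateway` is a hypothetical helper, plain HTTP on port 80 is an assumption, and the gateway IP must be supplied by you (the demo's address ends in `.196`, but its full value is not shown).

```shell
APP_HOST="www.kubernetes-edge-test.com"

# Send a request for APP_HOST to the given gateway IP over plain HTTP,
# printing the response status line and headers along with the body.
probe_gateway() {
  gateway_ip="${1:?usage: probe_gateway GATEWAY_IP}"
  curl -sS -i --resolve "$APP_HOST:80:$gateway_ip" "http://$APP_HOST/"
}
```

For example, `probe_gateway 203.0.113.196` uses an address from the documentation-only range 203.0.113.0/24 as a stand-in for the real gateway IP.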

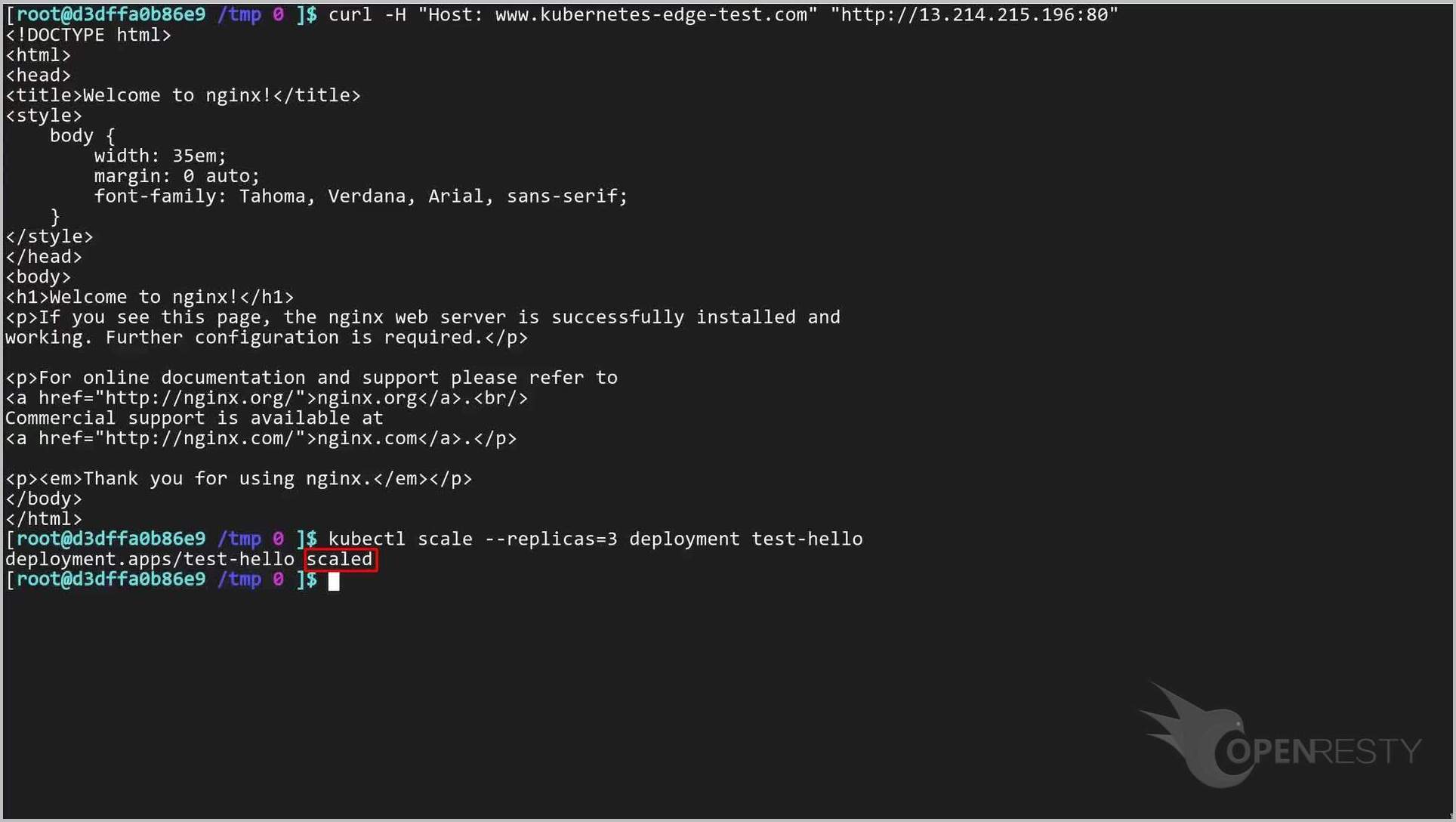

Let's temporarily increase the number of Kubernetes pods (replicas of the test-hello deployment) to 3.

kubectl scale --replicas=3 deployment test-hello

The scaling completed successfully.
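`kubectl scale` returns as soon as the new replica count has been recorded, before the new pods are actually running. If you want to wait until they are available (a Service's Endpoints generally include only ready pods), `kubectl rollout status` blocks until the deployment's replicas are available. The helper name and the 120-second timeout are arbitrary choices.

```shell
# Wait until every replica of test-hello is available (gives up after 120s).
wait_for_test_hello() {
  kubectl rollout status deployment/test-hello --timeout=120s
}
```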

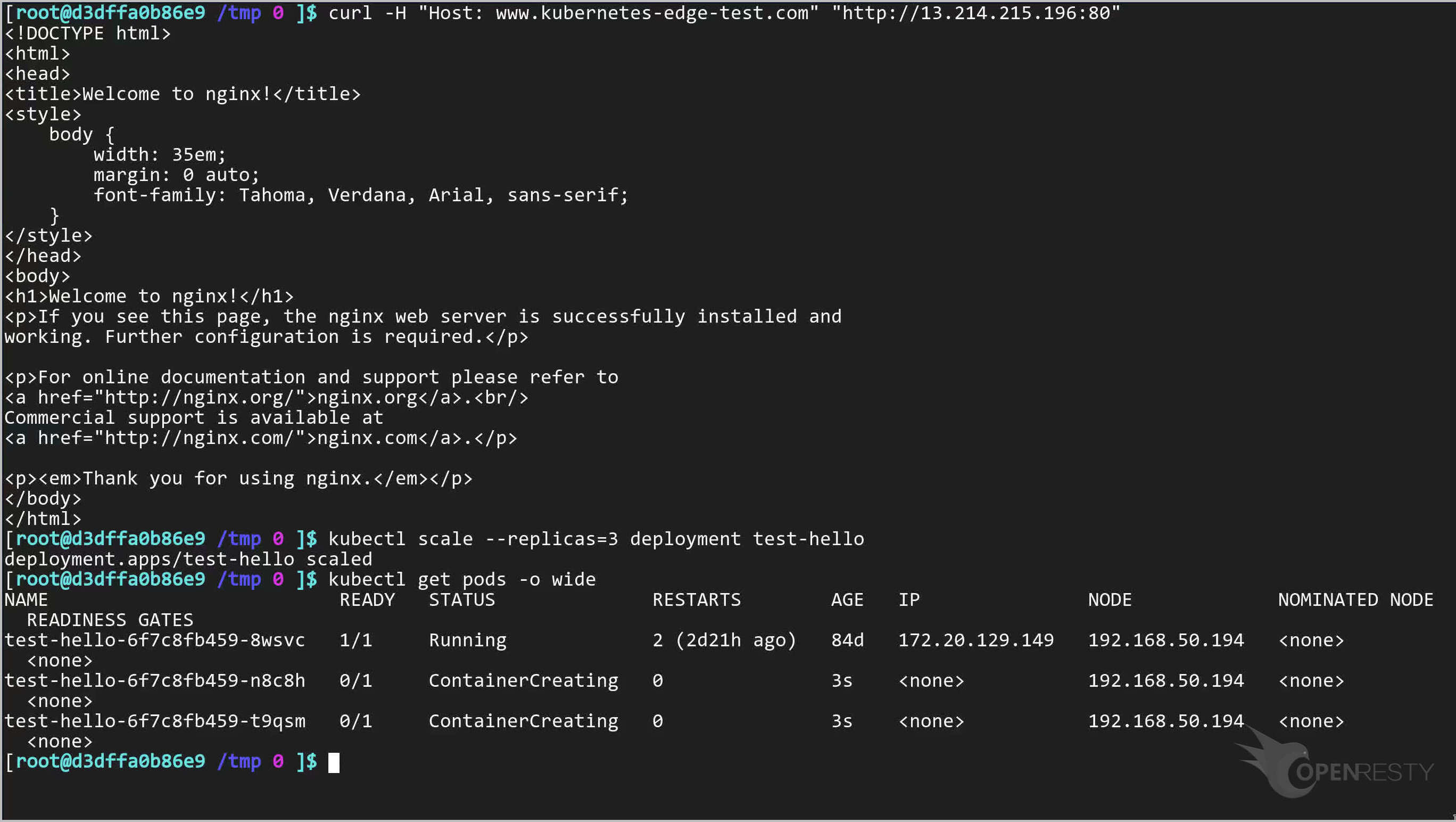

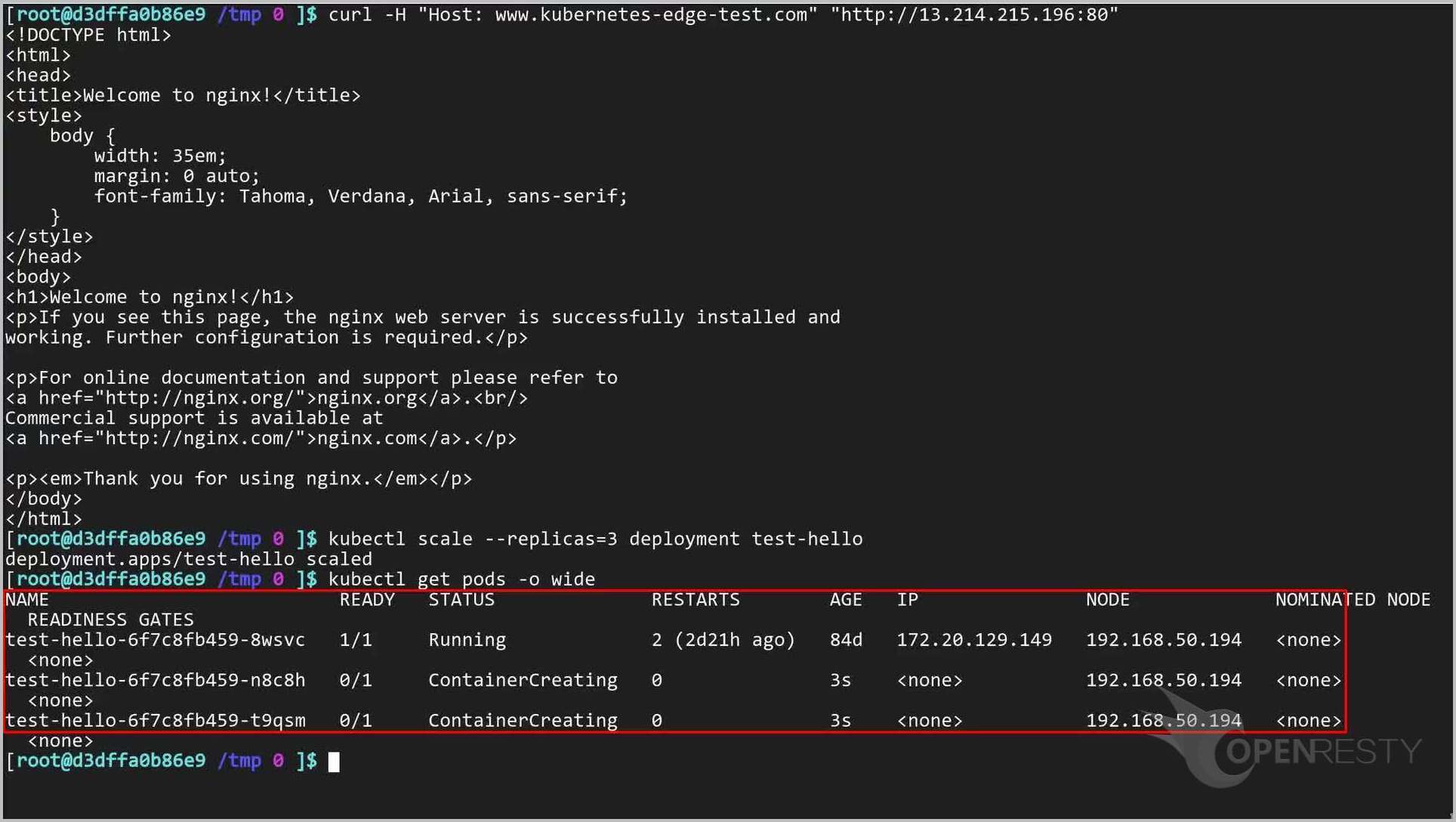

Let's check the new pods.

kubectl get pods -o wide

There are now indeed 3 pods.

Edge Admin automatically updates the Kubernetes upstream to reflect this change.
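On the Kubernetes side, the change that Edge Admin reacts to can be observed by watching the service's Endpoints object while the replica count changes. This sketch assumes the `default` namespace used in this tutorial; the scale-down target of 1 replica is an assumption, since the original replica count of `test-hello` is not given. Both helper names are hypothetical.

```shell
# Terminal 1: print the Endpoints object again each time it changes.
watch_endpoints() {
  kubectl get endpoints test-hello -n default --watch
}

# Terminal 2: scale the deployment back down (replica count is an assumption).
scale_down() {
  kubectl scale --replicas="${1:-1}" deployment test-hello
}
```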

Let's go back to the Edge application to check.

Return to the Upstreams page.

Refresh the Upstreams page to load the latest data.

As expected, the Kubernetes upstream now has 3 servers!

Likewise, when the number of pods decreases, Edge Admin updates the upstream automatically. That's all for today.

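About OpenResty Edge

OpenResty Edge is a multi-purpose gateway software designed for microservices and distributed traffic architectures, developed in-house by our company. It integrates traffic management, private CDN construction, API gateway, security protection, and other features, making it easy to build, manage, and protect modern applications. OpenResty Edge offers industry-leading performance and scalability and can meet the demanding requirements of high-concurrency, high-load scenarios. It supports traffic scheduling for container applications such as K8s and can manage a large number of domain names, easily meeting the needs of large websites and complex applications.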

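About the Author

Yichun Zhang (章亦春) is the creator of the open-source OpenResty® project and the CEO and founder of OpenResty Inc.

Yichun Zhang (GitHub ID: agentzh) was born in Jiangsu, China, and now lives in the Bay Area in the US. He is one of the early advocates and leaders of open-source technology and culture in China and has worked for internationally renowned tech companies such as Cloudflare, Yahoo!, and Alibaba. He is a pioneer of "edge computing", "dynamic tracing", and "machine coding", with over 22 years of programming experience and over 16 years of open-source experience. As the leader of an open-source project used by more than 40 million domain names worldwide, he founded the high-tech company OpenResty Inc. in the heart of Silicon Valley, based on the OpenResty® open-source project. The company's flagship products are OpenResty XRay (a non-invasive fault analysis and troubleshooting tool based on dynamic tracing technology) and OpenResty Edge (a multi-purpose gateway software optimized for microservices and distributed traffic).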

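Translations

This article is available in the original English and in a Japanese translation. Readers are welcome to translate it into other languages; as long as a translation is complete with nothing omitted, we will consider adopting it. Thank you very much!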