Enabling HTTP Caching in OpenResty Edge

Today I'd like to show you how to enable HTTP response caching in OpenResty Edge.

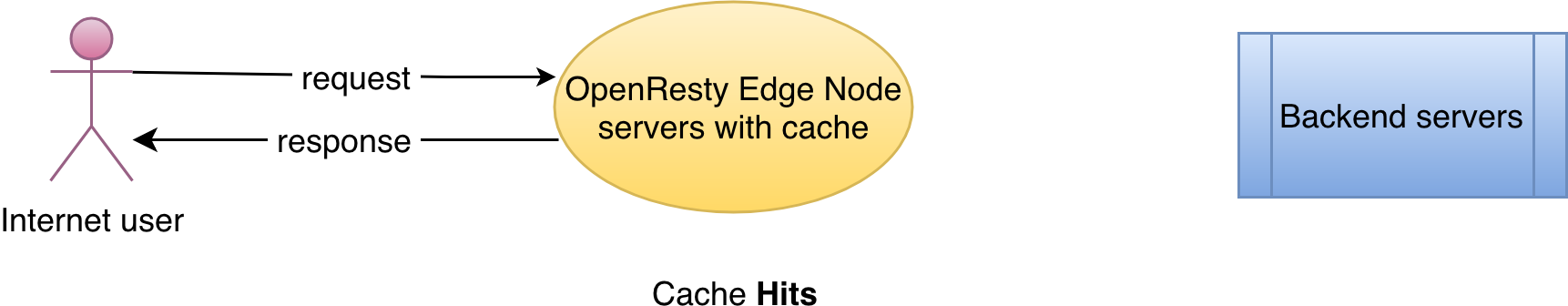

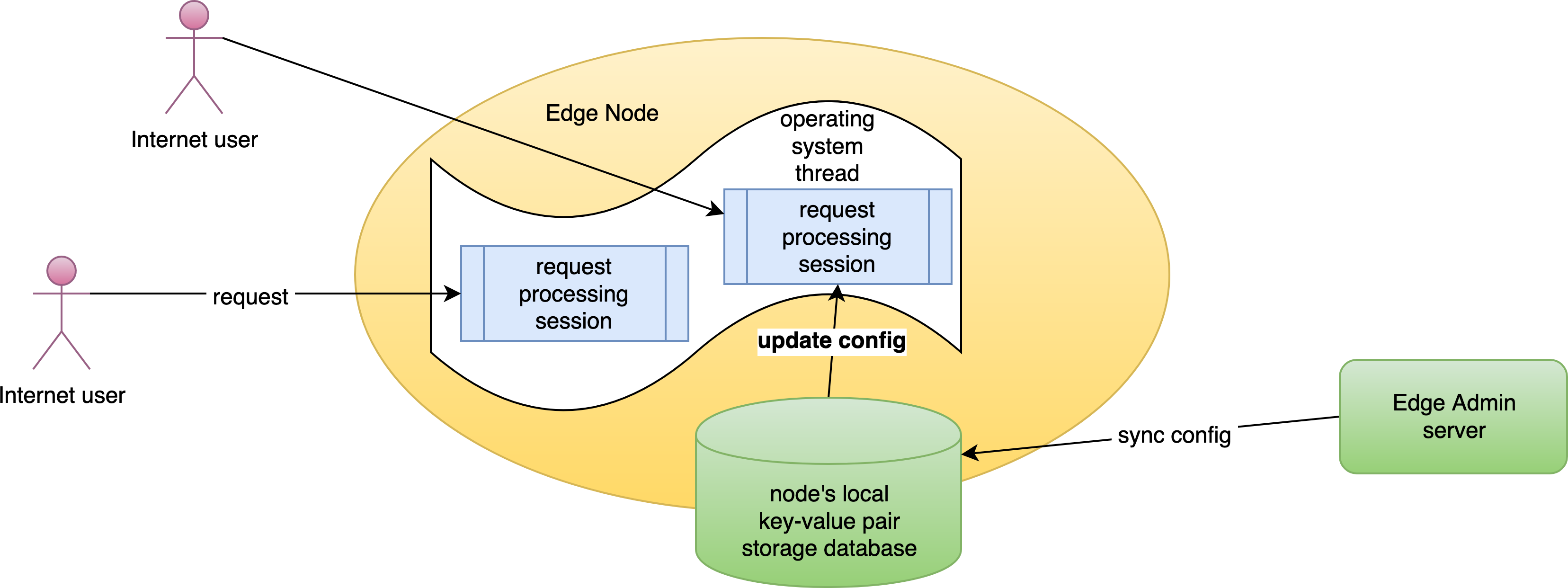

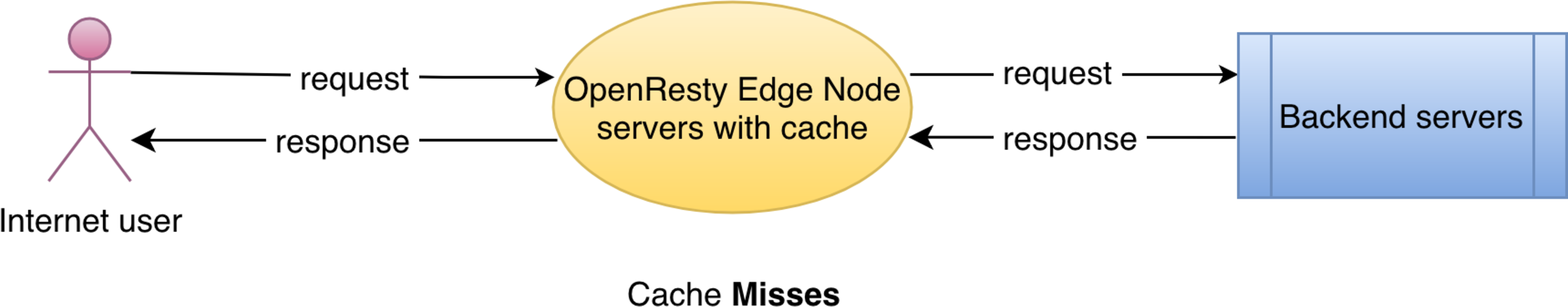

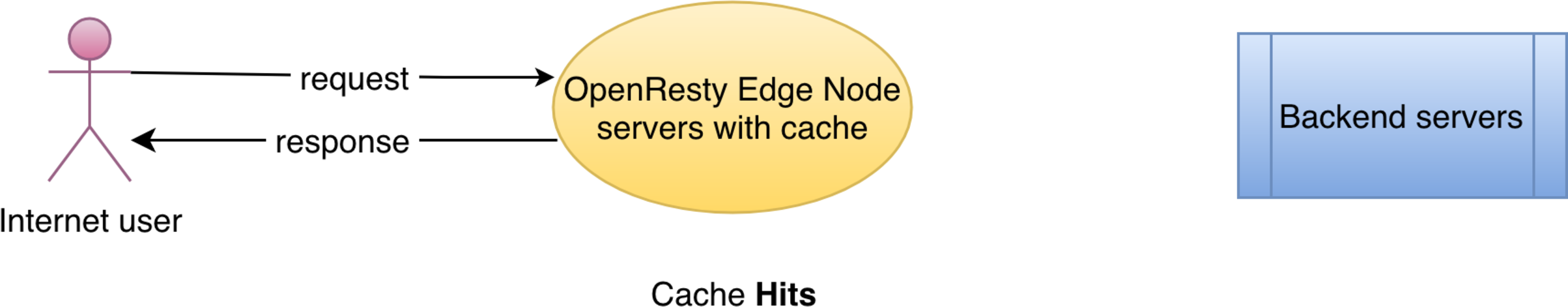

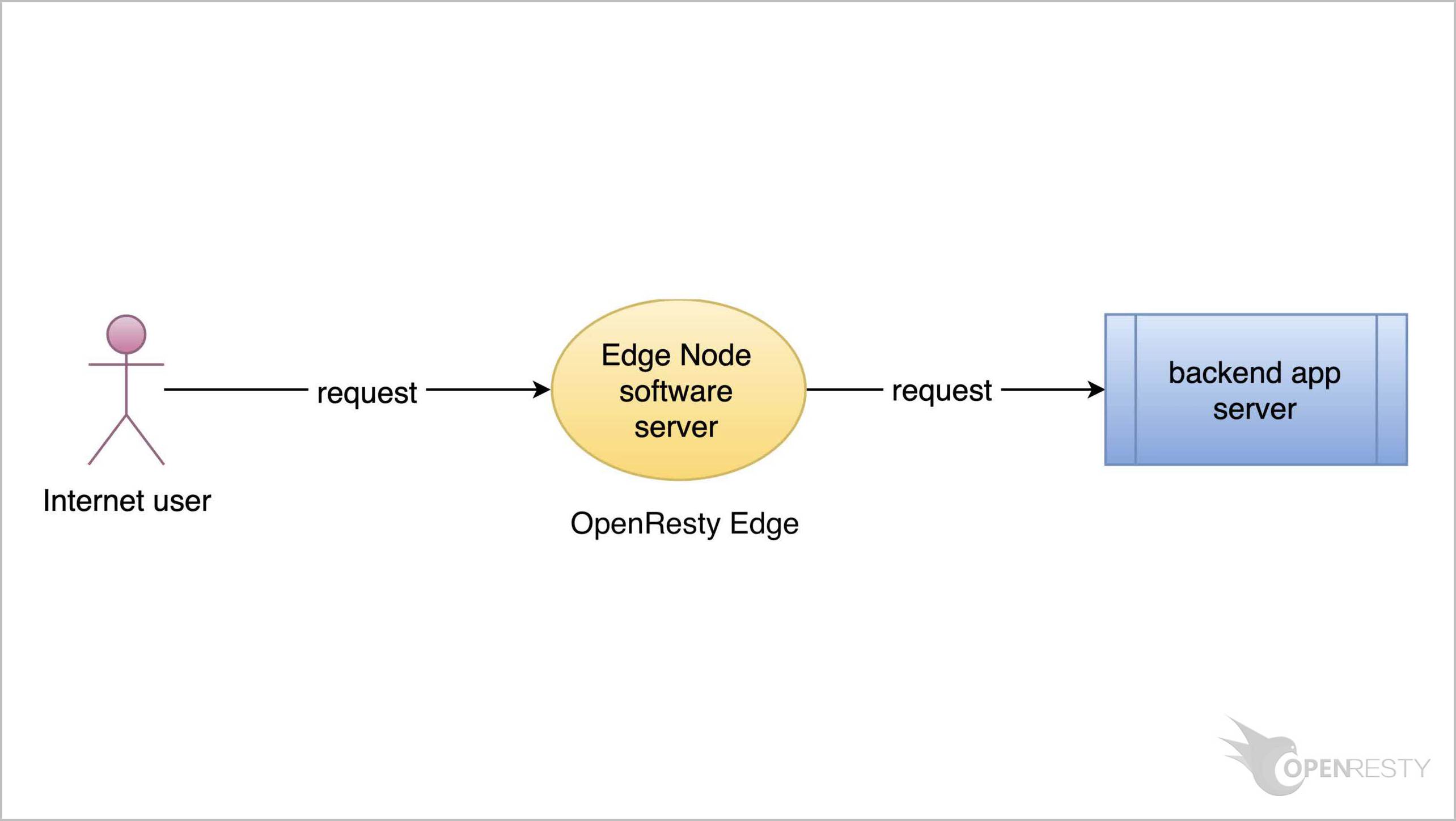

When a client request hits the cache on an Edge Node server, no request to the backend server is needed. This reduces response latency and saves network bandwidth.

Enabling the proxy cache for an application

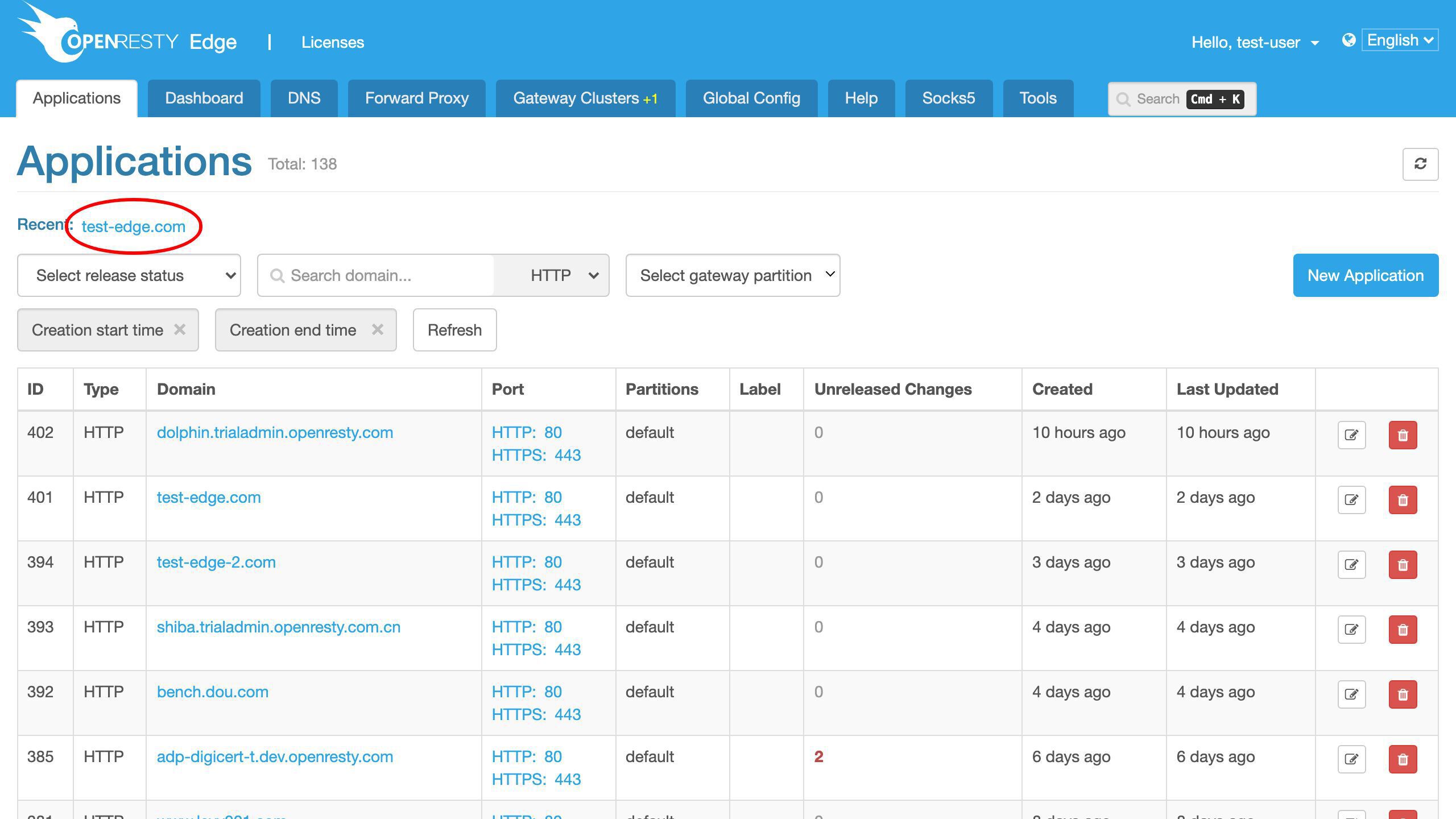

Let's go to the OpenResty Edge Admin web console. This is a sample deployment of the console; each user has their own local deployment.

We'll keep using the sample application test-edge.com from the previous tutorial.

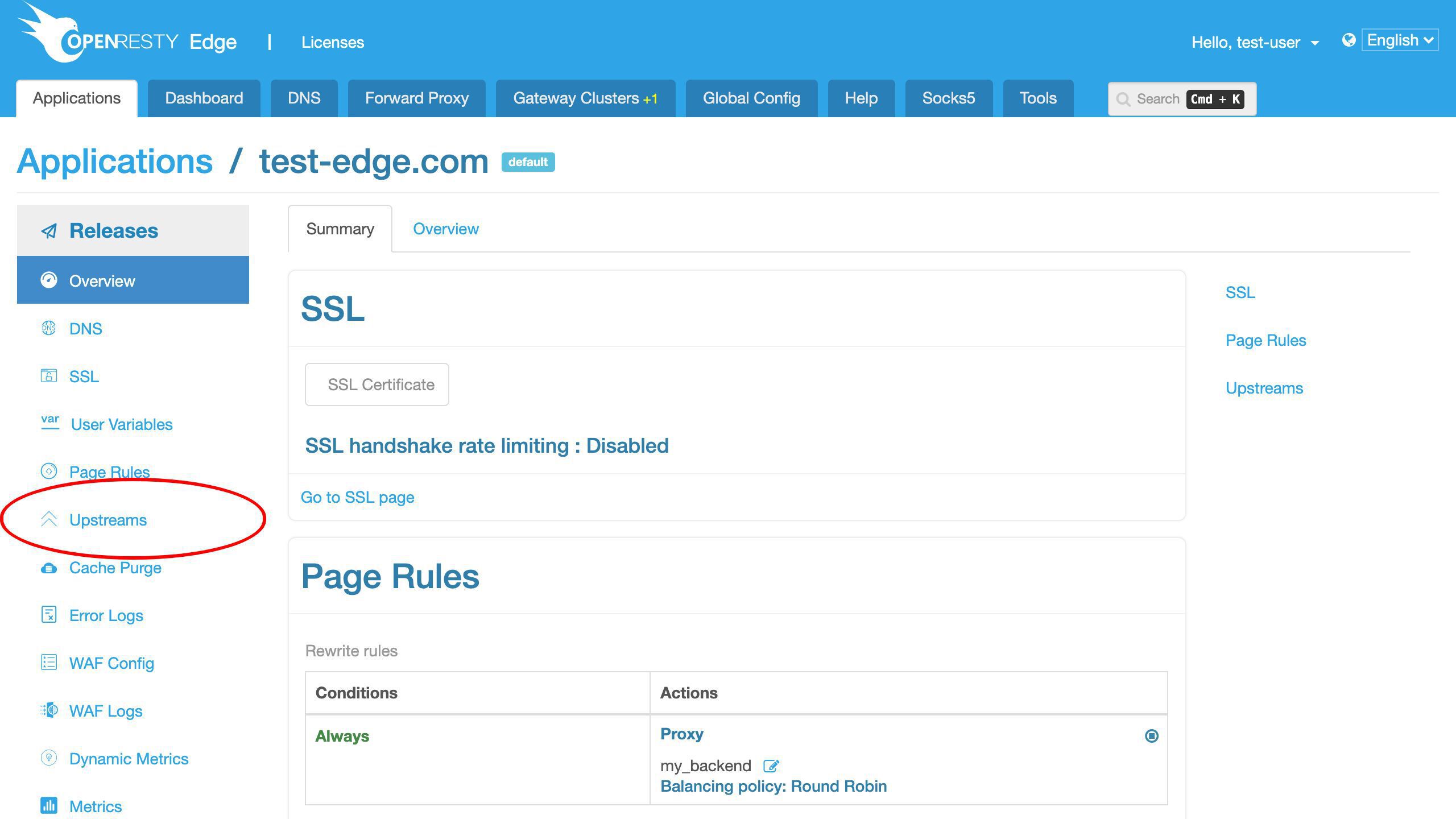

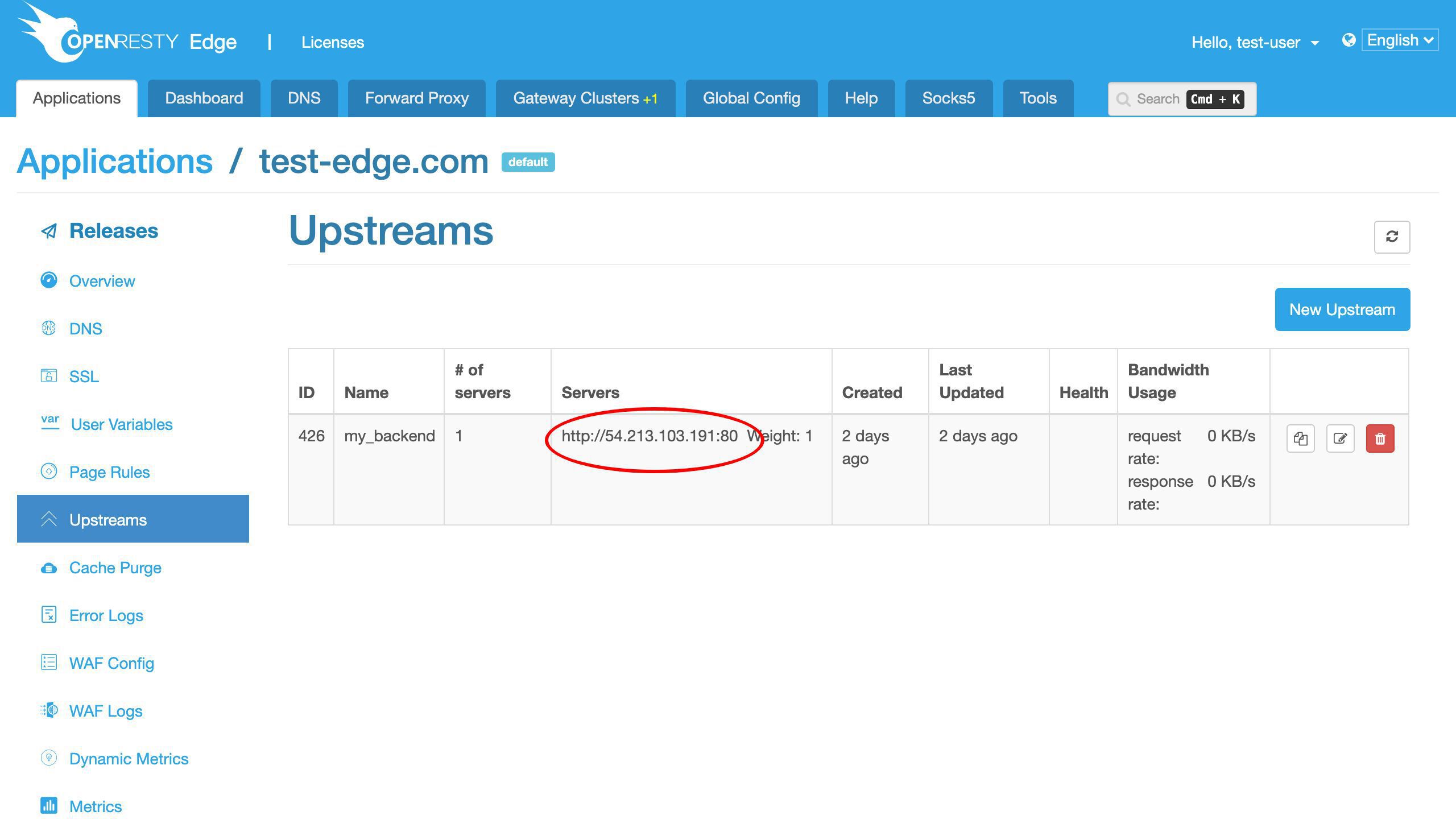

We have already defined an upstream.

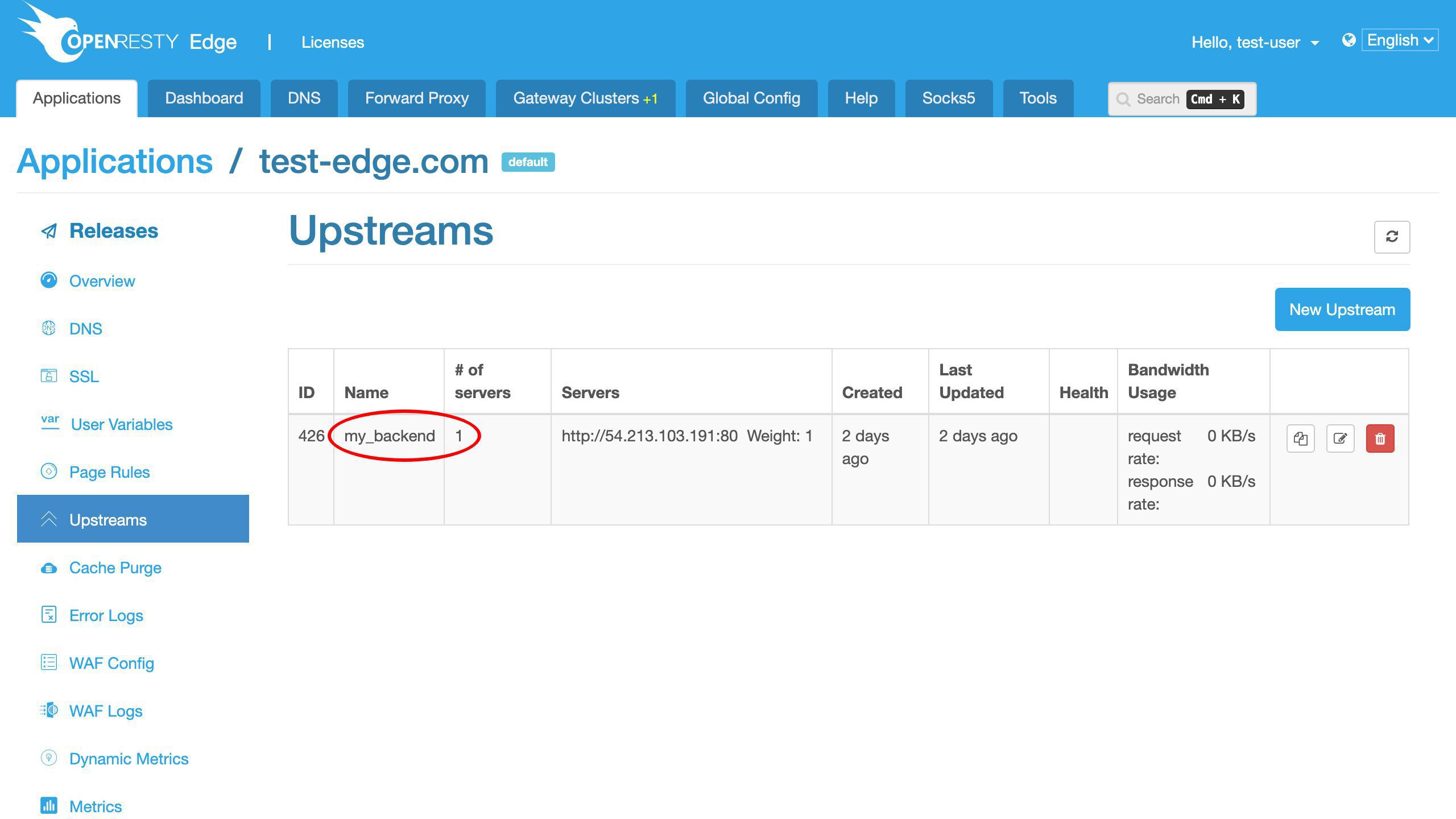

This my_backend upstream has a single backend server.

Note that the backend server's IP address ends with 191. We will use this IP address later.

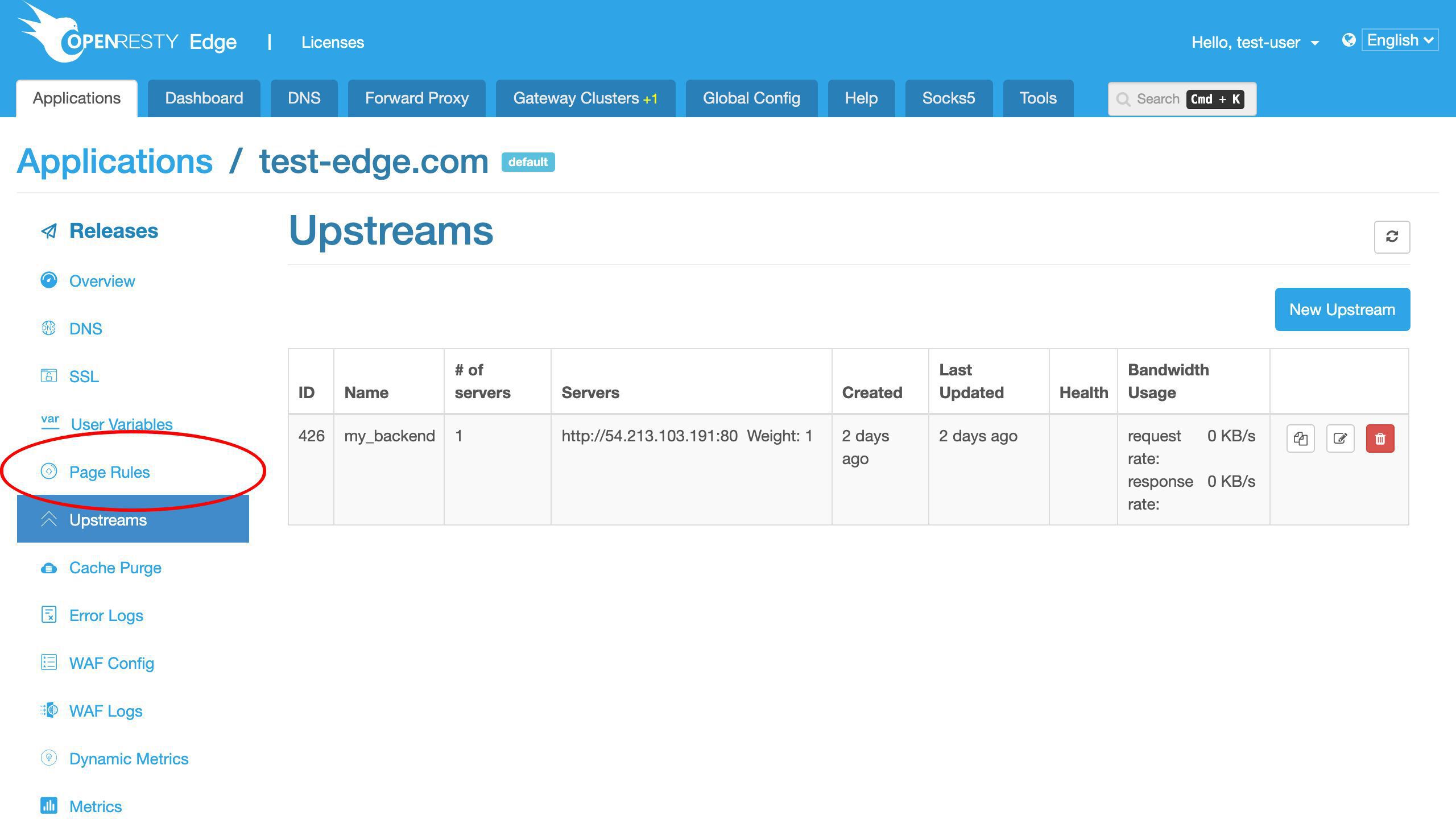

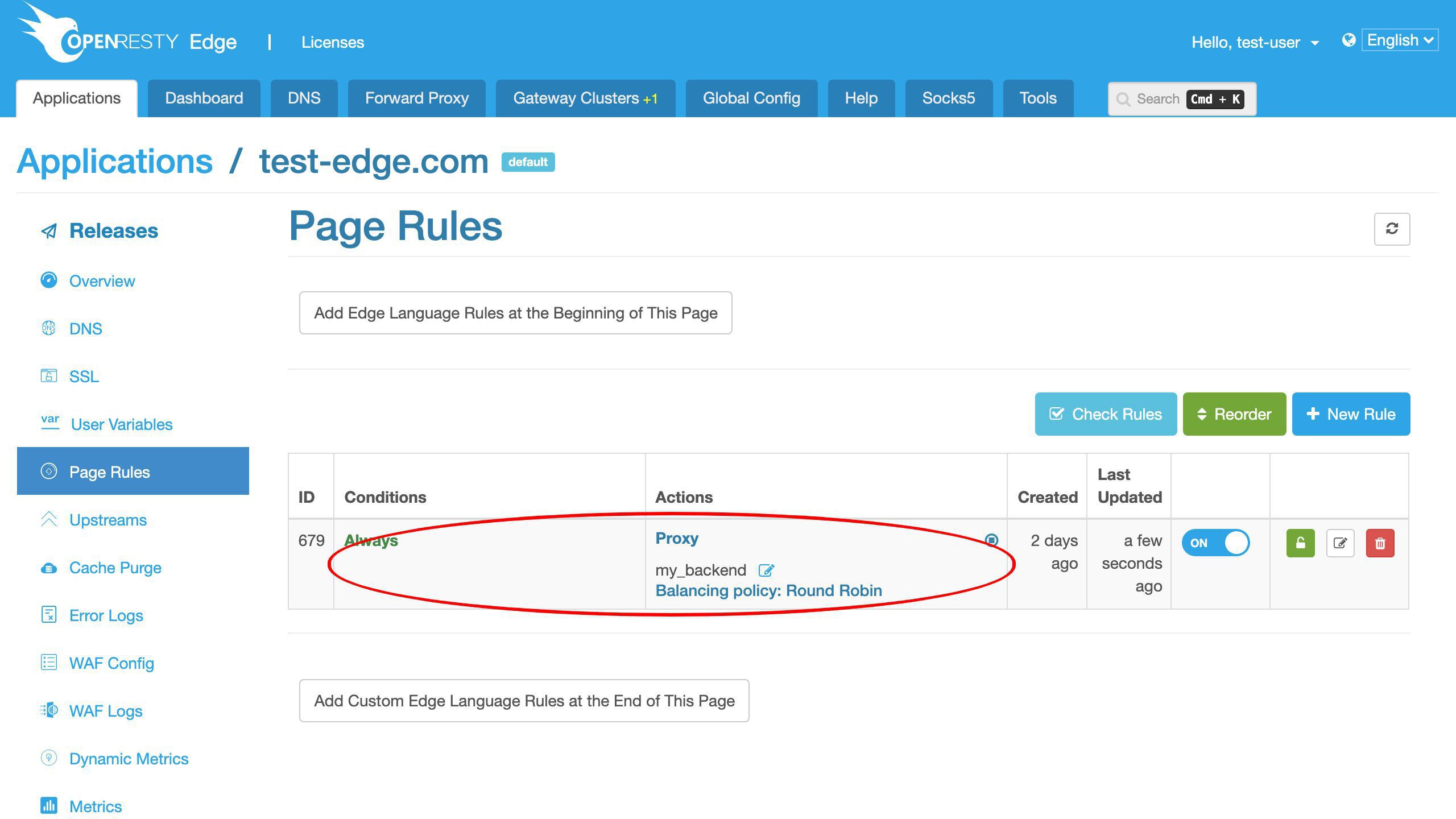

We have also already defined a page rule.

This page rule sets up a reverse proxy pointing to this upstream.

As you can see, this page rule does not enable the proxy cache yet.

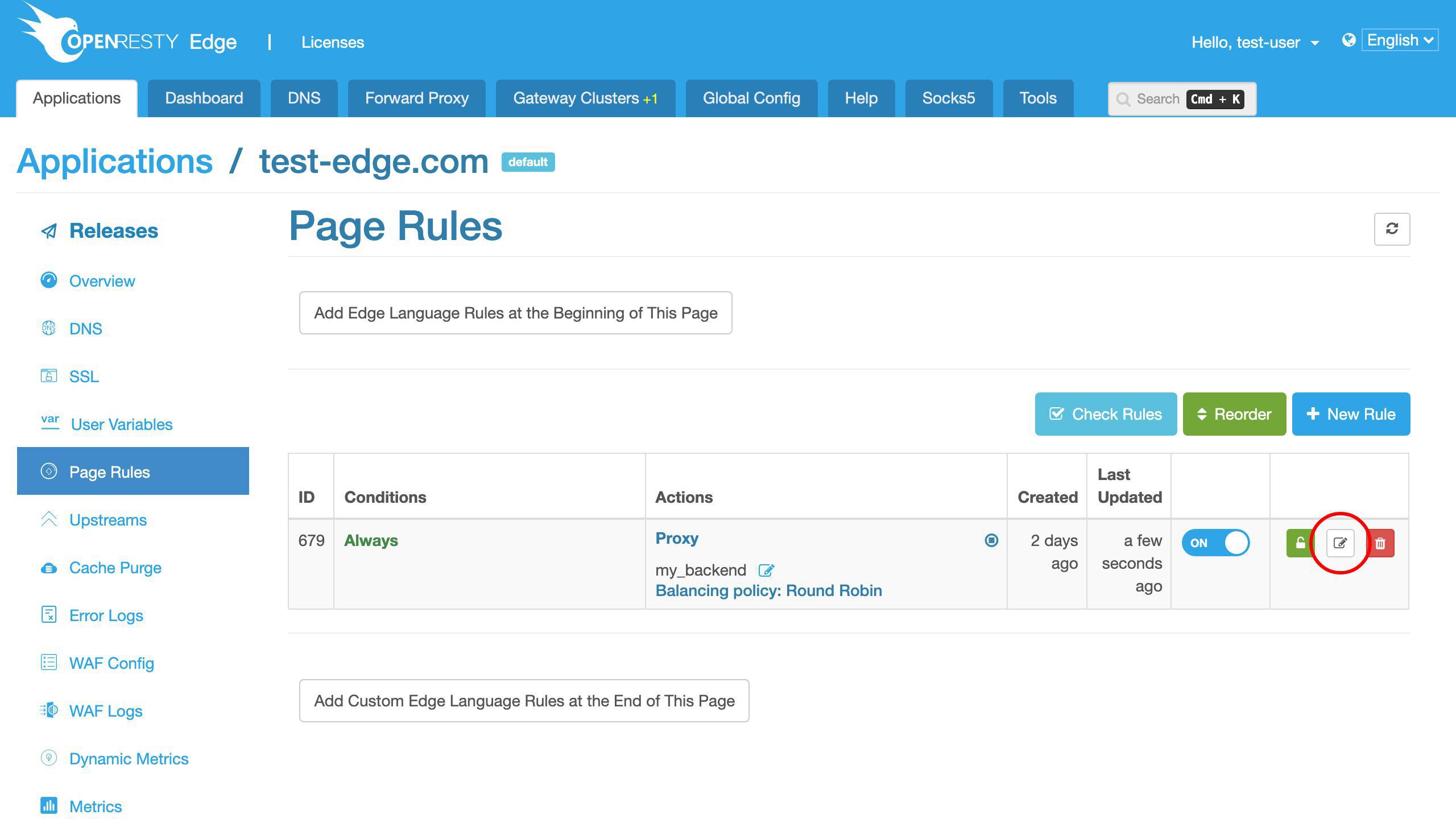

Now let's edit this page rule to add response caching to the gateway.

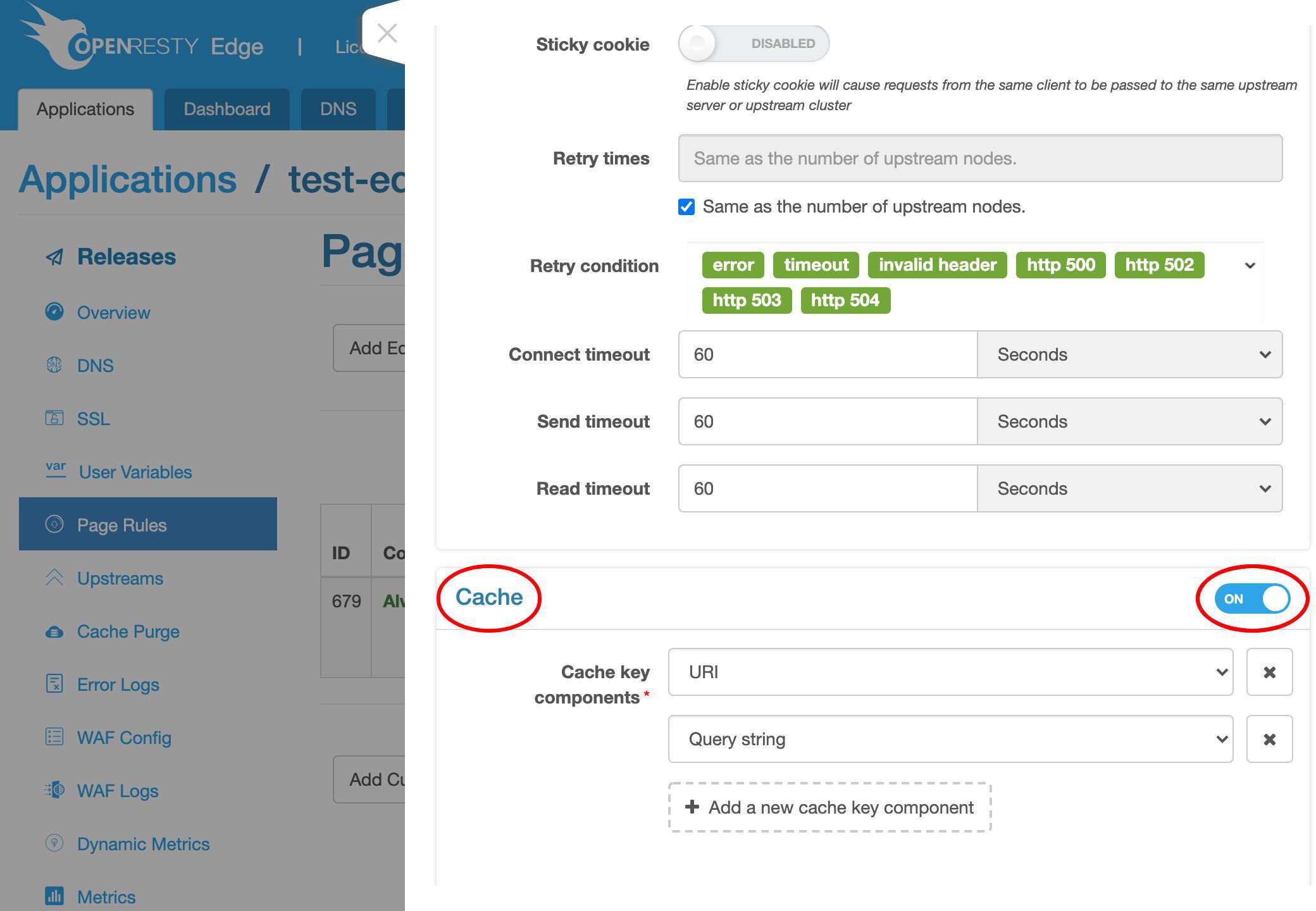

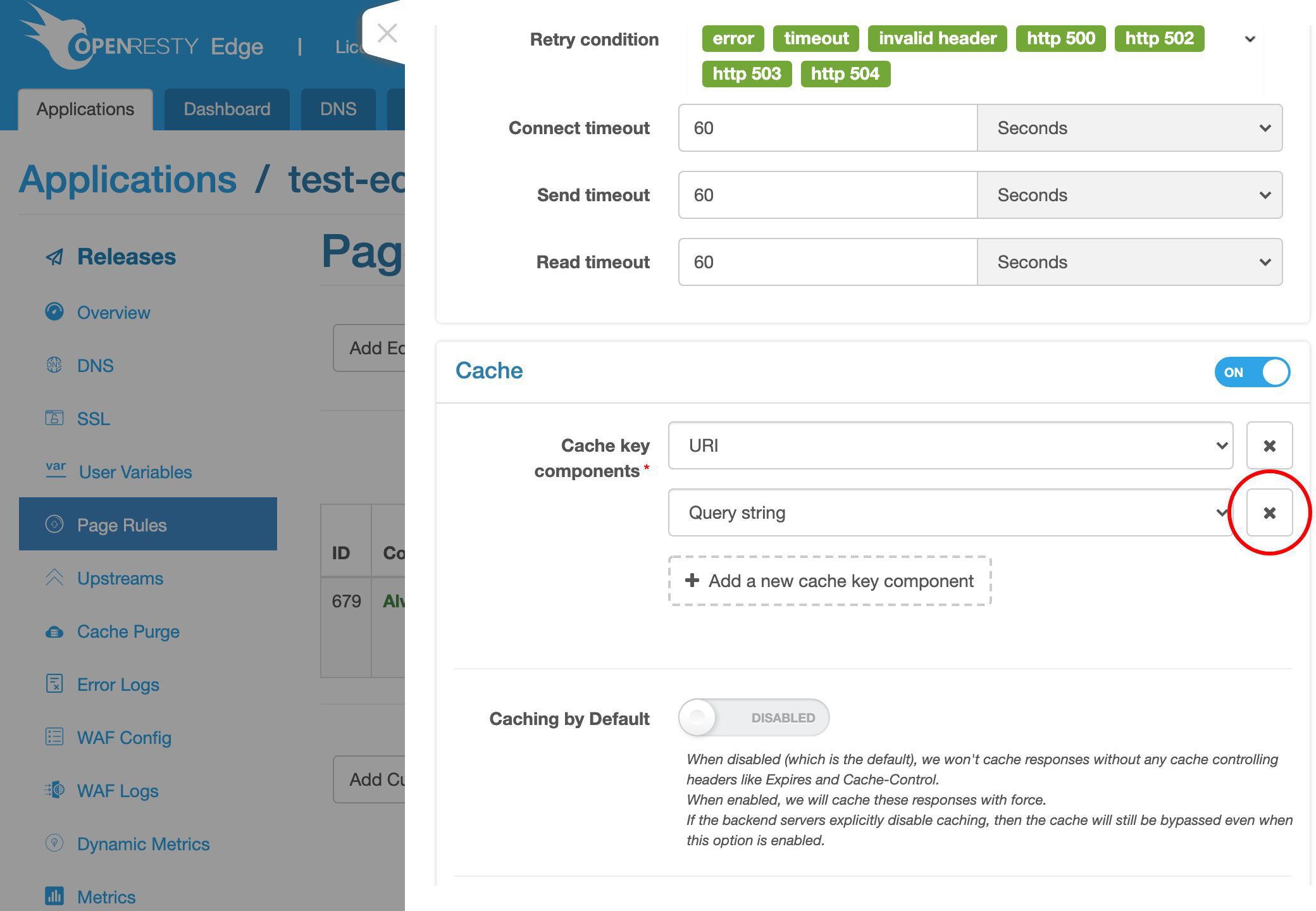

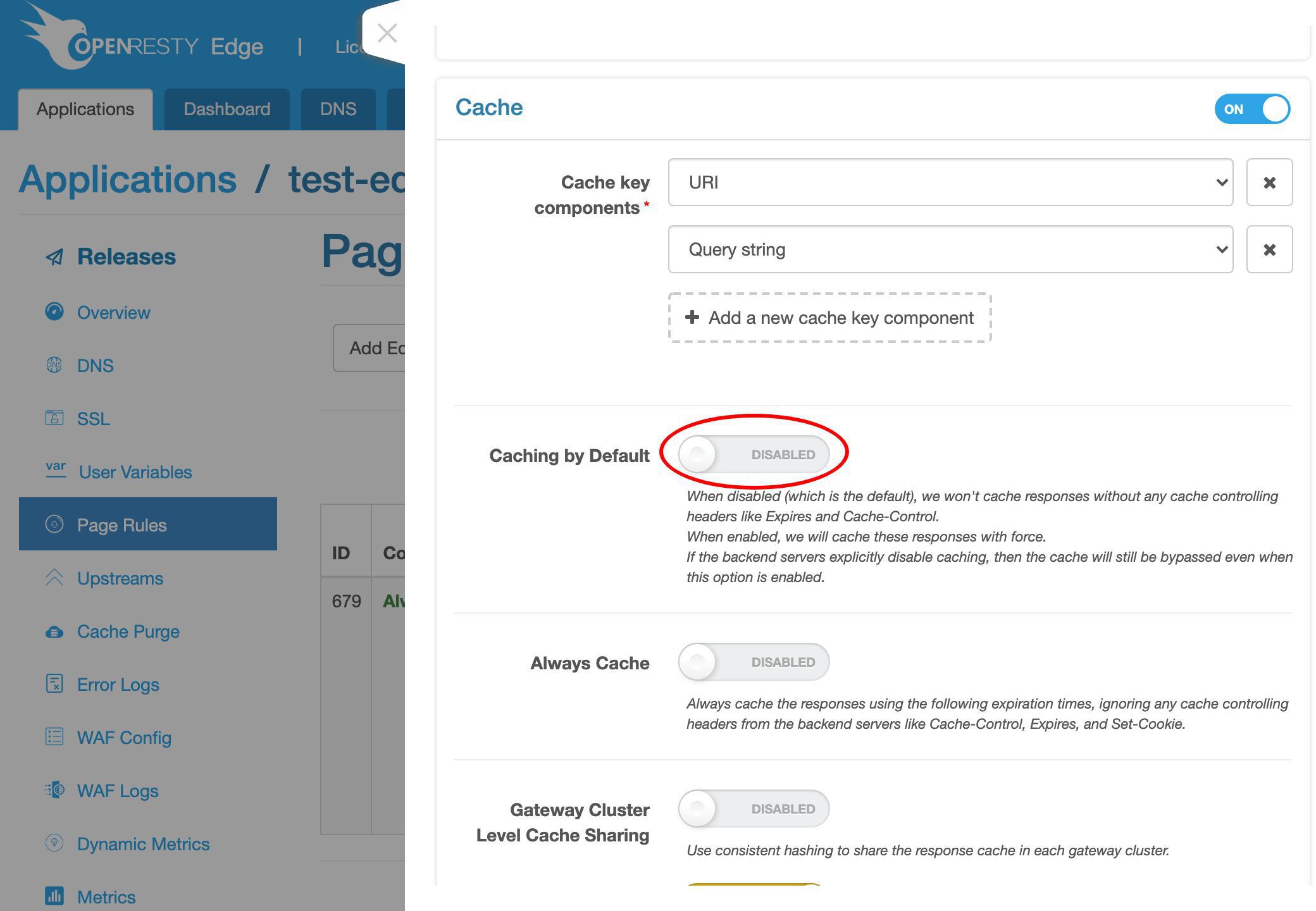

Enable the proxy cache.

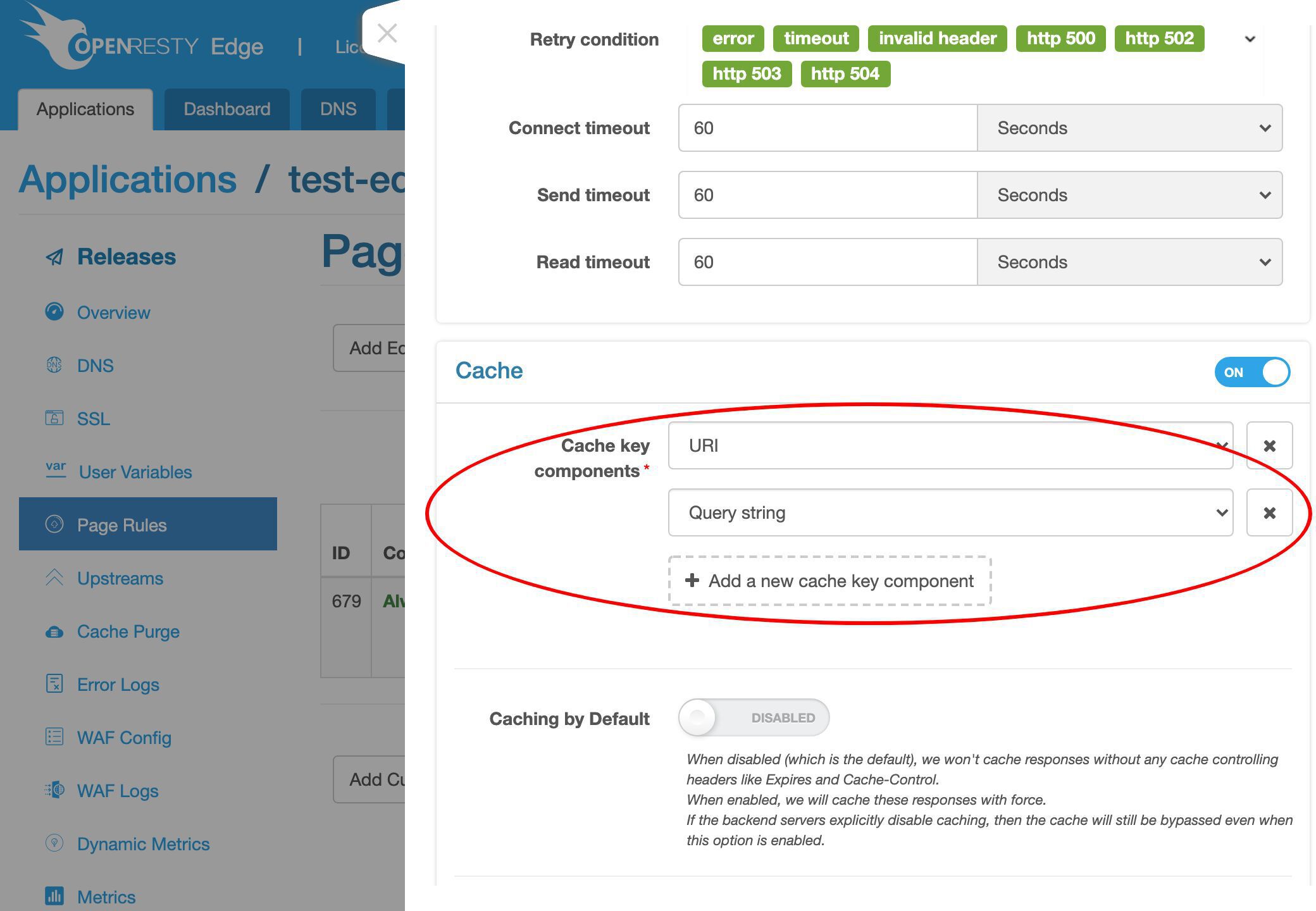

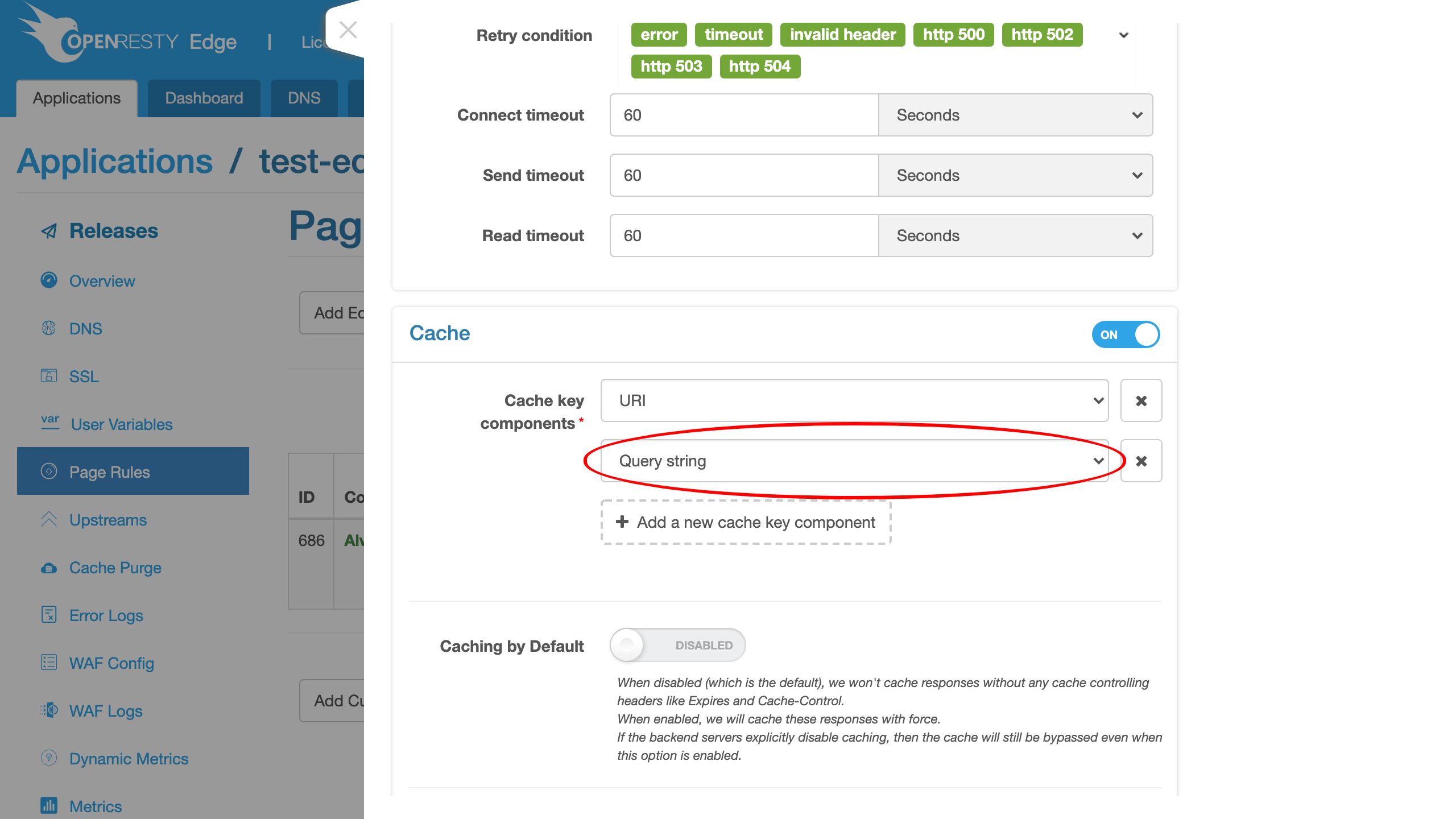

Here we can configure the cache key.

By default, the cache key consists of two parts: the URI and the query string (the URI arguments).

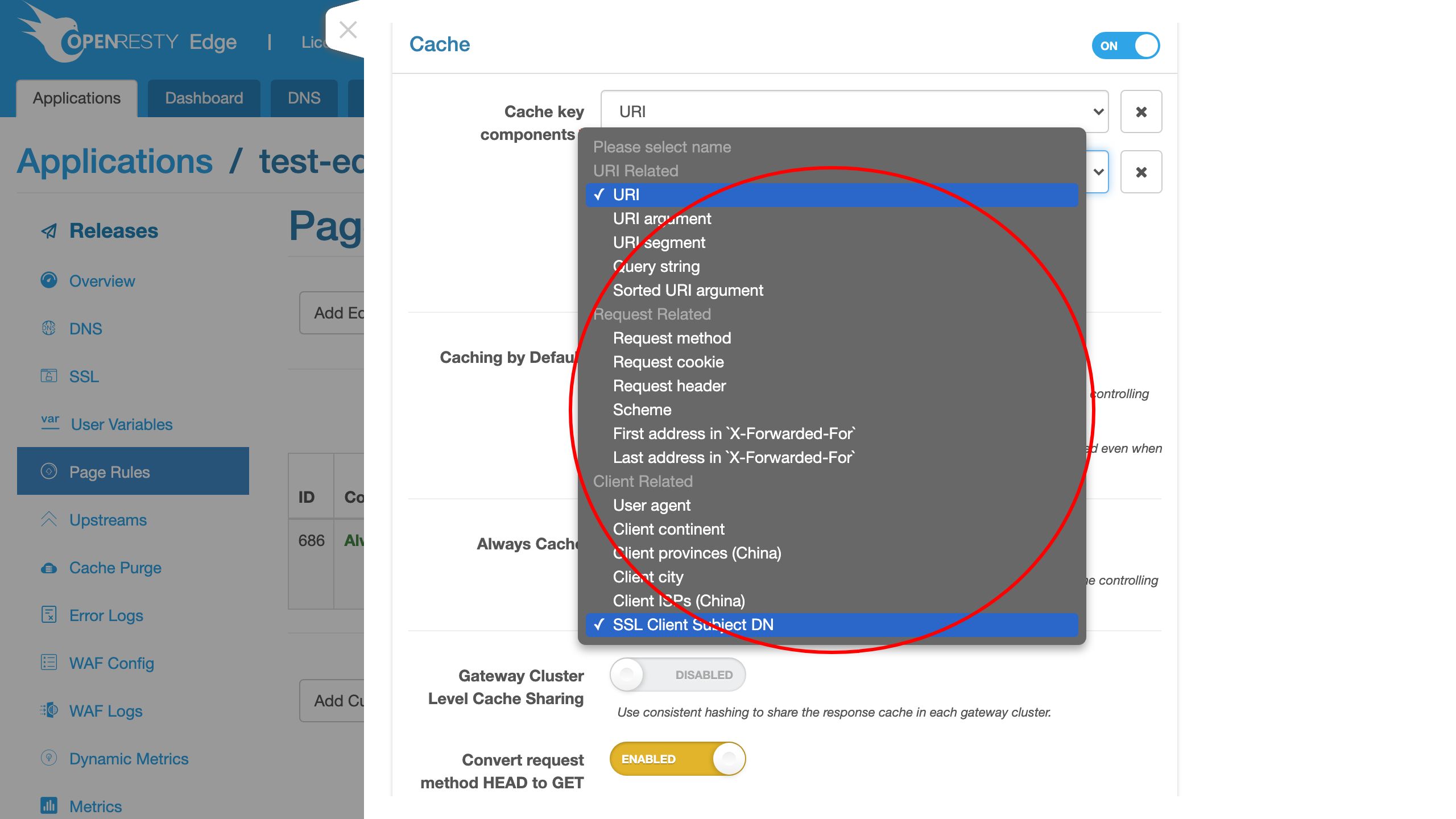

You can also choose other kinds of key components.

Or you can remove the query string from the key entirely.

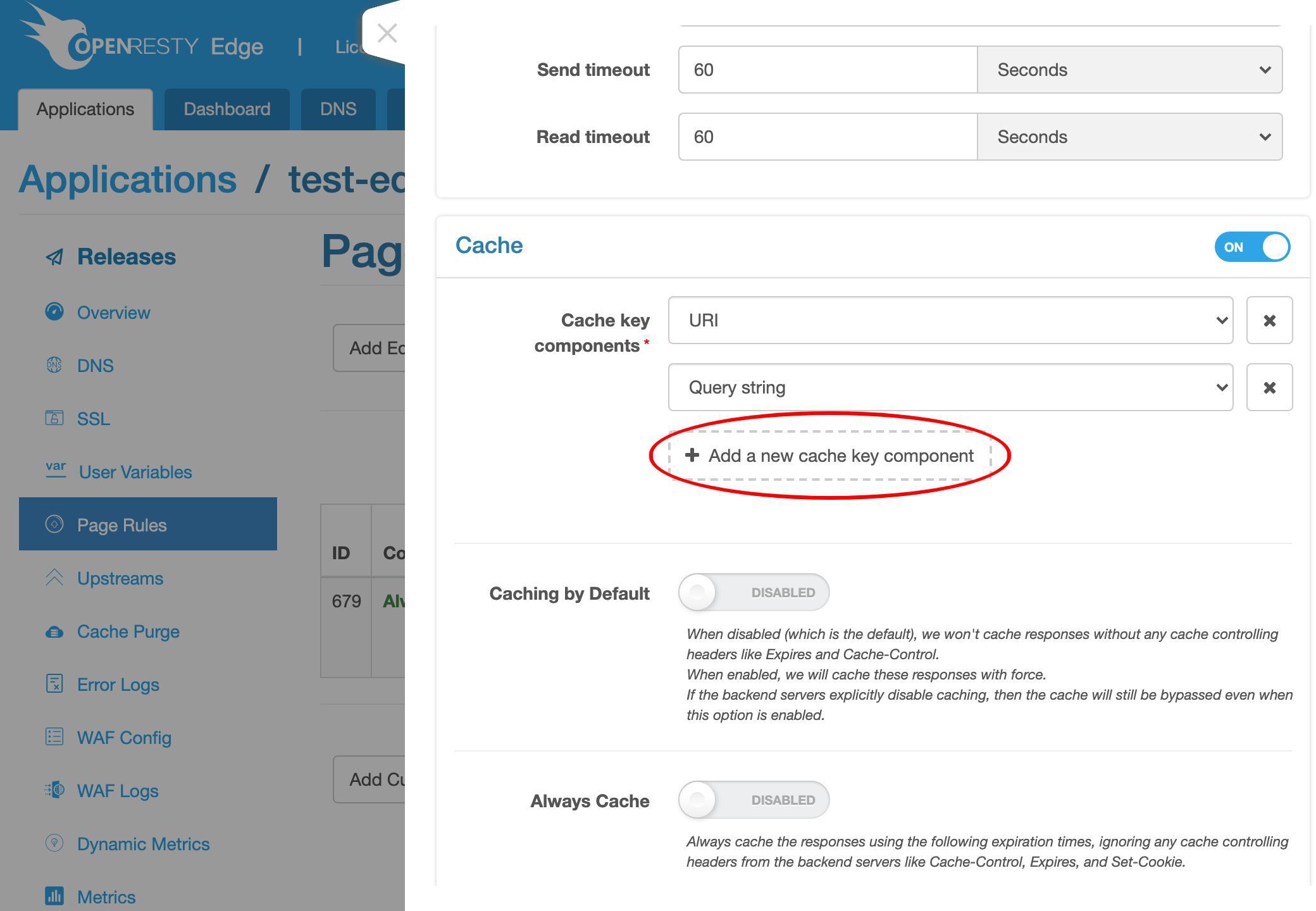

You can also add more key components.

In this example, we'll just keep the default cache key definition.
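As a rough illustration of the default key (URI plus query string) and of the option to drop the query string, here is a minimal Python sketch. The function name cache_key and the exact key string format are hypothetical; the article does not show how OpenResty Edge serializes its keys internally.

```python
from urllib.parse import urlsplit


def cache_key(request_target: str, include_query: bool = True) -> str:
    """Build a cache key from the URI path and, optionally, the query string.

    Hypothetical model of the default key (URI + query string); the real key
    format used by OpenResty Edge is not documented in this article.
    """
    parts = urlsplit(request_target)
    key = parts.path or "/"
    # Append the raw query string only when it is part of the key definition.
    if include_query and parts.query:
        key += "?" + parts.query
    return key
```

With the default definition, cache_key("/") and cache_key("/?a=3") give different keys, so they are cached separately; with include_query=False both collapse to "/".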

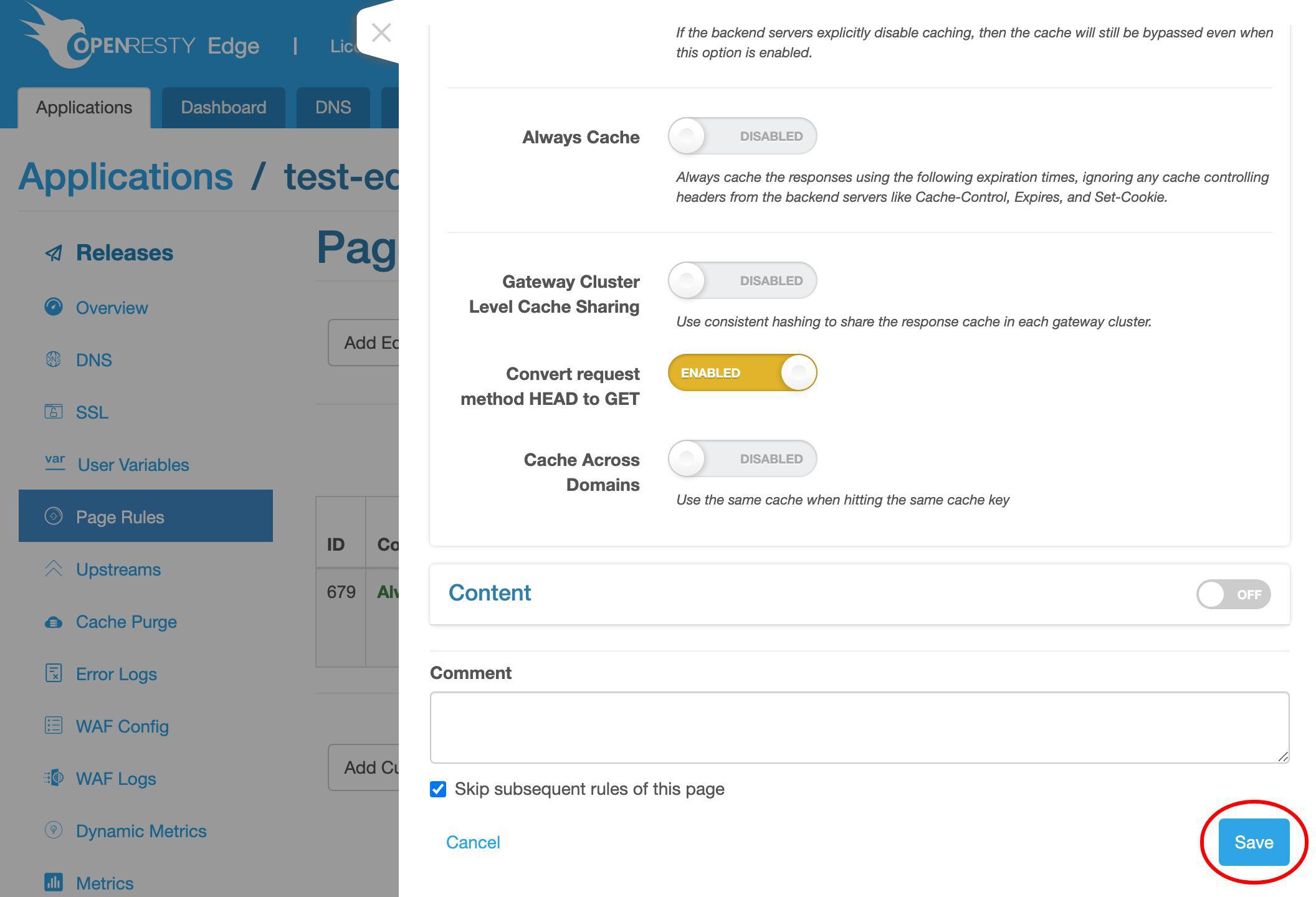

Save this page rule.

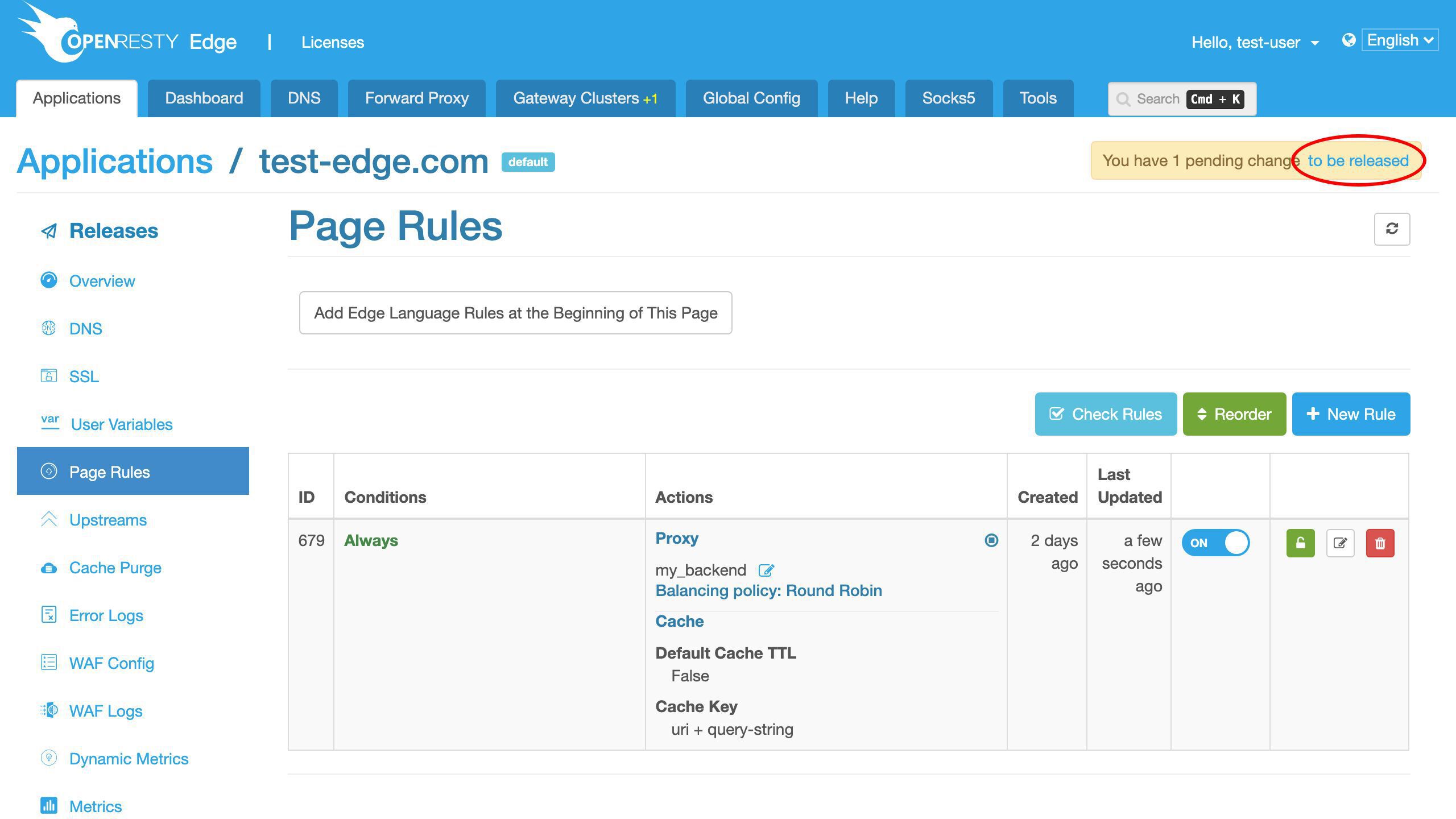

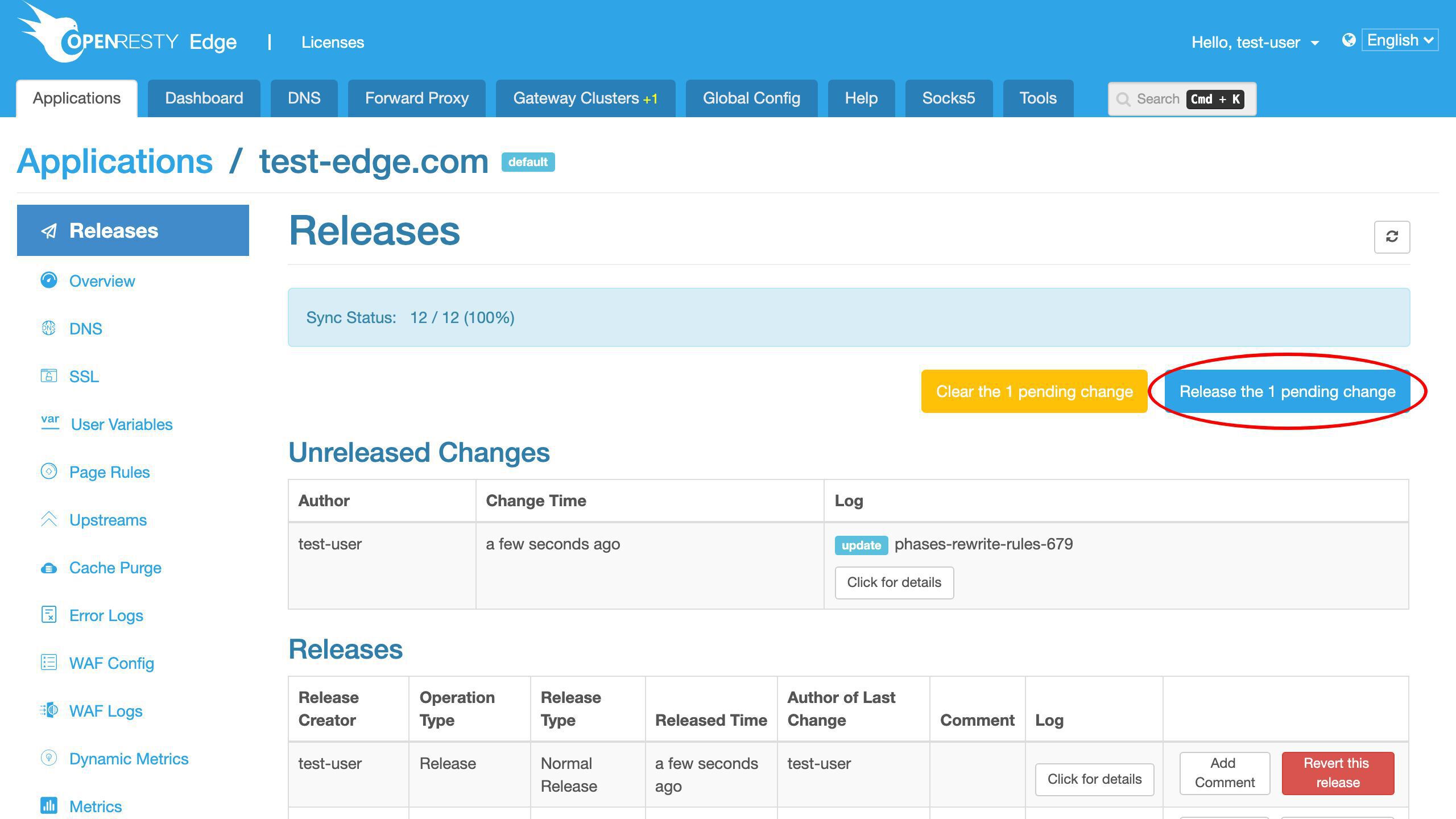

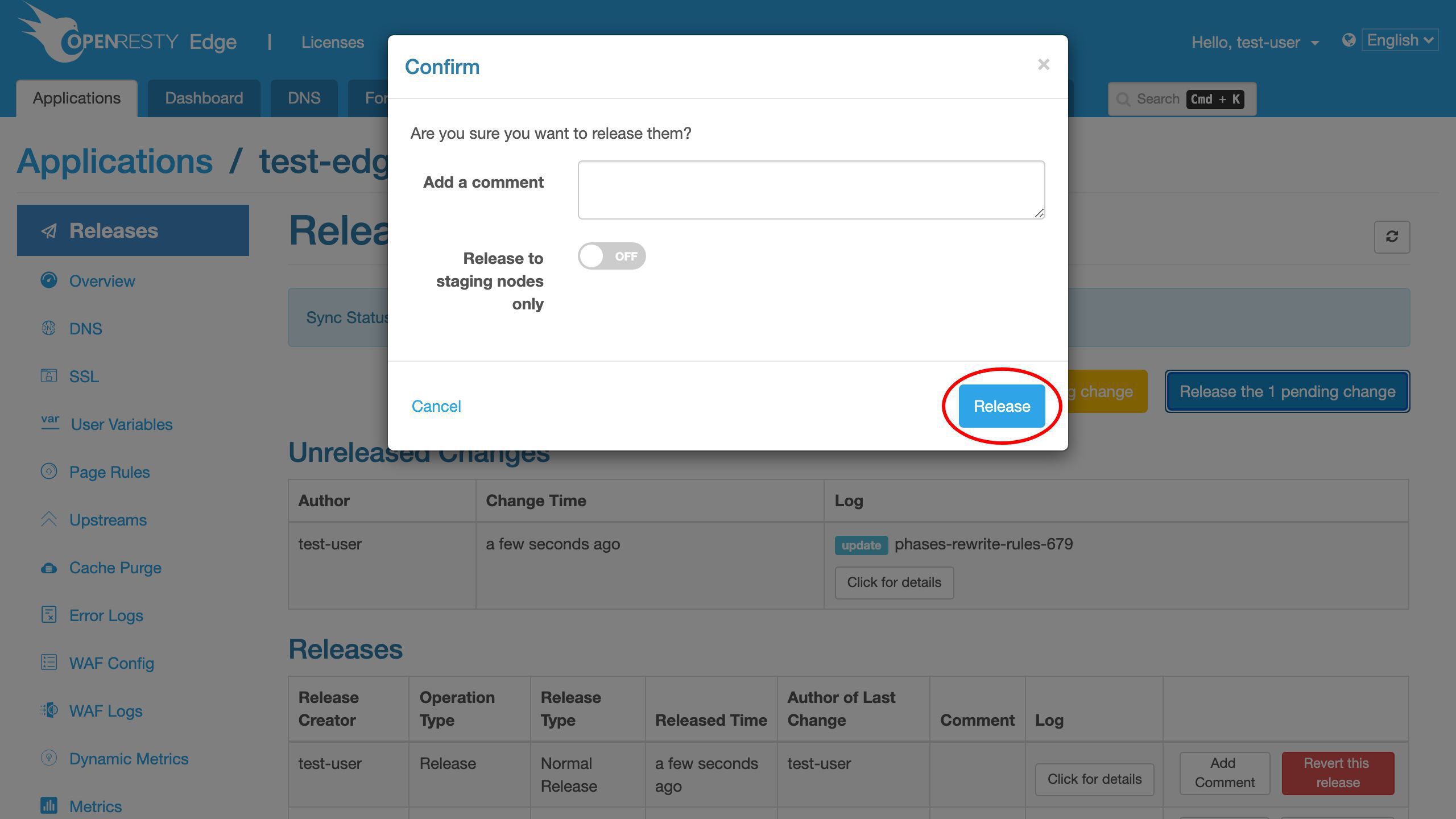

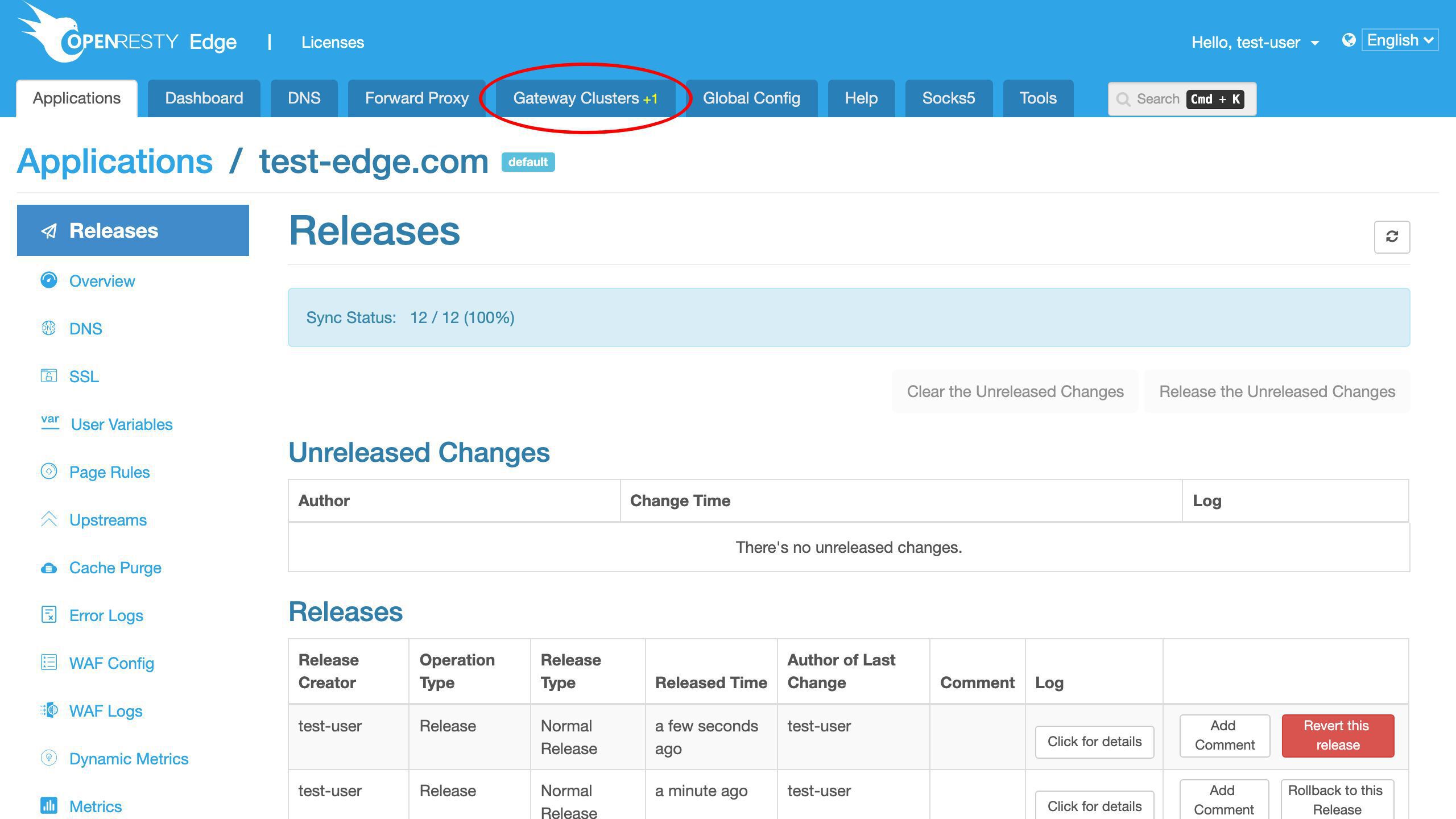

Let's release the new configuration.

Push the changes we just made.

Release!

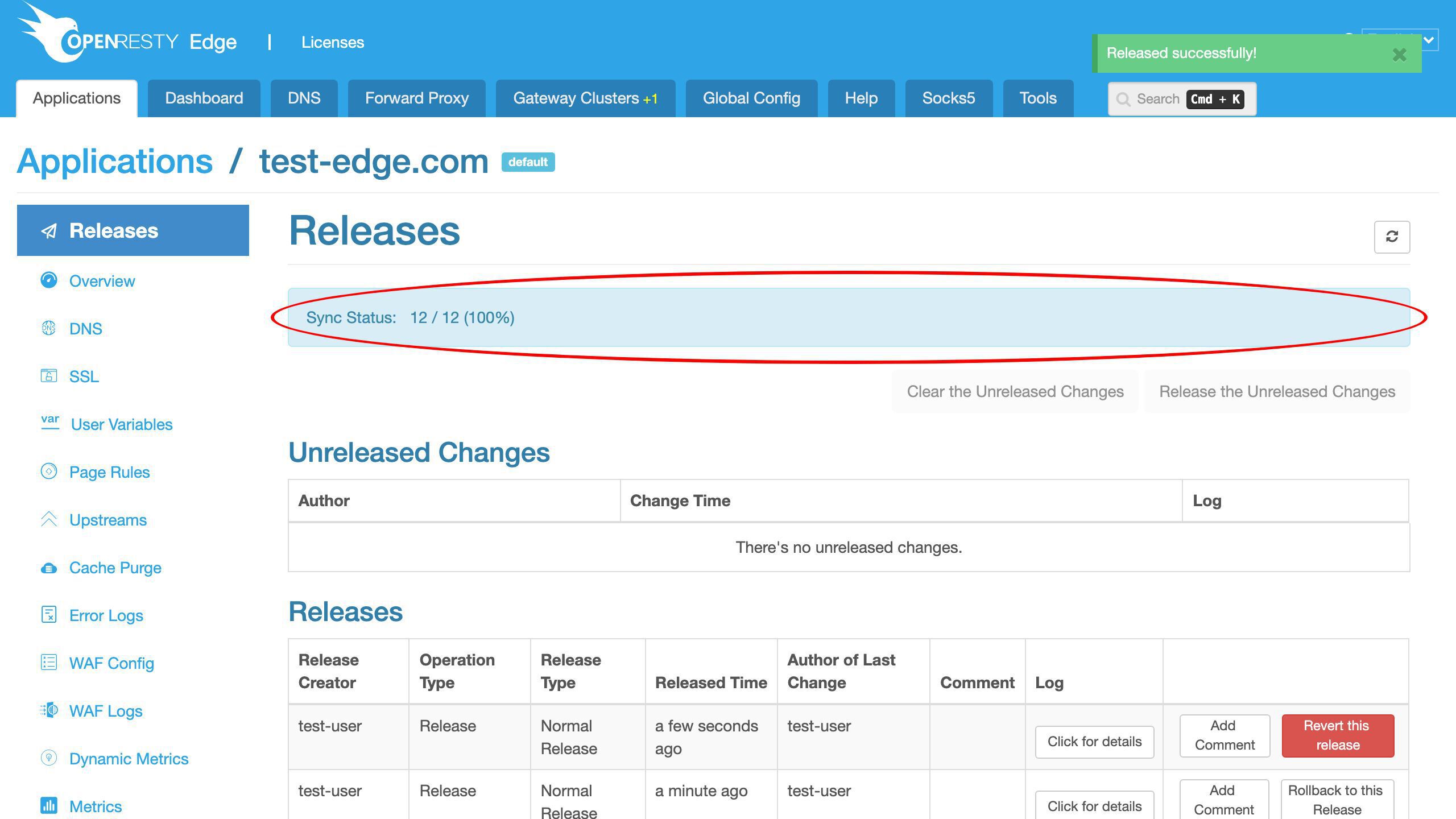

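The new version of the configuration has been synchronized to all the gateway servers.

Configuration changes do not require a server reload, restart, or binary upgrade of the server processes, which makes releasing them very efficient.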

Testing the proxy cache

Let's test the cache on the gateway servers.

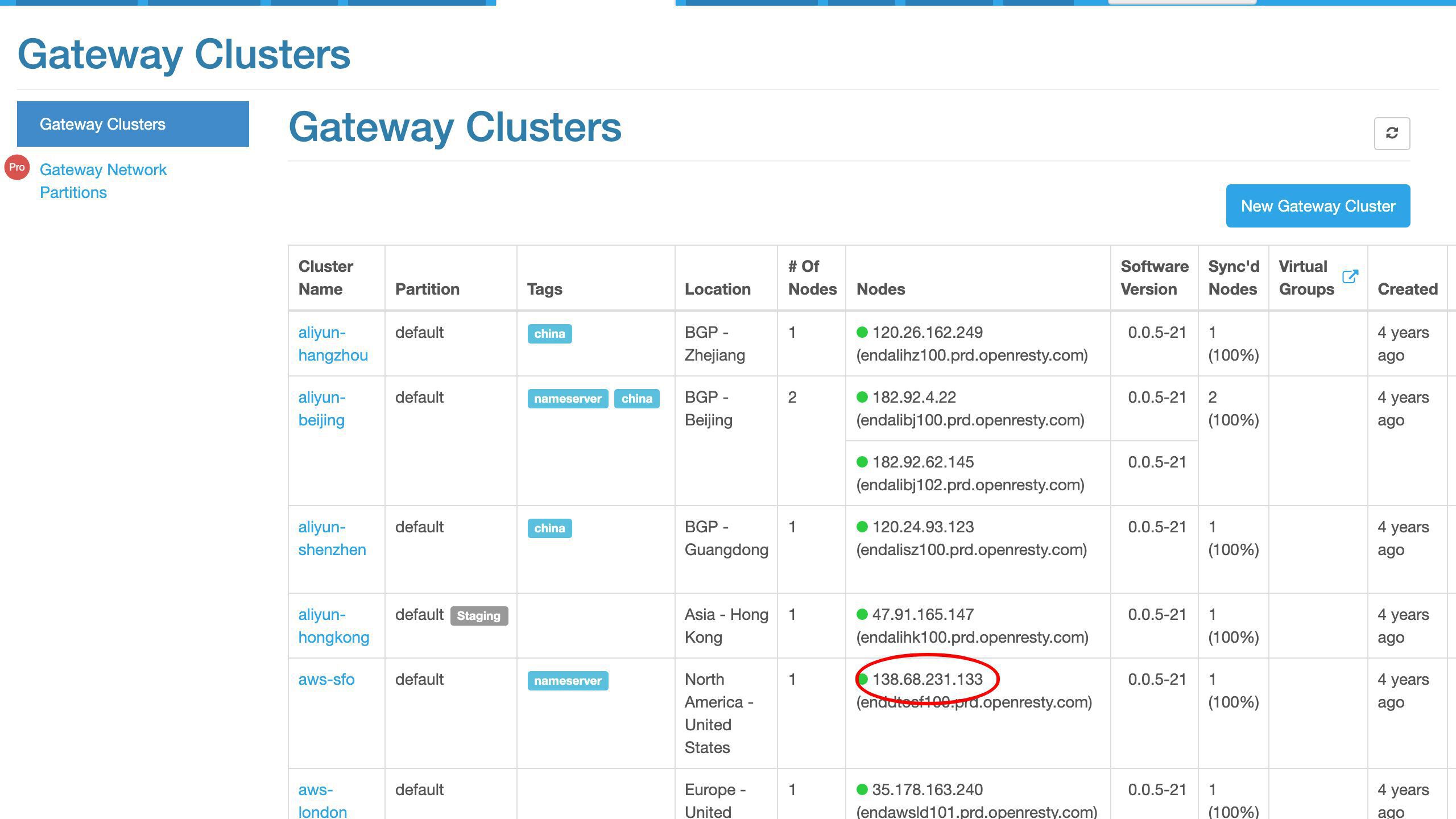

Copy the IP address of this gateway server in San Francisco.

Note that the last number of this IP address is 133.

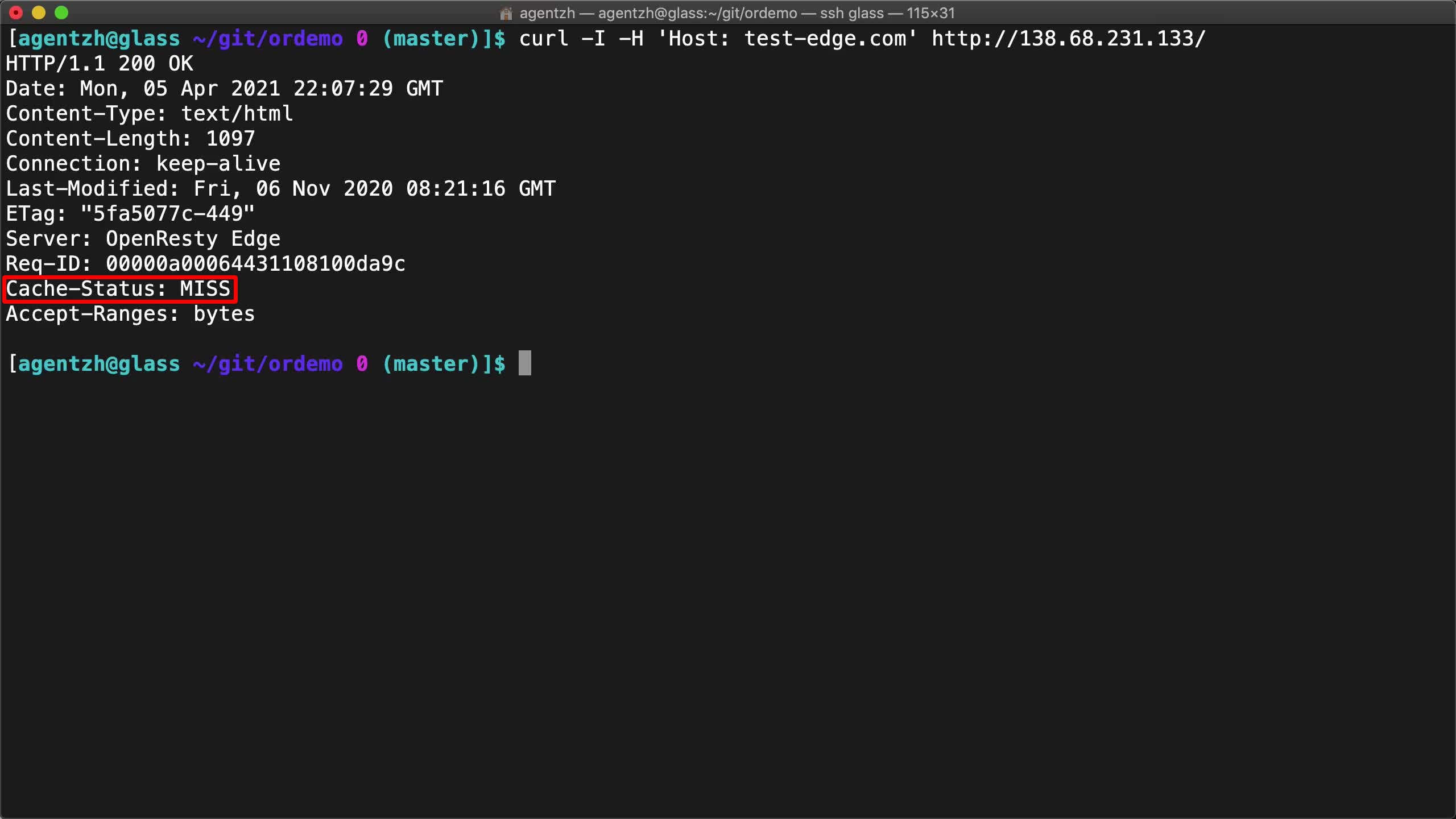

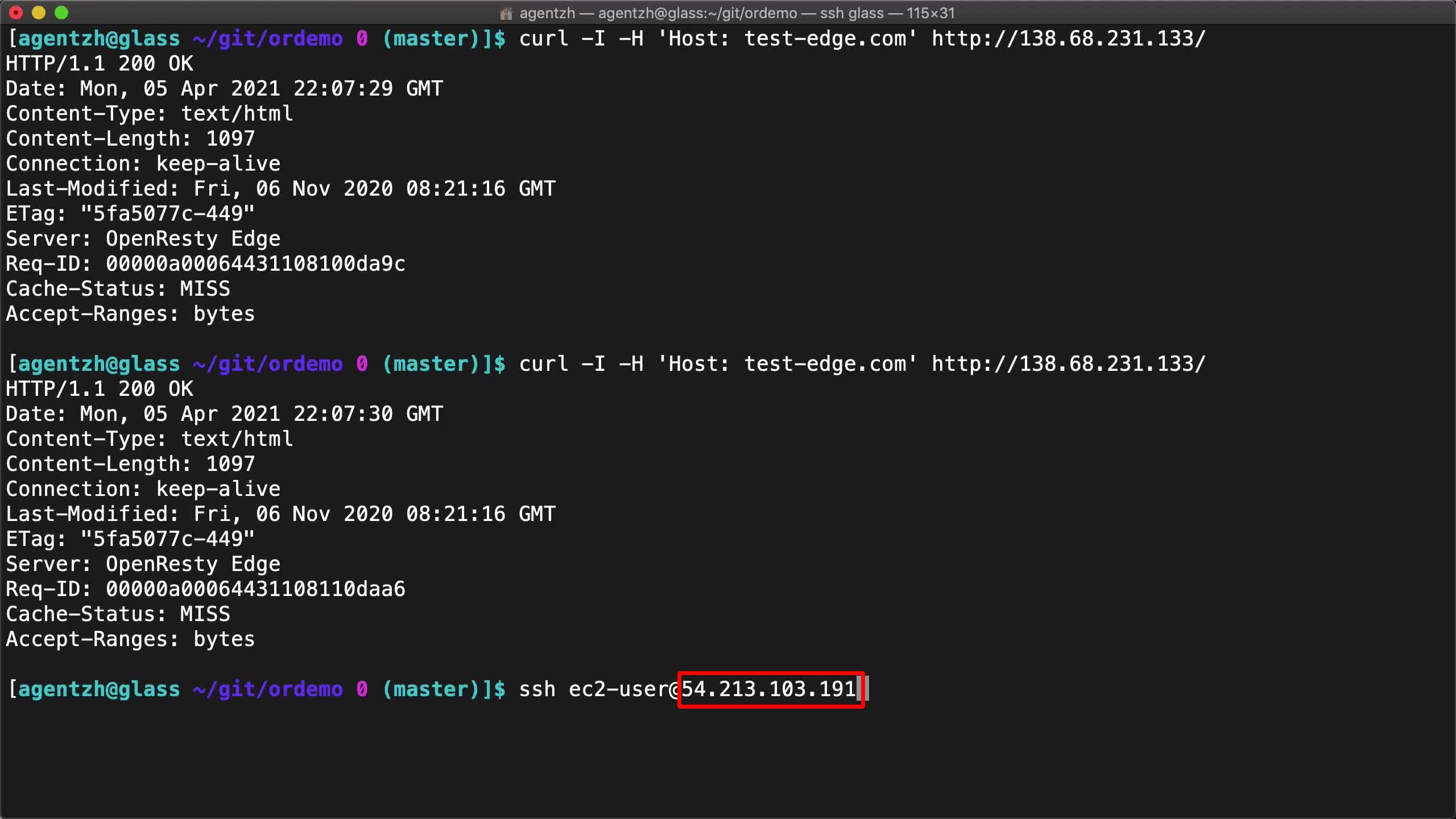

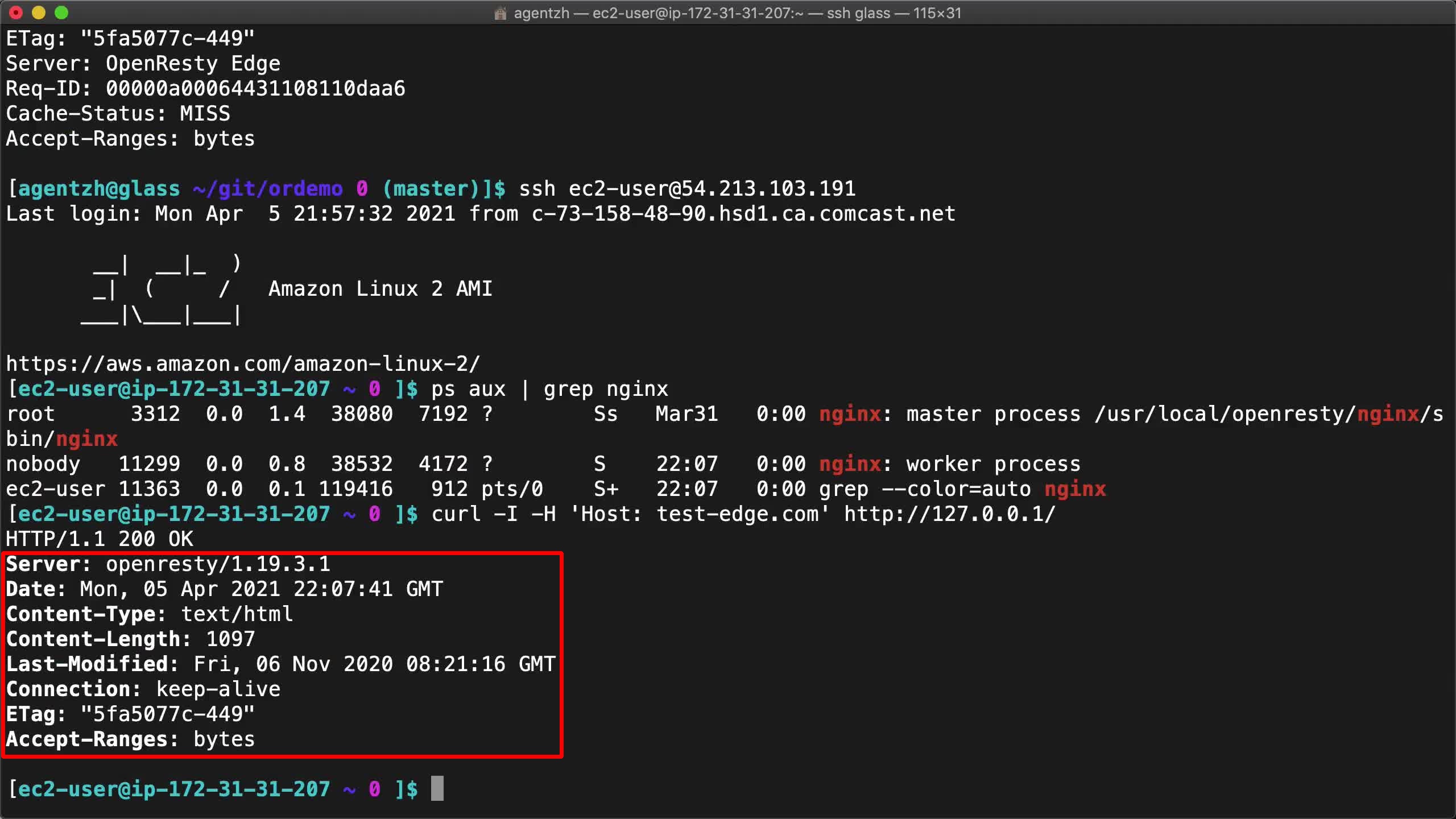

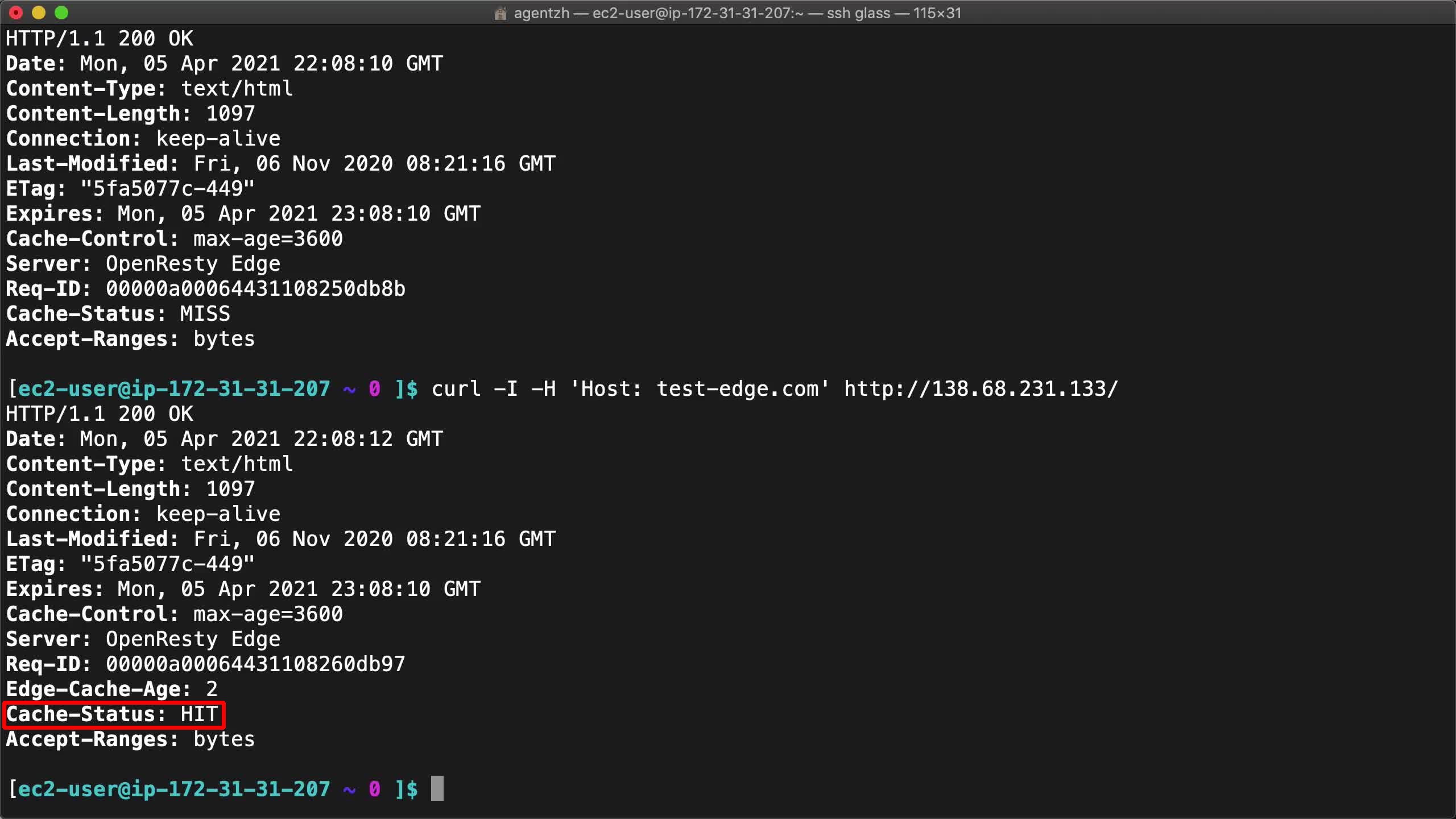

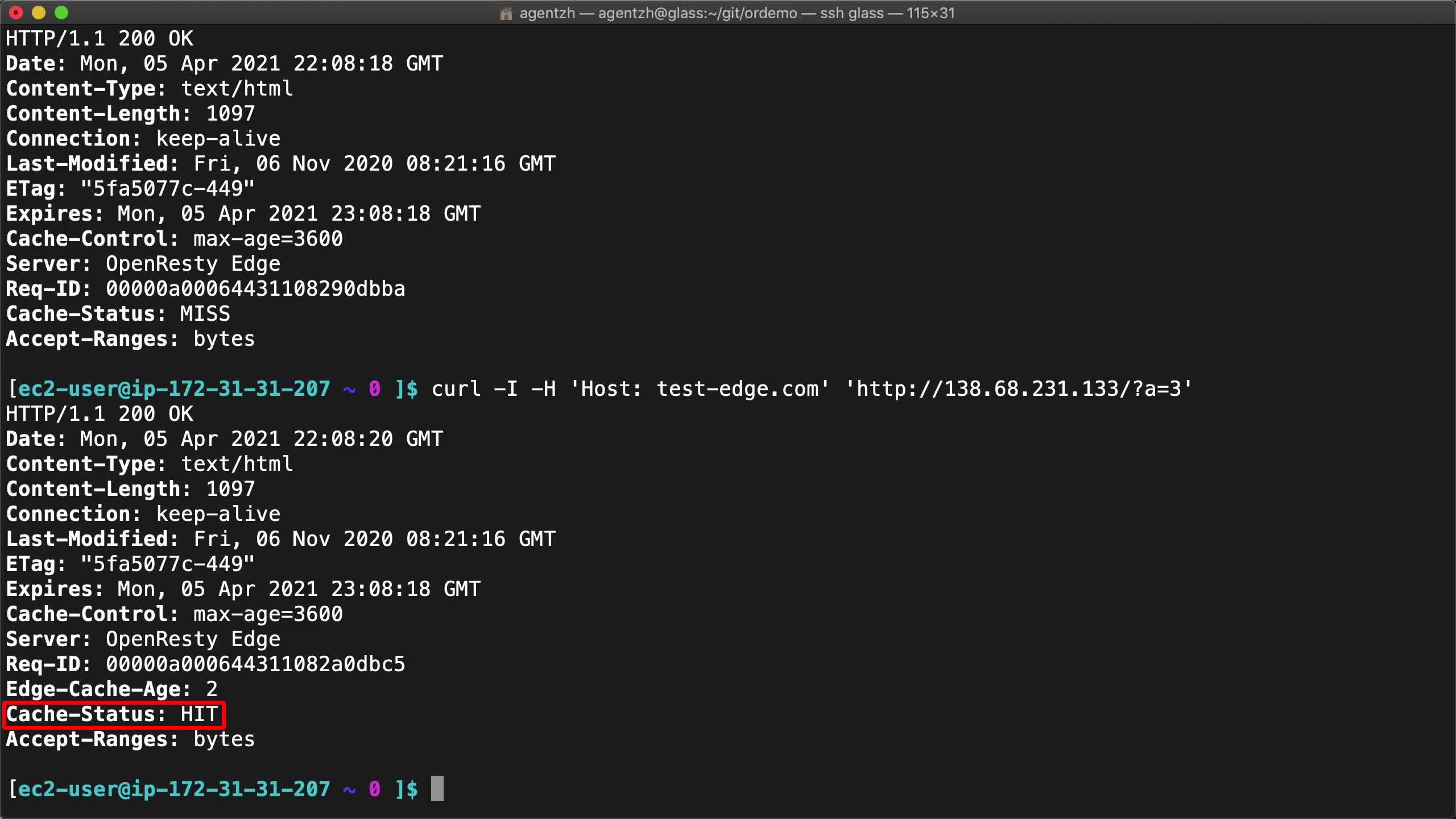

In a terminal, use the curl command-line tool to send an HTTP request to this gateway server.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

Note the Cache-Status: MISS response header that comes back.
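If you want to check this header in a script rather than by eye, a small parser over the text that curl -I prints is enough. This is a minimal sketch; the helper name header_value is hypothetical, and the sample response in the test is illustrative, not captured from the demo.

```python
from typing import Optional


def header_value(raw_head: str, name: str) -> Optional[str]:
    """Return the value of header `name` from `curl -I` output, or None if absent.

    Header names are matched case-insensitively, as HTTP requires.
    """
    wanted = name.lower()
    for line in raw_head.splitlines():
        # Skip the status line (e.g. "HTTP/1.1 200 OK") and blank lines.
        if line.startswith("HTTP/") or ":" not in line:
            continue
        key, _, value = line.partition(":")
        if key.strip().lower() == wanted:
            return value.strip()
    return None
```

You could feed it the output of curl -sI -H 'Host: test-edge.com' http://138.68.231.133/ (for example via subprocess) and compare the result against "MISS" or "HIT".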

Let's try again.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

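We still get the Cache-Status: MISS header, which means the cache is not being used at all. Why? Because the backend server's original response lacks headers such as Expires and Cache-Control, so the gateway has no indication of how long the response may be cached.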
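The idea can be sketched in Python as a check for explicit freshness information in the origin's response headers. This is a simplified reading of general HTTP caching rules (RFC 9111), not OpenResty Edge's actual decision logic, which the article does not describe; the function name is hypothetical.

```python
from typing import Mapping


def has_explicit_freshness(headers: Mapping[str, str]) -> bool:
    """Simplified check: does the origin response say how long it may be cached?

    Assumption: a reduced model of RFC 9111 rules, not OpenResty Edge's logic.
    """
    lowered = {name.lower(): value for name, value in headers.items()}
    directives = [
        d.strip().lower()
        for d in lowered.get("cache-control", "").split(",")
        if d.strip()
    ]
    # These directives forbid storing the response in a shared cache.
    if "no-store" in directives or "private" in directives:
        return False
    # max-age / s-maxage take precedence over Expires when present.
    for directive in directives:
        name, _, value = directive.partition("=")
        if name.strip() in ("max-age", "s-maxage"):
            return value.strip().isdigit() and int(value) > 0
    return "expires" in lowered
```

Under this model, a response carrying only Content-Type returns False, which matches the repeated MISS seen above.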

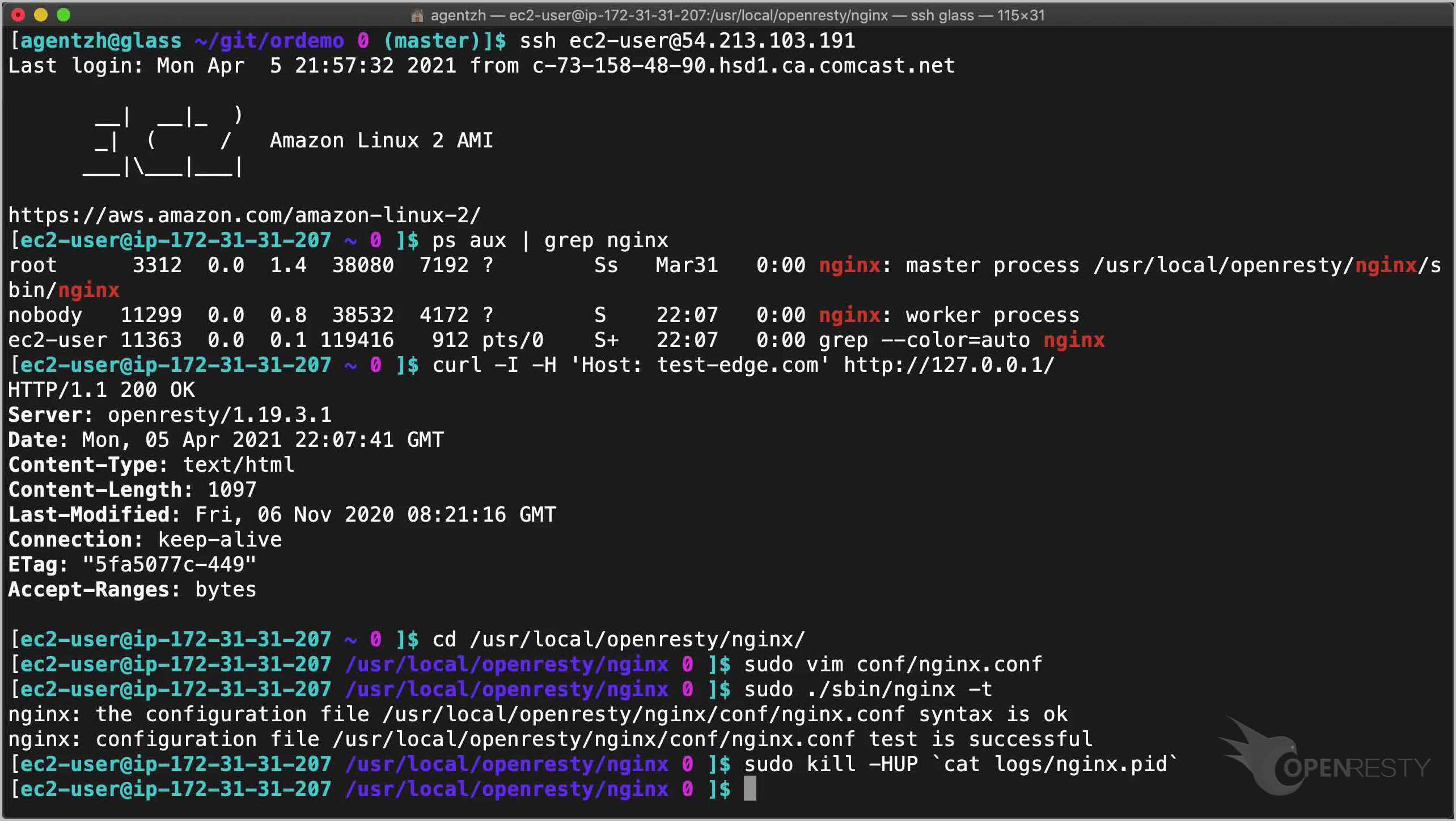

Let's log into the backend server.

ssh ec2-user@54.213.103.191

The backend server's IP address ends with 191.

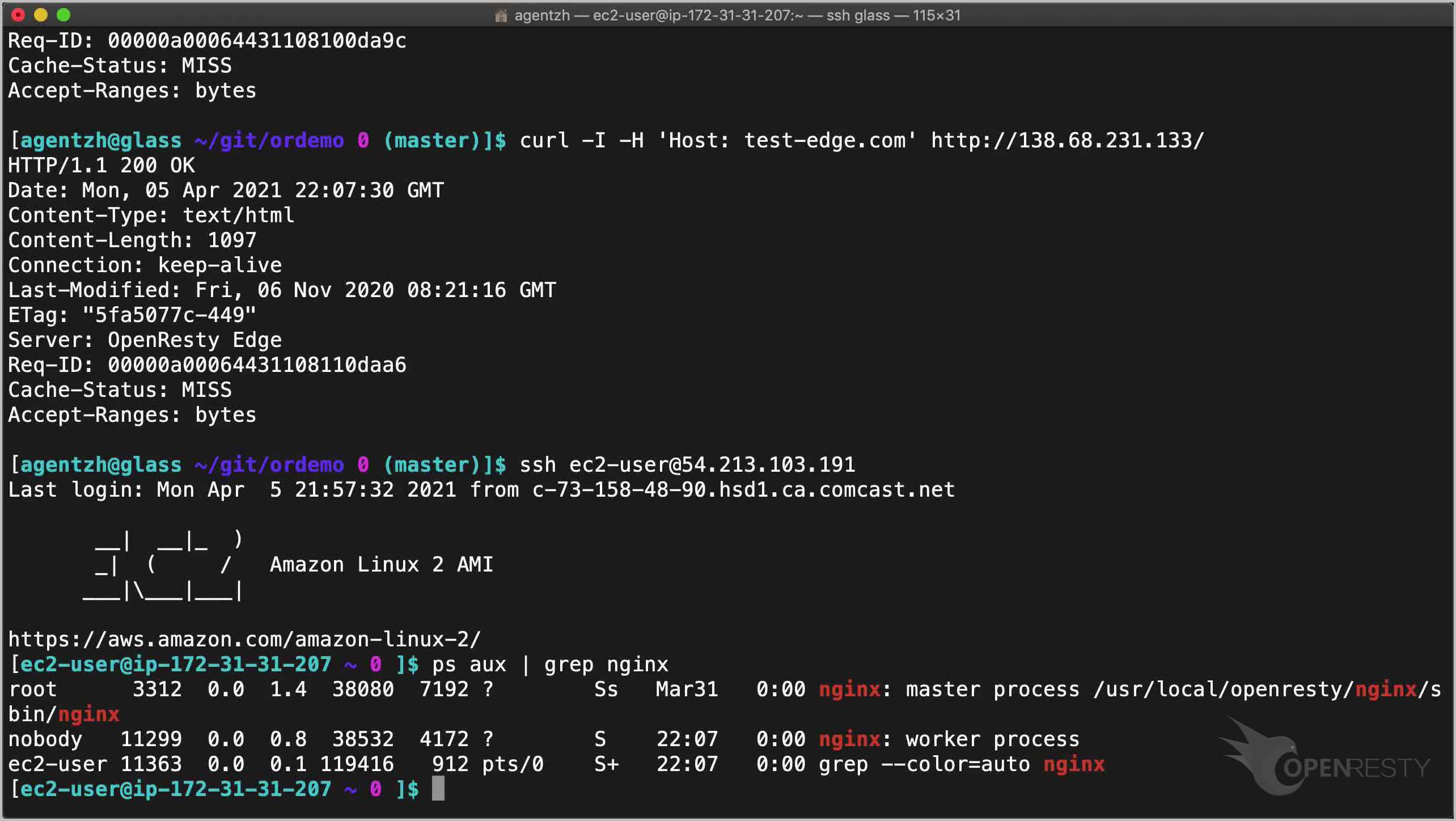

This backend server runs the open-source OpenResty software.

ps aux | grep nginx

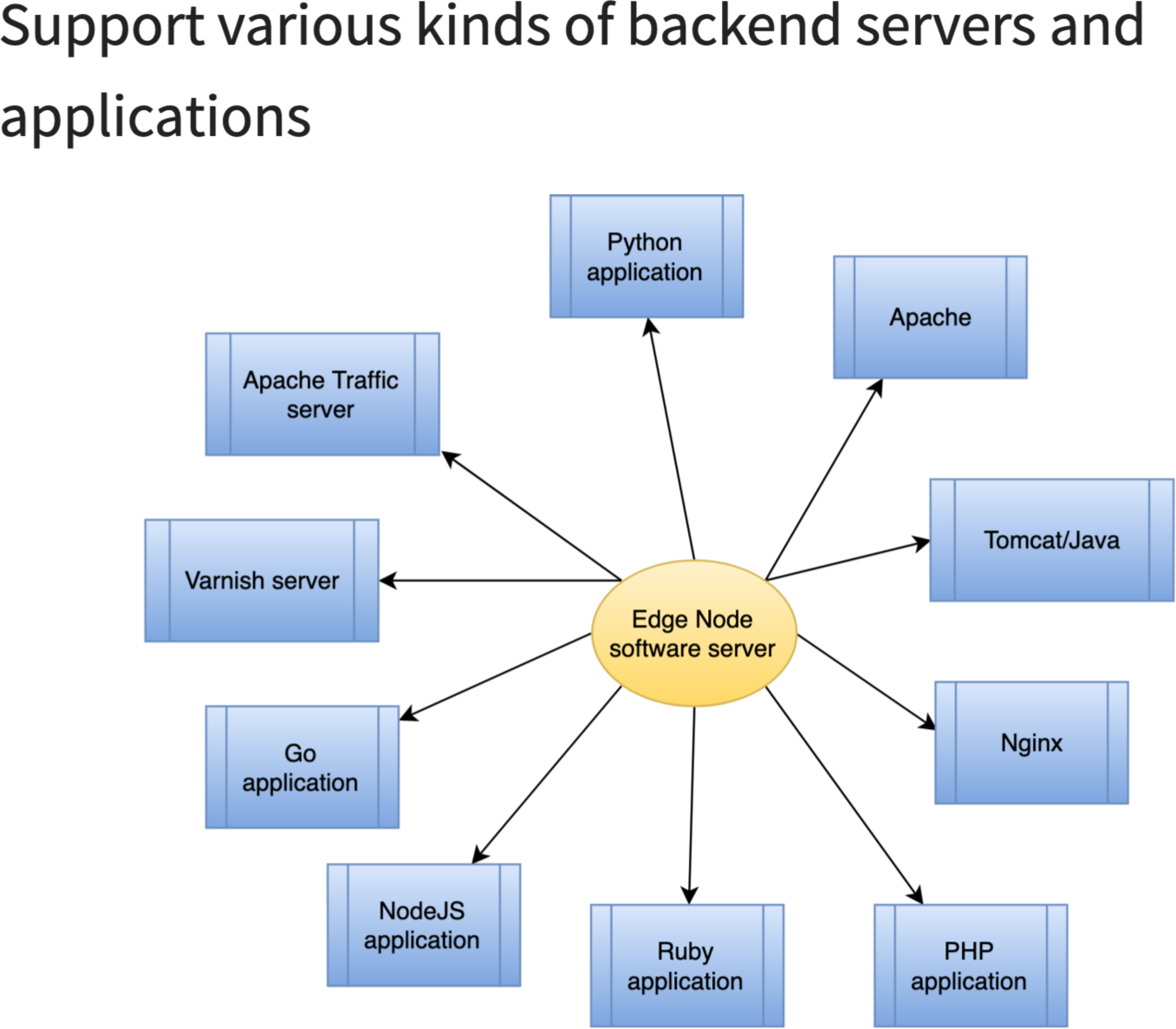

The backend server could also run any other software that speaks HTTP.

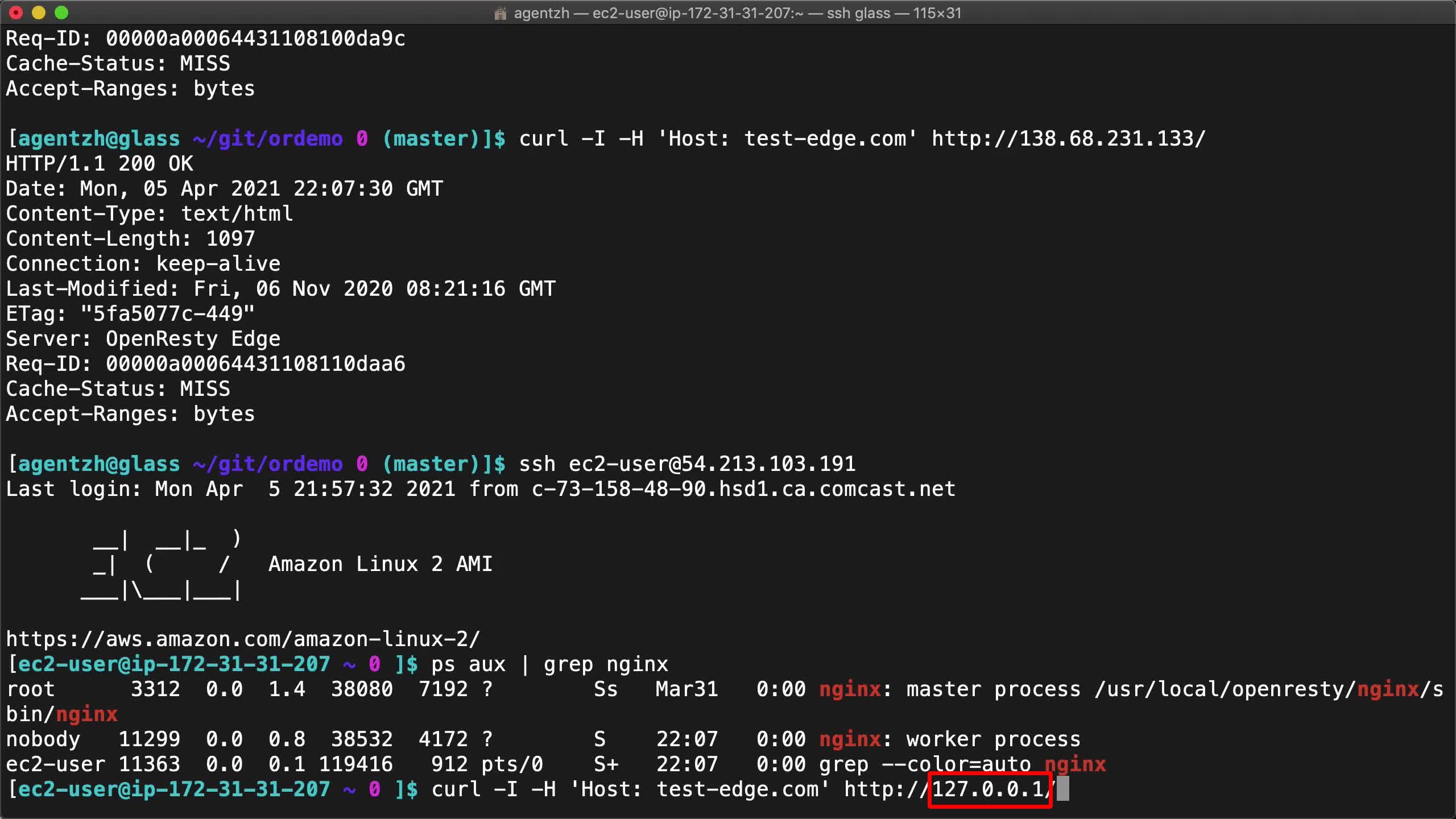

We can send a test request directly to this backend server.

curl -I -H 'Host: test-edge.com' http://127.0.0.1/

Note that we are accessing localhost here.

Indeed, the response provides no Expires or Cache-Control headers.

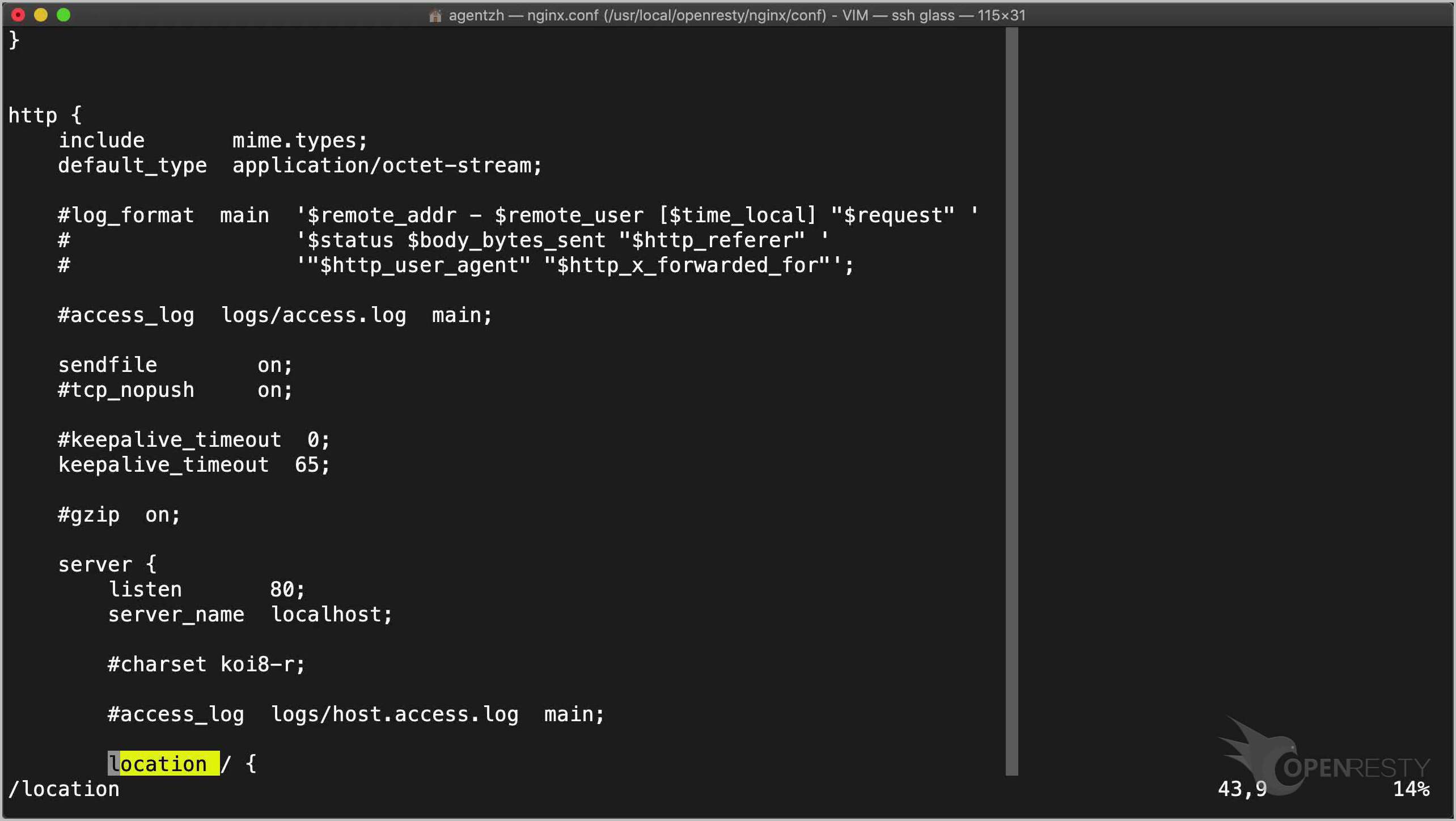

Now let's reconfigure the backend server.

cd /usr/local/openresty/nginx/

Open the nginx configuration file.

sudo vim conf/nginx.conf

Find the root location, location /.

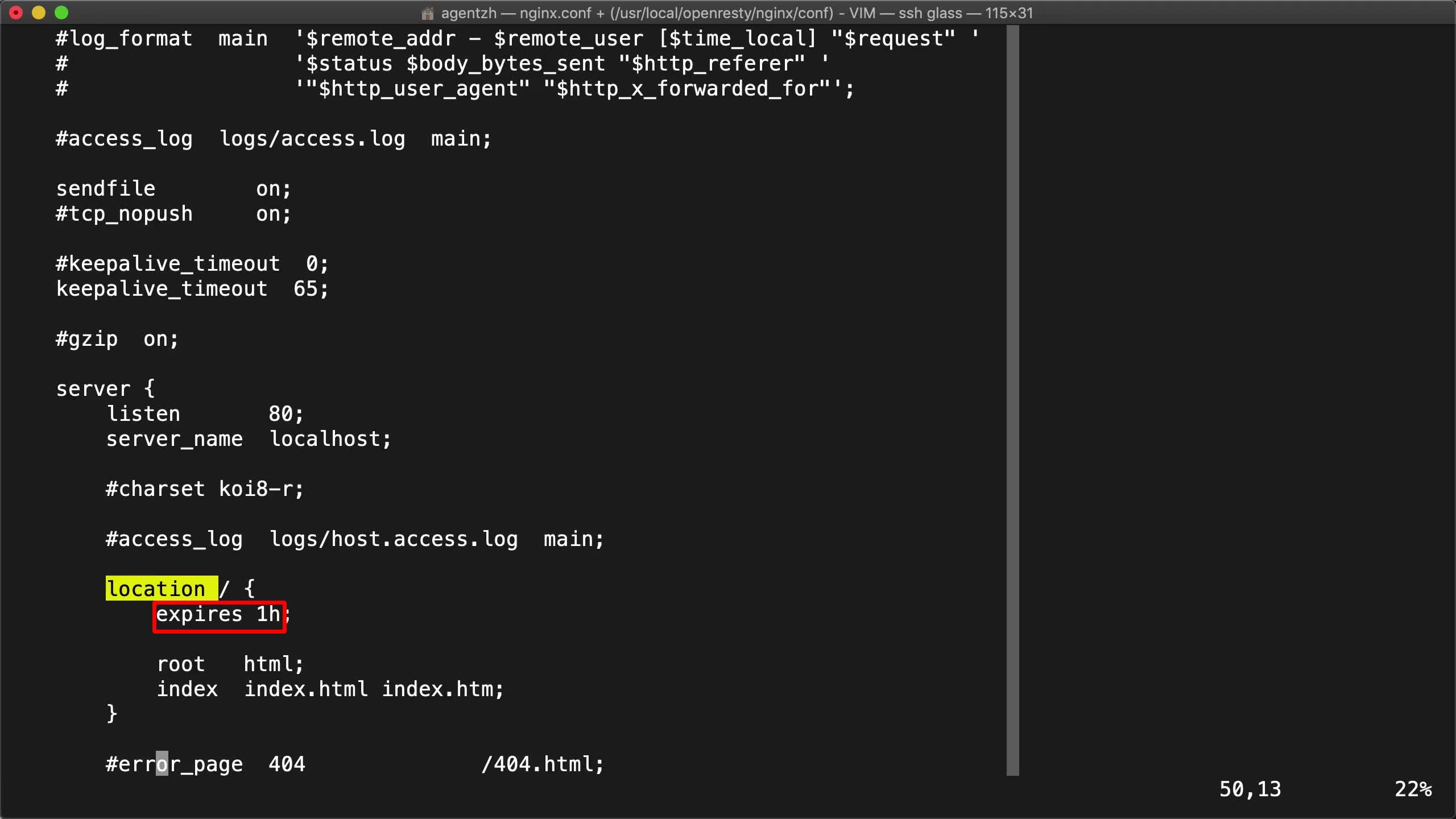

Add a one-hour expiration time.

expires 1h;
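According to the nginx documentation, a positive time in the expires directive sets the Expires header to the current time plus that time and sets Cache-Control to max-age with the same number of seconds. The Python sketch below approximates those two headers for a given "now"; the function name is hypothetical and it does not cover nginx's other expires forms (such as epoch, max, or off).

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime
from typing import Dict


def expires_headers(ttl: timedelta, now: datetime) -> Dict[str, str]:
    """Approximate the headers that nginx's `expires <ttl>;` adds for a positive ttl.

    `now` must be a timezone-aware UTC datetime (required by usegmt=True).
    """
    seconds = int(ttl.total_seconds())
    return {
        # HTTP-date format, e.g. "Mon, 01 Jan 2024 01:00:00 GMT".
        "Expires": format_datetime(now + ttl, usegmt=True),
        "Cache-Control": f"max-age={seconds}",
    }
```

So expires 1h; corresponds to Cache-Control: max-age=3600. When both headers are present, HTTP/1.1 caches give max-age precedence over Expires.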

The backend server can define different expiration times for different locations, or disable caching completely for specific responses.

Save the file and quit.

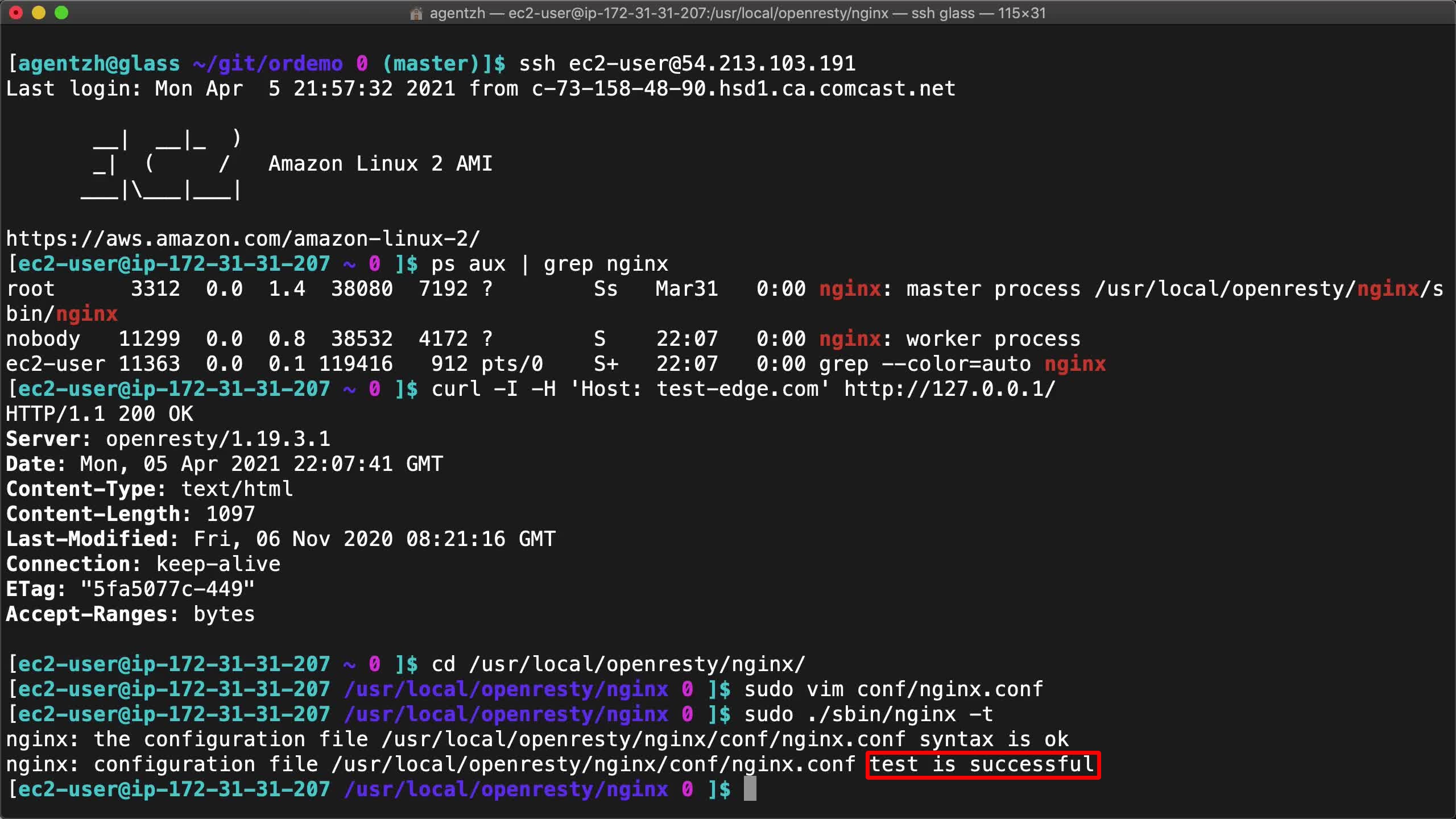

Test whether the nginx configuration file is valid.

sudo ./sbin/nginx -t

No problems.

Reload the backend server process.

sudo kill -HUP `cat logs/nginx.pid`

The configuration is the same for an open-source Nginx server.

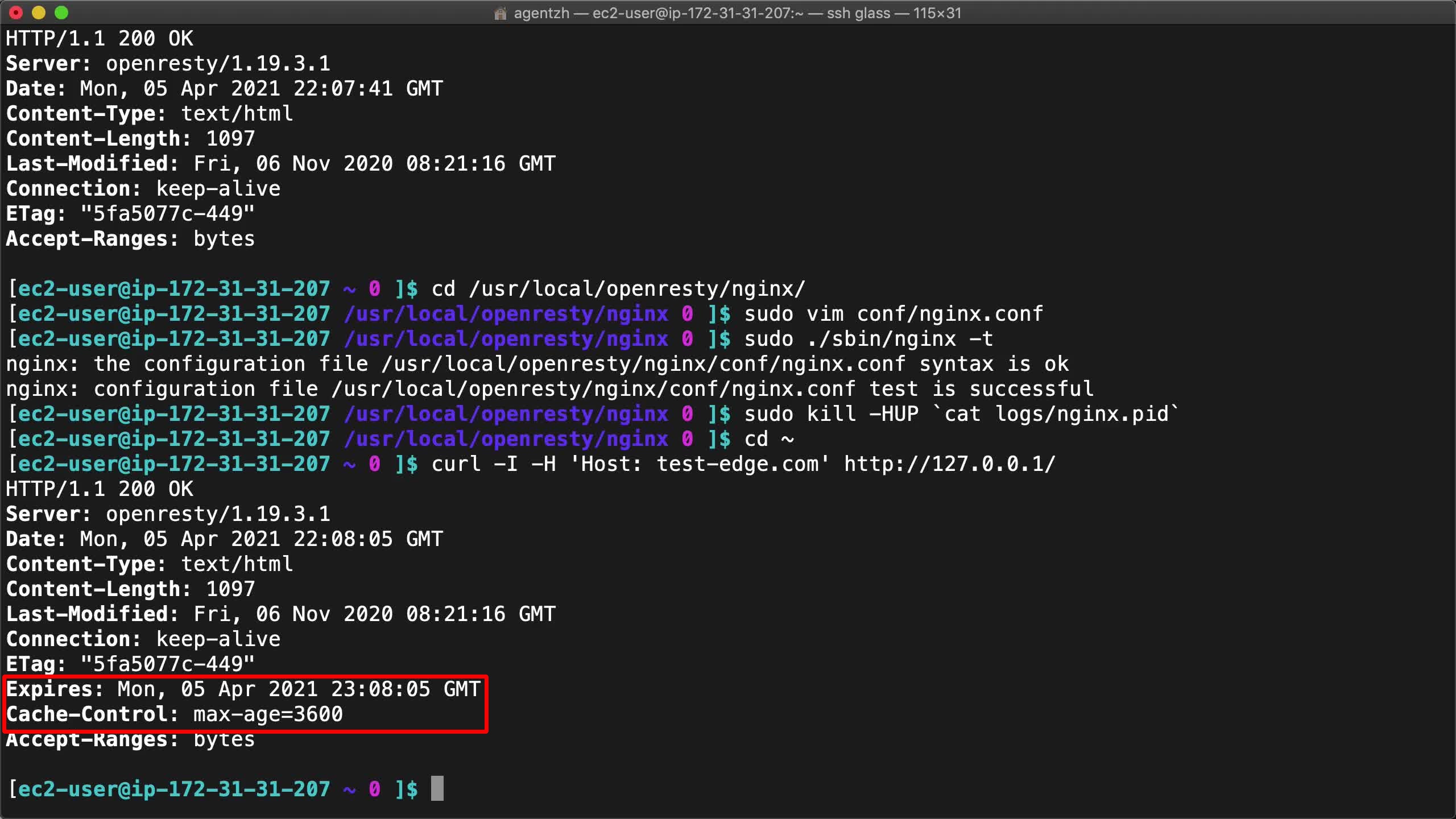

Let's test the backend server.

curl -I -H 'Host: test-edge.com' http://127.0.0.1/

This time the response includes both the Expires and Cache-Control headers.

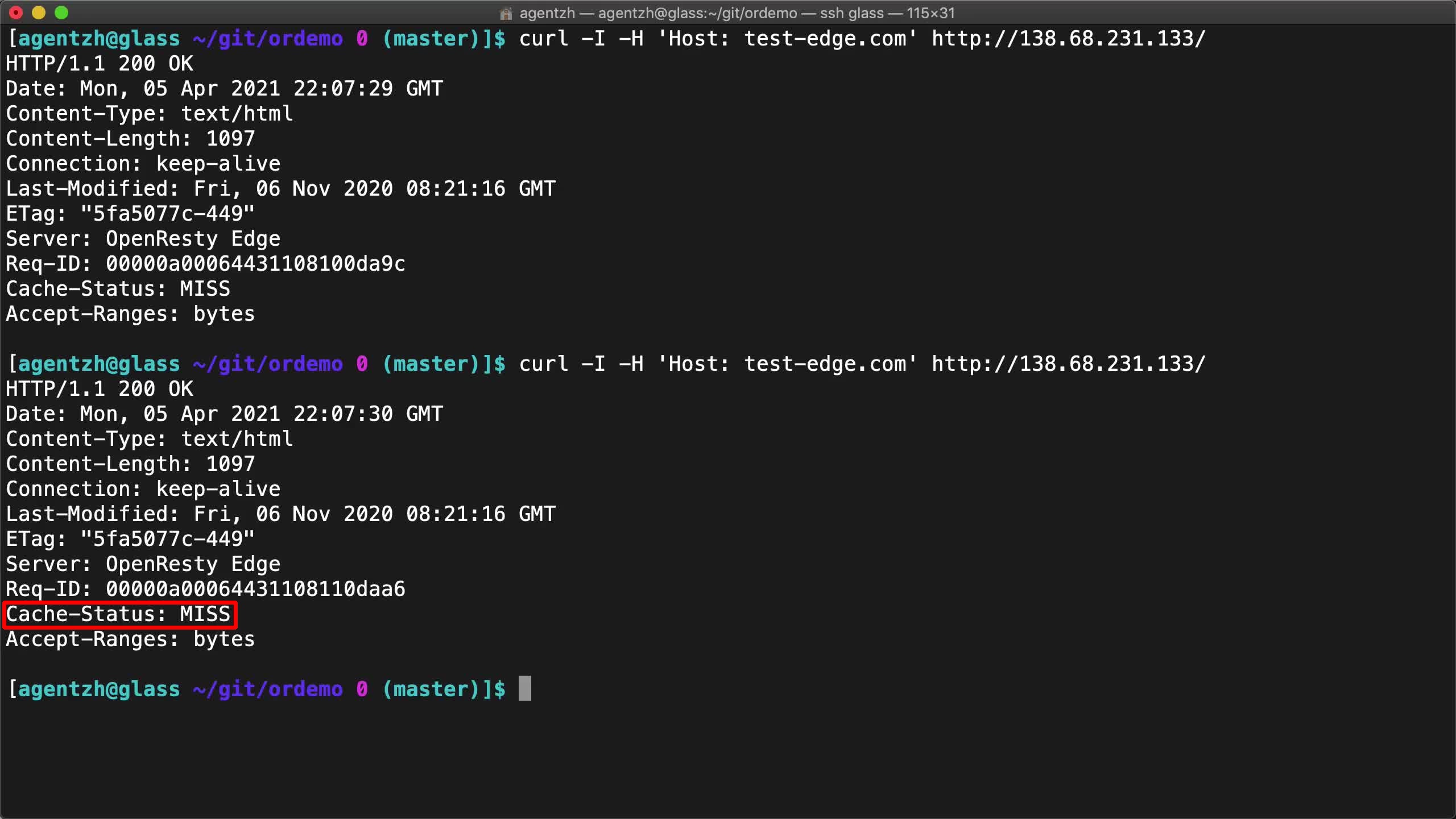

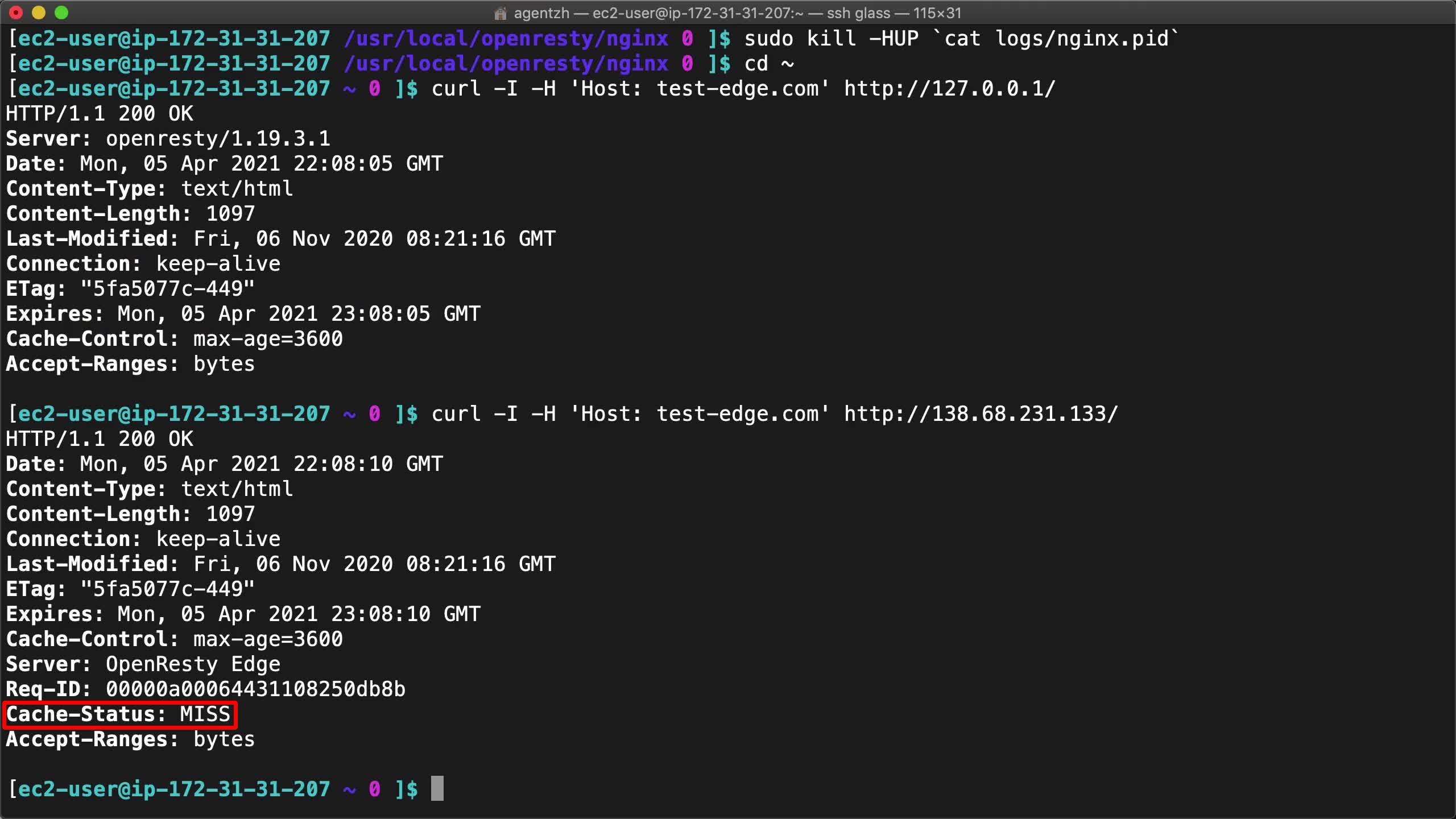

Let's send a request to the gateway server.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

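We still see the Cache-Status: MISS header.

This miss is expected: it is the first request since the backend started sending cacheable responses, so nothing has been stored yet.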

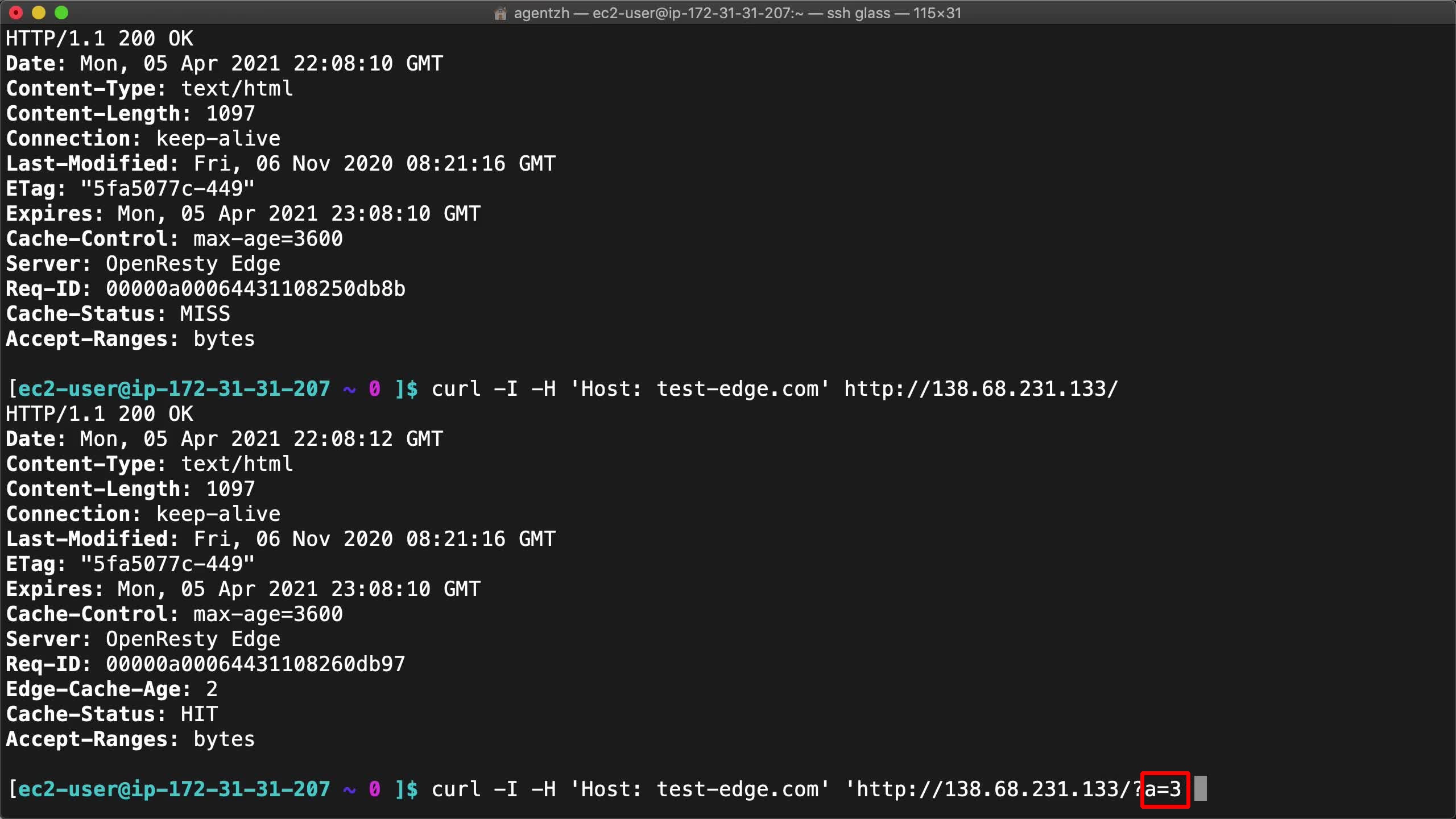

Let's send the request again.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

Great! We finally get the Cache-Status: HIT header!

The request now hits the cache, just as expected.
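The MISS-then-HIT sequence can be modeled with a toy TTL cache. This Python sketch is a hypothetical illustration of the behavior, not how OpenResty Edge is implemented. The fetch callback stands in for the backend and returns (body, ttl_seconds); a ttl of 0 models the earlier origin response without Expires or Cache-Control.

```python
import time
from typing import Callable, Dict, Tuple


class TinyCache:
    """Toy response cache: keeps each body until its TTL expires (hypothetical)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, body)
        self.backend_calls = 0

    def get(self, key: str, fetch: Callable[[], Tuple[str, int]]) -> Tuple[str, str]:
        """Return (cache_status, body), calling the backend only on a miss."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return "HIT", entry[1]
        self.backend_calls += 1
        body, ttl = fetch()
        # Only responses with a positive TTL are stored.
        if ttl > 0:
            self._entries[key] = (now + ttl, body)
        return "MISS", body
```

With a one-hour TTL, the first get for "/" is a MISS and the second is a HIT, with a single backend call. With ttl 0, every request is a MISS and reaches the backend, which is the behavior we saw before reconfiguring the origin.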

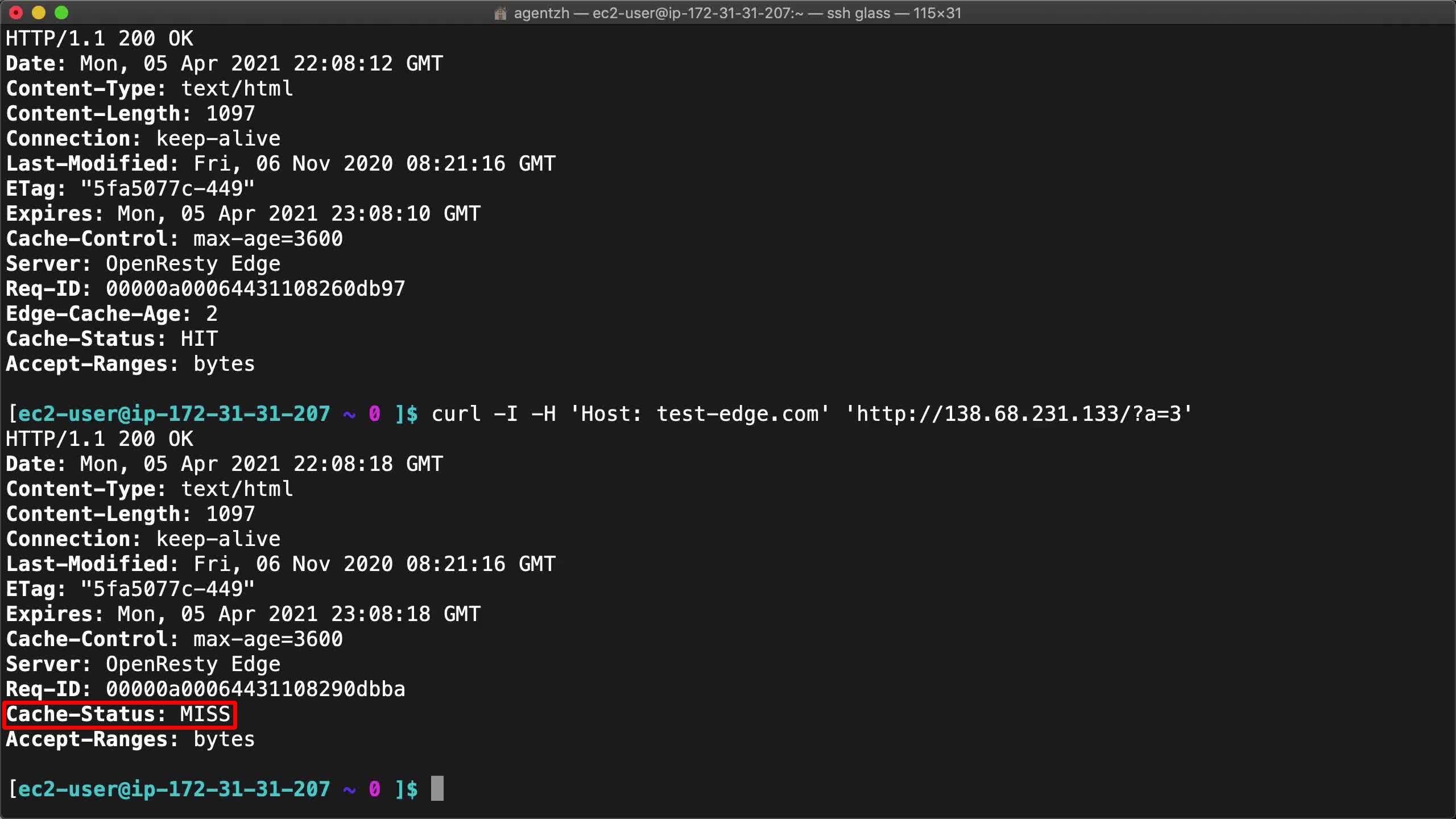

Let's add a query string.

curl -I -H 'Host: test-edge.com' 'http://138.68.231.133/?a=3'

Note the a=3 part.

This time we get a cache miss again.

That's because the default cache key includes the query string.

If we run the same request again, it should hit the cache.

curl -I -H 'Host: test-edge.com' 'http://138.68.231.133/?a=3'

Indeed, it hits the cache. If you don't care about this query string, you can remove it from the cache key.
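To see why the query string matters, the Python sketch below replays the cacheable requests from this section against a toy cache with no expiry. It reports the status each one would get, both with the default key (URI plus query string) and with the query string removed. The replay helper and its key format are hypothetical and model only the key definition, not OpenResty Edge itself.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def replay(targets: Iterable[str], include_query: bool = True) -> List[str]:
    """Return the Cache-Status each request would get with a never-expiring toy cache."""
    cached = set()
    statuses = []
    for target in targets:
        parts = urlsplit(target)
        key = parts.path
        # Default key definition: URI plus query string.
        if include_query and parts.query:
            key += "?" + parts.query
        statuses.append("HIT" if key in cached else "MISS")
        cached.add(key)
    return statuses
```

replay(["/", "/", "/?a=3", "/?a=3"]) reproduces the MISS, HIT, MISS, HIT sequence above. With include_query=False, /?a=3 is served from the entry already cached for /.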

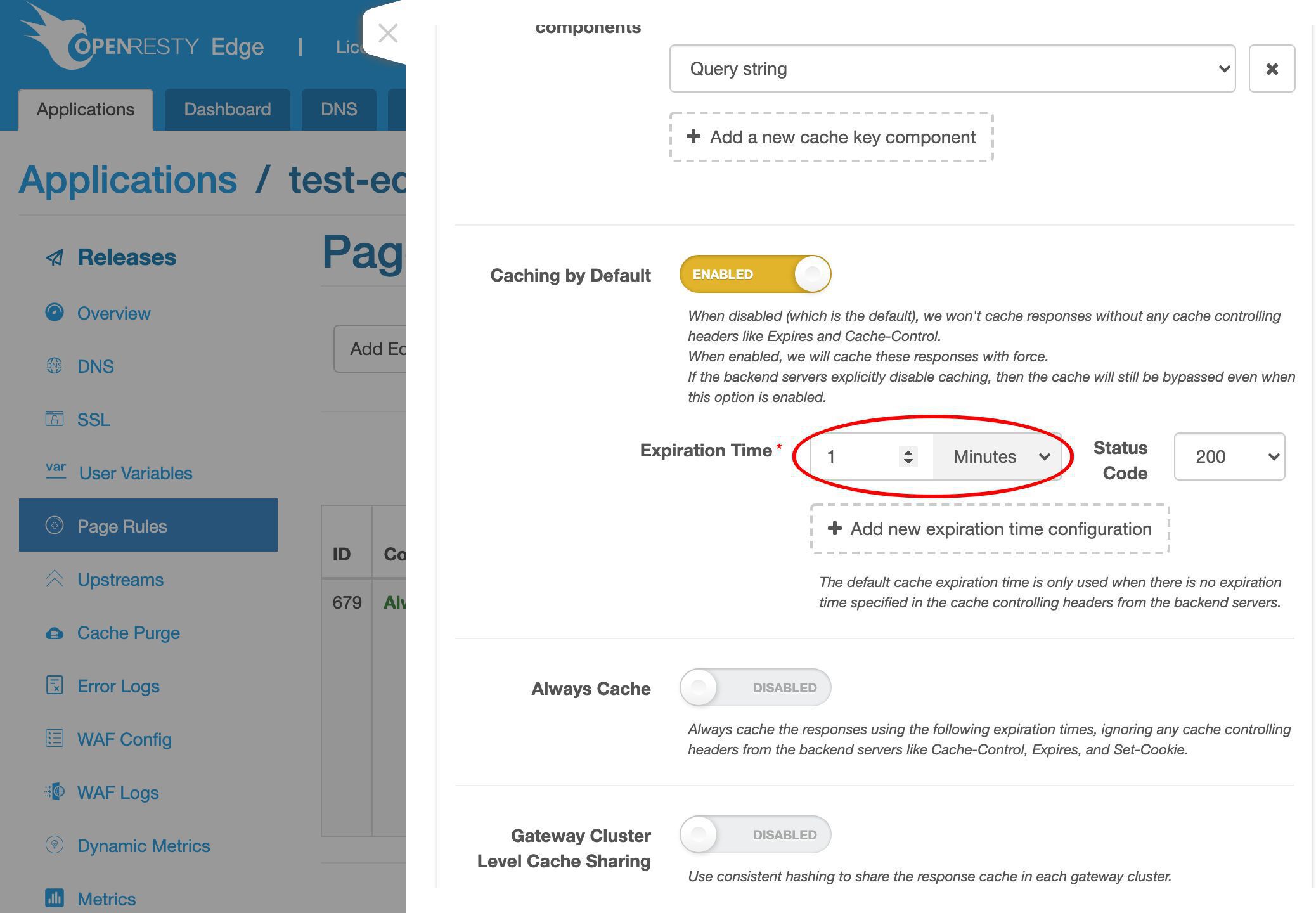

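Sometimes changing the backend server's configuration is inconvenient. In that case, you can force responses to be cached by default, even when the origin response carries no cache-control headers.

We can modify the original page rule to do this.

Enable default forced caching.

You can also set default expiration times for cacheable response status codes.

We will demonstrate this feature in another video.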
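Conceptually, forced caching supplies a fallback lifetime when the origin gives none. The Python sketch below shows one way to model that choice. The status codes and TTL values in DEFAULT_TTLS are made-up examples, and the rule that origin headers take precedence is an assumption consistent with the "default" wording above, not documented Edge behavior.

```python
from typing import Mapping, Optional

# Hypothetical per-status default TTLs in seconds; in OpenResty Edge these are
# configured in the page rule UI, and the actual values are up to you.
DEFAULT_TTLS = {200: 3600, 301: 3600, 404: 60}


def effective_ttl(
    status: int,
    origin_max_age: Optional[int],
    force_cache: bool,
    default_ttls: Mapping[int, int] = DEFAULT_TTLS,
) -> int:
    """Pick a cache lifetime in seconds; 0 means the response is not cached."""
    if origin_max_age is not None:
        # Assumption: explicit origin cache headers take precedence.
        return origin_max_age
    if force_cache:
        # Fall back to the configured default for this status code, if any.
        return default_ttls.get(status, 0)
    return 0
```

For example, effective_ttl(200, None, True) returns 3600 under these made-up defaults, while a 500 response with no origin headers is still not cached.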

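About OpenResty Edge

OpenResty Edge is a multi-purpose gateway software designed for microservices and distributed traffic architectures, developed in-house by our company. It combines traffic management, private CDN construction, API gateway, and security protection features, making it easy to build, manage, and protect modern applications. OpenResty Edge offers industry-leading performance and scalability and can meet the demanding requirements of high-concurrency, high-load scenarios. It supports scheduling traffic for containerized applications such as K8s and can manage a very large number of domain names, so it readily meets the needs of large websites and complex applications.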

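About the Author

Yichun Zhang (章亦春) is the creator of the open-source OpenResty® project and the CEO and founder of OpenResty Inc.

Yichun Zhang (GitHub ID: agentzh) was born in Jiangsu, China, and now lives in the San Francisco Bay Area in the United States. He is one of the early advocates and leaders of open-source technology and culture in China and has worked for internationally renowned tech companies such as Cloudflare, Yahoo!, and Alibaba. He is a pioneer of "edge computing", "dynamic tracing", and "machine coding", with over 22 years of programming experience and over 16 years of open-source experience. As the leader of an open-source project used by over 40 million domain names worldwide, he founded the high-tech company OpenResty Inc. in the heart of Silicon Valley, building on the OpenResty® open-source project. The company's flagship products are OpenResty XRay (a non-invasive fault analysis and troubleshooting tool powered by dynamic tracing technology) and OpenResty Edge (a multi-purpose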

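Translations

Both the original English version and the Japanese translation (this article) are available. Readers are welcome to contribute translations into other languages; as long as a translation is complete with no omissions, we will consider adopting it. Thank you very much!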